erek ([H]F Junkie · Joined Dec 19, 2005 · Messages: 10,875)

Sarcasm? Though honestly, that HL1 path tracing does look great enough to support an authentic interest. I love this.

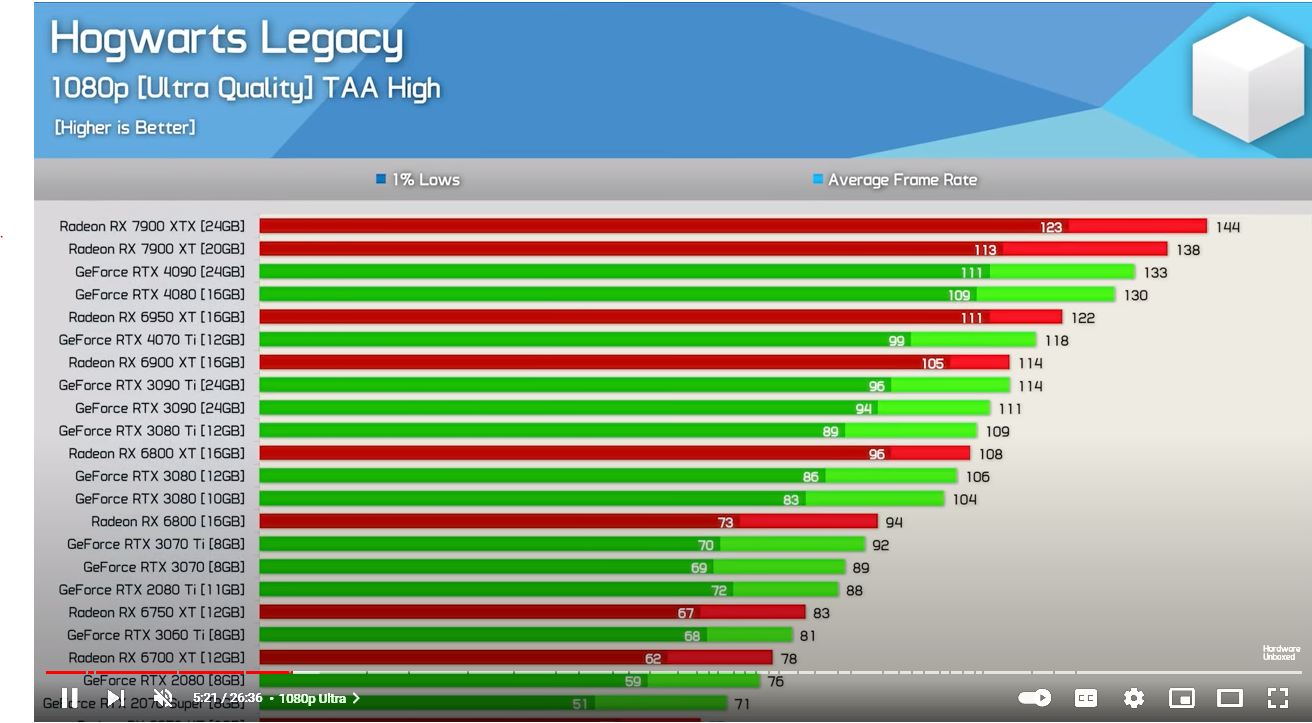

Ray tracing doesn't make a difference.

vs.

Ray tracing looks so much better than rasterization that they must be nerfing rasterization to give RT a boost.

You're right. We need to ditch rasterization completely. The sooner, the better.
