imsirovic5

Limp Gawd

- Joined: Jun 21, 2011
- Messages: 341

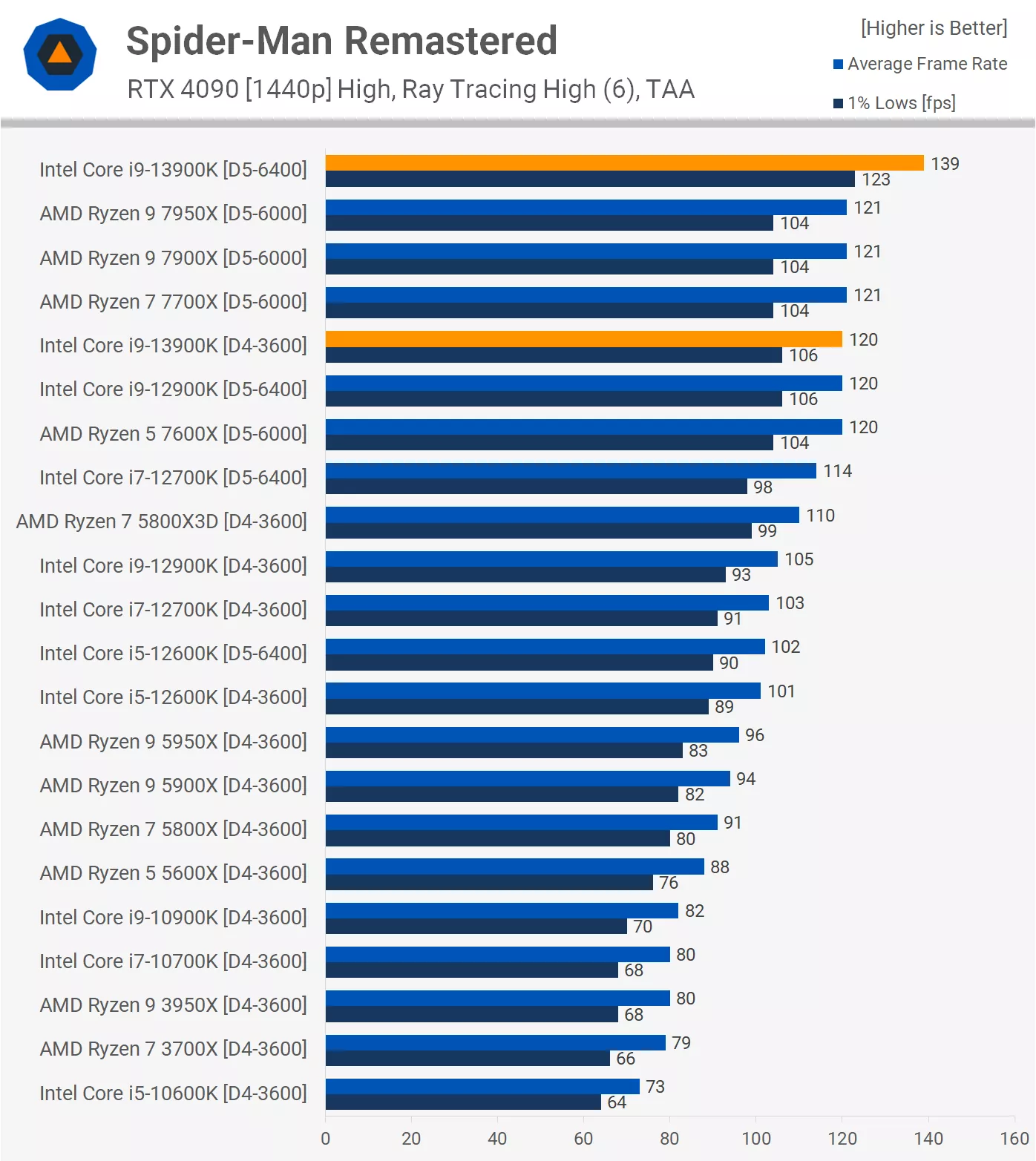

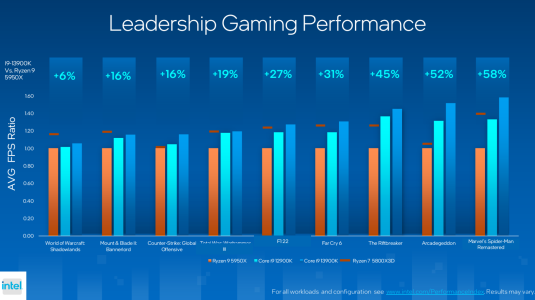

Interesting TechPowerUp benchmarks: RTX 4090 across 53 games, Ryzen 7 5800X vs Core i9-12900K. Quite a gap between the two CPUs in some of the games, even at 4K. Not surprising given the sheer power of the GPU, but still interesting.

https://www.techpowerup.com/review/rtx-4090-53-games-ryzen-7-5800x-vs-core-i9-12900k/

