Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

I have a feeling these coolers became necessary once they got an idea of where RDNA2 was going to land. Their wattages scream of a process being pushed to the limit, and I have a feeling these will not overclock well.

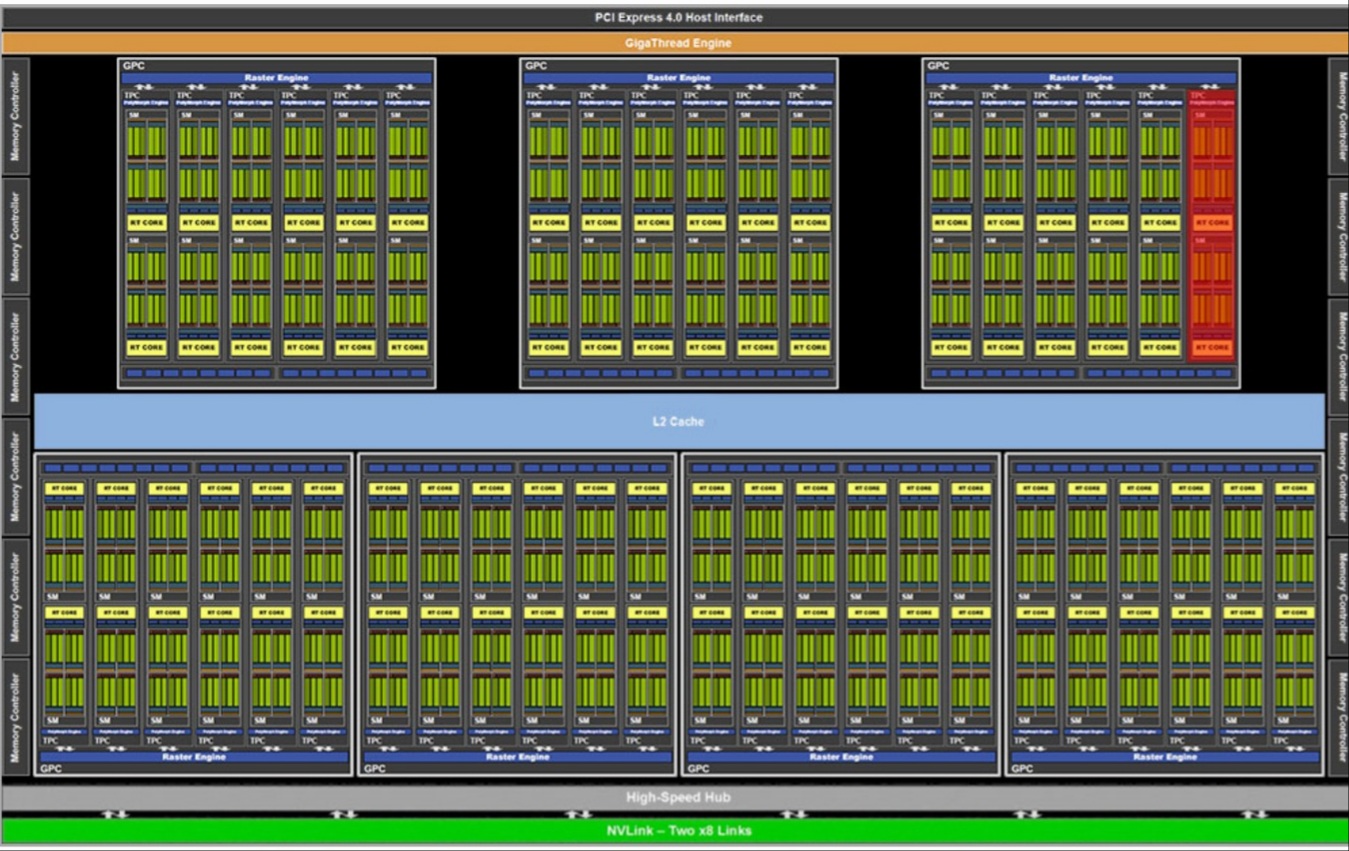

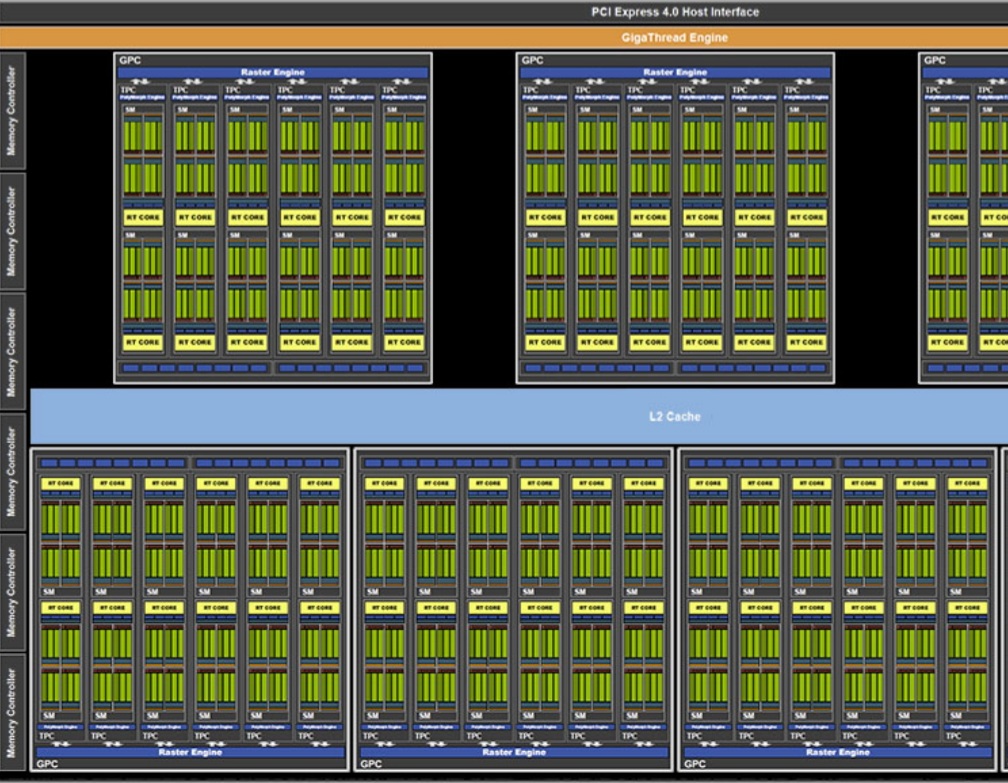

I think this probably started when they realized they were going to have more than 2x the CUDA cores of a 2080 Ti and needed a silent way to cool 400W even in crappy cases.

Based on the history of overclocking for CPUs and GPUs, I don’t think we’ll see a lot left on the table anymore. The only chance is if they undervolt/underclock the 3090 to keep the power at 400W.

I BIOS-modded and hard-modded my 2080 Ti, and it topped out around 2140 MHz and 400W under water. That’s less than a 10% OC.
