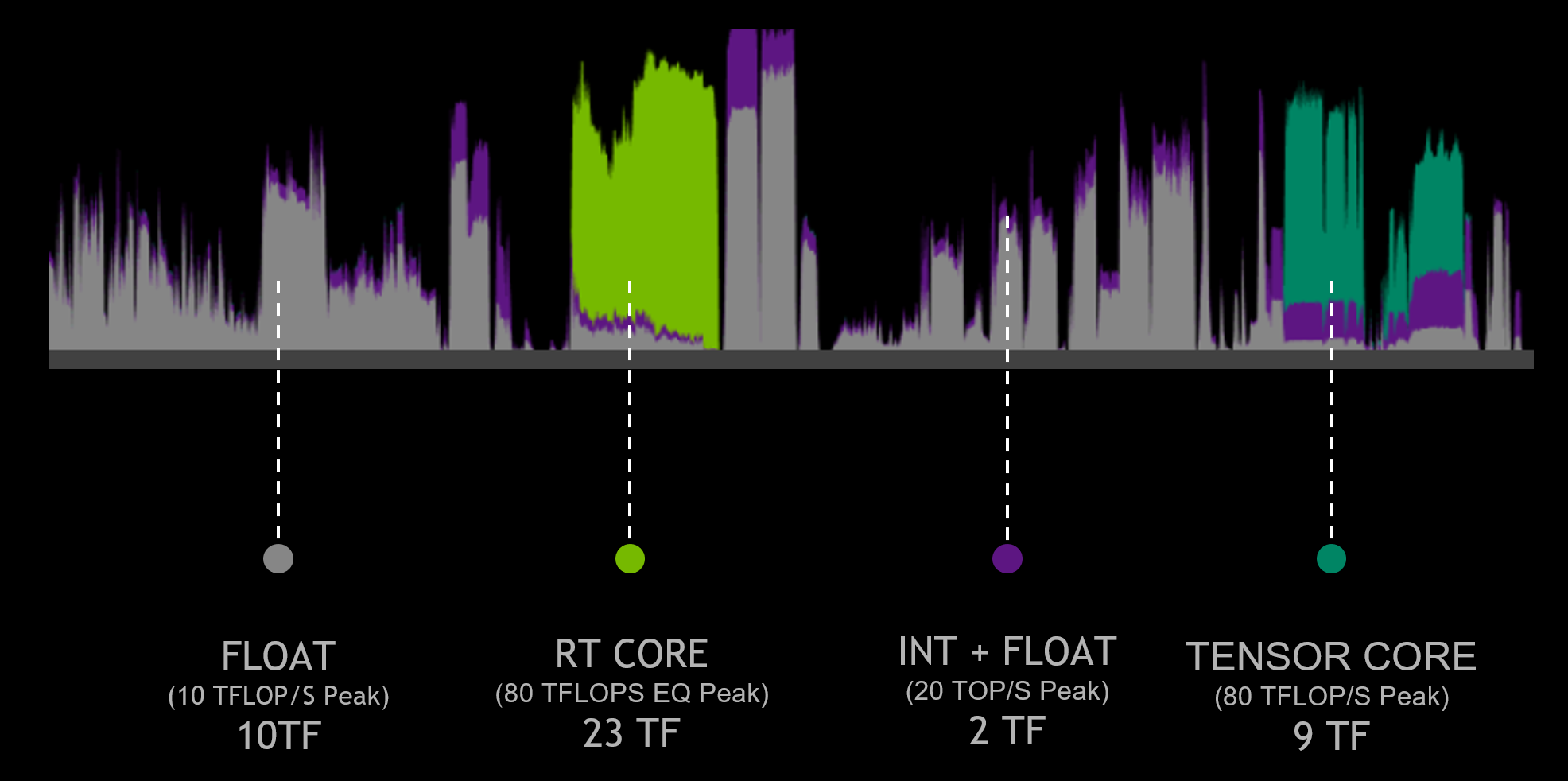

The anatomy of a typical RT effect is one part intersection testing (RT cores, the actual tracing of rays), one part shading those results (traditional cores), and one part denoising (contrary to Nvidia's arguments, usually traditional cores). More of that RT effect's frame time was spent dealing with the output of the RT cores than actually in the RT cores.

It also highlights why spending precious die space mostly on boosting RT HW has poor payback. A balanced approach makes much more sense, given how much more the traditional HW affects frame rates. You want enough RT HW that it isn't the bottleneck. That has already been accomplished, to the point that the traditional cores are more of the bottleneck now.
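To put rough numbers on that payback argument, here is a minimal Amdahl's-law style sketch. The equal three-way split of the effect's frame time and the speedup factors are assumptions picked purely for illustration, not measurements, and `effect_speedup` is a made-up helper name.

```python
def effect_speedup(fractions, speedups):
    """Overall speedup of an RT effect when some stages get faster.

    fractions: stage name -> share of the effect's original frame time
    speedups:  stage name -> speedup factor (stages not listed stay at 1.0)
    """
    old_time = sum(fractions.values())
    new_time = sum(share / speedups.get(stage, 1.0)
                   for stage, share in fractions.items())
    return old_time / new_time


# ASSUMPTION: equal thirds, chosen only to mirror the "one part each" description.
fractions = {"intersection": 1 / 3, "shading": 1 / 3, "denoising": 1 / 3}

# Hypothetical design A: all extra die space goes to RT cores (2x faster tracing).
rt_only = effect_speedup(fractions, {"intersection": 2.0})

# Hypothetical design B: every stage gets a more modest 1.5x.
balanced = effect_speedup(
    fractions, {"intersection": 1.5, "shading": 1.5, "denoising": 1.5}
)

print(f"RT cores only, 2x: {rt_only:.2f}x overall")
print(f"Balanced, 1.5x:    {balanced:.2f}x overall")
```

Under those assumed numbers, doubling only the RT cores makes the effect about 1.2x faster, while a 1.5x bump across the board gives 1.5x. Even infinitely fast RT cores would cap out at 1.5x with this split, because the shading and denoising time is untouched.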

For Ampere, or whatever the next-gen GPUs are called, maybe Nvidia has finally moved the denoising part of the pipeline over to the Tensor cores.

RT cores only take up a tiny amount of die space.

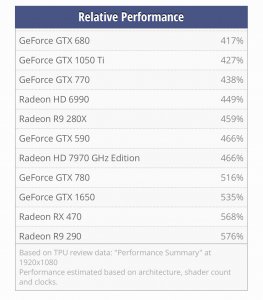

Surely having the 3060 match the 2080 Ti in ray-traced performance is a realistic target? Especially when you consider how small a jump Turing was over Pascal in normal rendering. Unlike others in this thread, I think Nvidia are going to go big on ray-traced performance this generation. I think they are going to make sure that owners of Pascal and older cards will want to make the switch. And I bet they are going to make sure there is enough RT performance to entice Turing owners to upgrade as well.
