Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,822

Hey everyone,

I've been searching eBay and have noticed that Intel 100Gbit QSFP28 adapters are starting to become affordable, so I'm toying with the idea of upgrading.

From googling, it looks as if QSFP28 is just four SFP28 lanes, and SFP28 is backwards compatible with SFP+. So if I use a QSFP28 to 4x SFP28 breakout cable, I should be able to run 4x 10Gbit links until I upgrade my switch hardware.

Initially I wouldn't be any better off than with a 40Gbit QSFP+ adapter, but that seems like a tech that is going the way of the dodo, so I'd rather not invest in it and instead jump straight to QSFP28.
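To put rough numbers on that, here is a quick back-of-the-envelope sketch. It assumes nominal line rates only (10 Gbit/s per SFP+ lane, 25 Gbit/s per SFP28 lane, four lanes per QSFP port) and that each QSFP28 lane really can fall back to SFP+ speed, which is the part I'm asking about. The names are made up for illustration.

```python
# Nominal per-lane line rates in Gbit/s (assumed from the standards' names, not measured).
LANE_RATE_GBIT = {"SFP+": 10, "SFP28": 25}
LANES_PER_QUAD_PORT = 4  # QSFP+ and QSFP28 both carry four lanes


def aggregate_gbit(lane_type: str, lanes: int = LANES_PER_QUAD_PORT) -> int:
    """Return the nominal aggregate rate for `lanes` lanes of `lane_type`."""
    return LANE_RATE_GBIT[lane_type] * lanes


qsfp28_native = aggregate_gbit("SFP28")       # four lanes at 25 Gbit/s each
qsfp28_breakout_10g = aggregate_gbit("SFP+")  # same four lanes at SFP+ speed (assumption)
qsfp_plus = aggregate_gbit("SFP+")            # a 40Gbit QSFP+ adapter

print(qsfp28_native, qsfp28_breakout_10g, qsfp_plus)
```

So on paper the breakout into a 10Gbit switch matches a QSFP+ card today, with room to grow once the switch side moves to 25Gbit ports.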

Is my understanding accurate?

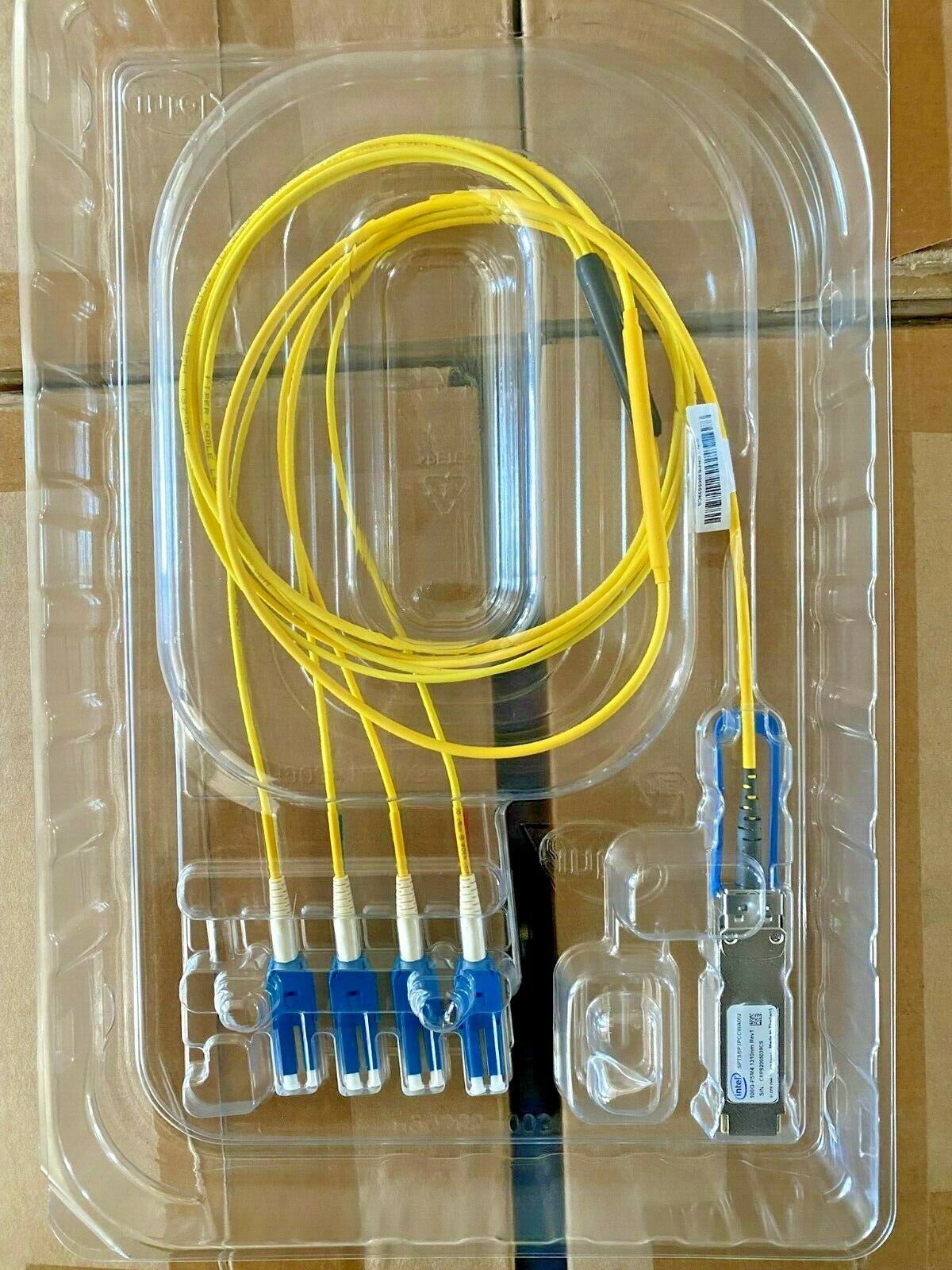

My second question: is it possible to get 4x fiber outputs from a single QSFP28 port?

The four-way breakout cables I have seen are all DACs. Is there any way to split the QSFP28 port on the back of a server into, let's say, two DAC cables that use LAG to connect to two 10Gbit SFP+ ports on my switch, and use the other two SFP28 lanes for either SFP28 or SFP+ SR transceivers, running two fiber links from there to remote devices?
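Written out as a lane plan, the split I have in mind looks roughly like the sketch below. This is purely hypothetical: the `Lane` type and the role labels are my own, the fiber lanes are shown at 10 Gbit/s as an assumption (they could be 25 Gbit/s with SFP28 optics), and whether any adapter or breakout hardware allows mixing media per lane is exactly what I don't know.

```python
from dataclasses import dataclass


@dataclass
class Lane:
    index: int       # lane number within the QSFP28 port (0-3)
    medium: str      # "DAC" or "SR fiber"
    rate_gbit: int   # nominal rate assumed for this lane
    role: str        # what the lane would connect to


# Hypothetical plan: two lanes bonded to the switch, two lanes out over fiber.
plan = [
    Lane(0, "DAC", 10, "LAG to switch"),
    Lane(1, "DAC", 10, "LAG to switch"),
    Lane(2, "SR fiber", 10, "remote device A"),
    Lane(3, "SR fiber", 10, "remote device B"),
]


def lag_capacity_gbit(lanes: list[Lane]) -> int:
    """Sum the nominal rate of the lanes that are members of the LAG."""
    return sum(lane.rate_gbit for lane in lanes if lane.role == "LAG to switch")


print(lag_capacity_gbit(plan))
```

The LAG figure is an aggregate across flows; a single stream would still be limited to one member link's rate.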

Appreciate any input and thoughts on this.

