I simply disabled G-Sync in the NVCP and tested Cyberpunk with FG at 120Hz, locked with V-Sync. It was very bad.

Yeah, all my displays at this point are G-Sync certified. I've never used it on a display that isn't, so I can't say whether that would make it noticeably worse. I assume it would, but I'm not going to go about testing that.
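A simplified timing model helps explain why a fixed 120Hz V-Sync setup can feel much worse than G-Sync. With V-Sync on a fixed-refresh display, a finished frame is held until the next refresh, which comes every 1000/120 ≈ 8.33 ms. A frame that runs even slightly long is therefore shown for two refresh intervals. With variable refresh (VRR, which is what G-Sync provides), the display refreshes as soon as the frame is ready, within its supported range. This is an illustrative sketch only. The frame times are made up and the helper names are hypothetical. The model assumes plain double buffering and ignores Frame Generation's internals, render queues, and triple buffering.

```python
import math

def vsync_present_times(frame_times_ms, refresh_hz):
    """Times (ms) at which each frame reaches the screen on a fixed-refresh display with V-Sync.

    Simplified double-buffering model (an assumption): the next frame starts
    rendering when the previous one is presented, and a finished frame waits
    for the next refresh boundary.
    """
    interval = 1000.0 / refresh_hz
    presents = []
    start = 0.0
    for ft in frame_times_ms:
        done = start + ft
        # Round up to the next refresh boundary (small epsilon guards float noise).
        present = math.ceil(done / interval - 1e-9) * interval
        presents.append(present)
        start = present
    return presents

def vrr_present_times(frame_times_ms):
    """With VRR (inside its range), each frame is shown as soon as it is finished."""
    presents = []
    t = 0.0
    for ft in frame_times_ms:
        t += ft
        presents.append(t)
    return presents

def on_screen_durations(present_times):
    """Gap between consecutive presents, i.e. the frame pacing the viewer sees."""
    previous = [0.0] + present_times[:-1]
    return [b - a for a, b in zip(previous, present_times)]

frame_times = [8.0, 8.6, 8.0, 8.6]  # made-up render times in ms
print([round(d, 2) for d in on_screen_durations(vsync_present_times(frame_times, 120))])
print([round(d, 2) for d in on_screen_durations(vrr_present_times(frame_times))])
```

With frame times alternating between 8.0 and 8.6 ms, the V-Sync model shows frames for about 8.33 ms, then 16.67 ms, then 8.33 ms, and so on, which reads as judder. The VRR model simply follows the 8.0/8.6 ms cadence.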


Nvidia Says Native Resolution Gaming is Out, DLSS is Here to Stay

- Thread starter 1_rick

- Status: Not open for further replies.

Yeah, that's more effort than I want to put into what I know would be a worse experience.

I simply disabled G-Sync in the NVCP and tested Cyberpunk with FG at 120Hz, locked with V-Sync. It was very bad.

Bankie

2[H]4U

- Joined: Jul 27, 2004
- Messages: 2,469

Big plans for DLSS in the future.

https://wccftech.com/nvidia-predict...ith-game-engines-for-much-better-visuals/amp/


In the final segment of the recent 'AI Visuals' roundtable hosted by Digital Foundry, NVIDIA's VP of Applied Deep Learning Research Bryan Catanzaro said he believes future releases of DLSS, perhaps in version 10, could take care of every aspect of rendering in a neural, AI-based system.

I do not believe that AI is gonna build games in a way where you just write a paragraph about making a cyberpunk game and then pop comes out something as good as Cyberpunk 2077. I do think that let's say DLSS 10 in the far future is going to be a completely neural rendering system that interfaces with a game engine in different ways, and because of that, it's going to be more immersive and more beautiful.


Will we need to?

At that point, you'd think we're running path-traced games which are practically a match for offline renderers.

Or maybe it's just an "optimization." Like, you just need hardware to render the bare minimum, and the neural network will hallucinate the rest over it.

Imagine remasters where there are actually no real changes to any of the original. All the new visuals are just AI barfing out the new image.

NightReaver

2[H]4U

- Joined: Apr 20, 2017
- Messages: 3,818

Can we tell the AI NOT to mess up the actual style of the original game? It seems like a lot of remasters run into that issue.

All the new visuals are just AI barfing out the new image.

Will we need to? At that point, you'd think we're running path-traced games which are practically a match for offline renderers.

If I want to make a game, compare doing what Pixar has to do to make a movie (with a $200-300 million budget) with just telling an AI "I want a sad-looking horse walking down that path," looking at the result, and saying "make it more gray." The second option is way more interesting. It's not so much about performance as about how easy the game engine is to work with.

Also, games are highly interactive, but they could be an order of magnitude more so. Imagine if game assets could adapt to the player's previous choices in unpredictable ways (or in too many possible ways to realistically make all of them by hand).

One example of such a game is a zombie survival game that lets you start wherever you want in the world, say, your current home address. Someone has tried to make one, and it could become more feasible over time. It looks at Google Maps imagery and generates a 3D first/third-person world for you to play in, a bit like Flight Simulator does but at a more granular level. It places food in food stores based on the map data, pharmacy items in pharmacies, gasoline in gas stations, and so on. Then the world evolves over time as it goes without grid electricity, and so on. Something that could generate game assets from a list of descriptions in the game engine would make this much more achievable for a single developer.

At first, I can see low-budget movies and games being interested in a mostly, if not fully, neural render path as a way to save steps. Big productions could use it to become fully interactive with the player's choices (they would have built a lot of the world, trained the AI on it, directed it, etc., not just typed "make me a small town in the style of Mad Max 1").
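The "place food in food stores, pharmacy items in pharmacies" step is the least exotic part of that idea. Once map data gives you points of interest with a category, loot can be assigned from per-category tables. This is a minimal sketch. The POI list, category names, loot tables, and the `place_loot` helper are all hypothetical, made up for illustration. A fixed random seed keeps the generated world for a given address reproducible.

```python
import random

# Hypothetical loot tables keyed by the map category of a point of interest.
LOOT_TABLES = {
    "supermarket": ["canned beans", "rice", "bottled water"],
    "pharmacy": ["bandages", "painkillers", "antibiotics"],
    "fuel": ["gasoline can", "motor oil"],
}

def place_loot(pois, items_per_poi=2, seed=0):
    """Assign loot to each POI from the table matching its category.

    pois: list of (name, category) pairs, e.g. derived from map data.
    Unknown categories get no loot. The seed makes placement repeatable.
    """
    rng = random.Random(seed)
    placed = {}
    for name, category in pois:
        table = LOOT_TABLES.get(category, [])
        k = min(items_per_poi, len(table))
        placed[name] = rng.sample(table, k)  # k distinct items from the table
    return placed

pois = [
    ("Corner Grocery", "supermarket"),
    ("Main St Pharmacy", "pharmacy"),
    ("Park", "park"),  # no table for this category, so it stays empty
]
print(place_loot(pois, seed=42))
```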

Maybe we won't need it, but the best hardware we currently have barely manages an average of 30 fps with path tracing.

If we have reached a state of diminishing returns on silicon and games continue to evolve as they have been, I don't think 10 years would be enough time to get the hardware to a place where we could do that without upgrading to 220V, which isn't feasible.

Newer, more efficient render paths would be a solution. I don't know exactly what that looks like, but I'd hear them out.

But the current silicon roadmaps only net us 30% or so in performance over the next 6 years, so there's no way we more than double it in 10.

Now, with architectural changes and other technologies, maybe they squeeze out more, but I'm not sure I like the price tag my brain is pulling up for that.

Better, faster, stronger hardware is great, but that doesn't mean we can't also have smarter, more efficient software. Developers already tweak the hell out of their game engines; why not let them tweak the render paths too?
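The roadmap figure in this post (roughly +30% over 6 years) can be checked with simple compound-growth arithmetic. This sketch assumes the gain compounds at a constant yearly rate, which is a simplification. The 30% figure is the poster's estimate, not a published number, and the helper names are illustrative.

```python
import math

def annual_multiplier(total_gain, years):
    """Per-year growth factor implied by a total gain over a period (1.30 means +30%)."""
    return total_gain ** (1.0 / years)

def projected_gain(total_gain, years, horizon_years):
    """Extend the same compound yearly rate over a different horizon."""
    return annual_multiplier(total_gain, years) ** horizon_years

def years_to_double(total_gain, years):
    """How long it takes to reach 2x at the same compound rate."""
    return math.log(2) / math.log(annual_multiplier(total_gain, years))

# The post's figure: roughly +30% over the next 6 years.
print(f"{projected_gain(1.30, 6, 10):.2f}x after 10 years")
print(f"{years_to_double(1.30, 6):.1f} years to reach 2x")
```

At that rate, 10 years gives roughly 1.55x, and doubling takes close to 16 years. That is consistent with the post's "no way do we more than double it in 10."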

BG5 but with a full AI running as the DM…

Baldur's Gate is a clear example of where generative AI would be used, and a clear plus.

For one, your character not speaking during dialogue is quite jarring, especially since you are involved in almost every conversation and everyone else speaks. Recording voices for every way a character could sound, from a female gnome wizard to a male orc barbarian, would have been too much, but it seems like a perfect task for AI. Not having to pre-record all NPC dialogue seems like a big plus (you could still do it for the most important character moments to get the best performances). Your choices could shape how the world looks in very specific ways: paintings on walls of your legendary exploits, bards singing songs about the custom things that happened in your world, etc. Some of this generative AI work is easy and certain to happen. It could show up as soon as games that start development in 2025 and release in 2029-2031. It will happen much sooner in small games that use it to get attention, but I mean serious big games, where attention is not the point.

Then, maybe harder and further down the line, AI-driven NPC desires and quests, i.e., an AI DM, could also be interesting.

A Crusader Kings game could be another one where generative AI could do a lot of fun stuff. It could generate short movies of your royal hunts, decorate the throne room with paintings of your ancestors at key moments, and add voiced dialogue (or even full-on made-up movies).

Maybe we won't need it, but the best hardware we currently have barely manages an average of 30 fps with path tracing.

If we have reached a state of diminishing returns on silicon and games continue to evolve as they have been, I don't think 10 years would be enough time to get the hardware to a place where we could do that without upgrading to 220V, which isn't feasible.

Nvidia has like 4x'd their ray tracing performance since the 2080 Ti. Maybe even a bit more.

I'm not terribly concerned with it suddenly stagnating.

StoleMyOwnCar

2[H]4U

- Joined: Sep 30, 2013
- Messages: 3,011

I think we're close to procedurally generated full storylines, along with on-the-fly assets to back them. I made a post to that effect in the Starfield thread (which continues for a bit of back and forth):

https://hardforum.com/threads/starfield.1962232/page-42#post-1045724837

Naturally, most people are going to disagree (and a lot of people in this forum are a bit conservative)... but if AI specialists, along with some willing game developers and artists, got together and decided to randomly try to create this, I can almost guarantee you it's already possible. It would just take a lot of fine-tuning of the AI models. Maybe a year or two... but yeah, the stuff is already here. It's scary how close we are.

The future is going to be a fun time.

By fun I mean mass unemployment.

They have added nearly that much more in Tensor cores to the cards as well.

Nvidia has like 4x'd their ray tracing performance since the 2080 Ti. Maybe even a bit more.

I'm not terribly concerned with it suddenly stagnating.

Gideon

2[H]4U

- Joined: Apr 13, 2006
- Messages: 3,558

I think people overrate the current capabilities of supposed AI. It also takes a ton of processing power for it to do much of anything; we're decades away from it being able to really think for itself. An AI-designed game and graphics would be a laughable thing to see, though.

Don't think of it like that. Instead, suppose the developer provides a horse model with a full skeleton, proper articulation, material properties, weight, size, blah blah blah. A fully defined horse. And the game calls for a horse to walk down the road, or for a group of horses to graze in a pasture.

Could an AI animate that using the skeleton and its knowledge of what a horse looks like when walking, grazing, or running? Then path it from A to B?

Or even easier: cars on a road, or pedestrian traffic? Could an AI just randomly generate background characters? And what if that traffic wasn't actually rendered at all, but generated by the AI from the game's assets entirely within the frame generation system? Just empty square hitboxes with AI-generated people, cars, objects, etc. superimposed over the top?

Because the hardest part of any open-world game is filling the world with things that are worth doing or visually interesting (not-so-subtle dig at Ubisoft). But AI is already at a place where it can build good-looking, convincing open-world environments based on images and descriptions of what you want the area to have. Why not expand it to NPCs or moderately interactive content?
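The "empty hitboxes with AI-generated visuals on top" idea can be sketched as a split between two layers. Gameplay and physics only ever see simple boxes. Each frame, the engine hands a list of box-plus-description requests to a generative pass that would decide what to draw. This is a hypothetical illustration of the post's idea, not how DLSS or any shipping engine works. `Proxy`, `overlaps`, and `build_generation_requests` are made-up names, and the generative pass itself is left out.

```python
from dataclasses import dataclass

@dataclass
class Proxy:
    """Gameplay-only stand-in (hypothetical): a 2D axis-aligned box plus a text description."""
    name: str
    min_xy: tuple
    max_xy: tuple
    description: str  # what the imagined generative pass would draw in this box

def overlaps(a, b):
    """Standard axis-aligned bounding box (AABB) intersection test; touching edges don't count."""
    return (a.min_xy[0] < b.max_xy[0] and b.min_xy[0] < a.max_xy[0]
            and a.min_xy[1] < b.max_xy[1] and b.min_xy[1] < a.max_xy[1])

def build_generation_requests(proxies, view_min, view_max):
    """Collect (box_min, box_max, description) for every proxy inside the view rectangle.

    A real renderer would project 3D boxes into screen space; this 2D version only
    culls against an axis-aligned view rectangle to keep the sketch short.
    """
    view = Proxy("view", view_min, view_max, "")
    return [(p.min_xy, p.max_xy, p.description) for p in proxies if overlaps(p, view)]

car = Proxy("car_01", (0, 0), (4, 2), "grey hatchback, slightly rusty")
walker = Proxy("ped_07", (3, 1), (3.5, 1.5), "pedestrian in a red raincoat")
horse = Proxy("horse_02", (50, 50), (52, 51), "brown horse grazing")

print(overlaps(car, walker))  # the gameplay layer still registers this collision
for request in build_generation_requests([car, walker, horse], (-10, -10), (10, 10)):
    print(request)  # only the car and the pedestrian are in view
```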


staknhalo

Supreme [H]ardness

- Joined: Jun 11, 2007
- Messages: 6,924

Generative AI (making things like scripts, engines, textures, sounds, etc.) doesn't need, or need to be, General Artificial Intelligence AI (its own completely self-thinking digital individual or whatever).

Edit: And yes, 'General Artificial Intelligence AI' - just like ATM Machine


StoleMyOwnCar

2[H]4U

- Joined: Sep 30, 2013
- Messages: 3,011

We don't need it to think for itself to just create a game (or a subquest inside a game). All you need to create a game is assets, a storyline, and interactivity. Physics engines and interactivity are more or less already handled by developers on a pretty generic basis. Modeling and piecing together the level on a 3D map can even be done if the AI simply generates a height map with relevant points to go along with it. Storylines and assets can already be created by contemporary AI (assets with Stable Diffusion, provided you don't want 4k x 4k textures). Sure, both can end up being silly, but simply creating a story with dialogue (even a slightly branching story with dialogue) is not going to be too hard for even contemporary AI. You do not need them to "think for themselves", so I'm not sure why people keep thinking that's the bottom-line requirement for an "AI" to create a game. In reality, it's just advanced machine learning that generates via what is essentially very advanced interpolation. And no, you don't need a supercomputer... even my 2080 can generate a 512x512 image very quickly. Give it about a minute and you probably have 40-60 images. Upscaling to 1k textures would take longer, but that's a different goalpost.

The only reason you would need an AI to think for itself is if you wanted lifelike interaction with the NPCs, basically an actual DM thinking for each individual actor in the story. That's not really necessary for a game; that's necessary to recreate real life inside a game...
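Turning a generated height map into level geometry, as the post suggests, is the routine part. Each height sample becomes a vertex, and each grid cell becomes two triangles. This is a minimal sketch. It assumes the height map is a list of rows of numbers with a uniform `cell_size` (an illustrative parameter). Real terrain systems add normals, UVs, LOD, and collision on top of this.

```python
def heightmap_to_mesh(heights, cell_size=1.0):
    """Turn a 2D grid of heights into (vertices, triangles).

    vertices: list of (x, y, z), with z taken from the height map.
    triangles: list of vertex-index triples, two per grid cell, all with the same winding.
    """
    rows = len(heights)
    cols = len(heights[0]) if rows else 0
    vertices = [
        (c * cell_size, r * cell_size, float(heights[r][c]))
        for r in range(rows)
        for c in range(cols)
    ]
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c      # top-left corner of this cell
            right = i + 1         # top-right corner
            below = i + cols      # bottom-left corner
            diag = below + 1      # bottom-right corner
            # Split the quad along one diagonal into two triangles.
            triangles.append((i, below, right))
            triangles.append((right, below, diag))
    return vertices, triangles

hm = [
    [0.0, 0.5, 1.0],
    [0.0, 1.0, 2.0],
]
verts, tris = heightmap_to_mesh(hm, cell_size=2.0)
print(len(verts), len(tris))
```

A 2x3 height map gives 6 vertices and 2 cells, so 4 triangles. The same function works unchanged for a 1024x1024 map produced by a generator.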

OutOfPhase

Supreme [H]ardness

- Joined: May 11, 2005
- Messages: 6,581

Agreed. "AI" is very good at interpretation and extrapolation from existing data/training sets. In its current forms, it is not very good at making truly novel things at all.

I think people overrate the current capabilities of supposed AI. It also takes a ton of processing power for it to do much of anything; we're decades away from it being able to really think for itself. An AI-designed game and graphics would be a laughable thing to see, though.

erek

[H]F Junkie

- Joined: Dec 19, 2005
- Messages: 10,906

Is this basically the same content/article?

Big plans for DLSS in the future.

https://wccftech.com/nvidia-predict...ith-game-engines-for-much-better-visuals/amp/

Nvidia Hints at DLSS 10 Delivering Full Neural Rendering, Potentially Replacing Rasterization and Ray Tracing

https://www.tomshardware.com/news/n...ially-replacing-rasterization-and-ray-tracing

GoldenTiger

Fully [H]

- Joined: Dec 2, 2004
- Messages: 29,688

Yep.

Is this basically the same content/article?

imsirovic5

Limp Gawd

- Joined: Jun 21, 2011
- Messages: 341

I do not understand why some people are upset at image reconstruction techniques like DLSS, or at the upcoming replacement of rasterization and ray tracing with full neural rendering. Is it because somehow this does not represent reality accurately? Are people aware that we humans do not perceive reality accurately? It is a well-established fact that our brains do not perceive reality as it really is, for example:

https://neuroscience.stanford.edu/n...ur-brain-here-s-what-means-and-why-it-matters

Take a look at Donald Hoffman (a cognitive psychologist at the University of California, Irvine), who argues that our brain actually constructs reality. What we perceive is an approximation of the actual reality, kind of like looking at a computer interface and seeing icons as representations of the underlying hardware. It is well established that most of our brain power is used in actually constructing "reality" for us.

So if everything I am experiencing in this "physical" world is fake, how can I be mad at our attempts to do the same with computer games?


DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,950

I think you're misunderstanding the problem here. For years we've had a lot of situations where both ATI and Nvidia would cheat in games with their image quality. ATI is known for their Quake/Quack situation, and Nvidia frequently cheated in 3DMark in the past. The method of choice was to not render something and hope that nobody would notice. Today we have software rendering tools to make sure that whatever Nvidia and AMD render is rendered accurately, because we don't want to deceive the customer. With things like DLSS and FSR, what is accurate anymore? We know that DLSS and FSR lower image quality, but the idea is that it's an acceptable image quality loss. When Nvidia says that native resolution is out the door, they're also saying that you'll take the inaccuracies and love it. It's also a problem for them that in order to make graphics cards more powerful, they need to spend more on silicon, cooling, and power consumption. There's a reason why the AMD RX 5500, RX 480, and RX 6500 are nearly identical in performance, with some outliers here and there. You see the same situation with Nvidia's RTX 2060, 3060, and 4060, in that there aren't any huge leaps in performance. AMD and Nvidia don't want to lower the price of better GPUs when they can barely find resources to make their high-end GPUs attractive enough for consumers to buy. You think an RTX 4090 owner is going to use DLSS in their games? With that kind of performance, why would you? Ray Reconstruction is a different story, since Nvidia is pretty much admitting that you can't do ray tracing without upscaling, and DLSS 3.5 is just that combined.

GoodBoy

2[H]4U

- Joined: Nov 29, 2004
- Messages: 2,772

DLSS has come a long way since introduction.

Complete rasterization replacement is very likely achievable. I'm sure the only point in going there will be better visuals... if it looks worse, no one will want it. So I am really not worried that this is the future some Nvidia engineers are predicting.

jobert

[H]ard|Gawd

- Joined: Dec 13, 2020
- Messages: 1,575

There are people who claim to never see ghosting or other issues with DLSS or DLAA, which is laughable. Until garbage like this piss-poor DLAA implementation is no longer an issue, I will not like the thought of having to fully rely on it.

https://www.youtube.com/shorts/LmLrMHxQLWA
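Ghosting like this is characteristic of temporal accumulation, which TAA, DLSS, and DLAA all build on. Each output pixel blends the current frame with a running history of earlier frames. If that history isn't thrown away when an object moves off the pixel (because of bad motion vectors, a missed reset, or a bug), the old color lingers and fades out gradually. This is a minimal one-pixel sketch. It assumes a fixed exponential blend weight `alpha`, which is a hypothetical parameter. DLSS and DLAA use a trained network to decide how much history to keep, and this sketch does not model that.

```python
def accumulate(samples, alpha=0.1, reject_history_at=None):
    """Exponential history blend for one pixel: out = alpha*current + (1-alpha)*history.

    reject_history_at: optional frame index at which the history is reset, which is
    what correct disocclusion/motion handling would do when the object leaves.
    """
    history = samples[0]
    out = [history]
    for i, current in enumerate(samples[1:], start=1):
        if reject_history_at is not None and i == reject_history_at:
            history = current  # discard stale history instead of blending it
        else:
            history = alpha * current + (1 - alpha) * history
        out.append(history)
    return out

# A bright object (1.0) covers the pixel for 5 frames, then moves away (0.0).
pixel = [1.0] * 5 + [0.0] * 10
ghosted = accumulate(pixel)
clean = accumulate(pixel, reject_history_at=5)
print([round(v, 2) for v in ghosted[5:10]])  # a fading ghost of the old color
print(clean[5:10])  # history rejected: the old color is gone immediately
```

Without rejection, the stale color decays as (1 - alpha)^n per frame, so a smaller `alpha` gives smoother accumulation but longer ghost trails. That is the basic trade-off temporal techniques have to manage.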


You think an RTX 4090 owner is going to use DLSS in their games? With that kind of performance, why would you? Ray Reconstruction is a different story, since Nvidia is pretty much admitting that you can't do ray tracing without upscaling, and DLSS 3.5 is just that combined.

I use DLSS everywhere I can, in combination with DLDSR. Much better image quality than native + TAA. Even at native res, DLSS Quality can often look better than TAA, not even counting the heavy performance uplift. It is often pretty silly not to use DLSS instead of native 4K.
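The DLDSR + DLSS combination works out because the two scale factors roughly cancel. DLDSR raises the output resolution the game sees, and DLSS then renders internally at a fraction of that. This minimal sketch of the arithmetic assumes the commonly cited per-axis DLSS scale factors (Quality ≈ 0.667, Balanced 0.58, Performance 0.5, Ultra Performance ≈ 0.333). Individual games can override them. It also assumes that DLDSR's 1.78x and 2.25x labels refer to total pixel count, i.e. 4/3 and 3/2 per axis.

```python
# DLDSR factors are labeled by total pixel count; per axis they are 4/3 and 3/2.
DLDSR_AXIS_SCALE = {"1.78x": 4 / 3, "2.25x": 3 / 2}

# Commonly cited per-axis DLSS render scales (games can override these).
DLSS_AXIS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}

def dldsr_resolution(native_w, native_h, factor):
    """Output resolution the game renders to when a DLDSR mode is selected."""
    s = DLDSR_AXIS_SCALE[factor]
    return round(native_w * s), round(native_h * s)

def dlss_internal_resolution(out_w, out_h, mode):
    """Resolution DLSS renders at before reconstructing to out_w x out_h."""
    s = DLSS_AXIS_SCALE[mode]
    return round(out_w * s), round(out_h * s)

native = (3840, 2160)  # 4K display
for factor in ("1.78x", "2.25x"):
    target = dldsr_resolution(*native, factor)
    internal = dlss_internal_resolution(*target, "quality")
    print(f"DLDSR {factor}: output {target}, DLSS Quality renders {internal}")
```

With the 2.25x mode on a 4K screen, DLSS Quality ends up rendering at native 4K internally. The GPU does roughly native-resolution work, but the image passes through DLSS reconstruction and then DLDSR's downsampling. That is one plausible reading of why the combination can beat native + TAA.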

There are people who claim to never see ghosting or other issues with DLSS or DLAA, which is laughable. Until garbage like this piss-poor DLAA implementation is no longer an issue, I will not like the thought of having to fully rely on it.

https://www.youtube.com/shorts/LmLrMHxQLWA

It's just a bug in LOU1. You get ghosting when you start the game with DLSS/DLAA, or if you switch from TAA to DLSS/DLAA. Try switching to FSR first and then back to DLSS/DLAA, and the ghosting should be gone.

jobert

[H]ard|Gawd

- Joined: Dec 13, 2020
- Messages: 1,575

If that even works, it's still ridiculous and something no user should have to figure out or deal with. And are we going to make excuses for every other game where there are obvious DLSS/DLAA issues?

EDIT: And no, that did not fix the blurring with DLAA.

I just tested it again. You need to enable FSR first, then DLSS, and then you can enable DLAA without ghosting. If you go back to TAA, it's FSR, then DLSS, then DLAA again. What a weird bug. I did replace the DLSS file with the latest DLSS 3.5.0, though.

If that even works, it's still ridiculous and something no user should have to figure out or deal with. And are we going to make excuses for every other game where there are obvious DLSS/DLAA issues?

I'm not making excuses, but I don't think I've experienced such a bug in any game. You make it sound like DLSS ghosting issues are widespread; in my experience, they're still pretty rare. The latest issues with CP and Ray Reconstruction are a different story.

RamboZombie

Limp Gawd

- Joined: Jul 11, 2018
- Messages: 141

I have a 4090, and I always use DLSS Quality if it's available. It kinda looks the same... and usually with a better framerate.

I have a 4K 240Hz monitor, so I can always use more frames, or less noise if it's an older game that already runs closer to 240Hz.

I actually think a game like Horizon Zero Dawn looks waaay better with DLSS Quality.

So there: a 4090 user who uses DLSS.

Can we really blame them when Devs keep releasing games with PS4 graphics and worse performance over and over?

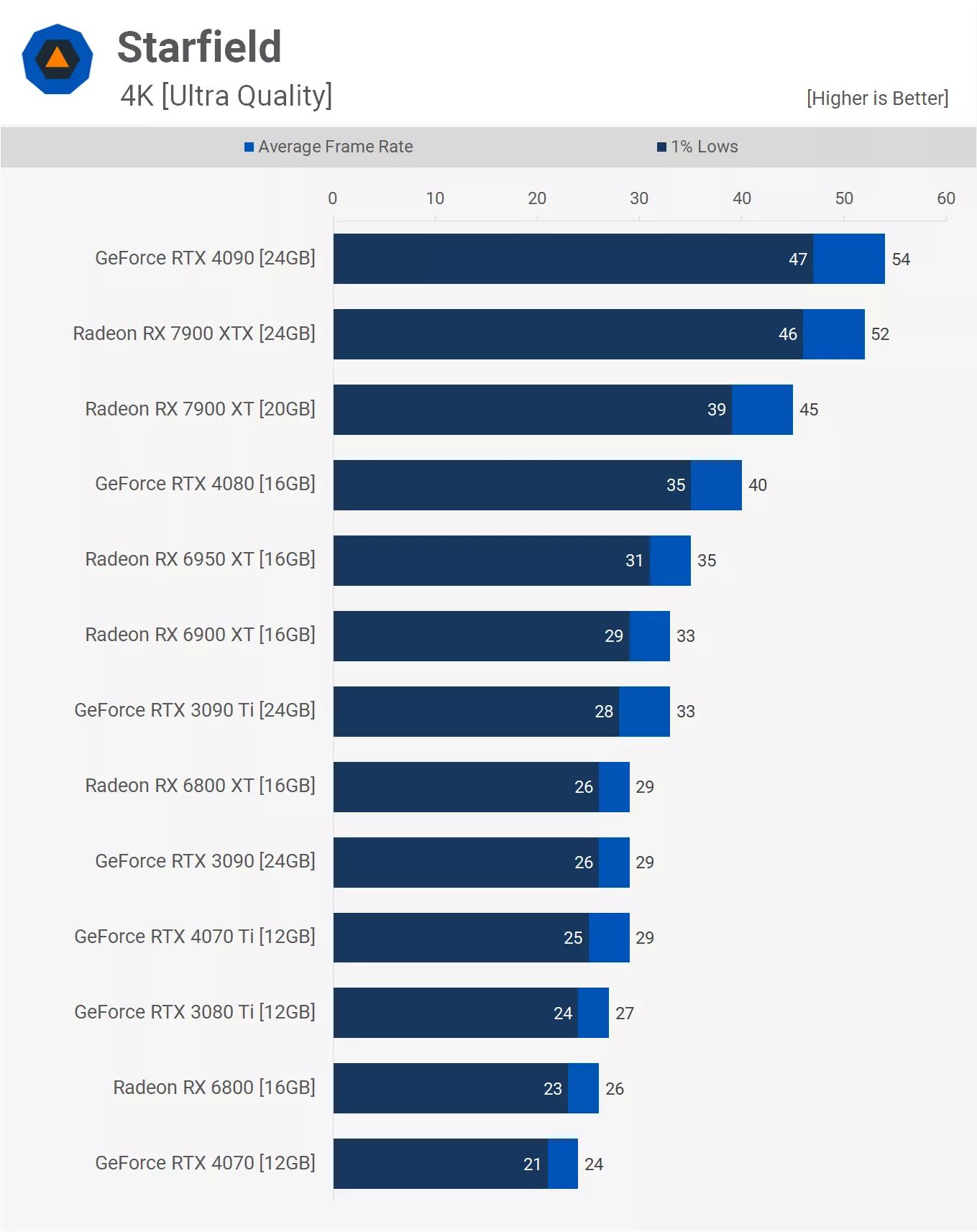

I have no doubt that they will continue to lead the pack in performance regardless of dlss on or off. But I can't blame them for pushing dlss so heavily when devs keep releasing games with dog shit performance and last gen graphics. We have people touting BS claims like 7900XTX keeping pace and even outperforming 4090 because a lot of these games seem to be hampering performance on the competitors HW. It's fucking wild man.

That's right, the PS4 needs to be put down. Isn't the PS5 shortage over? But they still release games on it, so I guess not.

By the end of its 3rd year on the market, the PS4 had sold nearly double what the PS5 has sold in the same timeframe.

After 3 years, Sony had shipped nearly 70 million PS4s, whereas now they are “bragging” that the PS5 has shipped just north of 40 million units.

That is a big market difference, and developers who signed exclusive contracts with Sony can't make ends meet on the PS5 alone.

So while the shortage may be over, many users are holding out for Christmas, which is when the bulk of console sales happen, or for the PS5 Pro (or whatever they call it), because Sony's partners keep leaking and teasing it.

This is not a good generation for consoles, and Sony hasn't been shy about throwing AMD under the bus in front of their shareholders.


So basically, most games won't look good until the PS4 dies.

It probably doesn't help that the PS5 version of any game is usually 10% more expensive than the PS4 version... which gets upgraded to PS5 for free.

You think an RTX 4090 owner is going to use DLSS in their games? With that kind of performance, why would you?

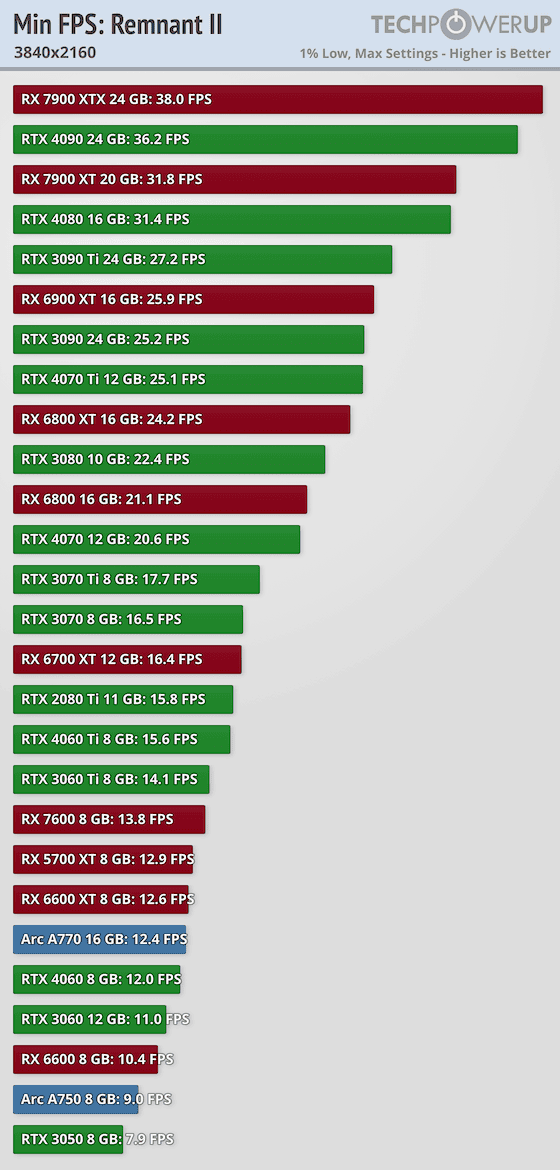

No RT (Aveum has Lumen always on too):

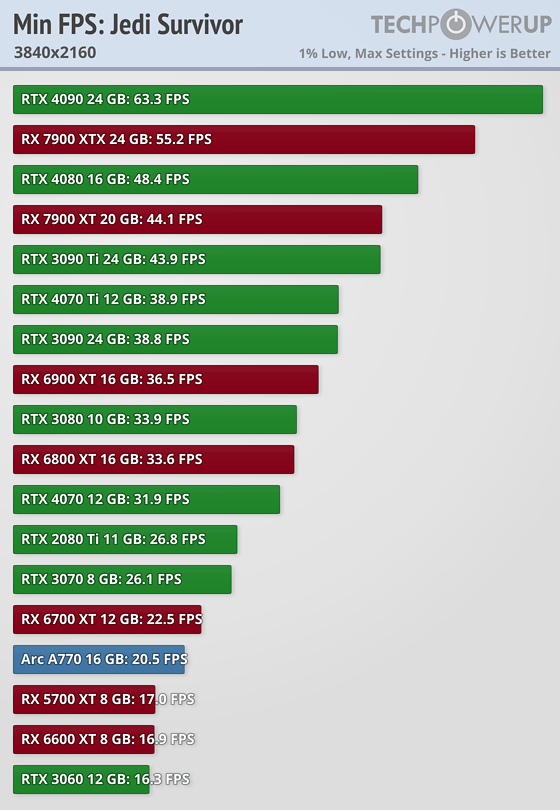

1% lows under 45 fps, even with a 7900 XTX or 4090 on a 4K TV, seem like they won't be that rare.
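"1% low" figures like these are derived from frame times rather than from the average frame rate, which is why they can sit far below the average. Tools don't all compute the metric the same way. One common definition, assumed here, averages the slowest 1% of frames and converts that back to fps. Another uses the 99th-percentile frame time. The frame-time data below is made up for illustration.

```python
def one_percent_low_fps(frame_times_ms):
    """Average fps over the slowest 1% of frames (at least one frame)."""
    worst = sorted(frame_times_ms, reverse=True)
    n = max(1, len(worst) // 100)
    avg_worst_ms = sum(worst[:n]) / n
    return 1000.0 / avg_worst_ms

def average_fps(frame_times_ms):
    """Average fps over the whole run: total frames divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

# 990 smooth frames at 12.5 ms (80 fps) plus 10 stutters at 25 ms
frames = [12.5] * 990 + [25.0] * 10
print(round(average_fps(frames), 1), round(one_percent_low_fps(frames), 1))  # 79.2 40.0
```

Just 1% of frames at 25 ms is enough to pull the 1% low down to 40 fps while the average stays near 80 fps.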

Like many technics to improve speed, does DLSS and FSR lower image quality at the same frame rate than native (with the compromise made to achieve that same frame rate), in most case DLSS-FSR augment image quality, like the previous inferior upscaling technic did but better. It could make pure 1:1 comparison impossible (like in between console, 3dfx Glide days, etc...)We know that DLSS and FSR lower image quality, but the idea is that it's acceptable image quality loss.

Thank you for saying this better than I have.I think you're misunderstanding the problem here. For years we've had a lot of situations where both ATI and Nvidia would cheat in games with their image quality. ATI is known for their Quake Quack situation, and Nvidia would often cheat in 3DMark frequently in the past. The method of choice was to not render something and hope that nobody would notice it. Today we have software rendering tools to make sure that whatever Nvidia and AMD are rendering are done accurately, because we don't want to deceive the customer. With things like DLSS and FSR, what is accurate anymore? We know that DLSS and FSR lower image quality, but the idea is that it's acceptable image quality loss. When Nvidia says that native resolution is out the door, they're also saying that you'll take the inaccuracies and love it. It's also a problem for them that in order to make graphic cards more powerful, they need to spend more on silicon and cooling and power consumption. There's a reason why the AMD RX 5500 and RX 480 and RX 6500 are nearly identical in performance with some outliers here and there. You see the same situation with Nvidia's RTX 2060, 3060, 4060 in that there isn't any huge leaps in performance. AMD and Nvidia don't want to lower the price of better GPU's when they can barely find resources to make their high end GPU's attractive enough for consumers to buy them. You think an RTX 4090 owner is going to use DLSS in their games? With that kind of performance, why would you? Ray-Reconstruction is a different story, since Nvidia is pretty much admitting that you can't do Ray-Tracing without upscaling, and DLSS 3.5 is just that combined.

Well, until a year or two after the PS5 dies, because games already in the pipeline probably aren't going to get a graphical overhaul.

So basically most games won't look good until the PS4 dies

Nvidia is pretty much admitting that you can't do Ray-Tracing without upscaling, and DLSS 3.5 is just that combined.

Is DLSS 3.5 all software changes?

https://m.youtube.com/watch?v=HQdzWgiUy8w

DLSS 3.5 seems to automatically add some realism; you can see the floor coloring change to a stronger purple versus the non-DLSS version.

Totally different look. Not sure if it's good or bad, but it's interesting how it changes the overall lighting to that extent.

I actually think DLSS 3 looks better than 3.5



Yeah it's all software, just a different algorithm for determining how the lighting is calculated and it's more accurate when dealing with color blends and multiple bounces, but it's computationally expensive as hell.

But if you were to ask an artist to draw a picture and they had those lights above what OSL shades would they have gone with? The answer is a lot closer to the DLSS 3.5 answer than the others.

Yeah, I get that it's more realistic lighting, but maybe it's too noticeable of a change. I kind of like the fake lighting better than the more realistic version.


I think the big difference is the shadows for very thin elements like the cardboard on the floor; they ground things. Regardless of what the light color should be, human brains rely heavily on shadows to build a 3D model of the world.

but maybe too noticeable of a change

Alan Wake 2, around the plates:

https://youtu.be/HwGbQwoMCxM?t=14

I think this DLSS 3.5 tech is amazing; it's basically a cheat code to get one to two generations' worth of performance improvement purely through software. With path tracing in Cyberpunk 2077, I think we've reached the next generation of graphics, and I'm looking forward to seeing what other devs are capable of.

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,950


From what I saw, DLSS 3.5 in Cyberpunk seems to sharpen the hell out of reflections. That kinda looks good, but it does raise the question of whether it's realistic. Gamers Nexus kept pointing out how much sharper the reflections look, but maybe they're a bit too sharp. The thing I noticed was the amount of ghosting: the farther away an object is, the more ghosting you see. DLSS 3.5 brings good and bad, and that's the problem. Why not just good? Should we excuse the problems that occur with DLSS 3.5? Nvidia wants it to be the future, but first they should focus on making it render as well as TAA. Personally, if I want a performance boost I would turn off TAA, but I'm not sure most modern games even allow that now. There's a whole subreddit dedicated to disabling TAA. I've always been that guy who injects SMAA with ReShade, because it's better.
