Domingo

Fully [H]

- Joined

- Jul 30, 2004

- Messages

- 22,642

DLSS 3 feels like something out of left field that probably needed a different name.


> A really short version of my entire thoughts on the release: I don't regret recently buying a 3080 12GB.

I definitely don't regret my $779 AIO-cooled EVGA 3080 card from Dec 2020.

> If the 4080 12GB is the same performance as a 3090, and 3090s can be had for 650-700 used or 800-900 new, and the 4080 12GB uses 35 fewer watts and has half the memory of the 3090, it's a no-brainer to get a 3090, or hell, a 3090 Ti.

Used 3090 prices will probably adjust if that's the case, but I suspected some deals on those 3090 Ti cards were not bad at all (really good, even, for people for whom 24 GB has any relevance).

Showing a marketing slide from a company that wants to sell you a product and believing it is not a good idea. Wait until unbiased honest reviews because Nvidia, Intel and AMD all fucking try to bullshit stuff about their products.used 3090 price will probably adjust if that the case, but I suspected some deals on those 3090TI were not that bad at all (for people for who 24gig had any relevance, even really good).

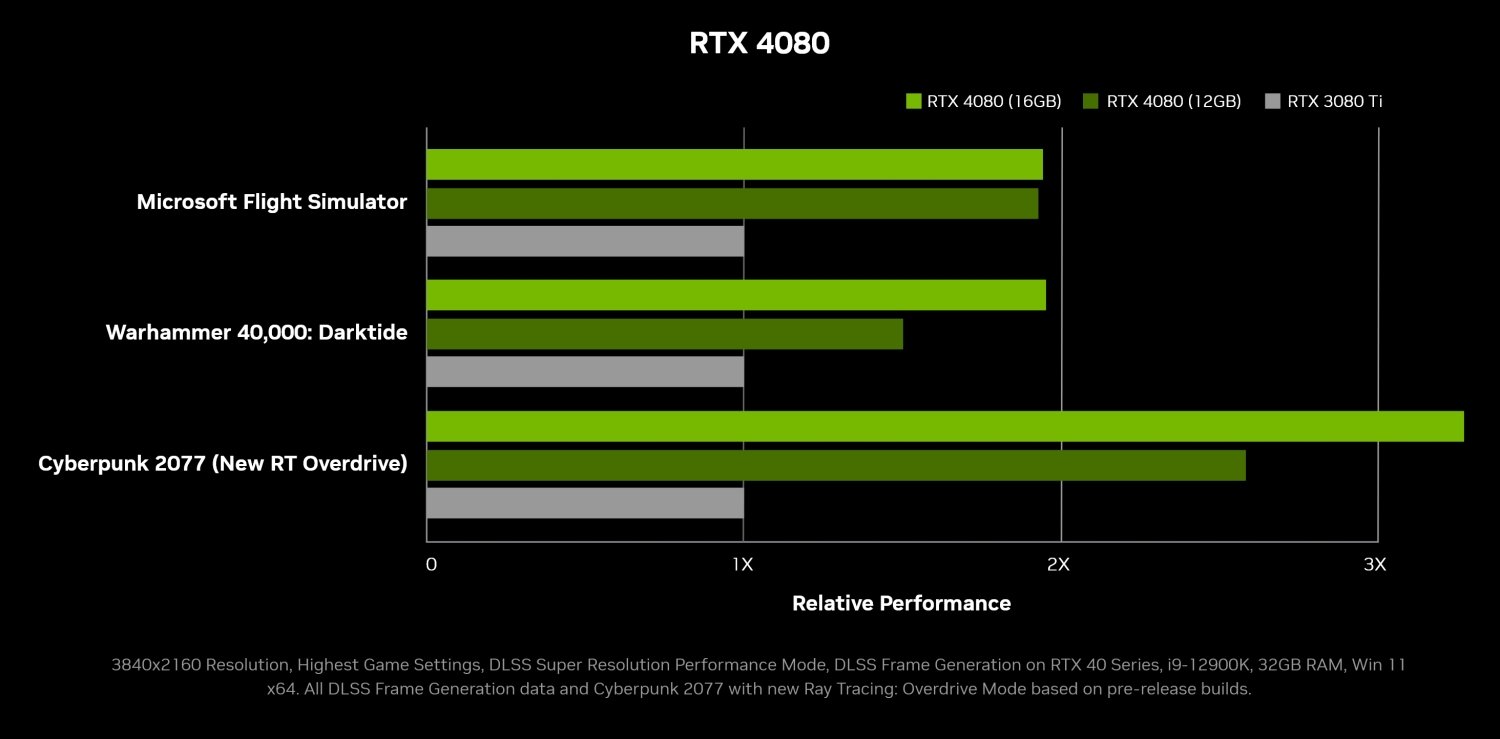

Will need to wait for review and how much better DLSS 3.0 really is, because according to this the 4080 12gb could be way better at gaming than the 3090, specially in the scenario that are actually hard enough to run for those cards to make sense:

View attachment 511986

Will have to see how those DLSS frame generation are worth versus regular frame obviously, but in those best case scenario a 4080 (12GB) could be between 1.5 to 2.5x time a 3080TI (that not that far from the 3090 gaming perf usually I think).

People look at bus wide, core numbers, VRAM amount to talk about justifying a xx80 tag or not, but in reality how/why would it not be purely a mix about the performance versus the previous generation and the upcoming competition that fully set the price and naming convention ? End of the days, all that matter is performance.

Like all the talk about RDNA 2 memory bandwidth (or almost all of the talk about ampere VRAM amount), end of the day purely irrelevant if the FPS are there.

> Showing a marketing slide from a company that wants to sell you a product and believing it is not a good idea.

In case those parts of the message were not clear enough: "if that's the case", "best-case scenario", "will have to see what those DLSS generated frames are worth versus regular frames, obviously", "according to this", "could be". We are all purely speculating and have nothing else to go on. I would assume 99.99% of the users of a message board named Hard will always look at less biased reviews rather than company cherry-picked benchmarks before making a decision, especially when the sample size is 3 titles that support DLSS 3.0.

> Used 3090 prices will probably adjust if that's the case, but I suspected some deals on those 3090 Ti cards were not bad at all (really good, even, for people for whom 24 GB has any relevance).

I can't imagine many people would be happy with the IQ of DLSS in performance mode, which makes those results fairly meaningless. We'll have a much better idea where these cards sit in terms of performance and efficiency after the NDA lifts.

> Marketing is one thing, but the pricing is also an indication: there is a 3090 at $960 on Newegg right now, so it is possible that a yet-to-be-released $900 12 GB card will be significantly better at gaming.

There is no FE 4080 12GB card, so there will only be AIB 4080 12GB cards, and therefore there will be no $900 4080 12GB card.

> Marketing is one thing, but the pricing is also an indication: there is a 3090 at $960 on Newegg right now, so it is possible that a yet-to-be-released $900 12 GB card will be significantly better at gaming.

Correct. I think Nvidia is going to wait until 3090 chips are almost gone, holding its price above $900, before releasing the 4070.

> There is no FE 4080 12GB card, so there will only be AIB 4080 12GB cards, and therefore there will be no $900 4080 12GB card.

I mean, they could surprise us with a cheap, boring blower style devoid of RGB bling, with a plain name like "<Brandname> RTX 2080 12G" and no stupid extreme gaming SNUFF BDSM edition bullshit as a suffix.

> Correct. I think Nvidia is going to wait until 3090 chips are almost gone, holding its price above $900, before releasing the 4070.
>
> The 4080 12GB (really a 4070) was priced higher, given the 4080 name, and made AIB-only to prevent the bottom from dropping out on 3090 chips.
>
> Imagine a 4070 12GB for $699 trouncing a 3090 when they have a warehouse full of them.
>
> I wouldn't be surprised if he made a price increase on the 4080s at the last minute; that's why the pricing slides didn't show all of the model prices.
>
> It's like the slide presenter was told to stop. lol

I can see it now: "FUCK, I just got off the phone with Jensen. You know the slides he approved? Scrap pages 18-24 and 36-45, just gloss over what would have been there as though it was a fleeting afterthought, and continue on with slide 46. And just don't shit your whole ass on stage and say something stupid like 'well, you all have RTX, don't you?'"

> I mean, they could surprise us with a cheap, boring blower style devoid of RGB bling, with a plain name like "<Brandname> RTX 2080 12G" and no stupid extreme gaming SNUFF BDSM edition bullshit as a suffix.

You idiot. There's no "e" in XTREME.

> You idiot. There's no "e" in XTREME.

Listen here, you beautiful bastard. I am in no mood for your games. I nearly pulled up dictionary.com because for a split second I forgot this was the internet, and I am pretty sure somebody pranked me and put me on Defaf for the day.

> I can see it now: "FUCK, I just got off the phone with Jensen. You know the slides he approved? Scrap pages 18-24 and 36-45, just gloss over what would have been there as though it was a fleeting afterthought, and continue on with slide 46. And just don't shit your whole ass on stage and say something stupid like 'well, you all have RTX, don't you?'"

lol go back and rewatch that part, the pricing slides. It's like someone was beyond nervous to click the mouse.

> Their graph numbers are believable. Of course they are: what they did was run the 3090 Ti at 4K with ray tracing and everything else set to MAX, then compare that to the 4000 series running DLSS 3.
>
> Seems a bit deceptive... but I can't say that for sure until people get cards to test. From what I heard Jensen say, these are 25% more powerful when we aren't talking about RT performance, or this new DLSS, which apparently is making frames up completely. I don't know; I'll reserve judgement on that until I actually see it in operation myself.

Deceptive? No fucking way. It just works. The more you buy, the more you save. On a serious note, if they got 25% more at the same power, that's great. If it's with 25% more wattage, that's bad. Power costs money.

> Deceptive? No fucking way. It just works. The more you buy, the more you save. On a serious note, if they got 25% more at the same power, that's great. If it's with 25% more wattage, that's bad. Power costs money.

The H100 in theory is 4x faster than the A100 at 2x the power draw, so that is technically half the electrical bill and one quarter the hours involved per job. Depending on your workload, that upgrade could pay for itself in a few months. So from the right perspective it's pretty truthful.
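For anyone who wants to check the arithmetic in that kind of claim, here is a minimal sketch. It assumes the job scales perfectly with the quoted speedup and that power draw is constant for the whole run, which real workloads won't quite do. The function name `relative_job_cost` is made up for illustration.

```python
def relative_job_cost(speedup: float, power_ratio: float) -> tuple[float, float]:
    """Return (time_ratio, energy_ratio) for one job on the new part vs. the old.

    Assumes perfect scaling and constant power draw (a simplification).
    """
    time_ratio = 1.0 / speedup               # a faster part finishes sooner
    energy_ratio = power_ratio * time_ratio  # energy = power x time
    return time_ratio, energy_ratio


# The 4x-faster-at-2x-power case from the post above.
hours, energy = relative_job_cost(speedup=4.0, power_ratio=2.0)
print(f"hours per job: {hours:.2f}x, energy per job: {energy:.2f}x")

# The two consumer cases mentioned earlier: 25% faster at the same power,
# and 25% faster with 25% more wattage.
same_power = relative_job_cost(speedup=1.25, power_ratio=1.0)
more_power = relative_job_cost(speedup=1.25, power_ratio=1.25)
print(f"25% faster, same power: energy per job {same_power[1]:.2f}x")
print(f"25% faster, 25% more power: energy per job {more_power[1]:.2f}x")
```

Under those assumptions, 25% more performance for 25% more wattage leaves energy per job unchanged, so the only gain is finishing sooner.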

First Look - ASUS GEFORCE RTX 4090 & 4080 ROG STRIX & TUF GAMING graphics cards

> 4080 series doesn't seem that impressive. The 12GB version definitely should have been a 4070.
>
> I'm really eager for some real benchmarks. What I would really like to know is how the 3090 and 3090 Ti stand up against the various versions of the 4080 (ignoring DLSS, since none of the games I play use it). I mean, obviously the 4090 will be faster than a 3090 Ti, but it seems really unclear to me where the 4080 cards will fit in.
>
> My 2080 is a bottleneck for me in most games at the moment, even in WoW, which is notoriously CPU-bound, so I'm eager to replace it. Based on what I've seen so far, I plan to either go all-out and buy a 4090, or find a good deal on a 3090 or 3090 Ti (maybe even a used card). This will largely depend on how the 3090 cards stack up against the 4080 cards. It would feel like a waste to get a 3090 Ti if it can't even beat a 4080 12GB in most games (without DLSS or RT). I feel a bit burned by the 2080 having only 8GB of VRAM, so I would not even consider a 12GB GPU this round. For me to consider getting a 16GB 4080 over a cheaper 24GB 3090 Ti, the 4080 16GB had better do damn well in the benchmarks...

You really feel burned that the 2080 is 8GB? The card is 4 years old at this point and the 3070 Ti is only 8GB...

> The H100 in theory is 4x faster than the A100 at 2x the power draw, so that is technically half the electrical bill and one quarter the hours involved per job. Depending on your workload, that upgrade could pay for itself in a few months. So from the right perspective it's pretty truthful.
>
> I am looking forward to seeing what that new NVLink can do. I mean, it's way out of my price range, but sweet Jebus it looks fun!

There are definitely some workloads where they really accelerate performance gen to gen, but on the consumer front it's been a slow plod since Pascal.

> I'm starting to wonder if the 4080 12GB will be faster than the 3080 10GB outside of DLSS 3 compatible titles.

I was debating a guy at work. I said that my 3080 would be identical to a 4080 12GB (4070) if you clocked them the same. He insisted the shitty 4080 would be MUCH faster.

> I was debating a guy at work. I said that my 3080 would be identical to a 4080 12GB (4070) if you clocked them the same. He insisted the shitty 4080 would be MUCH faster.
>
> What do you guys think? Fewer cores on the 4080, but a better arch, so about a tie is my reasoning.

Pretty sure you can't compare cores gen to gen, though I may be wrong.

> I was debating a guy at work. I said that my 3080 would be identical to a 4080 12GB (4070) if you clocked them the same. He insisted the shitty 4080 would be MUCH faster.

I would imagine that running 50%+ faster on the much, much better node could be so crucial to the 4080's performance over the 3080 that it would be possible, but I'm not sure whether that matters or in what way.

> I was debating a guy at work. I said that my 3080 would be identical to a 4080 12GB (4070) if you clocked them the same. He insisted the shitty 4080 would be MUCH faster.

I think it will be a bit faster due to the new architecture, though it could be like Maxwell to Pascal, where the main difference is clock speed.

> I was debating a guy at work. I said that my 3080 would be identical to a 4080 12GB (4070) if you clocked them the same. He insisted the shitty 4080 would be MUCH faster.

It's going to be very dependent on a number of things, but Samsung 8N was a shitty process compared to TSMC 7, and going from Samsung 8N to TSMC 4N is a significant jump: it has almost twice the practical density and much better thermals. So it's anybody's game. I am looking forward to benchmarks, but I am not looking to offload the 3080 Ti I had to buy out of desperation when my 2080 Ti shat itself 5 months ago.

You really feel burned that the 2080 is 8gb?

> After looking closer at some of Nvidia's own benchmarks, the 4080 cards are not impressive at all. The 4080 12GB is losing to the 3090 Ti in games where it's not using DLSS 3. That means the 4080 12GB is only about 15% faster than the current 3080, which is a joke, especially considering it costs a laughable 900 bucks. They are going to pressure reviewers hard to focus on DLSS 3 for sure.

Would be an even funnier joke if reviewers were to pit the 4080 12GB against the 3080 12GB to display the generational leap in performance.
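To put "about 15% faster for 900 bucks" into performance-per-dollar terms, here is a rough sketch. The 15% and $900 figures come from the post quoted above. The $699 reference price for the 3080 is an assumption that is not from this thread, so swap in whatever you actually paid (one poster above paid $779, for example). The helper name is hypothetical.

```python
def perf_per_dollar_ratio(rel_perf: float, new_price: float, old_price: float) -> float:
    """Perf per dollar of the new card relative to the old card (old card = 1.0)."""
    return (rel_perf / new_price) / (1.0 / old_price)


# From the quoted post: roughly 15% faster than a 3080, at $900.
REL_PERF_4080_12GB = 1.15
PRICE_4080_12GB = 900.0

# Assumption, not from the thread: $699 as the 3080 reference price.
ASSUMED_PRICE_3080 = 699.0

ratio = perf_per_dollar_ratio(REL_PERF_4080_12GB, PRICE_4080_12GB, ASSUMED_PRICE_3080)
print(f"4080 12GB perf per dollar vs 3080: {ratio:.2f}x")
```

With those inputs the ratio comes out below 1.0, meaning fewer frames per dollar than the older card, and it only holds if the 15% estimate survives independent reviews.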

> After looking closer at some of Nvidia's own benchmarks, the 4080 cards are not impressive at all. The 4080 12GB is losing to the 3090 Ti in games where it's not using DLSS 3. That means the 4080 12GB is only about 15% faster than the current 3080, which is a joke, especially considering it costs a laughable 900 bucks. They are going to pressure reviewers hard to focus on DLSS 3 for sure.

What's happening right now is an exact repeat of the 20 series.

| Ada architecture | Equivalent position in Ampere architecture |
| --- | --- |
| 4090 | 3090 / 3080 Ti |
| 4080 16GB | 3070 |
| 4080 12GB | 3060 |

> To a certain extent, yes. When I got my 2080 I could have gone with either a 2080 or a 1080 Ti. They were essentially the same price and neck and neck in most benchmarks. The older 1080 Ti had 11GB of RAM. I went with the 2080 for the newer features, but the 8GB of VRAM always felt like a compromise. Ironically, the main thing that I do with the 2080 that I could not have done with the 1080 Ti is use DLDSR, but running my monitor at 4K via DLDSR is causing me to run out of VRAM in some cases. I'm quite eager to get a card with more than 8GB. Even the old Dell laptop that I use has 16GB of VRAM on its Quadro RTX 5000 (basically a mobile 2080).

When were they ever the same price? IIRC the 2080 was ~$800 and the Ti ~$1200. The cheapest Ti I remember was $999, and those were the dual slot/low profile kind that weren't very good, as they throttled a bunch or had to run the fans near 100%.

> Would be an even funnier joke if reviewers were to pit the 4080 12GB against the 3080 12GB to display the generational leap in performance.

If the 4080 12GB is equal in speed to or slower than the 3080 12GB, even in a single situation, it will be a massive "egg on face" moment for Nvidia.

I am betting Nvidia would regret the naming then...

> Showing a marketing slide from a company that wants to sell you a product and believing it is not a good idea. Wait for unbiased, honest reviews, because Nvidia, Intel and AMD all fucking try to bullshit stuff about their products.

Remember, my 3090 was the first 8K gaming card, from what Nvidia said!! They didn't lie about that at all...