elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

Interesting at a glance, thanks. I'll dig in more a bit later, but I am one of the people who unapologetically quote HDRTest and other purists and review sites regarding pure source material, fidelity, and downgrades in features on different TVs and monitors (though I'm not an expert calibrator like they are). Here's some interesting info on banding in PC mode, 12 vs 10-bit: https://www.avsforum.com/forum/40-o...aming-thread-consoles-pc-14.html#post59699346

No matter how you spin it, I'll always want 1:1 native wherever possible, and native will always be the pure choice. There is lossless transmission (essentially), and there is lossy transmission, whether it is a video signal or an audio signal. Otherwise signals are watered down by processing and compression, even if people try to say "it's fine", "hardly noticeable", "almost no noticeable difference", "usually not visible/audible difference", or "to most people" to blow smoke at degrading the original source material.

.......Showing 8-bit dithered on a 10-bit panel is not native color resolution. (The improvement of 10-bit/12-bit over 8-bit also matters in quality cameras, btw.)

https://dgit.com/4k-hdr-guide-51429/

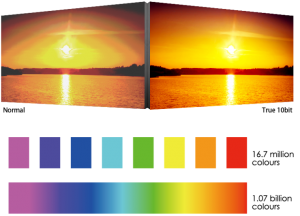

When we talk about 8 bit color, we are essentially saying that the TV can represent colors from 00000000 to 11111111, a variation of 256 colors per value. Since all TVs can represent red, green, and blue values, 256 variations of each essentially means that the TV can reproduce 256x256x256 colors, or 16,777,216 colors in total. This is considered VGA, and was used for a number of years as the standard for both TVs and monitors.

With the advent of 4K HDR, we can push a lot more light through these TVs than ever before. Because of this, it’s necessary for us to start representing more colors, as 256 values for each primary color is not going to reproduce nearly as lifelike images as something like 10 or 12 bit.
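To put rough numbers on why 256 steps per channel runs short, here is a minimal sketch of how wide each flat band gets on a full black-to-white ramp. The function name `band_width_px` and the 3840-pixel ramp width (one 4K row) are my own assumptions for illustration, and it ignores dithering and gamma entirely.

```python
def band_width_px(bits: int, ramp_px: int = 3840) -> float:
    """Average width in pixels of one flat step when a full-range
    ramp is stretched across ramp_px pixels (assumed: one 4K row)."""
    levels = 2 ** bits          # distinct code values per channel
    return ramp_px / levels     # pixels sharing the same code value


for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits} levels, "
          f"~{band_width_px(bits):.2f} px per step")
```

Under those assumptions an 8-bit ramp holds each value for about 15 pixels, while a 10-bit ramp changes value roughly every 4 pixels, which is why the steps are so much harder to see.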

10 bit color

Source: 4k.com

10 bit color can represent from 0000000000 to 1111111111 in each of the red, green, and blue colors, meaning that one could represent 64x the colors of 8-bit. This can reproduce 1024x1024x1024 = 1,073,741,824 colors, which is an absolutely huge amount more colors than 8 bit. For this reason, many of the gradients in an image will look much smoother, like in the image above, and 10 bit images are quite noticeably better looking than their 8-bit counterparts.
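The arithmetic in the quote is easy to check. A quick sketch (the helper name `total_colors` is just something I made up for this) that works out the totals for 8, 10, and 12 bits per channel:

```python
def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for an RGB signal with the given
    bit depth per channel."""
    return (2 ** bits_per_channel) ** channels


base = total_colors(8)
for bits in (8, 10, 12):
    n = total_colors(bits)
    print(f"{bits}-bit: {n:,} colors ({n // base}x the 8-bit total)")
```

That gives 16,777,216 for 8-bit, 1,073,741,824 for 10-bit (64x), and 68,719,476,736 for 12-bit (4096x).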

.......Lowering chroma resolution compresses the signal from the original fidelity of 4:4:4 source material too (but not in the case of 4:2:0 movie material, which is already 4:2:0 at the source).

https://en.wikipedia.org/wiki/Chroma_subsampling

Digital signals are often compressed to reduce file size and save transmission time. Since the human visual system is much more sensitive to variations in brightness than color, a video system can be optimized by devoting more bandwidth to the luma component (usually denoted Y'), than to the color difference components Cb and Cr. In compressed images, for example, the 4:2:2 Y'CbCr scheme requires two-thirds the bandwidth of (4:4:4) R'G'B'. This reduction results in almost no visual difference as perceived by the viewer.
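For anyone wondering where the "two-thirds" comes from, here is a rough sketch that counts samples in the usual J:a:b reference block (J pixels wide, 2 rows tall). The function name `relative_bandwidth` is my own, and it only counts raw samples at equal bit depth, so it says nothing about any codec compression applied on top.

```python
from fractions import Fraction


def relative_bandwidth(j: int, a: int, b: int) -> Fraction:
    """Raw sample count of a J:a:b scheme relative to full 4:4:4,
    measured over a block J pixels wide and 2 rows tall."""
    luma = 2 * j                # one Y' sample per pixel, two rows
    chroma = 2 * (a + b)        # Cb and Cr: a samples in row 1, b in row 2
    full = 3 * 2 * j            # three full-resolution planes
    return Fraction(luma + chroma, full)


for scheme in ((4, 4, 4), (4, 2, 2), (4, 2, 0)):
    label = ":".join(str(v) for v in scheme)
    print(f"{label} -> {relative_bandwidth(*scheme)} of 4:4:4")
```

So 4:2:2 carries two-thirds of the samples and 4:2:0 carries half. Whatever is left out is chroma detail that has to be interpolated back at the display.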

Anything less than native video and native audio is going to be compressed and degraded. They are just trying to tell you how much degradation is "ok".

