elvn

Supreme [H]ardness

- Joined: May 5, 2006

- Messages: 5,314

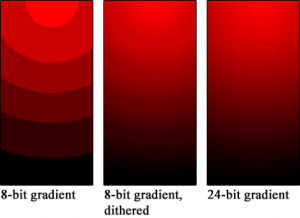

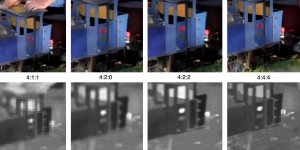

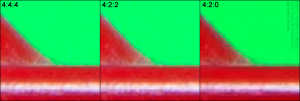

Well you technically could use it at 120Hz BUT at 4:2:0 chroma which equally sucks for desktop usage. I'm just going to keep on using my Acer X27 as my desktop display for the foreseeable future while the CX gets used for all gaming.

Exactly. People just need to hold their horses and wait instead of crying about it when it doesn't even matter right now due to the lack of HDMI 2.1 cards in the first place.

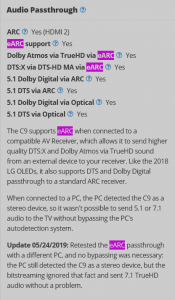

Now we have to wait on Nvidia because LG's HDMI 2.1 isn't 48Gbps, and wait on LG for firmware updates adding uncompressed audio support, if those ever come.

So yep, we need to wait because LG fails to support the full HDMI 2.1 standard out of the gate. No buying this display "early," secure in its HDMI 2.1 capabilities, for me.

I'll have to consider a 55" C9 if they never deliver. I'm not paying $1630 for a TV with a (edit: "hobbled") HDMI 2.1 port when there are others with full support available.

Hobble:

- tie or strap together (the legs of a horse or other animal) to prevent it from straying.

- cause (a person or animal) to limp.

  "Johnson was still hobbled slightly by an ankle injury"

- restrict the activity or development of.

  "cotton farmers hobbled by low prices"
