chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005

- Messages: 7,676

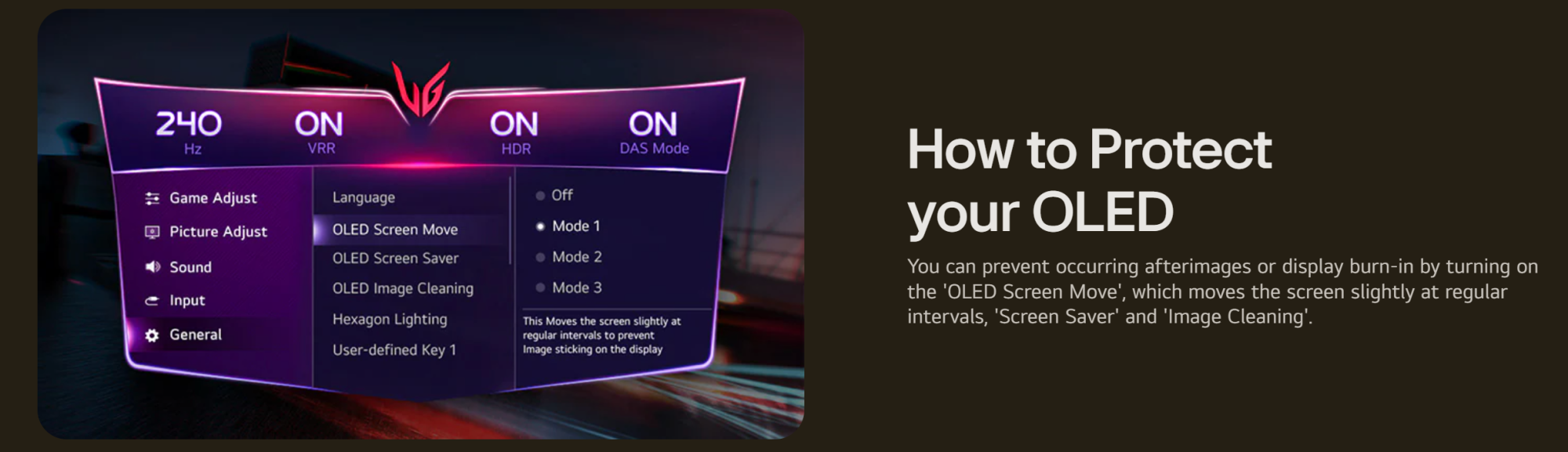

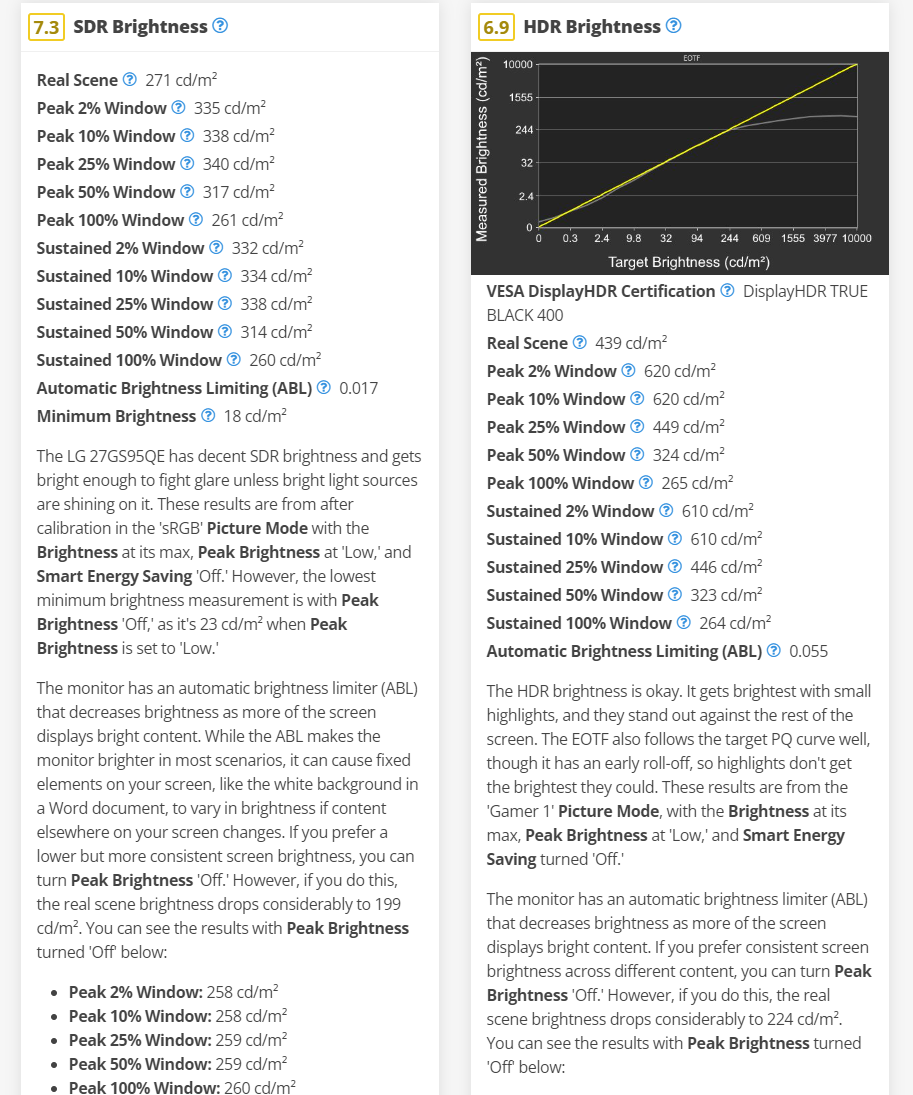

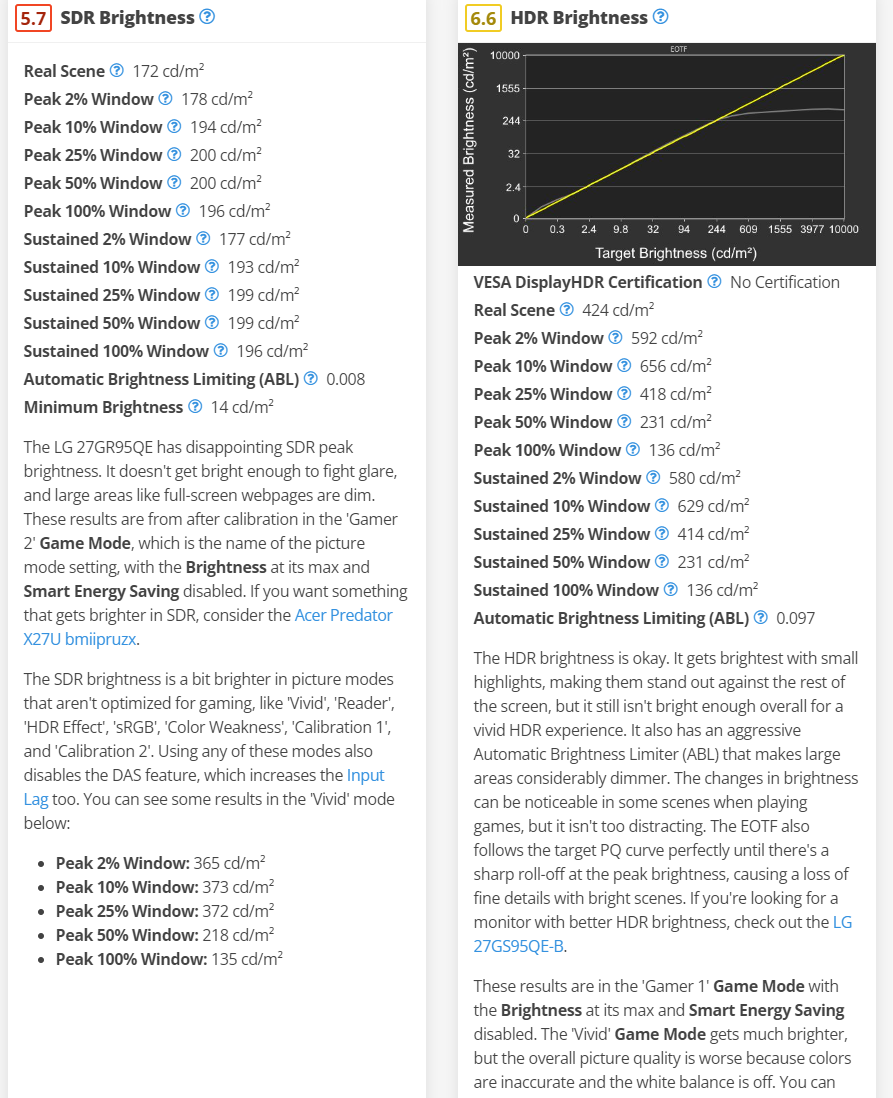

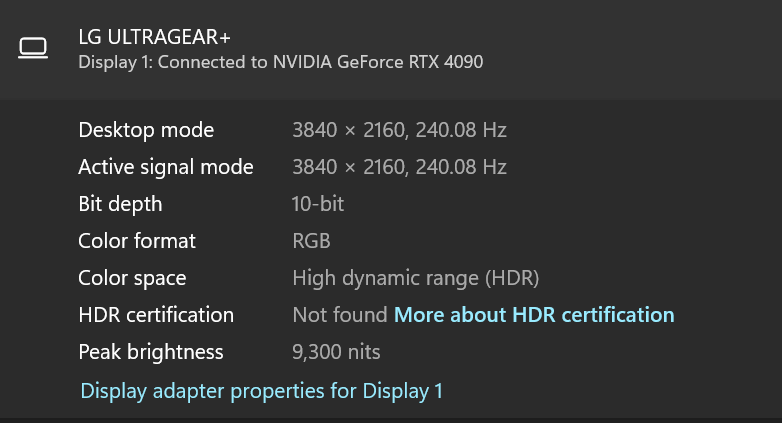

I'm still wondering what the heck people think they need 1000 nits full screen for? It's not doing 1000 for sure, but it might be able to get a lot closer to it like the PG42UQ and PG27AQDM.

View attachment 651041

View attachment 651042

I would be more than happy to have that kind of HDR performance vs what QD OLED offers.

View attachment 651043

View attachment 651044

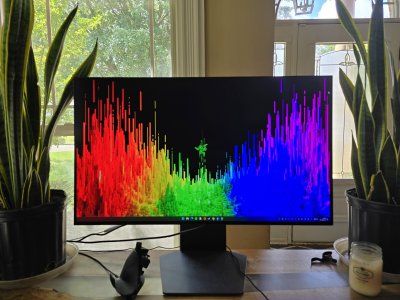

Again, color volume can be thrown out the window when this is all the panel is capable of.

I have a 27 inch IPS which does 430 nits SDR and 520 HDR, and I've never had it anywhere close to max. I always game with a (not very bright) overhead light on. And I never turn the brightness past "48", and it's usually more like 43.

For HDR, I appreciate a good twinkle. But, I don't want anything so bright it causes me to squint and look away.

1000 nits for a 2% window would maybe be alright for me. But I wouldn't want it that bright for anything larger.
