Armenius

Extremely [H]

- Joined: Jan 28, 2014
- Messages: 42,028

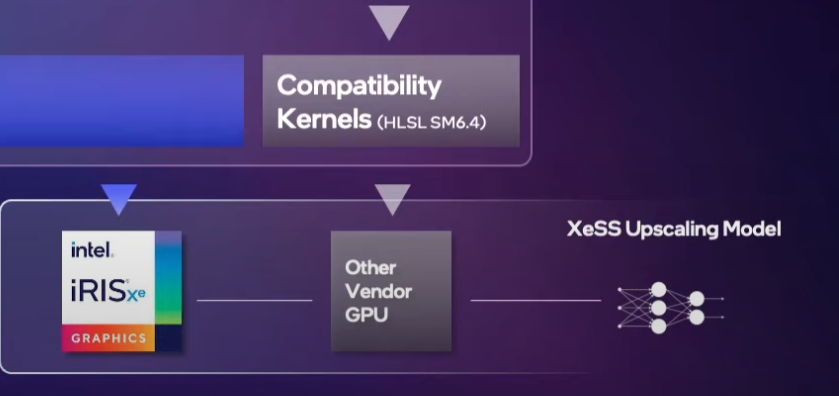

Intel is introducing its own AI-based upscaling algorithm, XᵉSS, with its forthcoming Arc GPUs. XᵉSS is an open standard that will also support other vendors' video cards, but it will take advantage of the dedicated AI hardware (XMX matrix engines) in Arc GPUs.

https://www.intel.com/content/www/u...al-technology/arc-discrete-graphics/xess.html

Demo in The Riftbreaker:
