cageymaru


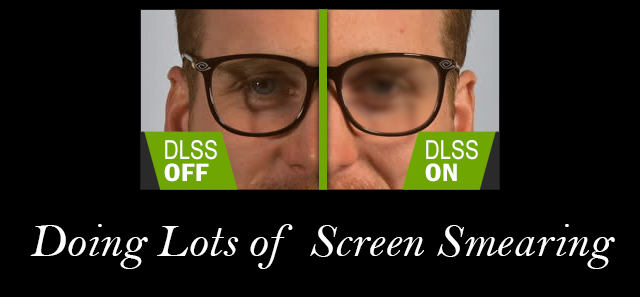

Hardware Unboxed has released a new video dissecting the image quality of Battlefield V with NVIDIA's new Deep Learning Super Sampling (DLSS) technology enabled. They don't stop at comparing the DLSS image to native 4K: Tim takes it a step further and compares the DLSS image to a 1685p image, rendered at a 78% resolution scale and upscaled to 4K. They chose 1685p because it delivers a frame rate similar to the one DLSS achieves in-game.
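
For reference, the 1685p figure follows from the 78% setting: 0.78 × 2160 ≈ 1685 rows. The short Python sketch below works through that arithmetic. It assumes the resolution scale multiplies each axis of the 3840×2160 output independently (which is consistent with the 1685p number) and that the result is rounded to the nearest pixel; `render_resolution` is a hypothetical helper for illustration, not part of the game or any driver API.

```python
def render_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Return the internal render resolution for a per-axis resolution scale.

    Assumption: the scale is applied to width and height separately and
    rounded to the nearest pixel (78% of 2160 rows -> 1685).
    """
    return round(width * scale), round(height * scale)


native_w, native_h = 3840, 2160  # native 4K (UHD) output
w, h = render_resolution(native_w, native_h, 0.78)

# Because the scale applies to both axes, the pixel count drops by scale squared.
pixel_fraction = (w * h) / (native_w * native_h)

print(f"{w}x{h}")                                # 2995x1685
print(f"{pixel_fraction:.1%} of native pixels")  # 60.8% of native pixels
```

In other words, a "78%" setting renders only about 61% of the pixels of native 4K, which is where its frame-rate gain comes from.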

In every instance, the DLSS image looks smeared, while the 1685p image upscaled from a 78% resolution scale is much more pleasing to look at. Tim Schiesser says, "The 1685p image destroys the DLSS image in terms of sharpness, texture quality, clarity; basically everything." He goes on to say, "The 78% scaled image preserves the fine detail on the rocks, the sign, the sandbags, the cloth, the gun; pretty much everywhere. With DLSS everything is blurred to the point where this detail is lost." Resolution scaling is nothing new; it has been available to gamers for decades. They pull no punches and conclude, "DLSS sucks."

> But the real kicker is looking at the visual quality comparisons. We'll start with native 4K versus 4K DLSS. Across all of the scenes that I've tested, there is a severe loss of detail when switching on DLSS. Just look at the trees in this scene. The 4K presentation is just as you'd expect; sharp, clean, high detail on both the foliage and trunk textures. But DLSS is like a strong blur filter has been applied. Texture detail is completely wiped out. In some cases it's like you've loaded a low texture mode. While some of the fine branch detail has been blurred away, or even thickened in some cases. Which makes the game look kinda weird in some situations. Of course this is to be expected. DLSS was never going to supply the same image quality as native 4K while also providing a 37% performance uplift. That would be pretty much black magic. But the quality difference comparing the two is almost laughable at how far away DLSS is from the native presentation in these stressful areas.