> Rumors are pointing to them using Samsung

If AMD moves to Samsung for the next PlayStation/Xbox consoles, that could free up TSMC capacity, but Sony/MS would have to be willing to take the performance hit (like Ampere).


GPU prices — is the worst behind us ?

- Thread starter Marees

- Start date

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,672

> That's still a good card.

I went from GTX 970 SLI to an RTX 3080 in Dec 2020 at sub-MSRP (thanks, EVGA coupon!). Nice jump. I just upgraded my projector to a 4K unit, so I'll probably wait until the 5xxx series, or a good deal on one, before jumping again.

atp1916

[H]ard|DCoTM x1

- Joined

- Jun 18, 2004

- Messages

- 5,004

When the economy starts its next bull cycle (aka Feds win the "inflation fight") and easy money comes back...

#btc

Be ready.

Those RTX 3080s are gonna be back up to $2k+ per card.


GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,672

> When the economy starts on its next bull cycle (aka Feds win the "inflation fight") and easy money comes back...
>
> #btc

I never mined on my 3080. I should have...

atp1916

[H]ard|DCoTM x1

- Joined

- Jun 18, 2004

- Messages

- 5,004

> I never mined on my 3080. I should have...

The 3080 (mine are all EVGA 10GB) is a seriously decent lad.

A lot of respect for it, especially after/while running several 8-card 3070 Ti rigs.

And yes, while Eth was a thing, the 3080 was very decent on the hash/watt metric.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,672

> And yes, while Eth was a thing, the 3080 was very decent on the hash/watt metric.

Yeah, I probably could have made a few grand in coin while only gaming part time... I just never set it up and kept procrastinating.

atp1916

[H]ard|DCoTM x1

- Joined

- Jun 18, 2004

- Messages

- 5,004

> Yeah, I probably could have made a few grand in coin with gaming part time... I just didn't ever set it up, kept procrastinating. Still, I love my card... It's the hybrid FTW3 10GB.

In the future, the children will ask. We will tell them: EVGA. They had not only the best quality, but the best prices. Ever.

I do think that without EVGA in the mix (the Lord bless their company, and their people), GPU pricing is going to creep upward as time passes.

They were the canary in the coal mine with respect to Nvidia's pricing schemes.

> If AMD moves to Samsung for the next PlayStation/Xbox consoles, that could free up TSMC capacity, but Sony/MS would have to be willing to take the performance hit (like Ampere).

That would be expensive. AMD currently keeps costs down by using what are essentially their PC components with very minor modifications.

Producing a completely new, unique chip on a different node at a new foundry is anything but cheap. They would also lose access to features like stacked cache, the Infinity Fabric, and the laddered interconnect designs in their next generation of CPUs and GPUs. But I suppose a lot can happen in 3 years; it wouldn't be impossible, but I would not expect it to happen.

> In the future, the children will ask. We will tell them: EVGA. They had not only the best quality, but the best prices. Ever.

I don't know; the price-to-performance ratio of the old BFG cards was pretty good. I think they would have given EVGA a run for their money.

That B-stock. /salute

Blade-Runner

Supreme [H]ardness

- Joined

- Feb 25, 2013

- Messages

- 4,366

> That's still a good card.

Yup, it does the job for older games at 3440x1440, but it's definitely showing its age.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,558

> If AMD moves to Samsung for the next PlayStation/Xbox consoles, that could free up TSMC capacity, but Sony/MS would have to be willing to take the performance hit (like Ampere).

It really depends on whether Samsung has its process sorted out; if it does, then it should not be an issue. The bigger question would be whether stacked cache and the other advanced tooling AMD uses at TSMC could be used; Samsung would need something similar. I am sure AMD is seriously considering it, since Samsung is likely giving them a sweet deal as it needs customers.

> It really depends on whether Samsung has its process sorted out; if it does, then it should not be an issue. The bigger question would be whether stacked cache and the other advanced tooling AMD uses at TSMC could be used; Samsung would need something similar. I am sure AMD is seriously considering it, since Samsung is likely giving them a sweet deal as it needs customers.

Samsung 4nm was closer to TSMC 5nm in terms of power/performance and scaling, and the yields were atrocious. Qualcomm had to pack up, pay for a spot on the TSMC 4nm lineup, and delay a couple of key product launches because of it; they were not at all pleased.

Now, supposedly Samsung hired a team from Japan, and they managed to fix the 4nm node and get yields back up to 80-ish percent from the 40 or so they were at when Qualcomm up and left them. Their 3nm GAAFET node is supposedly in the low 60s percent, which is better than TSMC's, which is pushing the high 50s. But you'd better believe that after how Samsung did both Nvidia and Qualcomm dirty, most companies are really gun-shy there. Honestly, it's going to be up to Samsung to put out its own silicon on it first and show the world its nodes are working as advertised before anybody trusts it with a make-or-break product launch.
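To put the yield figures above in perspective: the wafer is paid for whether or not each die works, so the cost of every working die scales inversely with yield. A minimal Python sketch, where the wafer price and dies-per-wafer are made-up placeholders (not real Samsung figures) and only the ~40% and ~80% yields come from the post:

```python
# Sketch: how yield changes the cost of each *working* die.
# WAFER_COST_USD and DIES_PER_WAFER are hypothetical placeholders;
# only the ~40% and ~80% yields are taken from the post above.
WAFER_COST_USD = 15_000.0
DIES_PER_WAFER = 300


def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, yield_rate: float) -> float:
    """Spread the full wafer cost over only the dies that pass testing."""
    if not 0.0 < yield_rate <= 1.0:
        raise ValueError("yield_rate must be in (0, 1]")
    good_dies = dies_per_wafer * yield_rate
    return wafer_cost / good_dies


for label, y in (("~40% yield", 0.40), ("~80% yield", 0.80)):
    cost = cost_per_good_die(WAFER_COST_USD, DIES_PER_WAFER, y)
    print(f"{label}: ${cost:,.2f} per good die")
```

Under these assumptions, doubling the yield halves the cost per good die, which is why a recovery from roughly 40% to 80% matters so much. Real foundry pricing also depends on contracts, die size, and defect density, none of which this toy model captures.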

AMD would probably love to be able to switch, though; their tech needs a node shrink, and they need to make some changes. But stacked cache and their mesh interposer are mostly TSMC, built on its CoWoS and 3DFabric design tools, and Samsung doesn't really offer that degree of packaging yet. So Samsung could make the chips, but who would AMD then use to interconnect them all? The interposer they currently use is essentially a pretty complicated chip unto itself. To my knowledge, Intel Foundry Services is the only company offering the ability to integrate multiple vendors at that level, but Intel uses a very different structure for connecting its tiles (chiplets), and it would require AMD to make a complete design change to accommodate it. Then again, AMD would need a complete design change to make their chips at Samsung anyway, so at that point the changes needed to work with Intel's interposers are a minor thing; the contracts needed to make it all happen would be the complicated bit.

This possibility is also why Intel announced that all its foundries are being spun off into their own company for 2025, technically making Intel fabless in the process. Because I am sure there would be absolutely no conflict of interest if Intel owned the foundry manufacturing the chips for its CPU and GPU rivals, right...

Going by trends/leaks/rumours so far, it seems neither will TSMC reduce their prices nor will AMD/Nvidia (& maybe Intel) switch to cheaper nodes.

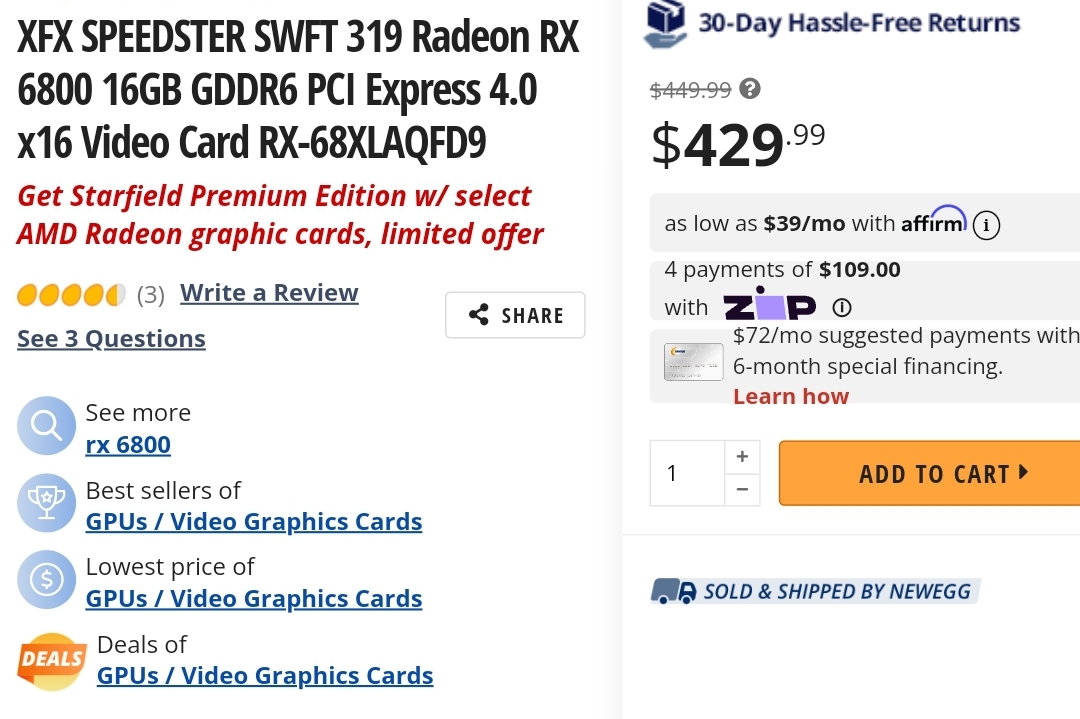

So your best bet is to watch prices of 6800/6800xt & buy it at close to $400/$450 respectively (if they hit that price)

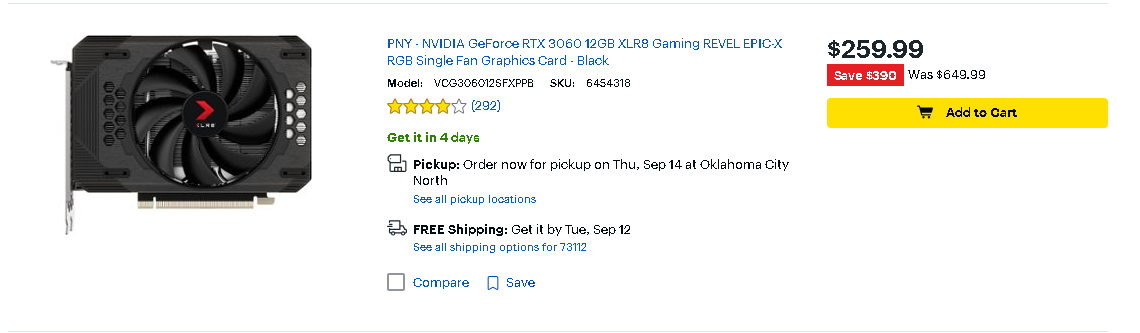

the equivalent budget card on nvidia would be the 3060 12gb. Apparently nvidia has lots of inventory left

if you are looking for used cards then something like 6700xt/6750xt or 2060 12gb should do it.

https://www.newegg.com/xfx-radeon-rx-6800-rx-68xlaqfd9/p/27N-0002-00117?Item=27N-0002-00117


sfsuphysics

[H]F Junkie

- Joined

- Jan 14, 2007

- Messages

- 15,994

> Going by trends/leaks/rumours so far, it seems neither will TSMC reduce their prices nor will AMD/Nvidia (& maybe Intel) switch to cheaper nodes.

And more importantly, Nvidia (at least) will not reduce their profit margins.

Honourable mention to Intel:

Intel's Arc A750 launched at $289, then got an official price drop to $249, and right now it's selling for just $199 at Newegg. If you're interested in giving an Intel Arc GPU a shot, this one's a no-brainer: The Arc A750 represents the best value among graphics cards, with performance that matches the RTX 3060 and almost catches the RX 6700 10GB, for about $70 less. Yes, power use will be higher, and the drivers still aren't perfect. But with over 30 driver releases since Arc first appeared, the Arc experience today is much better than last year.

https://www.tomshardware.com/news/nvidia-vs-amd-vs-intel-last-12-months-of-gpus-are-disappointing
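The quoted A750 figures can be turned into rough numbers. A minimal Python sketch: the three A750 prices come from the quote, the RTX 3060 price is only inferred from "about $70 less", and both cards get the same relative performance of 1.0 because the quote says they match, which is a simplification rather than a benchmark result:

```python
# Rough price/performance arithmetic for the quoted Arc A750 figures.
A750_LAUNCH = 289.0   # launch price (from the quote)
A750_CUT = 249.0      # official price drop (from the quote)
A750_STREET = 199.0   # Newegg price at the time (from the quote)
# Inferred, not quoted directly: "about $70 less" than an RTX 3060.
RTX3060_PRICE = A750_STREET + 70.0


def pct_drop(old: float, new: float) -> float:
    """Percentage decrease from old to new."""
    return (old - new) / old * 100.0


def perf_per_dollar(relative_perf: float, price: float) -> float:
    """Relative performance units per dollar spent."""
    return relative_perf / price


# Assumption: equal performance (1.0) for both cards, per "matches the RTX 3060".
advantage = perf_per_dollar(1.0, A750_STREET) / perf_per_dollar(1.0, RTX3060_PRICE)

print(f"Official cut: {pct_drop(A750_LAUNCH, A750_CUT):.1f}% below launch")
print(f"Street price: {pct_drop(A750_LAUNCH, A750_STREET):.1f}% below launch")
print(f"A750 perf per dollar vs RTX 3060: {advantage:.2f}x")
```

With equal performance, the perf-per-dollar ratio is just the inverse price ratio, roughly 1.35x here; swapping measured frame rates in for the 1.0 placeholders would give a real comparison.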

crazycrave

[H]ard|Gawd

- Joined

- Mar 31, 2016

- Messages

- 1,884

You will buy at their price if you want to keep playing ball. They write the drivers, and you're not at the wheel anymore, caveman!

jlbenedict

2[H]4U

- Joined

- May 22, 2005

- Messages

- 2,207

> https://www.newegg.com/xfx-radeon-rx-6800-rx-68xlaqfd9/p/27N-0002-00117?Item=27N-0002-00117


Nice pricing! (even though the MERC 319 would have been better)

DooKey

[H]F Junkie

- Joined

- Apr 25, 2001

- Messages

- 13,560

> And more importantly, Nvidia (at least) will not reduce their profit margins.

Why should they?

> Going by trends/leaks/rumours so far, it seems neither will TSMC reduce their prices nor will AMD/Nvidia (& maybe Intel) switch to cheaper nodes.

The idea that Nvidia or AMD could use a cheaper node to make a cheaper product is a flawed one. The nodes in demand at TSMC are priced accordingly, and the cost per transistor balances out pretty evenly across them. We would be looking at massive performance regressions if AMD or Nvidia rolled back like that.
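The "cost per transistor balances out" argument can be illustrated with a toy model. In this Python sketch both nodes, their wafer prices, and their densities are invented so that the newer node is twice as dense and twice as expensive per wafer; edge loss and yield are ignored:

```python
import math
from dataclasses import dataclass

# Usable area of a 300 mm wafer, ignoring edge loss, scribe lines, and yield.
WAFER_AREA_MM2 = math.pi * (300 / 2) ** 2


@dataclass
class Node:
    name: str
    wafer_cost_usd: float   # hypothetical price per wafer
    mtr_per_mm2: float      # hypothetical density, millions of transistors per mm^2

    def usd_per_billion_transistors(self) -> float:
        """Wafer cost divided by how many billions of transistors fit on it."""
        billions_per_wafer = WAFER_AREA_MM2 * self.mtr_per_mm2 / 1000
        return self.wafer_cost_usd / billions_per_wafer

    def die_area_mm2(self, billion_transistors: float) -> float:
        """Die area needed for a given transistor budget at this density."""
        return billion_transistors * 1000 / self.mtr_per_mm2


# Invented nodes: the newer one is 2x as dense and 2x as expensive per wafer.
older = Node("older node", wafer_cost_usd=10_000, mtr_per_mm2=50)
newer = Node("newer node", wafer_cost_usd=20_000, mtr_per_mm2=100)

for node in (older, newer):
    print(f"{node.name}: ${node.usd_per_billion_transistors():.2f} per billion transistors, "
          f"{node.die_area_mm2(20):.0f} mm^2 for a 20B-transistor GPU")
```

Under those assumptions both nodes land at the same cost per transistor, but the older node needs twice the die area for the same 20-billion-transistor budget. Making the chip cheaper on the old node would therefore mean cutting transistors, which is the performance regression the post describes; real yields would penalise the larger die further.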

TSMC is only part of the problem. We still have large quantities of components that were purchased by AIBs at COVID-inflated prices, and they are going to make that money back, because who’s going to make them take a loss on those components?

Sadly for us, Samsung botched their nodes. Their 8nm is closer to TSMC’s 10nm and is only now getting acceptable yields. Prices there are about the same as they were before, since it’s still cheaper than TSMC, but were any of us happy with the 2000 series prices? Would we want to see the RTX 5000 series launching at 2000-series prices (adjusted for inflation) but with, at best, RTX 3000 levels of performance?

2025 is roughly when Samsung and Intel will be ready to challenge TSMC, and it’s also when we can expect the overpriced components to be replaced in the market.

Riding it out is about the only option. Buy if you have to, but unless you need to, now is not the time.

There are just too many market factors working against us.

sfsuphysics

[H]F Junkie

- Joined

- Jan 14, 2007

- Messages

- 15,994

> Why should they?

Well, I mistyped: I meant to say profit per board/card sold, not for the company as a whole. And the reason is pretty simple: lower prices could end up making more profit in the long run through more purchases. I'm not privy to their financials, and it certainly gets complicated where that point of diminishing returns is, but it does exist. Plus, if the rumors are true and they make the lion's share of their revenue from AI-related sales, then throwing a bone to gamers could buy further brand loyalty for future GPU purchases. I know I'm at the point where I'm going to go for Team VALUE, whoever that is... unfortunately AMD is too much of an unoriginal pussy to take advantage of what Nvidia is doing and is instead just playing copycat.
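The point about lower prices possibly earning more overall, up to a point of diminishing returns, is the classic profit-maximising-price trade-off. A toy Python sketch, assuming a straight-line demand curve and made-up costs (none of these are Nvidia figures):

```python
# Toy model: higher margin per card vs. more cards sold.
# All three constants are hypothetical, not real Nvidia data.
UNIT_COST = 300.0               # cost to build and ship one card
DEMAND_INTERCEPT = 2_000_000.0  # cards that would sell at a price of $0
DEMAND_SLOPE = 1_500.0          # cards lost for every extra dollar of price


def units_sold(price: float) -> float:
    """Linear demand curve, clamped at zero."""
    return max(0.0, DEMAND_INTERCEPT - DEMAND_SLOPE * price)


def profit(price: float) -> float:
    """Total profit = margin per card * cards sold."""
    return (price - UNIT_COST) * units_sold(price)


# For linear demand, profit is a downward parabola in price; its peak is
# halfway between the unit cost and the price at which demand hits zero.
best_price = (DEMAND_INTERCEPT / DEMAND_SLOPE + UNIT_COST) / 2

for price in (600.0, best_price, 1000.0):
    print(f"price ${price:,.2f}: margin/card ${price - UNIT_COST:,.2f}, "
          f"total profit ${profit(price):,.0f}")
```

In this made-up example, $1,000 earns more per card than roughly $817 but less in total, because fewer cards sell; past the optimum, every price increase loses more in volume than it gains in margin. The real demand curve is unknown, so the numbers only illustrate the shape of the argument.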

> And more importantly, Nvidia (at least) will not reduce their profit margins.

They already did: the RTX 4000 series cards have their lowest margins since 2017.

During their last financial report, Jensen was grilled by investors over it and said that if they kept their normal margins they would have nobody to sell their cards to, and ended it there.

Some good rants by Matthew Connatser on lack of (true) budget GPUs:

Nvidia CEO Jensen Huang declared that it was no longer possible to offer lower prices each generation, hence the dramatic price increase that came with RTX 40. Whether Moore's Law is dead or not is hotly debated (Intel insists it's alive and well), but ultimately, Nvidia's attempted transition into selling more expensive GPUs didn't go very well this time, not for the company or for its fans.

https://www.xda-developers.com/2022-bad-year-desktop-pc/

What concerns me the most is that Nvidia and AMD aren't backing down from their maximalist positions. When demand goes down, they lower production instead of prices, and you can tell demand is way down since the RTX 40 and RX 7000 haven't been in short supply since launch. Voting with your wallet might not even be effective, because gaming GPUs are small fry to Nvidia and AMD, and they would rather be committing more manufacturing capacity to server chips with fat margins, hence the lack of serious competition.

The graphics card is almost, without exception, the most crucial component in a gaming PC, and if people can't afford it, they're going to explore alternatives. Valve and Asus are opening the way with handheld gaming PCs, gaming laptops are still an alright choice, and cloud PC gaming is still making inroads despite the failure of Stadia. PC gaming will be fine, it's just the desktop that's not well.

https://www.xda-developers.com/desktop-pc-gaming-too-expensive-latest-gpu/

With AMD confirming its retreat from the low-end and Nvidia likely never coming back, it's up to Intel to keep budget graphics cards alive. The company already offers a $100 GPU in the form of the Arc A380, but its upcoming Battlemage series will need to offer something below the $200 mark at minimum to keep the cost of entry low enough. If Intel follows Nvidia and AMD's lead, it's probably over for everybody on a budget.

I've seen lots of people say that the death of low-end GPUs isn't a big deal, but $100 GPUs are possible to make. They used to be normal years ago, and they helped make PC gaming cheaper and more accessible for everybody. I would hate to see PC gaming become exclusive for the financially comfortable, and I am rooting hard for Intel to stop that from happening.

https://www.xda-developers.com/all-eyes-on-intel-budget-gpu/

Budget PC gamers are not going to accept APUs as a real alternative to low-end cards. They're going to eventually quit PC gaming and just switch to consoles, which offer much more affordable hardware and compelling performance. Think it won't affect you if these gamers leave the community? The latest hardware survey from Steam says about 10% of users have the GTX 1050, 1050 Ti, and 1650. That's just three low-end GPUs, and they make up 10% of the largest PC gaming community today. PC gaming can't afford to lose that many people.

https://www.xda-developers.com/why-apus-cant-truly-replace-low-end-gpus/


> Some good rants by Matthew Connatser on lack of (true) budget GPUs:

Can I unlike this???

Nothing they say is wrong, but I hate almost every word of it…

Right or wrong isn't really the word for a prediction, but about this part:

> Budget PC gamers are not going to accept APUs as a real alternative to low-end cards.

I am not sure that fits with them quitting the desktop for laptops, handhelds, and consoles, where APUs or even SoCs will be quite the norm. It's obviously possible in a "what's the point of a desktop with an APU" way, versus something really close to it with little downside and a lot of upside from not being a desktop.

But it really depends on how good those APUs get. AMD's notion that they will replace the cheapest GPU option is not crazy at all; with how small and low-power Zen 4c cores get, that could open the door to a good-sized, well-powered GPU on one.


> They already did: the RTX 4000 series cards have their lowest margins since 2017.
>
> During their last financial report, Jensen was grilled by investors over it and said that if they kept their normal margins they would have nobody to sell their cards to, and ended it there.

Was it not more the compensation/buyback for excess Ampere inventory that impacted margins? In the last report, margins were, I think, the best ever at 70%, more like a software company's than a hardware company's. As gaming becomes a smaller and smaller part of that pie, it is harder to tell gaming GPU margins, and I cannot find that comment about low 4000 series margins.

https://s201.q4cdn.com/141608511/files/doc_financials/2024/Q2FY24/Q2FY24-CFO-Commentary.pdf

https://s201.q4cdn.com/141608511/files/doc_financials/2024/Q2FY24/Rev_by_Mkt_Qtrly_Trend_Q224.pdf

https://s201.q4cdn.com/141608511/fi...4/q2/19771e6b-cc29-4027-899e-51a0c386111e.pdf

https://s201.q4cdn.com/141608511/fi.../nvda-f2q24-investor-presentation-final-1.pdf

https://www.fool.com/earnings/call-...nvidia-nvda-q2-2024-earnings-call-transcript/

Maybe you mean the previous financial report, before their record-shattering last one? I cannot find it there either.

> Maybe you mean the previous financial report, before their record-shattering last one? I cannot find it there either.

It was in one of their investor calls; I’ll pull up transcripts later. But basically, AIBs are taking a much bigger cut this round, and Nvidia has a slush fund from the 4000s ready to go, so if they need to do price cuts, the money comes from there and not from other accounts. So technically those funds are on hold pending a price drop, should one be needed. And now that AMD is finally stepping up, one will be, so they will be issuing AIB reimbursements from there, because the 4060 and 4070s should be getting some decent cuts in the coming weeks.

sfsuphysics

[H]F Junkie

- Joined

- Jan 14, 2007

- Messages

- 15,994

I would like to see what (older) consoles have historically done, where day-one titles don't look like they are even on the same system as titles from years later, as developers figure out how to squeeze more out of the existing hardware. E.g., Final Fantasy 7 looks like absolute ass compared to FF8, and FF8 compared to FF9, all on the same system, all from the same developer, but with vastly different and improved looks.

Even Red Dead Redemption 2/Horizon Zero Dawn/The Last of Us Part II/the latest God of War on a PS4 versus a 2013-2014 PS4 title would probably look quite different.

When they started making FF7 in 1994, they probably had some SNES-style 2D affair in mind; DirectX 1 had yet to be released. The world changed really fast.

From the start of FF7 to the release of FF8, you go from no DirectX to DirectX 7. At launch, FF7 was mind-blowing.

The dream of APUs taking over the low end has been just that, a dream, despite all the great talk about it for the last 7-8 years. The only real upside has been how good the basic iGPUs have gotten for laptops: basic entry- to mid-level machines without a dedicated GPU last a lot longer than they used to, coupled with the CPUs themselves doing the same. I have a 2015 Haswell i5 laptop with an HD 4600 that can still handle the web and streaming, even if it's a bit slow loading some websites and videos.

With Zen 4c cores being that small (3.84 mm²) and a 4060 that could fit in 150 mm², we could see quite a nice 160-200 mm² APU at a reasonable price one day, real soon.
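The die-area estimate above is simple addition. A back-of-the-envelope Python sketch using the post's own figures (3.84 mm² per core, about 150 mm² for a 4060-class GPU); the uncore allowance is a hypothetical placeholder, since shared cache, memory controllers, and I/O are not accounted for in the post:

```python
# Back-of-the-envelope APU die area using the figures from the post above.
CORE_AREA_MM2 = 3.84    # per-core area quoted in the post
GPU_AREA_MM2 = 150.0    # the post's estimate for a 4060-class GPU
UNCORE_AREA_MM2 = 0.0   # hypothetical placeholder: shared cache, memory PHYs, I/O


def apu_area_mm2(cores: int,
                 core_area: float = CORE_AREA_MM2,
                 gpu_area: float = GPU_AREA_MM2,
                 uncore_area: float = UNCORE_AREA_MM2) -> float:
    """Sum of CPU cores, GPU block, and an uncore allowance, in mm^2."""
    return cores * core_area + gpu_area + uncore_area


for cores in (4, 6, 8):
    print(f"{cores} cores: {apu_area_mm2(cores):.2f} mm^2 before uncore")
```

Even eight cores only add about 31 mm², landing near 181 mm² and leaving roughly 20 mm² of the post's 200 mm² ceiling for everything the sketch leaves out; whether that is enough is not something this arithmetic can answer.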

Maybe AMD's Strix Halo is already there: if the rumors of 40 RDNA 3.5 CUs are true, chances are good it will match, if not beat, a 7500-class GPU with fewer than 32 CUs if you give it all the power it can handle in a desktop config.

> The dream of APUs taking over the low end has been just that, a dream, despite all the great talk about it for the last 7-8 years.

At least APUs would be very console-like; nearly identical, really.

It would make life a smidge easier for developers. Microsoft already has the needed interfaces cooked into Windows 10 and 11; they just don't use them, but they are in there.

A modern APU with a newer RDNA GPU would, with the exception of memory type (DDR vs. GDDR), basically be an Xbox.

Some good rants by Matthew Connatser on lack of (true) budget GPUs:

Nvidia CEO Jensen Huang declared that it was no longer possible to offer lower prices each generation, hence the dramatic price increase that came with RTX 40. Whether Moore's Law is dead or not is hotly debated (Intel insists it's alive and well), but ultimately, Nvidia's attempted transition into selling more expensive GPUs didn't go very well this time, not for the company or for its fans.

Greedy, greedy, greedy. He's turning his back on the community that made Nvidia what it is today.

Give them a year and maybe some board meetings where the independent directors ask some hard questions.

What concerns me the most is that Nvidia and AMD aren't backing down from their maximalist positions.

Only possible in a duopoly. Let's hope Intel upsets their apple cart.

When demand goes down, they lower production instead of prices...

May be related to the lack of capacity at TSMC.

...and you can tell demand is way down since the RTX 40 and RX 7000 haven't been in short supply since launch. Voting with your wallet might not even be effective, because gaming GPUs are small fry to Nvidia and AMD, and they would rather be committing more manufacturing capacity to server chips with fat margins, hence the lack of serious competition.

Krenum

Fully [H]

- Joined

- Apr 29, 2005

- Messages

- 19,193

Like he gives a shit. He needs another Tesla & leather jacket.

GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,772

uhh. waah??


The low-price market appears to be covered to me. Where is it written that each new generation of cards MUST come in every price point?

Lowering the price on previous-gen GPUs and slotting those in at the bottom is a very smart business decision. Both have been doing it for years. GTX 680 turns into GTX 770, and people whine "It's just a previous-gen GPU!!" So now they keep the older names, and people whine about that.

You guys are getting suckered by click-bait articles.

Of course no one likes these higher prices. But you can't blame all of that on one company. Higher prices are everywhere, for everything. COVID lockdowns, the Ukraine invasion: that's where you should be directing your ire.

Plus, leading-edge semiconductors are currently made only by TSMC. A serious bottleneck and lack of competition means they can charge what they want. Supply and demand.

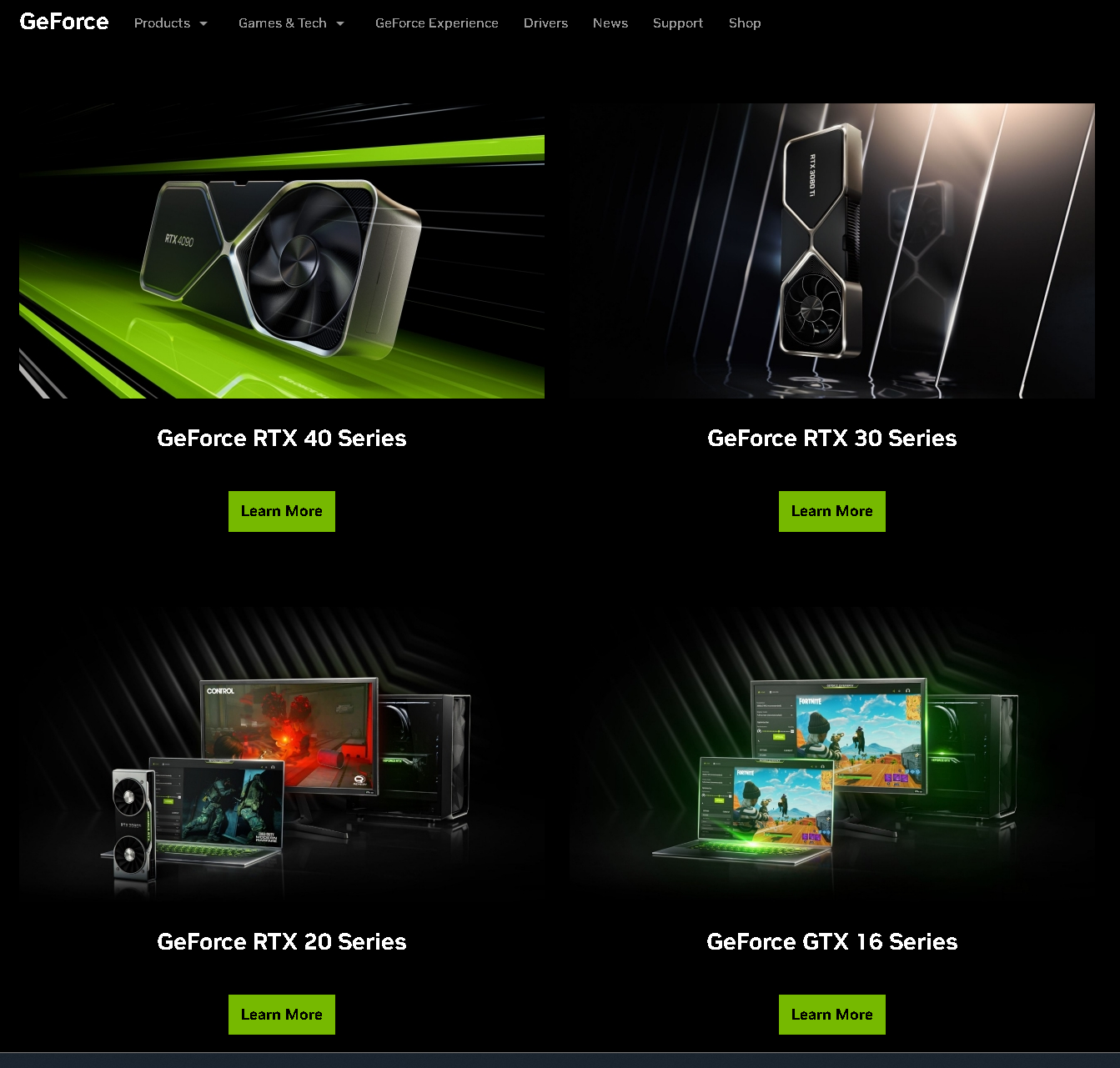

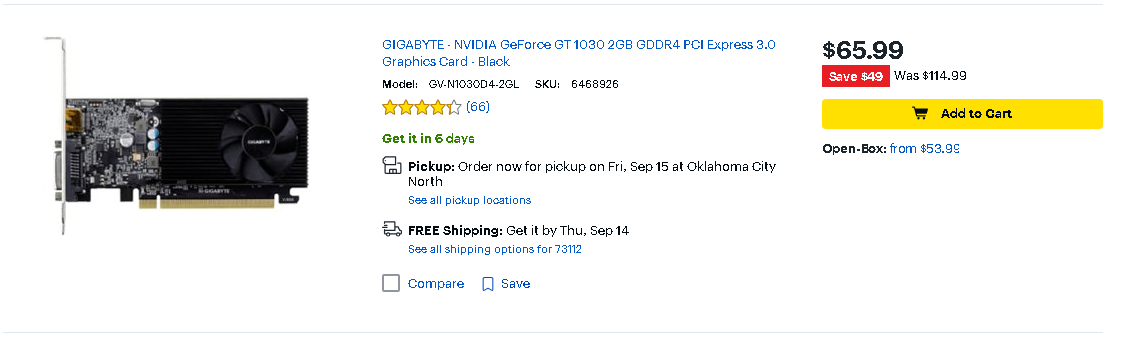

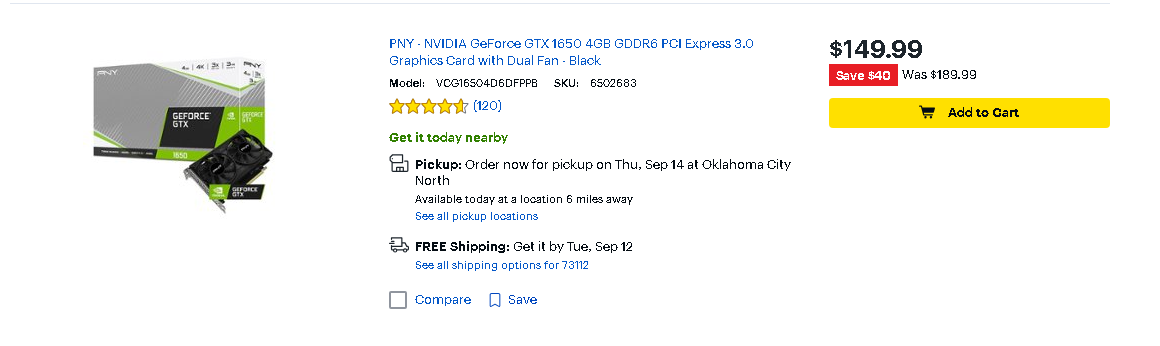

Low prices are covered by old, highly discounted stock and refurbished chips.


They aren’t terribly wrong, though: the AIBs who used to get 10% now want 30%. The components on the board that used to cost 0.10 each now cost 0.50 each, because they need the high-precision Japanese ones, and the general-purpose Chinese ones are going to the automotive industry as fast as they can be made.

Intel maintains their prices by losing 20-30% on each card sold.

If it were possible, AMD would do it just to prove Nvidia was overpricing.

It’s the age of excuseflation: everybody is charging more because “reasons” and enjoying record profits along the way. So AMD and Nvidia, who sit at the end of a complex supply chain, need to charge more to maintain their margins.

It sucks, and it can’t continue for long, because we’re getting bled dry at every turn.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,672

Nope. Intel is and will be the best for budget buyers needing 16GB: https://videocardz.com/newz/intel-a...mes-with-free-code-for-assassins-creed-mirage

Once the 4070 Super is released, that should decrease the price of the 4070.

Meanwhile, if you care only about 1080p max/ultra gaming, the 6800 for $380-$400 is likely to be the cheapest 16GB card you can get in the next few years.

Arc has really matured, and I look forward to seeing what Battlemage brings! They're offering 16GB on the Arc A770 with a free copy of AC Mirage.

If Intel offers budget 16GB Battlemage cards ($350 or less), that will be cool!!


jbltecnicspro

[H]F Junkie

- Joined

- Aug 18, 2006

- Messages

- 9,547

Yay. Congratulations, Intel! You’re relevant in the video card market! I don’t mean that sarcastically. Having a good third option should keep everything in line.


More competition in the budget 16GB card segment.

AMD wants in on this gravy train too.

MLID says that the 7600 XT is based on the same Navi 33 chip as the 7600. Expect a 2-3% improvement in performance.

https://youtube.com/watch?v=fzPqNOK355c&t=79s

StoleMyOwnCar

2[H]4U

- Joined

- Sep 30, 2013

- Messages

- 3,005


I just hope they enter the higher end market, too. For all we know, though, their interest in GPU investment may just fizzle out entirely.
