Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,877

Based on your recommendation, I'll be sure my next purchasing decision is based purely on Passmark.

Passmark is my favorite game!

I play Passmark all the time!

and then this happened.

https://www.cpubenchmark.net/singleThread.html

RIP Intel

I don't have much faith in this one at all. If the data is accurate, and at stock clocks, it probably just means Passmark isn't a very relevant benchmark.

3740QM.

My bad. The memory isn't what it used to be.

you forgot to add "now that AMD is on top."

Moved this to the news thread if you don't mind.

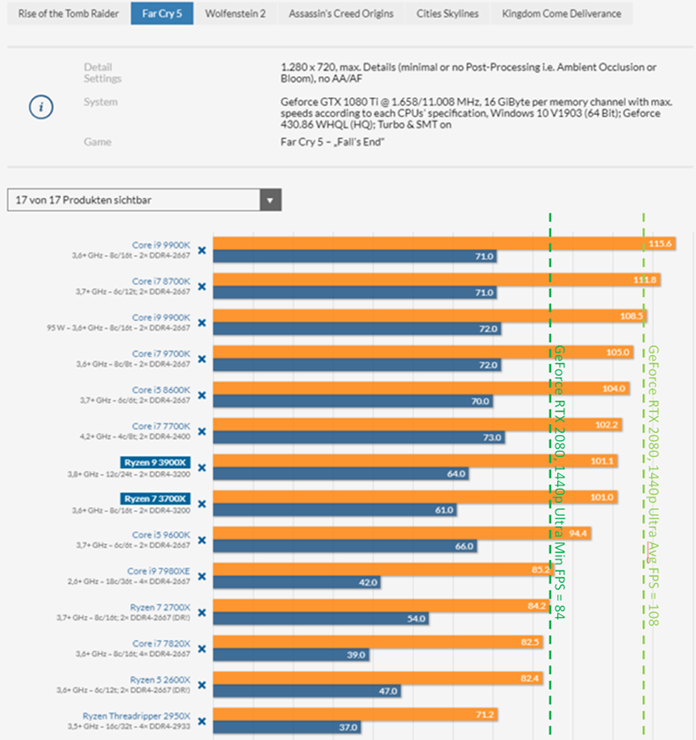

It is on purpose. This is always how CPU benchmarks are done.

In every title I've ever tested, at a fixed framerate, changing resolution/graphics settings has no impact on CPU load. Because of this it makes sense to run it at the absolute minimum resolution/graphics settings so that your benchmark figures are not GPU limited.
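The reasoning above can be illustrated with a toy bottleneck model. It assumes that each frame costs a fixed amount of CPU time regardless of resolution, that GPU time scales linearly with pixel count, and that the frame rate is set by whichever of the two takes longer. The millisecond figures are hypothetical, not measurements from any review.

```python
# Toy bottleneck model (simplifying assumptions, hypothetical numbers):
#  - CPU time per frame does not change with resolution
#  - GPU time per frame scales linearly with the number of pixels
#  - the slower of the two sets the frame time
REFERENCE_PIXELS = 1920 * 1080

RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def gpu_ms_at(gpu_ms_1080p: float, width: int, height: int) -> float:
    """Scale a 1080p GPU frame time to another resolution by pixel count."""
    return gpu_ms_1080p * (width * height) / REFERENCE_PIXELS


def fps(cpu_ms: float, gpu_ms_1080p: float, width: int, height: int) -> float:
    """Frame rate when whichever component is slower dictates frame time."""
    frame_ms = max(cpu_ms, gpu_ms_at(gpu_ms_1080p, width, height))
    return 1000.0 / frame_ms


if __name__ == "__main__":
    fast_cpu_ms, slow_cpu_ms = 5.0, 7.0  # hypothetical CPU cost per frame
    gpu_ms_1080p = 8.0                   # hypothetical GPU cost per frame at 1080p
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name:>5}: fast CPU {fps(fast_cpu_ms, gpu_ms_1080p, w, h):6.1f} fps, "
              f"slow CPU {fps(slow_cpu_ms, gpu_ms_1080p, w, h):6.1f} fps")
```

With these made-up numbers the two CPUs only separate at 720p (200 fps vs. roughly 143 fps); from 1080p up, both land on the same GPU-limited figure.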

It is a CPU review, not a full system review, so this way you can see what the CPU is capable of independent of the GPU.

You, of course, have to read these charts with a little intelligence. Depending on your GPU, chances are you'll never see those frame rates in real-world gameplay because you'll be GPU-limited, which means many of the top-performing CPUs will perform identically in normal use.

It does risk people misinterpreting the results, but I don't see how else they could do it and still get at the actual performance of the CPU.

Yeah, but what's the point? Still, no one games at 720p. Even if this IS the best way to test how a CPU performs in games, no one is playing at that resolution, so it's pointless. Test 1080p, 1440p and 4K.

Even if you argue that there is a GPU bottleneck at higher resolutions, we don't care, no one games at 720p. Show us the real results in games so we can decide if it's worth buying for games over older 2-core CPUs. Testing games at 720p is completely pointless; no one games at 720p.

Did I mention that no one games in 720p?

This has got to be sarcasm, right? Removing the GPU as a bottleneck is the best way of showing which CPU performs best, and that performance extrapolates to the resolutions people do play at. In games that fully bottleneck, where all the CPUs show 85 fps (or whatever number), you get a boring, uninformative graph that paints them all as equals.

You do realize that doing a CPU test in a GPU limited scenario is literally worthless, right?
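A minimal sketch of the "everything shows 85 fps" situation described above, assuming the observed frame rate is simply the lower of what the CPU and the GPU can each sustain. The CPU names and frame rates are hypothetical.

```python
# Hypothetical CPU-limited frame rates: what each CPU could deliver if the
# GPU were infinitely fast. Names and numbers are illustrative only.
CPU_LIMITS = {"CPU A": 160.0, "CPU B": 130.0, "CPU C": 110.0, "CPU D": 95.0}


def observed_fps(cpu_limit: float, gpu_limit: float) -> float:
    """A system can run no faster than its slowest component allows."""
    return min(cpu_limit, gpu_limit)


def chart(gpu_limit: float) -> dict:
    """The frame rate each CPU would show in a review run at this GPU limit."""
    return {name: observed_fps(limit, gpu_limit) for name, limit in CPU_LIMITS.items()}


if __name__ == "__main__":
    print("GPU-bound at 85 fps:", chart(85.0))
    print("GPU out of the way: ", chart(float("inf")))
```

Under the GPU cap every bar reads 85 fps; only with the GPU limit removed does the ranking between the CPUs appear.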

Those results don't make much sense. If it is a single-thread benchmark, why would a 9600KF at 3.7 GHz score the same as a 9700F at 3.0 GHz? They are essentially the same cores, and the 9600KF has a 23% clock speed advantage.

I expect very good things from Ryzen 3000, but I'll wait for some quality reviews with explanations for any anomalies like this.

FYI folks, if I remember correctly from something I read a few weeks ago, Windows 10 1903 doesn't seem to have all the Ryzen optimizations fully enabled, and AMD is supposed to be putting out a new chipset driver any day now that turns on a bunch of performance optimizations. Something along those lines. So I would guess that any leaked benchies may come in lower than they would with the new chipset drivers. Of course, I wouldn't expect miracle chipset drivers to magically increase performance a lot. [One can wish, right?]

The 9600KF's single-core boost clock is 4.6 GHz, and the 9700F's single-core boost is 4.7 GHz. The 100 MHz difference between the two should be negligible, to the point that other factors (such as the frequency of the memory used) could be just as important.
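The disagreement between these two posts comes down to which clock gets compared. A quick calculation, using the 3.7 GHz and 3.0 GHz base clocks quoted above and Intel's published maximum turbo clocks (4.6 GHz for the i5-9600KF, 4.7 GHz for the i7-9700F). It assumes both chips actually hold their single-core turbo clock for the duration of a single-thread run.

```python
def clock_advantage(faster_ghz: float, slower_ghz: float) -> float:
    """Relative clock-speed advantage of one chip over another, in percent."""
    return (faster_ghz / slower_ghz - 1.0) * 100.0


if __name__ == "__main__":
    # Base clocks quoted in the thread: 9600KF at 3.7 GHz, 9700F at 3.0 GHz.
    print(f"9600KF over 9700F at base clock:  {clock_advantage(3.7, 3.0):.1f}%")
    # Intel's published max turbo: 9700F at 4.7 GHz, 9600KF at 4.6 GHz.
    # Assumes both chips sustain that turbo clock through a single-thread run.
    print(f"9700F over 9600KF at turbo clock: {clock_advantage(4.7, 4.6):.1f}%")
```

That prints 23.3% for the base clocks and 2.2% (in the 9700F's favour) for the turbo clocks, and it is the turbo figure that matters for a single-threaded test.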

That is true, but is a game workload really the pinnacle of CPU performance testing to begin with? Now that APIs have evolved, what is it about a 720p game benchmark that people find so reassuring?

100% agree, although my ambitions are a little more modest... probably a 3700X with a minor overclock. I expect all-core overclocking headroom of a couple of hundred MHz at best, and with Precision Boost in play, I'm not sure it will make an appreciable difference. Looking forward to finding out ☺

If you ask me, this is pretty much what I expected.

Pretty much what I expected. Trading blows depending on the title, trailing slightly in the games which are more highly dependent on a single fast thread, but in every case fast enough that you are probably going to be GPU limited before you are CPU limited at typical settings.

I haven't played Far Cry 5, but from my experience with other Far Cry games, the Dunia engine they use (a modified CryEngine built for open worlds) always pins the third core, so it is not surprising at all to me that it may be a worst-case title for Ryzen.

I for one am looking forward to getting a 3950x and overclocking the snot out of it.

Credit for finding the link goes to LstOfTheBrunnenG

Don't wait... JUST BUY IT ☺

I want 3700 vs 3700X benchmarks.

I guess the Jim Keller factor has something to do with it... Almost the same performance and lower power consumption: pretty impressive for a fraction of the R&D and a less 'mature' process.

But of course power consumption only matters when it's AMD, because 5,000MW for 2% faster in some games is so [H].

Either way, it's interesting to see that the minimums are very good even where it isn't at the top of a game benchmark. I wonder if more optimization can help there.

Because it shows you the theoretical max with a future, unreleased GPU, when the system is no longer GPU-limited? Most people upgrade their GPUs more often than they do their CPUs these days.

The whole point of the review is to show the capability of the CPU. The review is completely pointless if you are just going to benchmark on a system that is bottlenecked by the GPU. You learn very little about the CPU that way.

It's the same reason reviewers tend to test GPUs on the absolute fastest CPU they can find, to make sure they are not CPU-limited when testing the GPU.

This allows the user to take the minimum value for any CPU and the minimum value for any GPU and predict how the combination of those two components may perform, since whichever component is slower will roughly set the frame rate.
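A rough sketch of that prediction, assuming both figures come from the same game at the same settings and that the slower component alone sets the frame rate (real systems add second-order effects this ignores). All component names and numbers are hypothetical.

```python
# Hypothetical review data for one game at one settings preset.
CPU_RESULTS = {"CPU X": 140.0, "CPU Y": 110.0}  # fps measured with a top-end GPU
GPU_RESULTS = {"GPU P": 150.0, "GPU Q": 90.0}   # fps measured with a top-end CPU


def predict(cpu: str, gpu: str) -> float:
    """First-order estimate: the slower component sets the frame rate."""
    return min(CPU_RESULTS[cpu], GPU_RESULTS[gpu])


if __name__ == "__main__":
    for cpu in CPU_RESULTS:
        for gpu in GPU_RESULTS:
            print(f"{cpu} + {gpu}: about {predict(cpu, gpu):.0f} fps")
```

The estimate only works because each input was measured with the other component out of the way; a CPU figure taken in a GPU-limited run would just repeat the GPU's number.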

As soon as you benchmark the components in a system where they are held back by other components, you are benching that system, not the individual component you proclaim to be testing, and it limits the usefulness of the data.

Nothing could be less relevant than pointing out that no one games at 720p. The resolution is not the point. Getting CPU data untainted by any other aspect of the system is the point. I'd test at 640x480 if I could.

Do you also suggest benchmarking with a 60 Hz monitor and vsync turned on? Because that's essentially what you are doing when you do a CPU benchmark at higher resolutions.

What modern game that looks half good is not gpu limited at 1440p and above?

Maybe a handful, but I doubt they are really not gpu limited at those resolutions; they're just able to use more cores.

Games from 2025 will not have the same requirements as today's. The games will always be gpu limited at high resolution.

If they are going to show the capability of the cpu then test software benchmarks? They already do that. That's how they are showing capabilities of the cpu.

Testing games at 720p is completely pointless. It doesn't show the capability of the cpu because no one games at that resolution. It's irrelevant if the cpu is 200% faster in 720p because no one will ever see the difference because no one serious games at 720p.

There are basically no games that are not bottlenecked by the gpu at high resolution.

And if they are not dependent on the gpu at high resolution, the games are not demanding, so you could run them on a potato. So that still makes the test pointless.

My mind has not been changed.

Stop testing cpu in gaming at 720p. It's stupid.

With that, it's also extremely important to emphasize that most bottlenecks are in the GPU. While the CPU is important, most people gaming really need to see that the $100 between the 9900K and the 9700K could move you up a GPU bracket, and that would have far more effect on their performance than going from a 9700K to a 9900K.

The point is that it is better to show CPU-limited games like Crysis 3 running at 1080p than GPU-limited games like Wolf 2 at 720p when comparing CPUs. The former will do a better job of representing future 1080p games.
