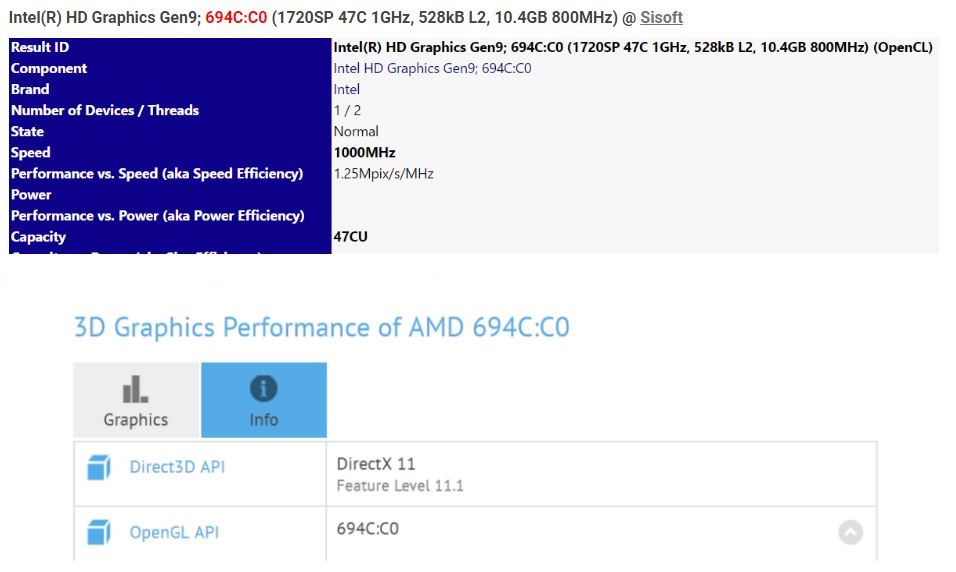

Too niche for AMD.

Such an APU probably only makes sense for boutique and mITX builds. Still, AMD could create an entirely new niche with overpowered APUs that use HBM2 to get around system RAM bandwidth limitations.

Actually, now that I think about it, it does make sense for notebooks (especially ultrabooks) and NUCs. Even Intel puts its Crystal Well APU into these higher-margin markets.