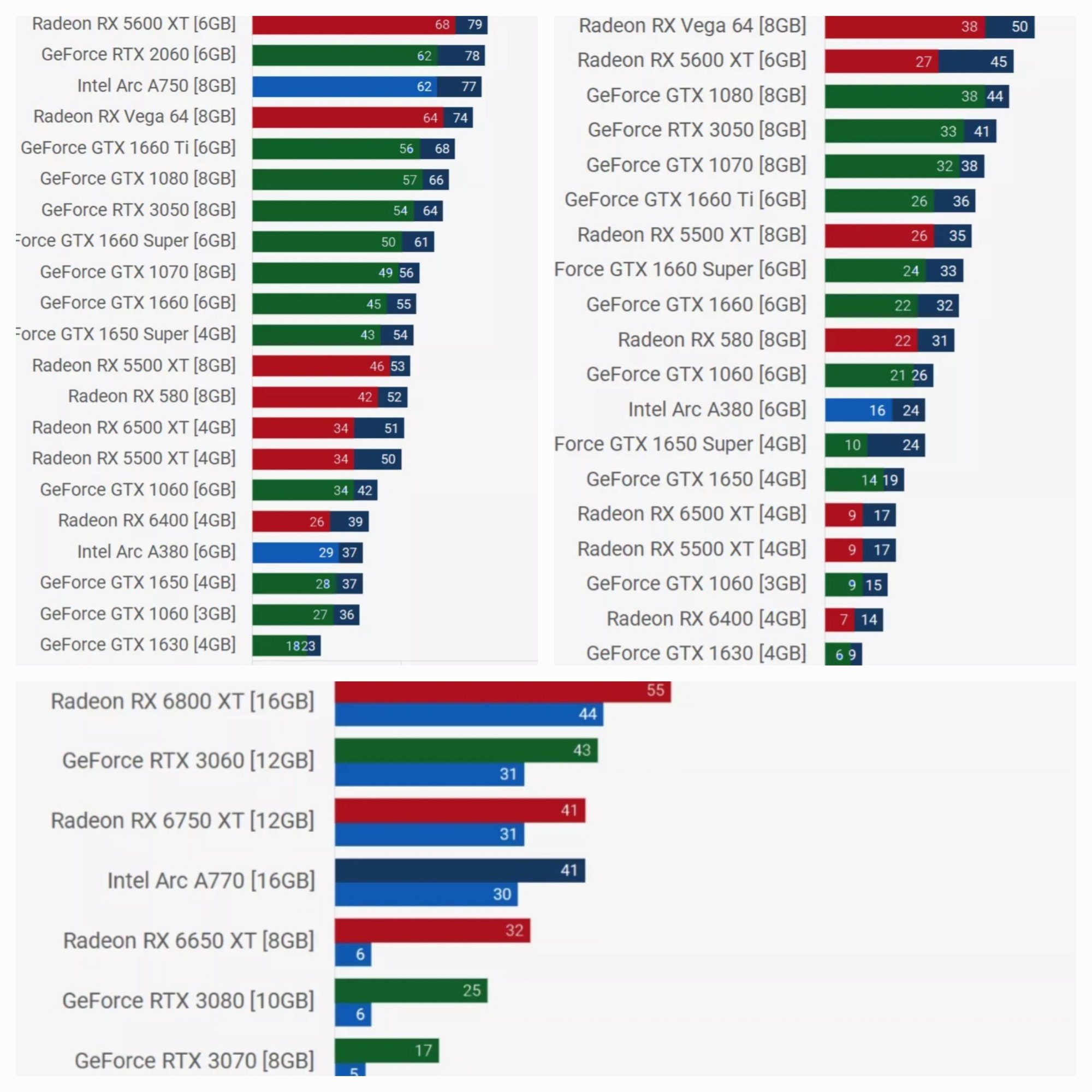

Nvidia's RTX 4050 is rumoured to be based on the company's AD107 silicon, the same chip that is rumoured to be behind the RTX 4060. The RTX 4050 appears to feature a 96-bit memory bus, and recent reports claim that the graphics card will ship with only 6GB of video memory, which is not enough to play many modern games without issues.
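The link between a 96-bit bus and 6GB of memory can be sketched with some quick arithmetic. This is only an illustration under assumptions that are not stated in the article: each GDDR6 memory chip is taken to use a 32-bit interface and to hold 2GB, with one chip per channel. The function name `vram_capacity_gb` is hypothetical.

```python
# Rough sketch: VRAM capacity implied by a memory bus width.
# Assumptions (not from the article): every memory chip has a 32-bit
# interface and stores 2 GB, and there is one chip per 32-bit channel.
BITS_PER_CHIP = 32
GB_PER_CHIP = 2


def vram_capacity_gb(bus_width_bits, gb_per_chip=GB_PER_CHIP):
    """Return the total VRAM in GB for a given bus width in bits."""
    if bus_width_bits <= 0 or bus_width_bits % BITS_PER_CHIP != 0:
        raise ValueError("bus width must be a positive multiple of 32 bits")
    chips = bus_width_bits // BITS_PER_CHIP  # one chip per 32-bit channel
    return chips * gb_per_chip


print(vram_capacity_gb(96))   # 3 chips x 2 GB = 6
print(vram_capacity_gb(128))  # 4 chips x 2 GB = 8
```

Under these assumptions, a 96-bit bus works out to three chips and 6GB, while reaching 8GB would need a wider 128-bit bus or a different memory configuration.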

6GB of VRAM is not enough

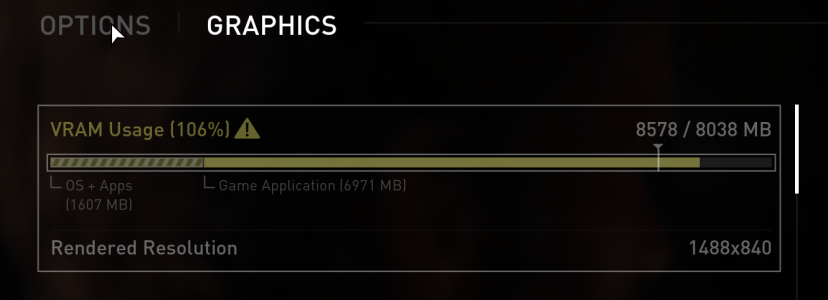

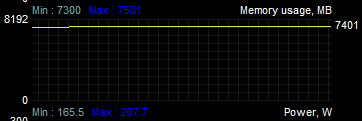

The past week has seen The Last of Us Part 1 and Resident Evil 4 (Remake) arrive on PC, and both games can use a lot of graphics memory, even at a resolution as low as 1080p. With 8GB quickly becoming the minimum advisable amount of memory for 1080p gaming, Nvidia's RTX 4050 will be poorly positioned within today's PC market. This is especially true given that Nvidia's RTX 3050 has 8GB of graphics memory.

https://overclock3d.net/news/gpu_di...edly_launching_in_june_with_too_little_vram/1

https://twitter.com/Zed__Wang/status/1640986350986969089
