Wow! Ultra Quality has more detail on a number of textures, like the brick wall. Plus the power lines are less aliased than Native, which has jaggies. I will have to see this in motion.

Here are the same images, but as lossless PNGs so you can see the actual quality.

https://postimg.cc/gallery/94Kfs33

This is on Ubuntu 21.04, Nvidia driver 465 on an RTX 2080 Ti.

Performance in Terminator is much better in a borderless window; the previously mentioned performance drop was due to fullscreen mode.


AMD FidelityFX Super Resolution

- Thread starter killroy67


dave343

[H]ard|Gawd

- Joined

- Oct 17, 2000

- Messages

- 1,869

AMD really, REALLY needs to get support into the bigger games that count, the ones that will drive more attention to FSR: RDR2, R6 Siege, etc...

Fighting Nvidia is pricey... not because Nvidia is 100x better by default; they are just willing to throw money around more.

Same thing happened with FreeSync. Nvidia paid a lot of monitor makers to include their tech and hype the hell out of it. AMD said we are not going to pay companies to use our tech... instead of paying them, we will just give them something that won't cost them a fortune, and charge them nothing at all for it. We know what happened: FreeSync won... yeah, G-Sync is still a thing, but you would have to be a hardcore Nvidia booster not to admit it's all but dead at this point.

The same will happen with this... Nvidia paid a bunch of (ok, not really a bunch, a small handful of) developers to implement their tech in games, and spent a lot of money hyping it up for them (those paid YouTube shill videos cost more than you would imagine, lol). AMD has come along with a solution and is probably not going to break open a bag of cash to drive its uptake. However, they have made it inexpensive to implement... and free to use (just like FreeSync). So yes, the same thing will happen over time. I suspect a handful of games may not see FSR right away, as they have DLSS and, I assume, a bag of Nvidia cash with strings attached all over it.

I don't really see any way for Nvidia to save DLSS... I mean, if they backtrack now and find a way for it to work without tensor cores, they will look like jerks even more. If they build a DLSS Lite that works the same way as AMD's FSR, they will also look like jerks. I expect they will double down and try to pay off a handful of developers they hope will have the smash hits to run DLSS and skip FSR inclusion. That would be peak Nvidia, and what else would anyone expect from them?

- Joined

- May 18, 1997

- Messages

- 55,601

Quote of the day, ChadD!

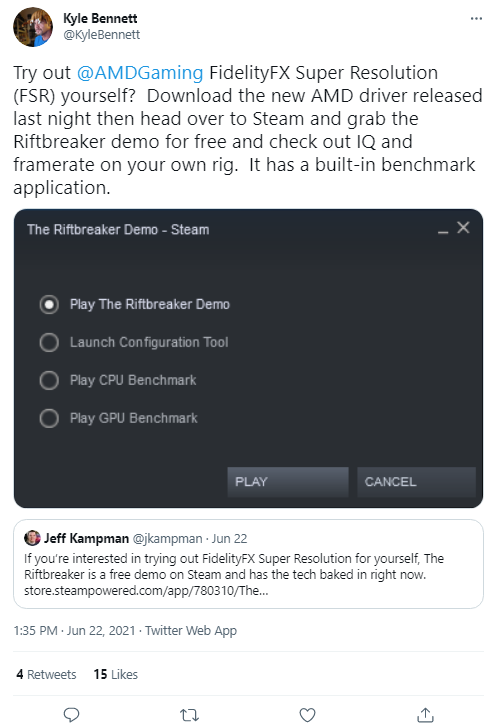

https://twitter.com/KyleBennett/status/1407839113097666568

- Joined

- May 18, 1997

- Messages

- 55,601

DOTA 2 just popped FSR.

https://www.dota2.com/newsentry/2992060508110412253

AMD FidelityFX Super Resolution

This update also adds support for AMD's FidelityFX Super Resolution. This technique allows the game to render at a lower resolution and then upscale the results with improved image quality. The result is high quality rendering at a lower performance cost than full resolution rendering, which allows for higher framerates even on less powerful graphics cards. Players can enable this setting in the Video options by turning the "Game Screen Render Quality" to less than 100%, and then turning on the "FidelityFX Super Resolution" checkbox. FidelityFX Super Resolution works on any GPU compatible with DirectX 11 or Vulkan.


pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,028

anybody done the "kyle bennett FSR challenge" yet? i've got to get updated and try it...

https://twitter.com/KyleBennett/status/1407421909030125569


Well, they were not kidding about games coming soon, were they? I mean, just about everyone can run DOTA 2 maxed out. Having said that, I guess some people do reduce some settings and go for stupidly high frame rates in Dota... I guess now perhaps they go Ultra Quality and leave everything cranked?

Cool, I do play Dota now and then. I'll have to go fire up a bot match so I can mess with settings, haha.

EDIT: Valve implemented it a bit differently: if you select a render resolution less than 100%, they have added an FSR toggle. I ran out of time today to really check it out... but I slid my render res down to 90% and toggled it on. Can't say I could see any real difference in IQ... but I went from around 180 FPS to 200-210 or so. Not bad for what looks like free. I'll have to play a match or two tomorrow and see how far I can slide it down. If I can hit 240 FPS, I guess there isn't really much call for more, not that I am running a 240 Hz monitor anyway.
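A rough way to see why a 90% slider only buys a modest bump: if the render-quality percentage scales each axis (an assumption; Valve's note only says "less than 100%"), the GPU shades the square of that fraction of the pixels. The sketch below uses a hypothetical 2560x1440 native resolution, since the post doesn't state one, and the helper names are made up for illustration.

```python
def render_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Return the internal render size for a per-axis scale (e.g. 0.9 for 90%).

    Assumes the slider scales width and height independently; round() keeps
    the result a whole number of pixels.
    """
    return round(width * scale), round(height * scale)


def pixel_fraction(scale: float) -> float:
    """Fraction of native pixels shaded when both axes are scaled by `scale`."""
    return scale * scale


if __name__ == "__main__":
    native = (2560, 1440)  # hypothetical native resolution, not stated in the post
    for pct in (100, 90, 75):
        s = pct / 100
        w, h = render_resolution(*native, s)
        print(f"{pct}% -> {w}x{h} ({pixel_fraction(s):.0%} of native pixels)")
```

Under that assumption, 90% means about 81% of the native pixels and 75% about 56%. Frame time rarely scales 1:1 with pixel count (CPU work and fixed per-frame costs don't shrink), which is consistent with a gain smaller than the ~19% pixel reduction.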


staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

I mean, freesync didn't really kill gsync like everyone proclaimed...

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

The problem with proprietary GPU features is that they only really stick around as long as the manufacturer wishes to sponsor their existence.

We've seen this a number of times, most notably with hardware PhysX (which is dead), SLI (dead), Nvidia 3D Vision (dead), most recently GSync (which has lost all steam and will probably disappear in the next 1 or 2 years).

Yes, FreeSync won. It is not as good as GSync in some technical ways, but it is cheaper for companies to implement, cheaper for consumers, much broader hardware support (not tied to Nvidia), and is mostly good enough.

We will see the same with FSR. It is technically not as good as DLSS (at this point in time) but it's cheaper and easier for everyone and still gives acceptable results. I'd say in the next few years we will see DLSS fade away.


staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

GSync (which has lost all steam and will probably disappear in the next 1 or 2 years).

Again, that was said when FreeSync was first announced, yet here we are. G-Sync is still around, 8 years after its announcement and 7 years after FreeSync's. As we can see with many things, there's room for 'cheap' (so to speak) and 'premium' to coexist indefinitely. Not saying that will be the case, but to just assume one way or another that 'x' will definitely kill 'y' just seems fanboi-ish to me. There are also 2 tiers of G-Sync now; that could have been the answer/death you might be predicting. Seems like some people only think competition is good and warranted when Nvidia has something AMD doesn't; when it's the other way around, or when both have 'equal' services, their tune changes and they want the competition eliminated.


cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,028

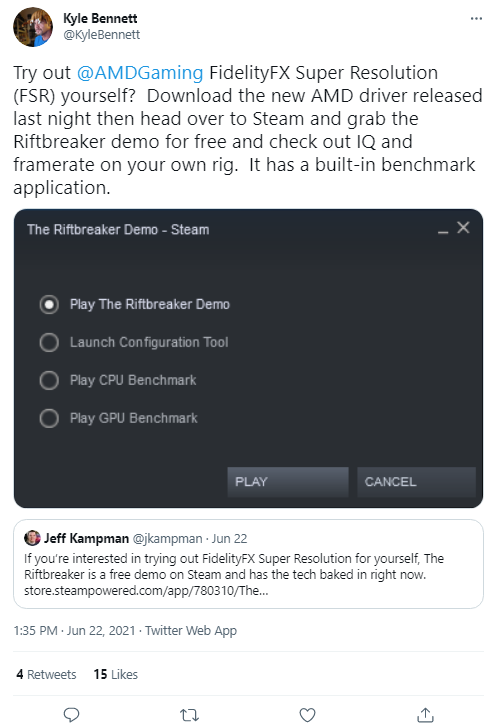

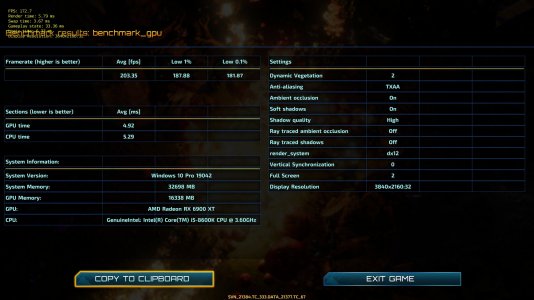

only have my 1080p plasma so using vsr. not too shabby, i guess. looks good at "4k" either way. edit: using the gpu benchmark.

1440 vsr, since i assume that is what fsr will upscale from.

2160 vsr + fsr off

2160 vsr + fsr quality

2160vsr + fsr ultra
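For reference, FSR 1.0's quality modes, as published by AMD, divide the output resolution by a fixed per-axis factor: 1.3 for Ultra Quality, 1.5 for Quality, 1.7 for Balanced and 2.0 for Performance. With VSR, the game's output target is the virtual 3840x2160 (the driver then downsamples it to the 1080p panel), so a quick sketch can estimate what FSR upscales from. The function name is made up for illustration, and rounding to the nearest pixel is an assumption; a game may round differently.

```python
# Per-axis scale factors for FSR 1.0's four quality modes, as published by AMD.
FSR1_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def fsr_input_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Estimate the internal render size FSR 1.0 upscales from for an output size.

    Rounds to the nearest pixel; a game may round differently, so treat the
    result as approximate (+/- 1 px).
    """
    factor = FSR1_SCALE[mode]
    return round(out_w / factor), round(out_h / factor)


if __name__ == "__main__":
    for mode in FSR1_SCALE:
        w, h = fsr_input_resolution(3840, 2160, mode)
        print(f"{mode:>13}: {w}x{h} -> 3840x2160")
```

This suggests the 1440p guess holds for Quality mode at 2160p, while Ultra Quality starts from roughly 2954x1662.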


Competition in parts is GOOD. Competition in standards is anti-consumer and stupid.

As for G-Sync, yes, it's not dead... nor is it really alive. Looking at my local parts supplier... 94 basic FreeSync monitors, 22 FreeSync Premium, 14 G-Sync monitors (with most of the newer models being FreeSync with G-Sync Compatible badges)... and 2 listings for Asus ROG G-Sync Ultimate monitors, which are cool if you're looking for a $2-6k gaming monitor.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

I understand companies want to innovate, and sometimes it takes proprietary experimentation to prove a feature or product works.

But after that point, when the idea is validated, it should be a standard.


Ok, so FSR has some limits. I was at my folks' place this evening and fired up a very, very old Core 2 Duo machine with a 750 Ti at 1080p. Dota 2 on low settings: 40ish FPS with some nasty lows. FSR at 75% and I may have got a couple of extra FPS, but those lows, lol. I wasn't expecting much, but no doubt with even an old 4-core CPU it might actually have been playable.

- Joined

- May 18, 1997

- Messages

- 55,601

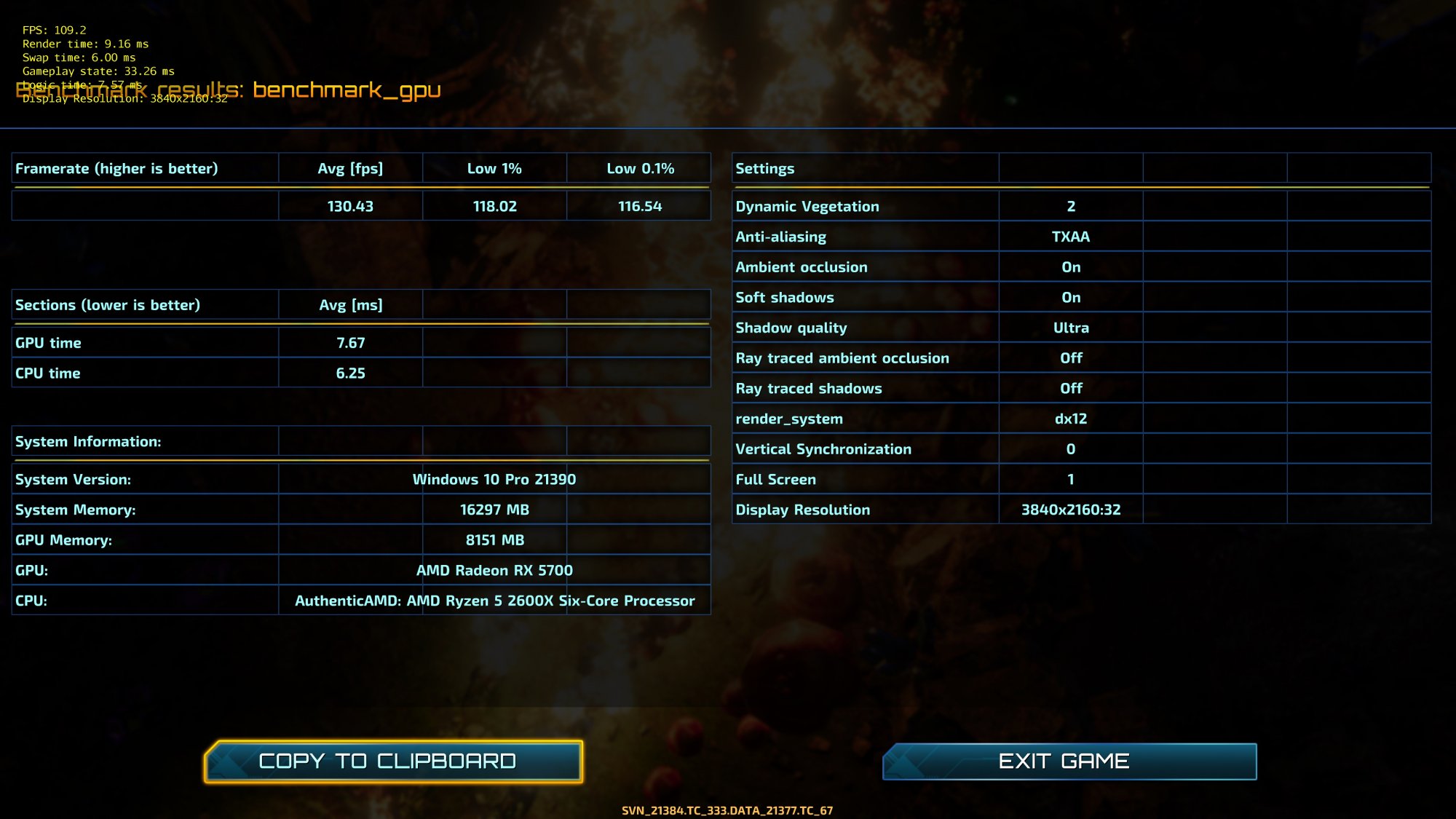

I did! 6900 XT at stock settings. 8600K at 5 GHz, 32GB of 3600 MHz RAM.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,580

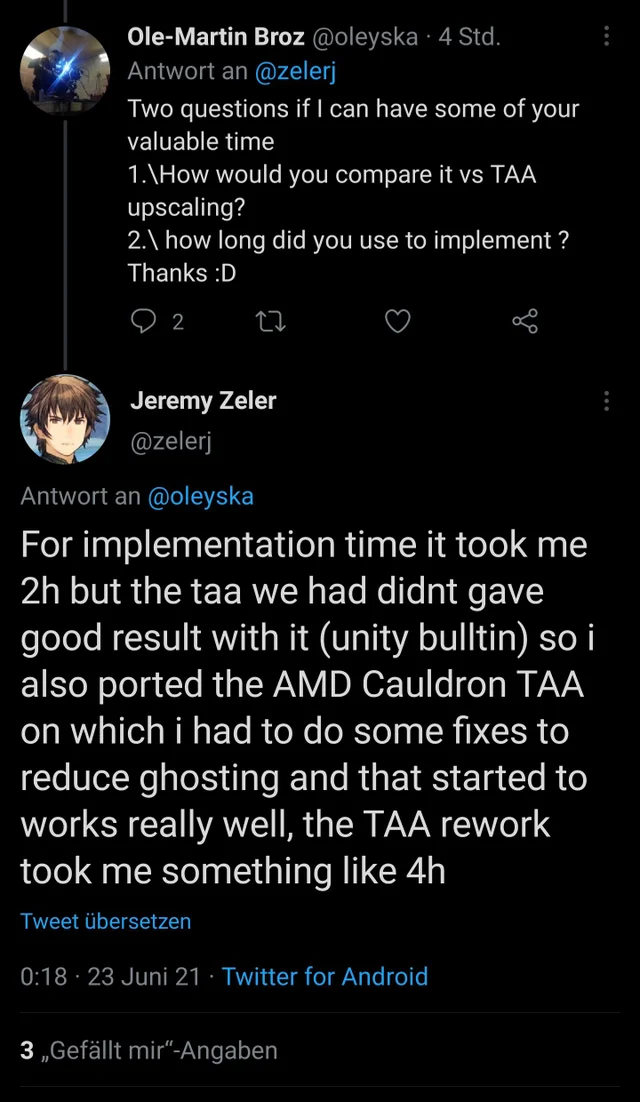

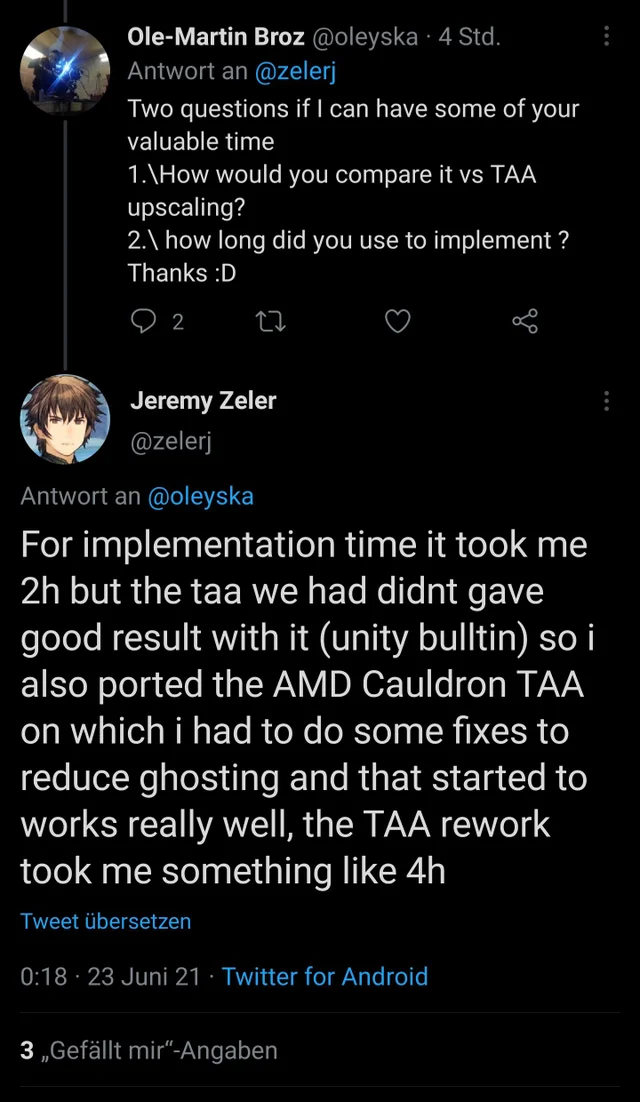

The internet says Jeremy is a developer from Edge of Eternity

Wow! AMD seems to be right about the ease of implementation. Need to look more at AMD Cauldron TAA, which I did not know about. Looking great so far.

I tried the Riftbreaker demo on the 3090. FSR increased FPS significantly and I didn't really see any significant difference. Then again, the game plays so well even at 4K without FSR, using RT and max settings on the 3090, that it is not needed here; maybe at 8K this would be useful with this game and setup.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,580

Now officially part of Xbox GDK. Come on Sony!

https://www.techpowerup.com/283806/amd-fsr-fidelityfx-super-resolution-is-coming-to-xbox-consoles


pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,028

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,028

compares 580, 1060 and 970. yaps a lot at the beginning, might want to skip it...

edit: he also mentions amd has said that theoretically any gpu with shader model 5 should support fsr. igpus, see below...


defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

yeah, I tested my HD 530 on Riftbreaker and saw a small fps increase (20%), but it's nowhere near the 50% bump I saw with my GTX 960 on Quality

I think there just isn't enough spare compute on an iGPU!

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,077

Interesting.

TheBuzzer

HACK THE WORLD!

- Joined

- Aug 15, 2005

- Messages

- 13,005

is it just me, or can i barely tell the difference at all in the look? but i guess that's not the point, just more fps. also i'm not using a high-res monitor.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

Not sure that is working right. First, I don't see any difference at all in PQ (maybe YouTube compression) but the fps also isn't any better with FSR (or worse). Not sure that is right.

I think the performance is already boosted without it, because they have the resolution scaler on. So when FSR is off, it's off, but still at 75% resolution scale. So turning it on would take an FPS hit... but the IQ in theory should be closer to 100%. If they turned it off and upped the scale to 100%, the FPS would be much lower. I don't know though; YouTube compression sucks, so I can't tell for sure if it's working either.

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,077

crazycrave

[H]ard|Gawd

- Joined

- Mar 31, 2016

- Messages

- 1,878

This YouTuber was testing an MX 150 4GB


Consoles is why AMD will overtake nVidia with FSR implementation in games vs. DLSS.

SPARTAN VI

[H]F Junkie

- Joined

- Jun 12, 2004

- Messages

- 8,760

Agreed. Hoping to see DLSS integration on Nintendo's front soon. Since 2018, the latest Tegra microarchitectures have included tensor processing units, which are reportedly on their way to next-gen Switches.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,028

FSR is coming to Resident Evil Village and more starting tomorrow and over the next couple weeks. AMD also announced integration into Unity and Unreal Engine 4. More game announcements are coming soon.

https://community.amd.com/t5/blogs/...-gpuopen-release-unity-and-unreal/ba-p/481543


learners permit

[H]ard|Gawd

- Joined

- Jun 15, 2005

- Messages

- 1,797

Radeon driver modder enabling ReBAR and FSR all the way back to pre-GCN cards, including mobility graphics, here. FSR only requires Shader Model 5 or better. Gonna test my old 6950 DirectCU II this weekend.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,580

I've seen some tests on older cards, and the general consensus is that AMD probably cut off support for FSR where they did because cards much older than that don't really have the headroom to truly benefit from FSR, resulting in only a handful of extra FPS.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,520

I kind of figured they would; even before FSR was implemented, I worried that DLSS would only be available for a small number of games due to consoles being all AMD.

That and, (practically confirmed) rumor-wise, nVidia is notoriously difficult to work with.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 943

So I need to research into this, but FSR would work for my GTX 980?

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,028

yes, if it's in the game. try the riftbreaker demo to see what it does.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

Yes, I've seen people use it on the GTX 970, but it only supports like 12 games right now.

crazycrave

[H]ard|Gawd

- Joined

- Mar 31, 2016

- Messages

- 1,878

He tried a few older cards here..

killroy67

[H]ard|Gawd

- Joined

- Oct 16, 2006

- Messages

- 1,583

I ran it last night: I got 188 FPS with it off, 257 FPS with Ultra Quality, and 407 FPS on the Performance setting. That's running at 1440p with everything in the Riftbreaker settings maxed. I have to say I'm really impressed; now for it to get into games I actually play, like hopefully BF2042.
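Those jumps are easier to compare as percentage gains and frame times. A minimal sketch using only the three numbers reported above (the helper names are just for illustration):

```python
def uplift(baseline_fps: float, new_fps: float) -> float:
    """Percentage change in frame rate relative to the baseline."""
    return (new_fps / baseline_fps - 1.0) * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Numbers from the post above: Riftbreaker at 1440p, settings maxed.
    results = {"FSR off": 188, "Ultra Quality": 257, "Performance": 407}
    base = results["FSR off"]
    for label, fps in results.items():
        print(f"{label:>13}: {fps} fps, {frame_time_ms(fps):.2f} ms/frame, "
              f"{uplift(base, fps):+.1f}%")
```

That works out to roughly +36.7% for Ultra Quality and +116.5% for Performance, or about 5.32 ms per frame dropping to 3.89 ms and 2.46 ms.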

