luisxd

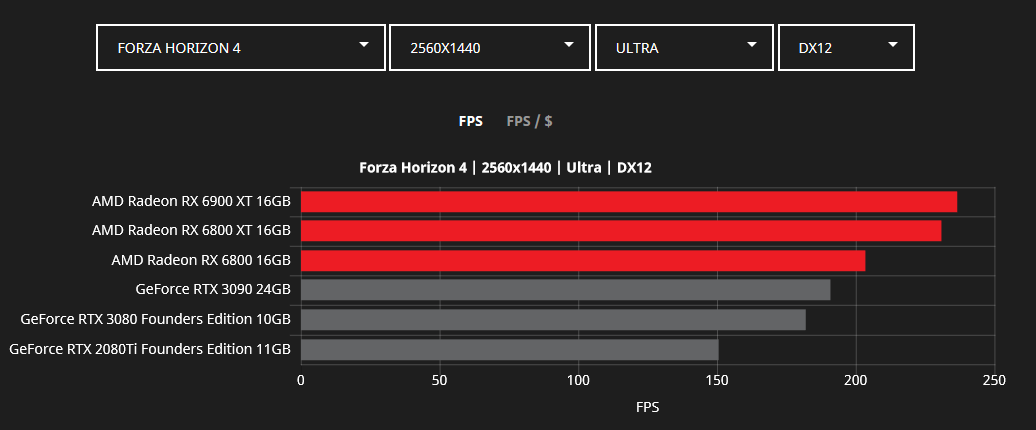

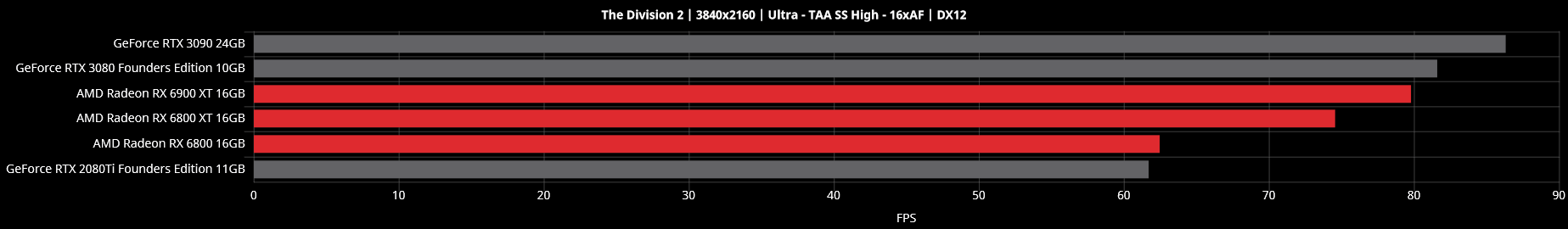

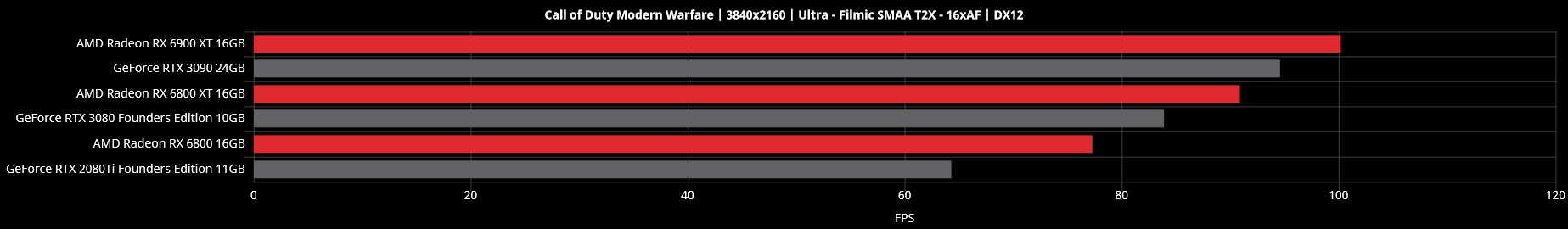

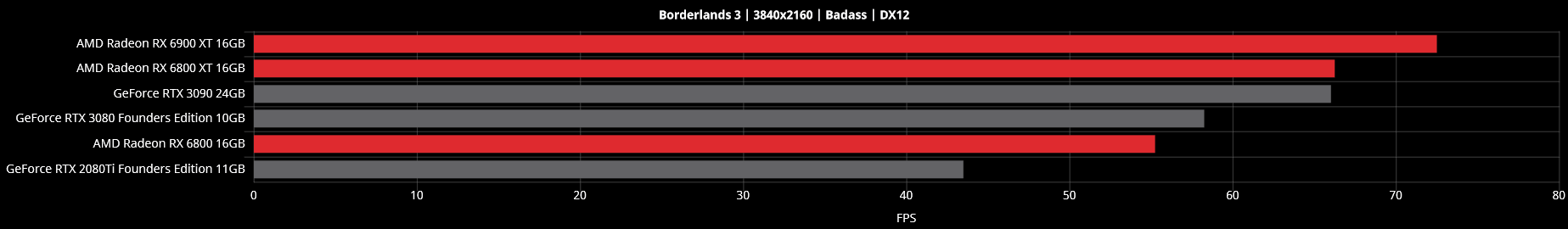

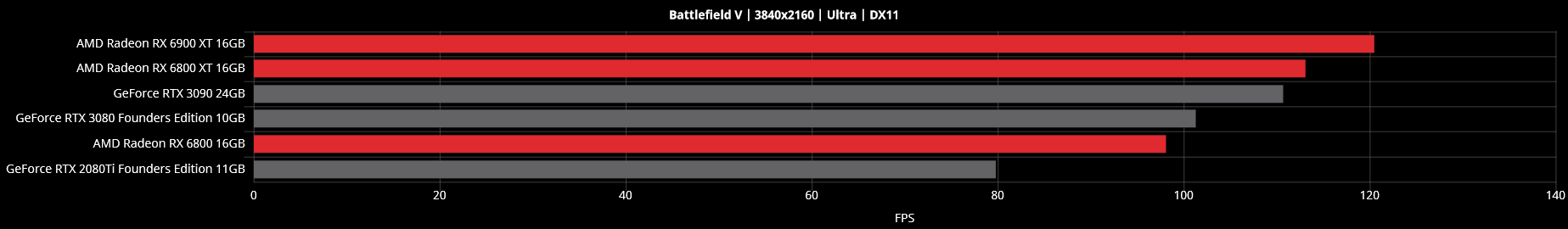

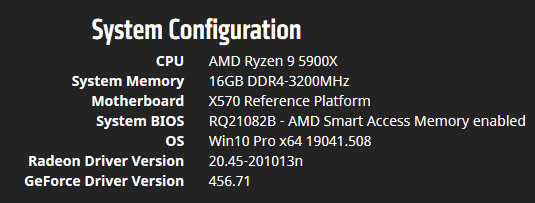

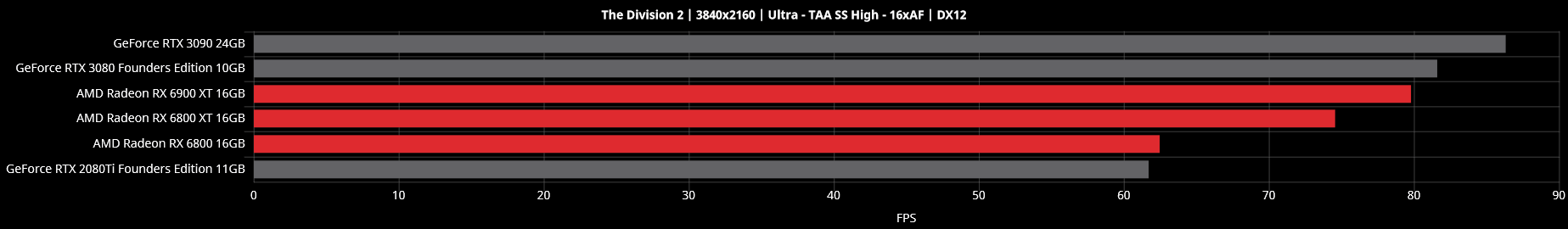

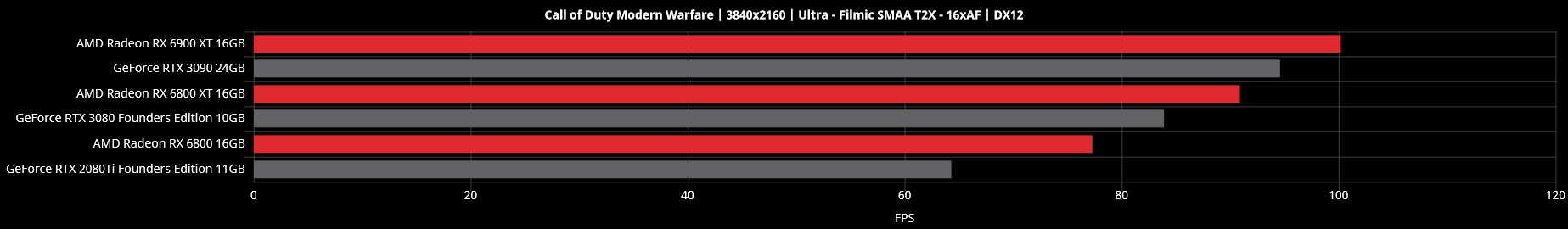

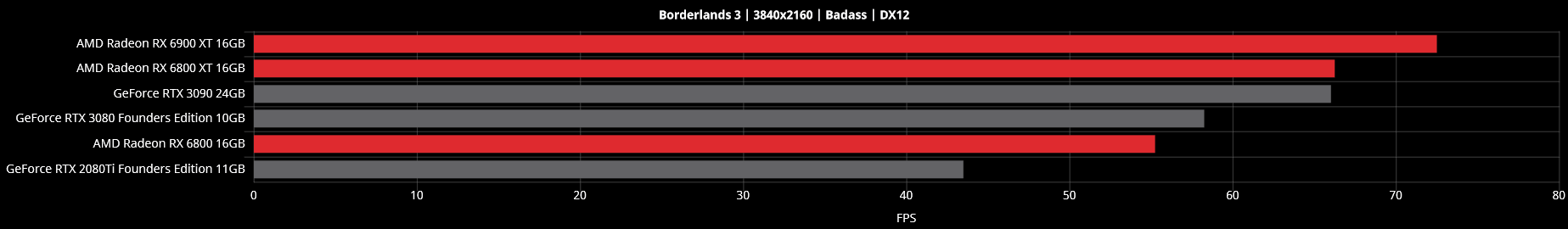

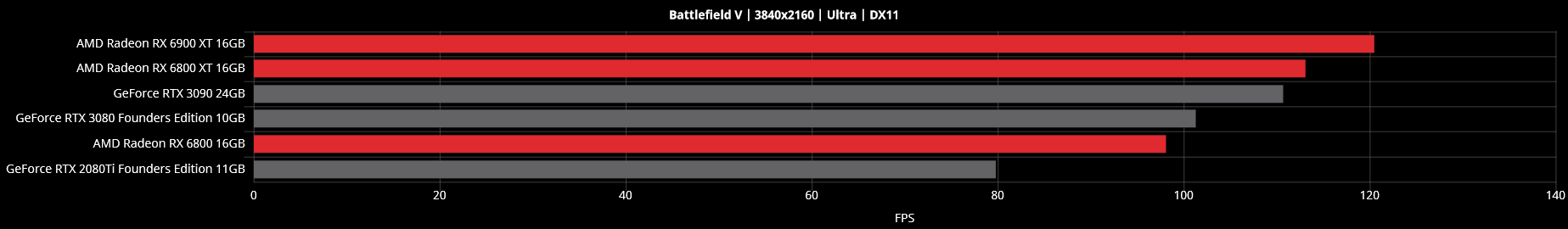

AMD has just uploaded more gaming benchmarks for its Navi cards, including results at 4K and 1440p.

MORE BENCHMARKS AT: https://www.amd.com/en/gaming/graphics-gaming-benchmarks