photoelectric

n00b

- Joined: Jun 22, 2012
- Messages: 37

Ok, this is a bit of an older thread and is going in a theoretical direction, but since there seem to be a lot of people here who have a clue about monitors, ClearType, etc., I'll ask here.

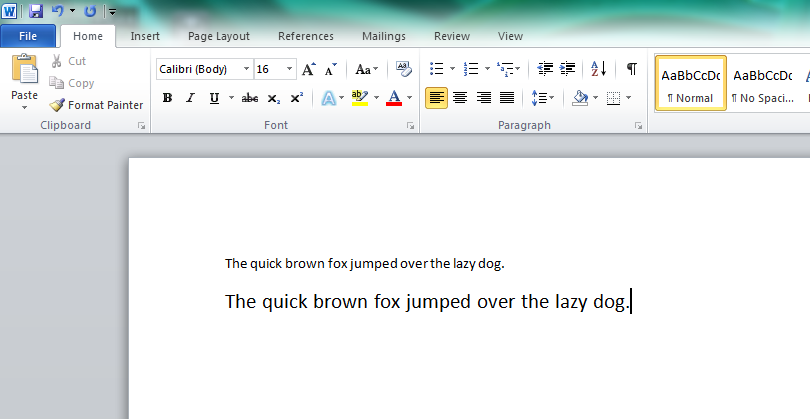

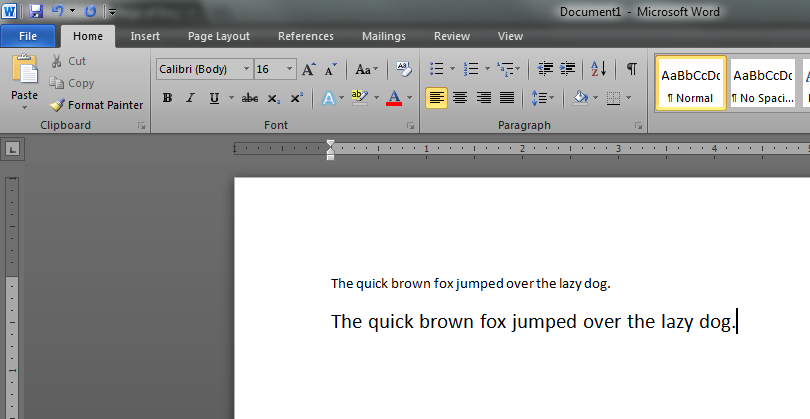

My new secondary monitor is a 24" Dell IPS. I got it to use in portrait mode for reading and such. The problem is that everything looks nice and clear on it when the image is set to landscape orientation (even though the monitor itself is physically mounted in portrait). But as soon as I use software (NVIDIA Control Panel or the Windows 7 display settings) to rotate the image to portrait, the image quality goes way down. It's not just the text - everything becomes less well defined, and there seems to be a faint yellow discoloration. It's apparent around black letters on a white background and in other areas.

I have so far tried connecting this monitor to the integrated graphics port (HD 4000 through my motherboard, via dual-link DVI) and directly to my GTX 670's second DVI port - same thing. Tried turning off ClearType - that doesn't help, and if anything it looks even worse. Tried turning off software scaling and such in the NVIDIA Control Panel - no noticeable improvement.

I can't figure it out... The monitor is on a desk-clamp mount, sitting in portrait orientation permanently. So all I need to do is click through some menus to flip the image back and forth between landscape and portrait, and I can watch the quality deteriorate and go back to normal. Is there anything I can do?

