Prisoner849

Gawd

- Joined: May 5, 2016
- Messages: 682

The 2080 at 1440p is perfectly justifiable.

What we NEED is fast ray tracing at 1440p.

I said nothing contrary, so why quote me?



There seems to be a whole lot of anti-Nvidia butthurt, and this forum has become a bit of a lightning rod for it. It's rooted in something other than the merits of these new cards, and is therefore hard to take seriously.

People that are hoping these "cherry picked results" are going to be drastically or even measurably different than benches in so-called "trusted reviews" are going to be disappointed.

So I can buy a new $1200 GPU that is exactly double the price my $600 GPU cost more than 2 years ago and it's not quite 2x the performance. Wow. Amazing.
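The complaint above comes down to simple arithmetic: if the price doubles and performance rises by less than 2x, you get less performance per dollar than before. A minimal sketch using the post's $600 and $1200 prices. The speedup values are hypothetical placeholders, because the post only says "not quite 2x".

```python
def perf_per_dollar_ratio(old_price, new_price, speedup):
    """Performance per dollar of the new card relative to the old one.

    speedup is new_fps / old_fps (e.g. 1.9 for "not quite 2x").
    A result below 1.0 means less performance per dollar than the old card.
    """
    price_ratio = new_price / old_price
    return speedup / price_ratio


# Prices come from the post; the speedups are hypothetical examples.
for speedup in (1.5, 1.9, 2.0):
    ratio = perf_per_dollar_ratio(600, 1200, speedup)
    print(f"{speedup:.1f}x faster -> {ratio:.2f}x the performance per dollar")
```

Only a speedup of 2x or more at double the price would break even on value.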

Usually things move FORWARD in a new generation.

I don't get why people compare the 1080 Ti to the 2080 Ti...

The 1080 Ti should be compared to the 2080 that replaces it, and the 2080 Ti should be compared to whatever Titan it replaces... Then the jump in performance wouldn't be "45%"-ish.

I'm sure glad I'm not cynical about this sort of thing. I wonder what anyone has to gain from lying about the number of FPS? I don't get it.

Uh, they are. You're not even looking at things like DLSS and ray tracing, and that doesn't even START on the other tech that's new in these things. Sure, in older games what you said is the case, but in new stuff that uses the new tech it'll be more like 70 to 100% faster. But you know, shit on it because it's not a faster horse.

This is VERY much like the GeForce 2 days. I bet on the faster-horse model back then, and it turned out T&L was where everything went.

DLSS is still a question mark... will it be better than TAA and SMAA? With all the mix-and-match AA options plus ReShade etc., I don't see DLSS being that big of a deal... SSAA and MSAA will still be the best overall AA (but with a major performance hit).

So desperate to believe everything Nvidia has said.

Even the 2080 ti isn't going to hold up well in the YEARS it will take for RT to be more than a toy for Nvidia to show off. I don't expect to see tons and tons of games add RT support.

I don't listen to anything that Nvidia says... but developers?

Yeah, their words carry weight.

Devs often paid by one company to implement their version of GPU tech?

I listen to nothing but real-world facts; you would be doing yourself a favor if you did the same.

Every major engine has it, and dozens of high-profile games are releasing with it.

Performance is still a bit of a question, but the fabled '1080p60' misquote being pushed around looks to be a performance floor; other titles are using it at 4k, and the quoted developer said that 1440p60 would be easy using known optimizations that they hadn't gotten around to.
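The resolution targets mentioned above (1080p60, 1440p60, 4K) can be compared by raw pixel throughput. A rough sketch, assuming the standard 16:9 pixel dimensions for each name and treating pixels per second as a crude proxy for rendering load; real ray-tracing cost also depends on rays per pixel, denoising, and scene complexity, so these ratios are not performance predictions.

```python
# Standard 16:9 pixel dimensions for the resolution names used in the thread.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixels_per_second(name, fps):
    """Number of pixels that must be produced each second at a given frame rate."""
    width, height = RESOLUTIONS[name]
    return width * height * fps


base = pixels_per_second("1080p", 60)
for name in RESOLUTIONS:
    ratio = pixels_per_second(name, 60) / base
    print(f"{name}@60: {ratio:.2f}x the pixels per second of 1080p60")
```

Going from 1080p60 to 1440p60 means roughly 1.78x as many pixels per second, and 4K60 means 4x.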

Remember that ray tracing isn't new, it's something that the industry (not just Nvidia!) has been working toward for years.

Been saying this since the RTX was announced, but I think in 4-5 years we'll be seeing it all over. As soon as consoles support it, ray tracing will be huge.

I read that RT is rendered at a lower res and needs strong AA to look OK. Eleven games have been announced with RT support. It seems like, for now, most games that are adding RTX features are focusing on DLSS. Which makes sense; DLSS is probably much, much easier to implement on short notice.

An easy way is to check reviews using Vega cards, not old results but new benchmarks.

Vega 64 used to trade blows with 1080, now it will trade blows with 1080Ti

You're kidding, right?

Interesting that lower-quality YUV 4:2:2 is slower than RGB.
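The "lower quality" part refers to chroma subsampling: 4:2:2 stores less color information per pixel than full RGB (equivalent in data to 4:4:4). A minimal sketch of the standard J:a:b bookkeeping, assuming 8 bits per sample; it only shows how much data each format carries and says nothing about why one ran slower in the benchmark.

```python
def bits_per_pixel(j, a, b, bit_depth=8):
    """Average bits per pixel for J:a:b chroma subsampling.

    J:a:b describes a region J pixels wide and 2 rows tall. Every pixel has a
    luma (Y) sample; each of the two chroma channels (Cb, Cr) has `a` samples
    in the first row and `b` samples in the second row.
    """
    luma_samples = 2 * j
    chroma_samples = 2 * (a + b)  # Cb and Cr together
    return bit_depth * (luma_samples + chroma_samples) / (2 * j)


for scheme in [(4, 4, 4), (4, 2, 2), (4, 2, 0)]:
    print("%d:%d:%d" % scheme, bits_per_pixel(*scheme), "bits per pixel at 8-bit")
```

At 8 bits per sample, 4:4:4 (like 8-bit RGB) needs 24 bits per pixel, 4:2:2 needs 16, and 4:2:0 needs 12.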

Definitely, and the great part about ray tracing is that it's more parallel than rasterization, so SLI/CF works better with less hassle. I think that's part of why AMD is pushing to adopt PCI-E 4.0 quickly for both its next-gen GPUs and motherboards. PCI-E 4.0 doubles the bandwidth per lane, so you could cut latency in half, and CF over PCI-E 4.0 x16 would perform as well as PCI-E 3.0 x16 does now in terms of latency, with twice the horsepower. On top of that, multi-card scaling was never a serious issue with ray tracing anyway. They could pump out a 3-slot, quad-core Vega 56-derived PCI-E 4.0 x16 GPU if they wanted. It would do traditional ray tracing like a monster, all without any proprietary gimmickry. Vega was already great at standard ray tracing... ray tracing is the future, but the present is still rasterization.
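For reference, PCI-E 4.0 keeps the same lane counts as 3.0 and doubles the per-lane signaling rate (8 GT/s to 16 GT/s), with both generations using 128b/130b encoding. A small sketch of the theoretical one-direction bandwidth for an x16 slot; it ignores packet and protocol overhead, so real throughput is lower, and it measures bandwidth only, not latency.

```python
# Per-lane signaling rates in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
GENERATIONS = {"PCIe 3.0": 8.0, "PCIe 4.0": 16.0}
ENCODING_EFFICIENCY = 128 / 130


def bandwidth_gb_s(gt_per_s, lanes):
    """Theoretical one-direction bandwidth in GB/s, ignoring protocol overhead."""
    bits_per_s = gt_per_s * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9  # bits -> bytes -> gigabytes


for gen, rate in GENERATIONS.items():
    print(f"{gen} x16: {bandwidth_gb_s(rate, 16):.2f} GB/s")
```

This gives roughly 15.75 GB/s for PCIe 3.0 x16 and 31.51 GB/s for PCIe 4.0 x16.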


Just saying: Nvidia oversells its new-gen cards by using different benchmark methods that put them way ahead of the old gen, but the results are misleading. The sad part is that most reviewers don't challenge it, and they use old data for the other cards added to the slides.

So if any reviewer actually benches the 10 series, Vega, and the 20 series under the same methodology Nvidia asks them to use for the new gen, I'm confident you will see Vega cards suddenly look much faster relative to the 10 series than what we've seen these past couple of years.


[H] did a pretty extensive review showing that both “fine wine” and Nvidia gimping old cards via drivers didn’t hold water. People hear this nonsense once and it keeps getting repeated. It seems the general populace just loves conspiracy theories and a villain.

https://m.hardocp.com/article/2017/02/08/nvidia_video_card_driver_performance_review/13

Yet I'm pretty sure benching these cards the way Nvidia will recommend reviewers do it (4K + HDR) will inherently put the Vega 56 above 1080 level, and the Vega 64 very close to the 1080 Ti.

Deny all you want, but that is what's going to happen; it's just that the initial reviews won't show it.

I wasn't saying it's fishy. I just said the guidelines Nvidia sends to reviewers will put the 10 series in a bad light and the 20 series in a better one, increasing a performance gap that 99% of gamers won't experience. One way to verify that is to include up-to-date Vega cards in the reviews: if Vega gains ground over the 10 series compared to what we've seen these last couple of years, then I'm right... and you know I'm right!

Ok, well come read the [H] review then. They didn’t sign the NDA, at cost to themselves, so they had no restraints.

And using HDR isn’t anything fishy... it’s a tech that is getting way more popular.

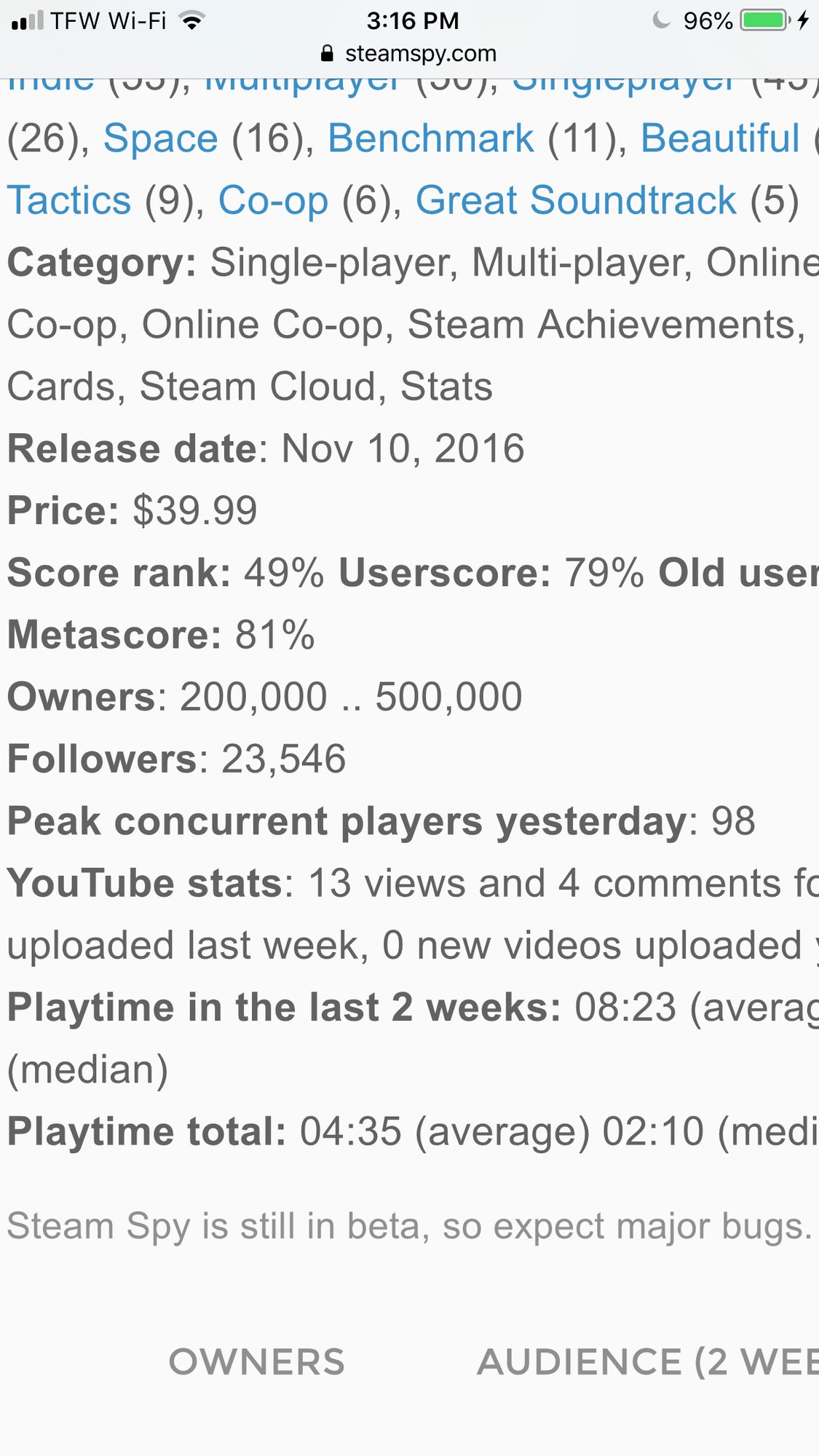

True, but it's 14 games that are relevant to most gamers, so that is worth considering. It's not something obscure that nobody will ever use, like AotS.

The 1080 Ti is within 5% of the Titan Xp. They are the same card.

Titan V

Not a gaming card.

Are any of the Titans?

while still giving AA, at something like 4K you would need a panel larger than 35 inches to see the pixels that create the jagged edges full-screen AA is meant to fix.
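The panel-size argument is about pixel density. A minimal sketch that computes pixels per inch (PPI) for a 3840x2160 panel at a few diagonal sizes; the 35-inch threshold is the poster's claim, and whether individual pixels are visible also depends on viewing distance and eyesight, which this calculation ignores.

```python
import math


def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch: diagonal resolution in pixels divided by diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


for diagonal in (27, 32, 35, 40):
    print(f'4K at {diagonal}": {ppi(3840, 2160, diagonal):.0f} PPI')
```

A 4K panel works out to about 163 PPI at 27 inches and about 126 PPI at 35 inches, so larger panels of the same resolution make each pixel, and each jagged edge, physically bigger.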

I agree with your first point. I trust Nvidia less than most tech companies, but this is a broad suite of current games compared apples to apples. It's probably a reliable display of the differences between the cards.

As for your offhand dig at Ashes of the Singularity: this is an incredibly good game; the most modern RTS out there with great depth, astounding graphics and game mechanics, and infinite replayability. I have hundreds of hours into it and look forward to many more. In my book it's better than 99.999% of the games out there. My GTX 1060 runs it just fine.

In fact it's laughable how hung up people are on GPU performance when there is so very, very little that's even worth playing. And quite a few of the good games would run on an iGPU.