StoleMyOwnCar

2[H]4U

- Joined: Sep 30, 2013
- Messages: 2,995

Can you imagine the confusion of keeping the same leading number (say it had been DLSS 2.2 or 2.3) but not making it available to the previous generation of cards?

It seems like a massive no-brainer for something as big as a new interframe generator and new tracking of scene elements that have no motion vectors to get a new number. That doesn't seem any smaller a change, if smaller at all, than going from 1 to 2 with the switch from specialised to generalised machine learning.

I would even go so far as to say that if you decide not to make it available on the previous generation of cards, you have almost no choice but to change the name, or at least the number, so that games with the little sticker don't confuse buyers.

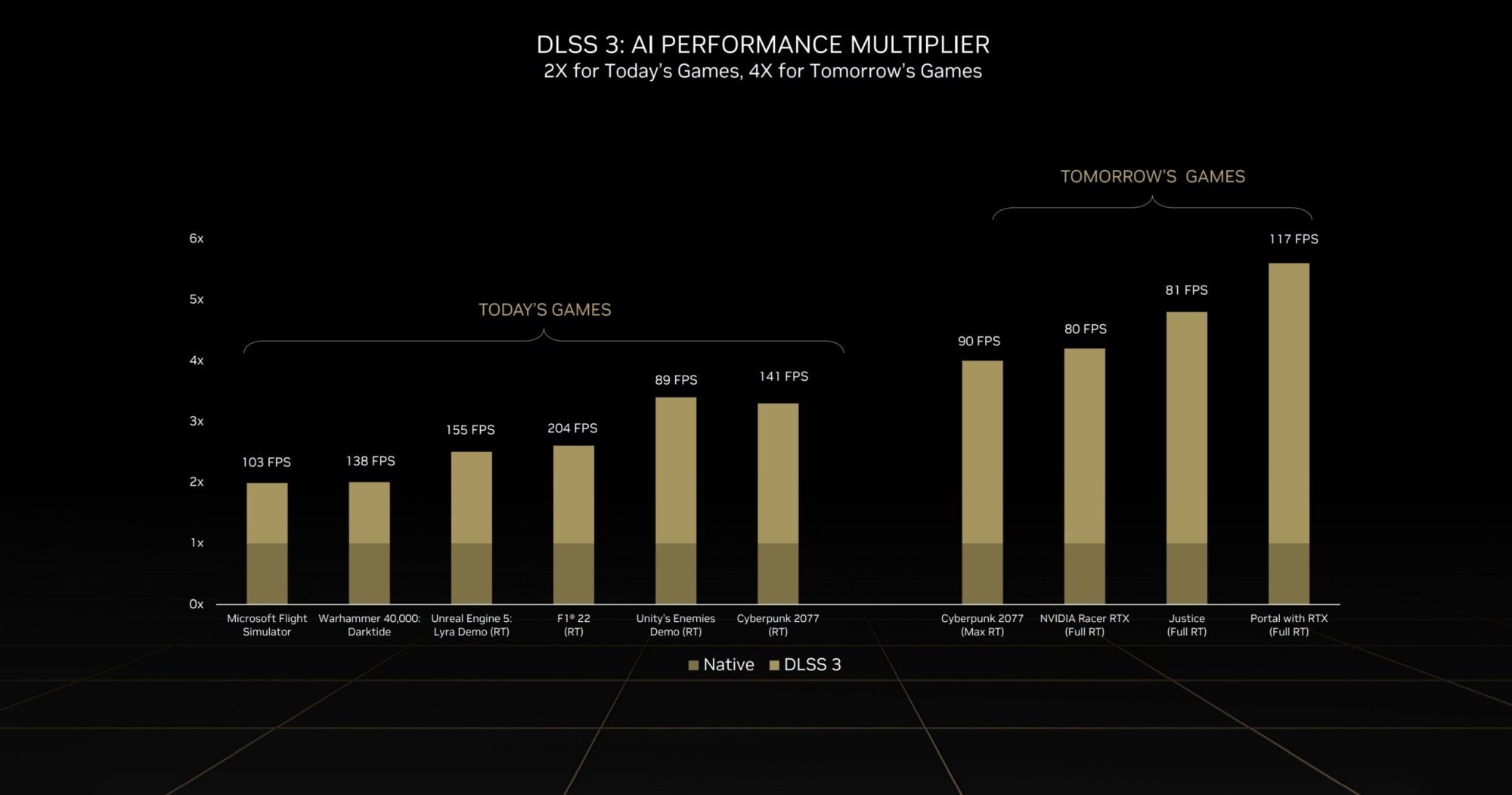

I think the reasons are a little simpler than all that: there are a lot of Nvidia 30 series GPUs floating around at bottom-of-the-bin prices (and if you include secondhand, it's even worse). One of their most purchased (and, from a warranty/longevity standpoint, most trusted) AIB partners also just left the game entirely, and we don't know what the others are thinking. Nvidia seems to be scrambling to make this generation look like a worthy upgrade for gamers, so how are they going to do that with "Uh, this is DLSS...2.5...ish"?

I know it's standard fare to hype up every generation, but it will be interesting to see how Nvidia tries to make upgrading seem worthwhile when current-gen cards can be bought at lower and lower prices and can play basically anything on the gaming market at way more than sufficient framerates and image quality, especially at 1440p ultrawide (or similar) resolutions. It's actually entertaining to me; I would enjoy seeing them scramble a bit more.
