defaultluser

[H]F Junkie

- Joined

- Jan 14, 2006

- Messages

- 14,398

that's not awesome

What did you want? The ungodly performance increases will have to wait for an even larger part. You can't get a new process node plus super-size plus GDDR6X for $1500.

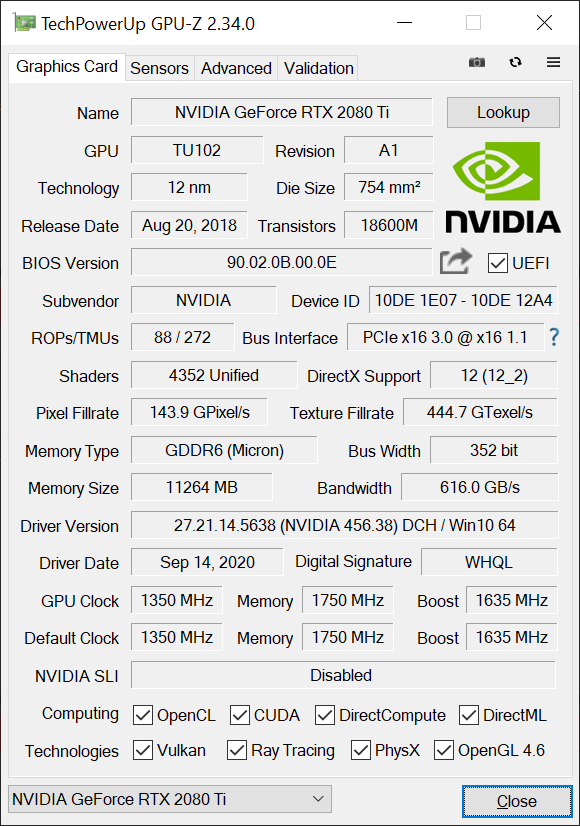

NVIDIA kept their 1080 Ti die just under 471 mm², while the 3090 is a PORKER with a die size of 628 mm² (on an even costlier process node than 14nm).
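For anyone who wants the size gap as a number, here's a quick sketch using only the two die areas quoted above. The variable and function names are just made up for illustration, and it says nothing about cost per mm², which depends on the process node and yields. It works out to roughly a third more silicon.

```python
# Die areas in mm², taken from the figures quoted in this post.
die_1080ti_mm2 = 471.0
die_3090_mm2 = 628.0


def area_ratio(new_mm2, old_mm2):
    """Return how many times larger the new die is than the old one."""
    return new_mm2 / old_mm2


ratio = area_ratio(die_3090_mm2, die_1080ti_mm2)
extra_percent = (ratio - 1) * 100

print(f"3090 die is {ratio:.2f}x the 1080 Ti die ({extra_percent:.0f}% more silicon)")
```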

This is just a nomenclature change; the total performance increase over the 2080 Ti is still 50% (well above what the naysayers were saying). Unfortunately, they're using twice the density for cutting-edge memory parts, which adds even more expense.
