drescherjm

[H]F Junkie

- Joined: Nov 19, 2008

- Messages: 14,941

I’m actually looking for another M.2 slot to put my second SSD

Can't you use a $20 PCIe slot adapter?

AMD opened with Ryzen proto and we got decently priced 8-core competition. I fully expect at some point Intel will respond to consumer 12 and 16 thread parts.

AMD allegedly had 8-core CPUs on the consumer market even before Zen...

Zen+ was +250 MHz over Zen, and Zen 2 is +350 MHz over Zen+. Extrapolate one more generation and you have ~5GHz boost.

1600X: 4.0GHz

2600X: 4.2GHz

3600X: 4.4GHz

1800X: 4.0GHz

2700X: 4.3GHz

3800X: 4.5GHz

It is a bit difficult to believe that 4600X and 4800X will hit 5GHz.
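For anyone who wants to check the arithmetic, here is a rough sketch of the extrapolation using only the boost clocks listed above. The straight-line "average gain per generation" model and the `x600X`/`x800X` labels are my own assumptions for illustration, not anything AMD has stated.

```python
# Boost clocks in MHz, copied from the list above (one entry per generation).
# Integers are used so the per-generation steps come out exact.
boost_mhz = {
    "x600X": [4000, 4200, 4400],  # 1600X, 2600X, 3600X
    "x800X": [4000, 4300, 4500],  # 1800X, 2700X, 3800X
}


def extrapolate_next(clocks):
    """Naive linear guess: last clock plus the average per-generation gain."""
    avg_step = (clocks[-1] - clocks[0]) / (len(clocks) - 1)
    return clocks[-1] + avg_step


for line, clocks in boost_mhz.items():
    # Generation-over-generation gains, e.g. [200, 200] for the x600X line.
    steps = [b - a for a, b in zip(clocks, clocks[1:])]
    print(line, "steps (MHz):", steps, "naive next gen (MHz):", extrapolate_next(clocks))
```

With these numbers the per-generation gains are 200 to 300 MHz, and the naive guess for the next parts is about 4.6 GHz and 4.75 GHz, so still short of 5GHz.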

8 cores that, even when all fully utilized, could barely match a 4c/4t 2500k. And on single thread it was about half as fast. As far as I'm concerned, the only CPU in x86 history to be worse than Bulldozer was the early Pentium with the FDIV bug. Even the P4 at least had some strengths relative to its competition.

Sandy Bridge was a competitor by association. Bulldozer could not beat Nehalem.

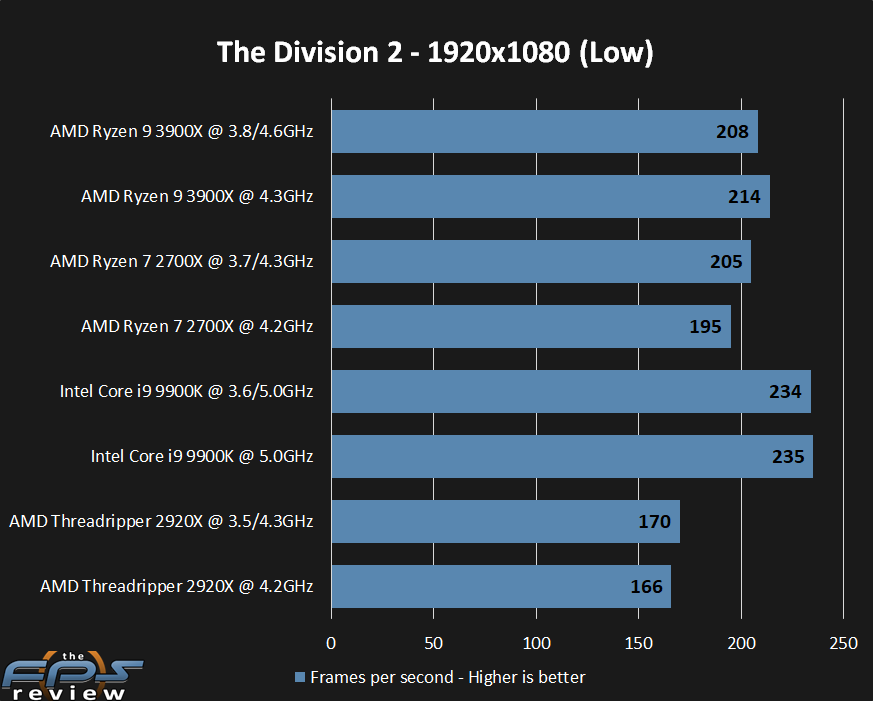

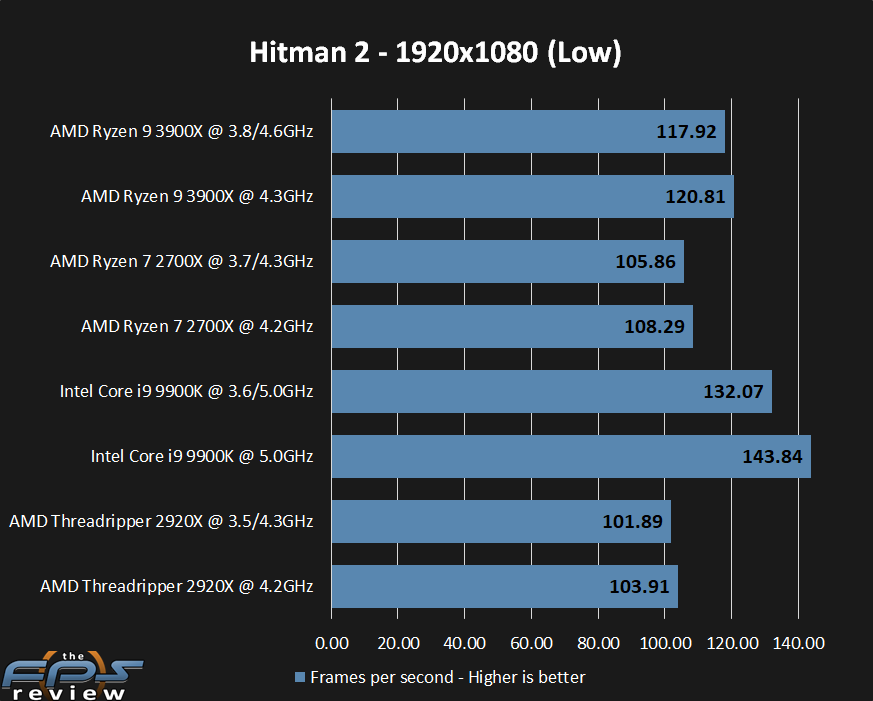

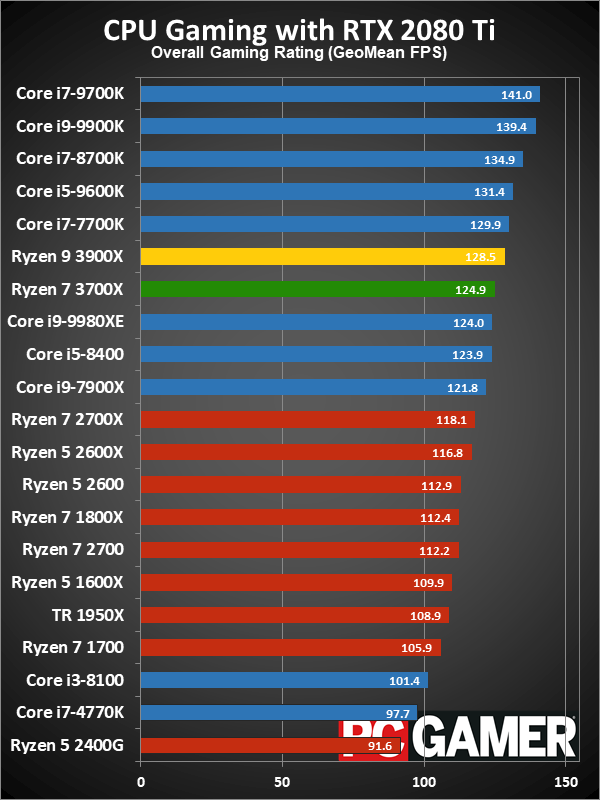

Enjoy your performance advantage at 720p/1080p

I just finished initial testing of my superior 720p gaming CPU, still without a GPU.

Northwood with some L3 cache (as in the P4 Extreme Edition) was a pretty good CPU, and they should have just shrunk it rather than increasing pipeline depth even more.

720p testing today is a good measurement of 1440p gaming tomorrow with a better GPU.

But it’s not tomorrow. It’s today, and when the tomorrow you’re referencing comes, the systems we are all talking about now will be as relevant as a Q6600 is today.

Suffice it to say, we have two vendors to choose from. Both are quite good at everything, with each one excelling at a certain workload or another.

That WAS the way it was, yes. For the most part it no longer is, outside of some very niche stuff using AVX or something. AMD has a pretty clean sweep with 3000. Intel isn't really better at anything, unless people are seriously considering a 1-2% win at 720p in a few games a real win. AMD destroys Intel across the board. That hasn't been true in a long, long time.

AMD destroys Intel across the board.

720p testing today is a good measurement of 1440p gaming tomorrow with a better GPU.

This is the conventional wisdom, but parallelism throws a monkey in this wrench. Will gaming tomorrow take more advantage of parallelism and thus more cores/threads? Probably, though to what extent I don't know. Will that offset (to some unknown limited degree) single thread performance deficiency?

Core count will matter more in the future, that is for sure.

Will it be one year? Two? Three?

In three years' time, I guess single-core performance of new processors will be so much better than that of current ones that upgrading will be preferable to keeping an older system anyway.

The AM4 platform is in a good position: even if no new Zen architecture comes to it, there will still be the 3950X (or the 3900X for those who bought a weaker CPU), and it generally supports PCI-E 4.0, so an upgrade will be possible.

So it is simply the more future-proof platform, and there should be no doubt about it...

Intel is more of a "now" solution for people who want the fastest single-core and gaming performance available, period.

Besides, I doubt there will be any significant market for custom-built Intel rigs anymore. Most people who build PCs themselves will get AMD; it is the most obvious choice.

Only die-hard Intel fanboys will get Intel until they bring something considerably better, like 10nm with that 15% IPC improvement and significantly more cores.

Intel will still be used in PCs that companies mass-sell to corporations. They have their funny iGPU thing, and no matter how you slice it, that is an important feature for that kind of computer. AND that is besides Intel doing its Intel thing, which makes these companies choose Intel...

Maybe not less single-threaded, but there might be more physics in future games.

We can take decent stabs at it with a bit of logic.

Right now, due to consoles going wider vs. faster, and clockspeeds being limited for almost a decade, we can reasonably assume that anything that can be easily parallelized has been already. We can then reasonably assume that games aren't going to get less single-thread dependent without some major rethink to the level of entirely new paradigms of software development coming into play.

What we can't really know is whether future additions to game logic are going to be more single-thread dependent, or less. We can expect a bit of both, most likely, but addressing the question means making a solid prediction as to the mix.

The best prediction that we can make is that single-thread performance will not become less important for gaming in the foreseeable future. That doesn't really put anyone at a disadvantage any more than they are today.
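To put rough numbers on the single-thread vs. more-cores argument, here is a small sketch using Amdahl's law, which caps the speedup from extra cores by the share of work that has to stay serial. Nobody in this thread has measured how parallel any real game is, so the parallel fractions below are made up purely for illustration.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical parallel fractions, NOT measurements of any actual game.
for p in (0.5, 0.8, 0.95):
    speedups = [round(amdahl_speedup(p, n), 2) for n in (4, 8, 16)]
    print(f"parallel fraction {p}: speedup on 4/8/16 cores = {speedups}")
```

The takeaway under these assumptions: unless most of the frame's work is parallel, piling on cores flattens out quickly, which is why single-thread speed keeps mattering.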

And also no clockspeed regression, or costing you 3 fingers for the 10 core. (Luckily, with AMD around, it will no longer cost a kidney, but Intel being Intel you can still expect to lose some body parts.)

I made a deal with myself: "one small meal a day (in the evening, so my body gets used to ketones at night and I am not hungry the next day) until the savings from not buying meals at work cover the difference between what I should be buying (3700x, 3600MHz DDR4) and what I bought (9900k, 4400MHz DDR4)"... and I am already losing body fat as we speak.

It could also go the other way. If single thread performance levels off, and developers want to add more features to their games and need the CPU horsepower... the only way they'll be able to get it is through parallelism.

I dunno man. That's why I say very clearly I don't know, and I don't take a side in that debate. I can argue it either way. We're at a weird place as we approach the silicon limits.

Do not worry, with TSMC/Samsung process node nomenclature we will get to 1nm in no time.

https://en.wikipedia.org/wiki/5_nanometer said: The 5 nm node was once assumed by some experts to be the end of Moore's Law.

X470 motherboards are generally cheaper, but not necessarily cheaper than Z390 motherboards. Otherwise, there is perfect platform cost parity. Of course you can point out how expensive X570 is, but there are cheap X570 motherboards as well. I probably wouldn't ever use one, but that's just me.

A comparable system to the 9900K with the same PCI-E speeds would be a 3700X on a 3xx or 4xx series chipset (though some mobos allegedly support PCI-E 4.0 on some ports).

The price advantage is obvious.

AMD simply wins this round. There should be no doubt about that!

Not that it is enough to convince die-hard Intel fanboys like myself to get the AMD platform <laughing madly while putting i9 9900K into Z390 mobo>

H370 + 9700K would be very similar to 3700x. No price advantage unless creativity is your use case or you can't 2-box for streaming.

3600x vs 9600k. Same scenario.

I would argue that for the first time since A64 we have a scenario where price isn't the deciding factor. You could save money all kinds of ways on both platforms. The decision this time is use case. Pure gaming vs general or mixed use.

Don't know if we even have a parallel for this situation, really.

Then again, I'm starting to feel old. All the old review sites are disappearing. Old forums, too, in many cases. Damned Youtube reviewers and all that jazz. Even PCs in general... so many people use their phones and don't even bother with a desktop (and in many cases, even a laptop) at home anymore.

The days of the desktop as standard are over. The desktop is now for people who do boatloads of work, or people who do boatloads of gaming (or both).

But it’s not tomorrow. It’s today, and when the tomorrow you’re referencing comes, the systems we are all talking about now will be as relevant as a Q6600 is today.

Unless people are seriously considering a 1-2% win at 720p in a few games a real win.

And by the time that “tomorrow” comes all these CPUs will be about as useful as a C2D is today. It will be a long time before 1440p at 60hz comes even close to being CPU limited. Even at 144hz we’re still hitting GPU limits and the small difference between Intel and AMD is negligible. Then there’s 4K.

This is the conventional wisdom, but parallelism throws a monkey in this wrench. Will gaming tomorrow take more advantage of parallelism and thus more cores/threads? Probably, though to what extent I don't know. Will that offset (to some unknown limited degree) single thread performance deficiency?

I don't know and can't quantify this, and neither can you.

Reality is that ancient four-core i7s continue to be valid for a gaming build today.

Only HT four-core. Games quickly evolved into taking advantage of 8 threads after the release of 8-thread consoles.

The same evolution will happen over the next few years, as the 2020 consoles will come with 8 cores and 16/24 threads.

One or two cores are reserved for the system in current consoles, so only six or seven cores are accessible to games. I guess future Zen-based consoles will do something similar.

One is reserved for other stuff, yeah, so 7 cores. I think with the 2020 consoles they are stripping the reservations for useless Kinect stuff etc., so it would be even less of an impact. But 1 thread out of 16 wouldn't be much anyway.

Well, people who bought into AM4 with a mid-range board from the past two generations will be saving quite a bit of money, and I would argue there is still at least another generation that will be compatible with many boards.

I had the money to go Intel, but chose not to because I knew AM4 would have a long life, and I've been rewarded for that choice. I'm moving to a 3800x now, but can still move to a 12 or 16 core if I need to in the next couple of years. (Next year possibly more, or at least another IPC gain.)

It's in this regard that Intel is still more expensive. Although I think moving forward Intel won't pull this BS now that AMD is in parity with them.
