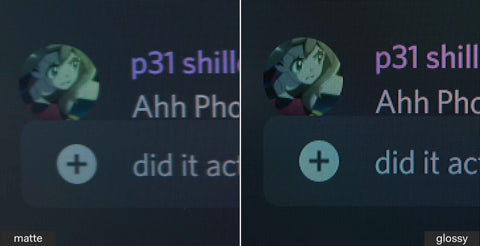

AwesomeOwl

Weaksauce

- Joined: Feb 15, 2011
- Messages: 88

> Funny, you are the one spreading misinformation while secretly shilling for OLED. I'm just doing the counterpart job.

Where did I spread misinformation? And oh no, my covert operation has been blown. My between-the-lines, super-secret shilling that no one else has noticed has been exposed!

> I know how this works: you pull out dual-layer LCD as an excuse to claim FALD LCD is not accurate enough for HDR grading, but you don't say anything about OLED being even worse in this regard. FALD is already used for professional HDR1000 grading, while OLED isn't.

I don't say anything about OLED because it's not a competition between OLED and FALD. It's you who is constantly crying about how OLED is worse. Apple makes similar claims for their Pro Display XDR, btw. Doesn't make them true. They will say whatever it takes to sell their product, and since their monitors do well on test slides, they can get away with it.

https://support.apple.com/guide/fin...r-video-with-pro-display-xdr-ver27c9f61bc/mac

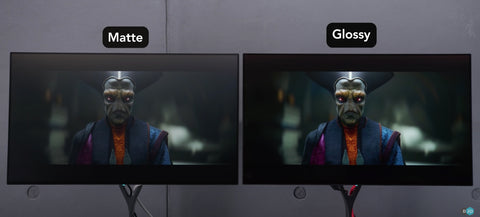

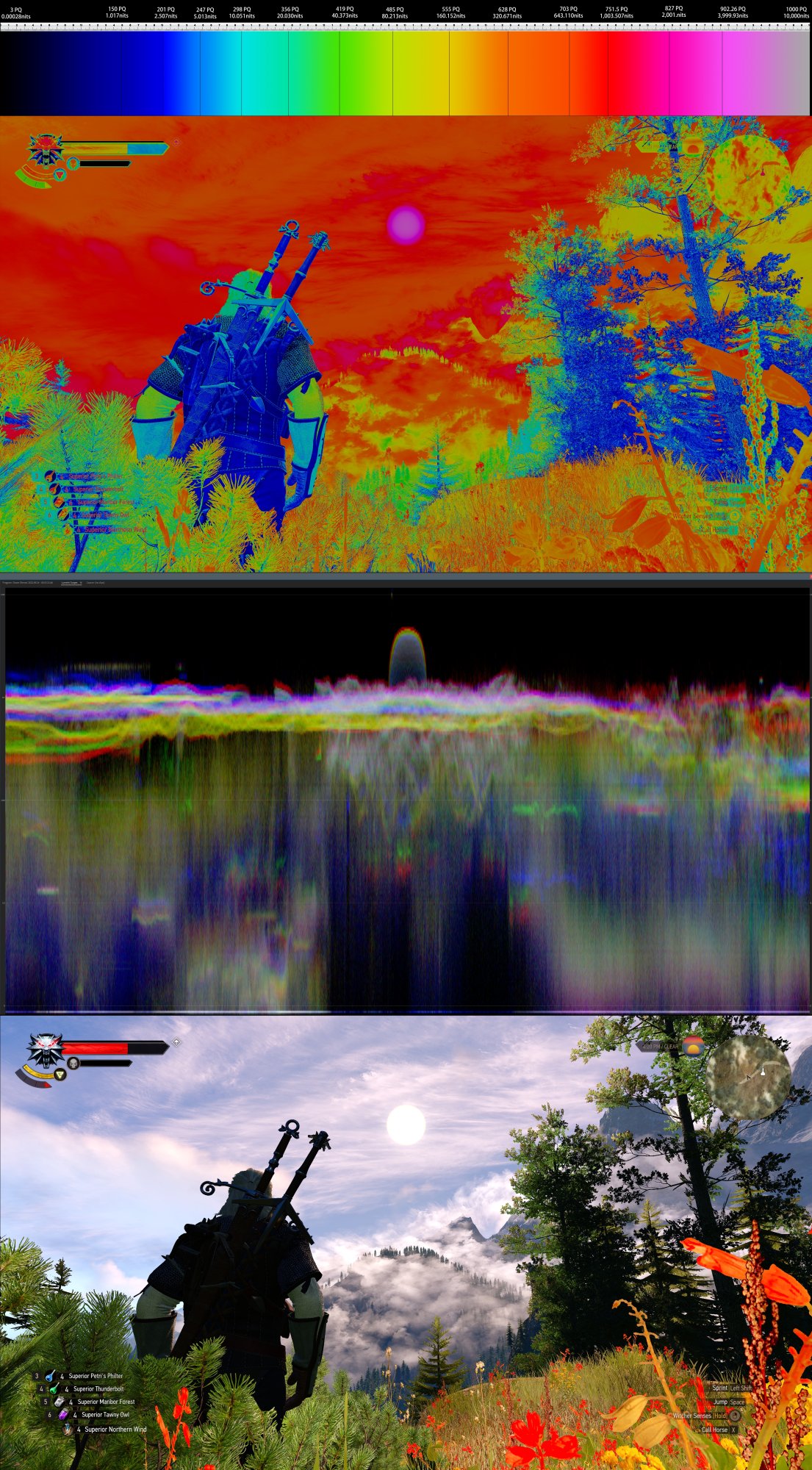

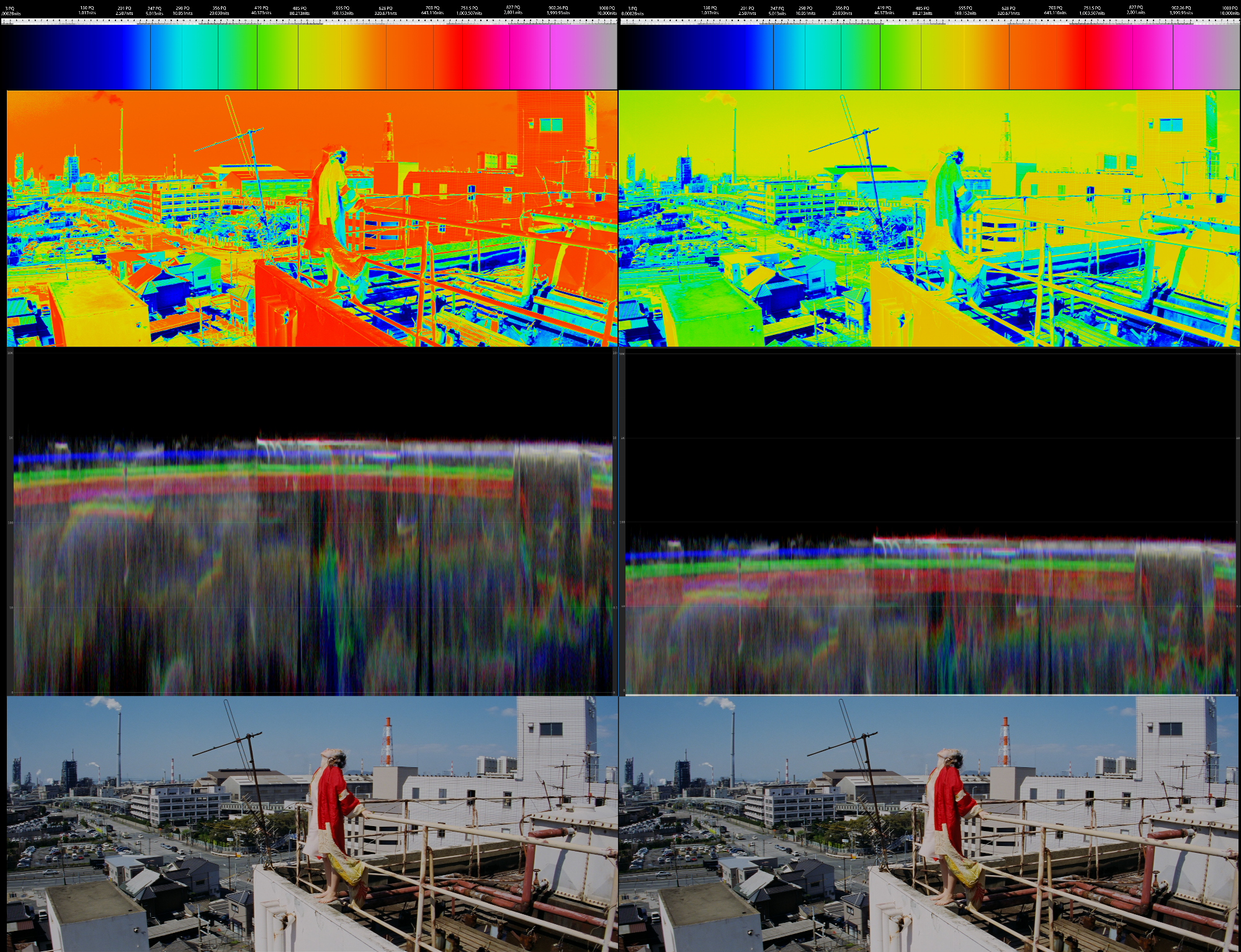

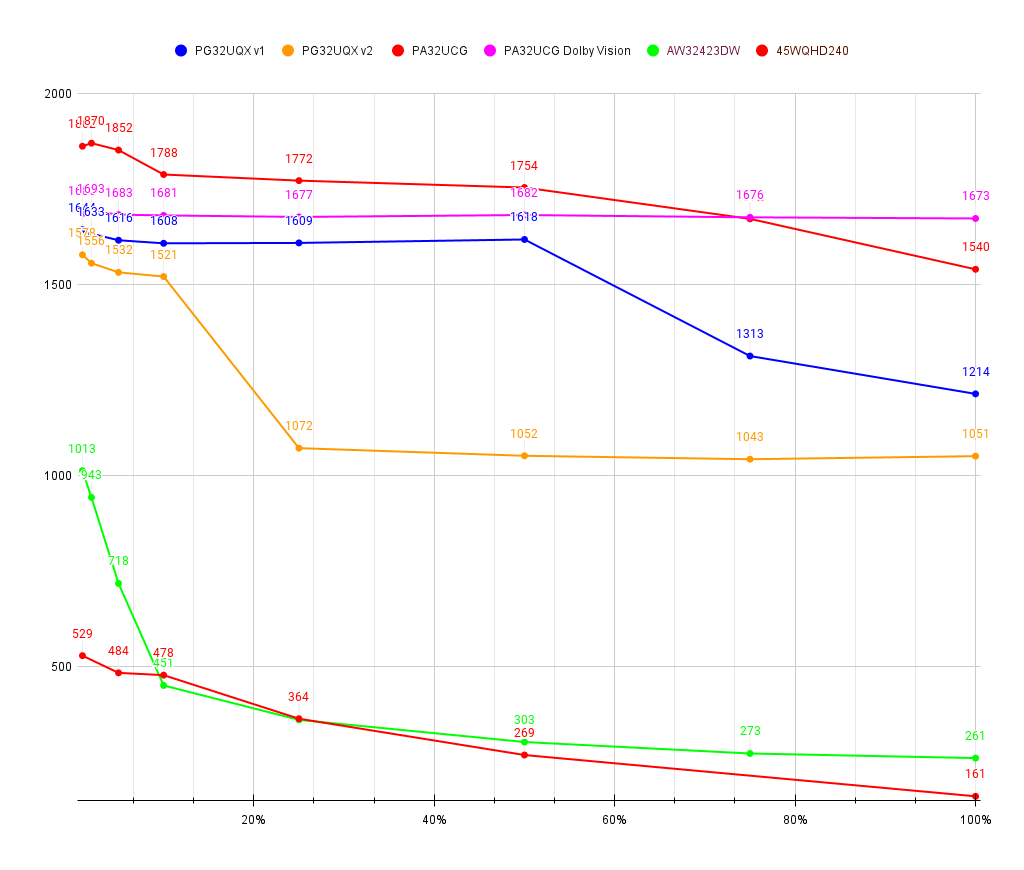

> You claim OLED has better accuracy in a low range that doesn't cover HDR. The truth is that OLED has worse accuracy than FALD in the high range where HDR matters, so FALD is more accurate than OLED in the HDR range.

Yawn. OLED is accurate as long as the material does not trigger ABL or hit peak brightness. You can't dispute that. Yes, you can act like a baby and say dumb stuff like "B-B-But that's t-totally not REAL HDR". FALD is not accurate in any range.

> You claim nobody uses FALD to grade HDR. I just showed you that there are professionals who use FALD to grade HDR1000, while nobody uses a 200-nit OLED for HDR1000.

You didn't, but you sure lie a lot. You showed one who uses it "somewhere in the production phase but not for critical finishing" and one random Chinese YouTuber.

> Then you claim that anyone who uses FALD to grade HDR is an idiot, while the truth is that some of the best HDR videos out there are made on FALD.

Yawn.

> You've claimed nothing. Your claims don't stand a chance.

These statements are incredibly contradictory...

> Rarely before has a troll been fed this much and persisted as long. Nor has a single point been uselessly rehashed so many times.

I really wouldn't call him a troll. If he is, it is surely the most extensive and bizarre case I have ever seen. I just think he is a little... different.

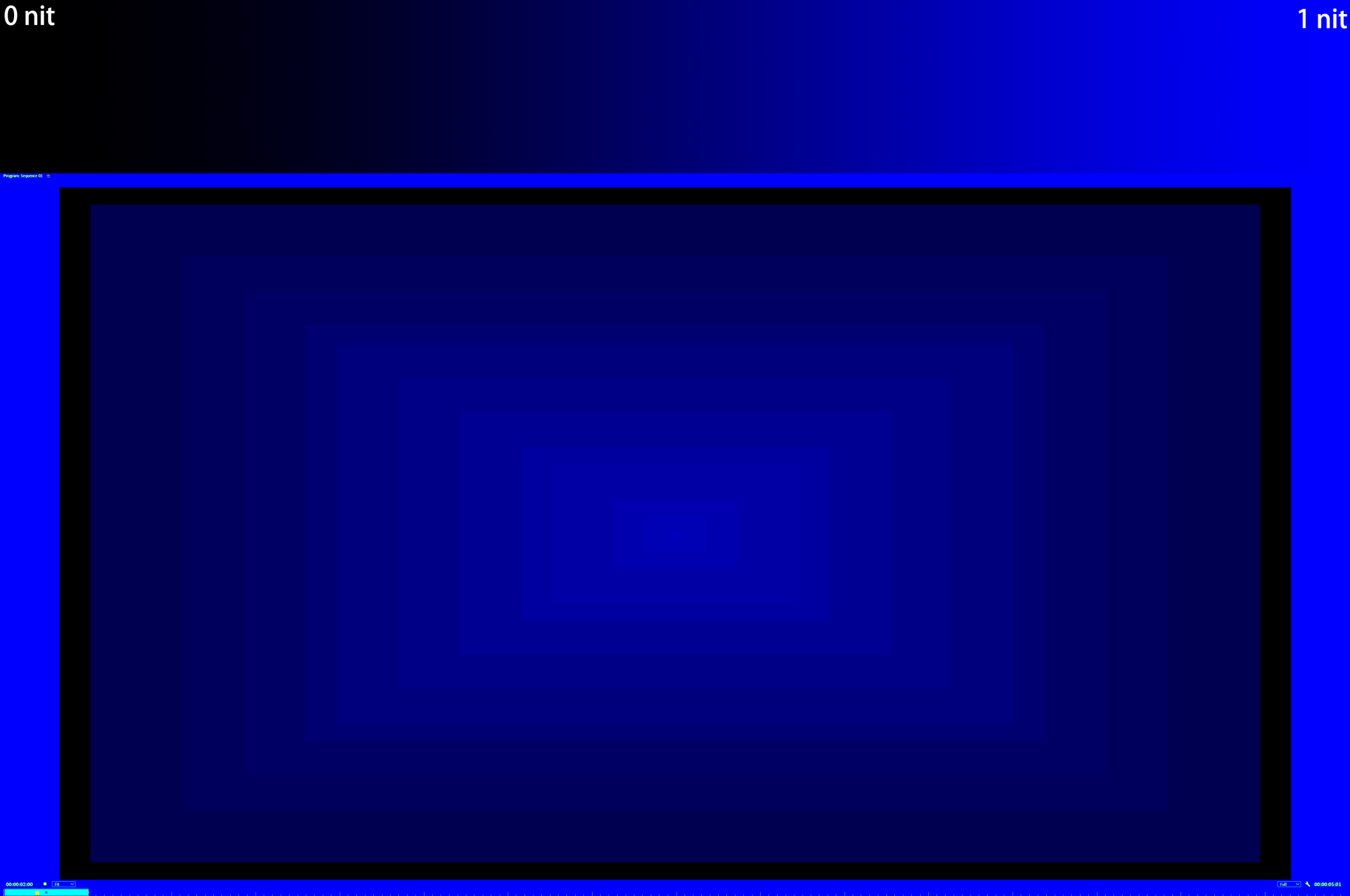

> The inaccuracy of FALD is confined to the pixels in a limited number of dimming zones. The inaccuracy of OLED is in all the pixels hit by ABL. Once ABL kicks in, all the pixels lose brightness, which makes the HDR presentation less accurate than FALD. If you don't get the brightness, you don't get accurate color either. This is why OLED is the bigger tradeoff, and why FALD is used to grade HDR1000 while a 200-nit OLED isn't.

All pixels are in dimming zones. All pixels are affected, unless we're talking about large uniform test slides.

No one serious would ever use FALD to grade anything.

> Prove me wrong: show that you can use a 200-nit OLED to grade HDR1000.

Yawn.

> I use a mini-LED every day and I have never, literally never, seen blooming in any type of mixed content. What I do see is an incredible, jaw-dropping picture every time, no matter what game, movie, or show I'm watching, from bright to dark.

Be happy with what you've got. All technologies have pretty significant flaws. If you don't see any now, then stop reading and enjoy your display.

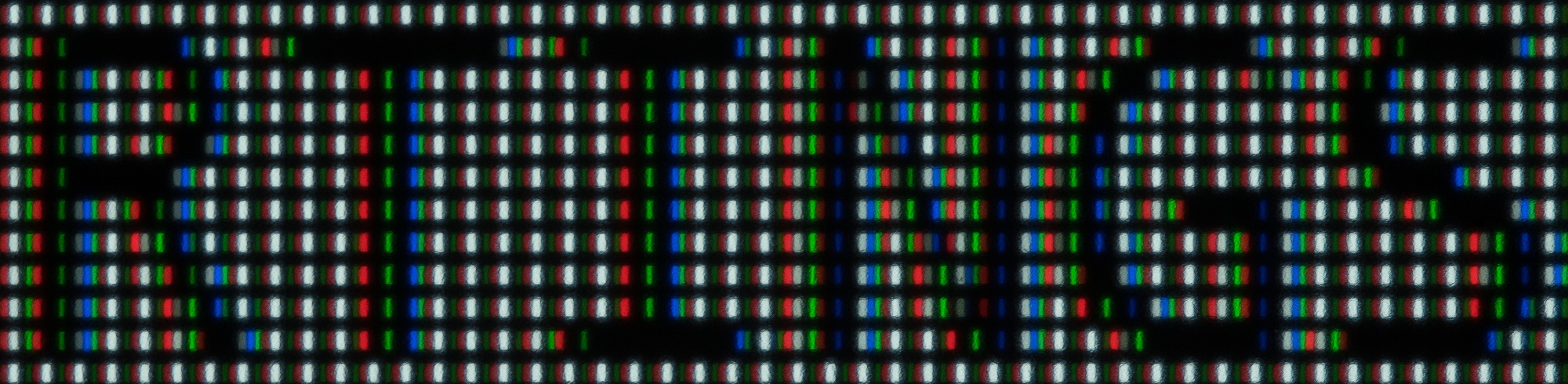

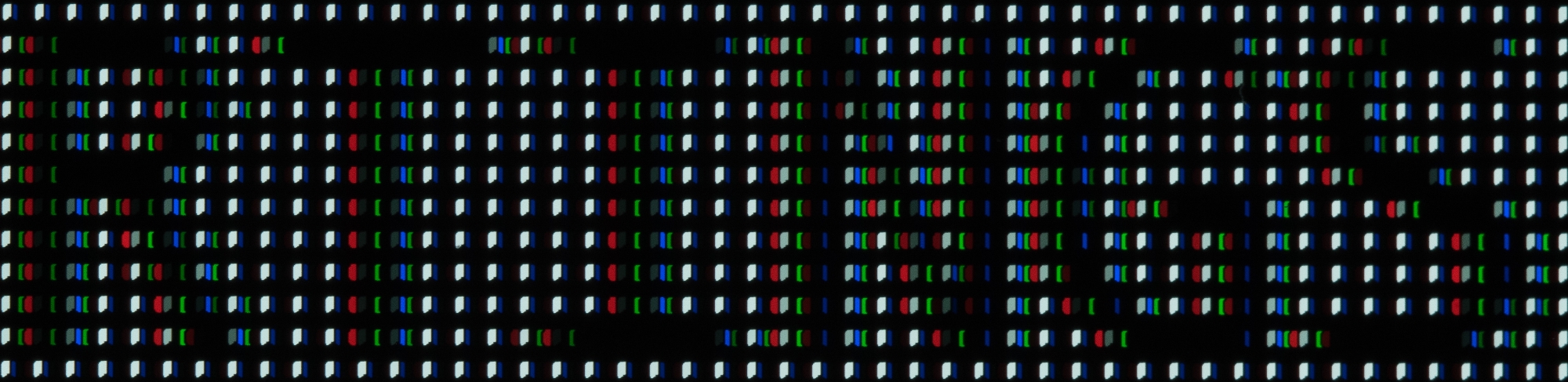

> Most FALD backlights have optimizations to reduce blooming, such as lowering brightness at smaller window sizes or lifting the whole screen a bit. Some manufacturers, such as AUO, label this Adaptive mini LED, or AmLED.

And that's one of the things that makes FALD displays inherently inaccurate.

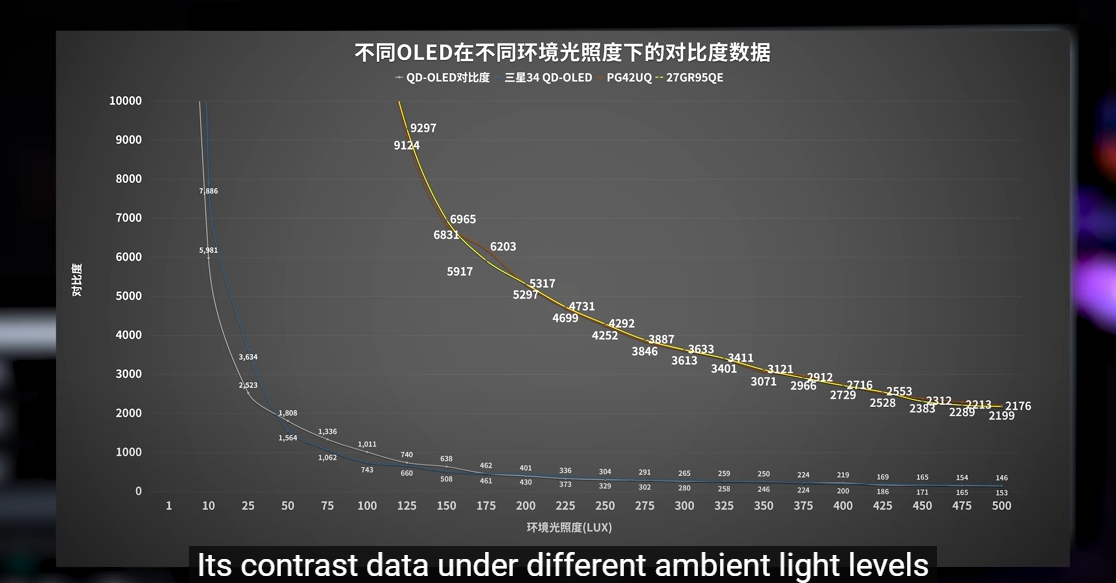

> Yet the contrast of FALD is still a lot higher than OLED in the HDR range, as OLED brightness can drop off sharply, leaving even less contrast and fewer colors. Basically, a 1000-nit sun against a 0.1-nit shadow on FALD becomes 1000 nits vs 0.11 nits, or 800 nits vs 0.1 nits. But on OLED it can be just 300 nits vs 0.1 nits.

Glad you suddenly agree that FALD is inherently inaccurate now. Really funny how you completely change the way you write when you're talking to someone who has already declared their love for a FALD display.
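The luminance pairs in the quoted post reduce to simple contrast ratios. A minimal sketch of that arithmetic, assuming contrast is taken as highlight luminance divided by shadow luminance; the `contrast_ratio` helper and the scenario labels are hypothetical names for illustration, and the nit values are the post's illustrative figures, not measurements of any display:

```python
# Contrast ratio = highlight luminance / shadow luminance (both in nits, cd/m^2).
# Hypothetical scenario labels; the nit pairs are the illustrative figures
# quoted in the post, not measurements of any particular display.
SCENARIOS = {
    "FALD, ideal": (1000.0, 0.10),
    "FALD, shadow lifted by blooming": (1000.0, 0.11),
    "FALD, highlight dimmed to limit blooming": (800.0, 0.10),
    "OLED, highlight limited by ABL": (300.0, 0.10),
}


def contrast_ratio(highlight_nits: float, shadow_nits: float) -> float:
    """Return the simultaneous contrast ratio highlight:shadow."""
    if shadow_nits <= 0:
        # A true zero black would make the ratio infinite, so reject it here.
        raise ValueError("shadow luminance must be positive for a finite ratio")
    return highlight_nits / shadow_nits


if __name__ == "__main__":
    for name, (highlight, shadow) in SCENARIOS.items():
        # Format with thousands separators and no decimals, e.g. "10,000:1".
        print(f"{name}: {contrast_ratio(highlight, shadow):,.0f}:1")
```

Run as a script, it prints 10,000:1, 9,091:1, 8,000:1 and 3,000:1 for the four cases. It only restates the post's numbers as ratios and says nothing about color volume or how a given display actually tone-maps.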

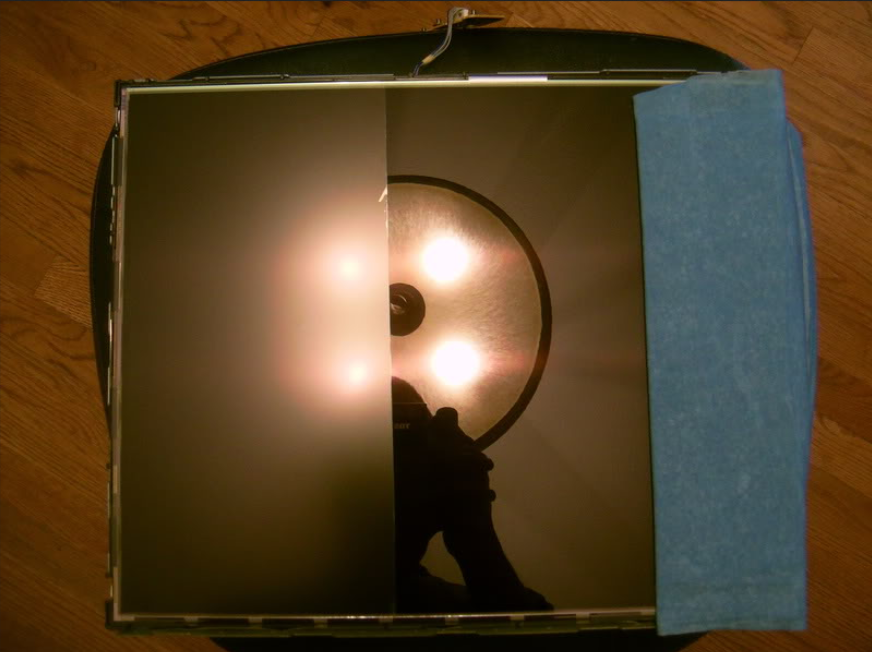

> A professional FALD monitor is accurate in brightness at any window size, even though the blooming is more visible. That alone shows how important brightness is in HDR. A better HDR monitor is the one with more accurate brightness, despite more blooming in a limited number of zones. Dimming zone counts will increase over time.

LOL. A "professional" FALD display may be accurate on test slides, but it is never accurate with actual content. Dimming zones and algorithms make it impossible. evln has made detailed explanations that are backed up by actual external sources, and you even just said it yourself.
