kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

> and 50% more accurate at displaying HDR content.

No, they are less accurate than you thought when ABL hits hard.


> No, they are less accurate than you thought when ABL hits hard.

Got any kind of review, data, or proof to back what you're saying?

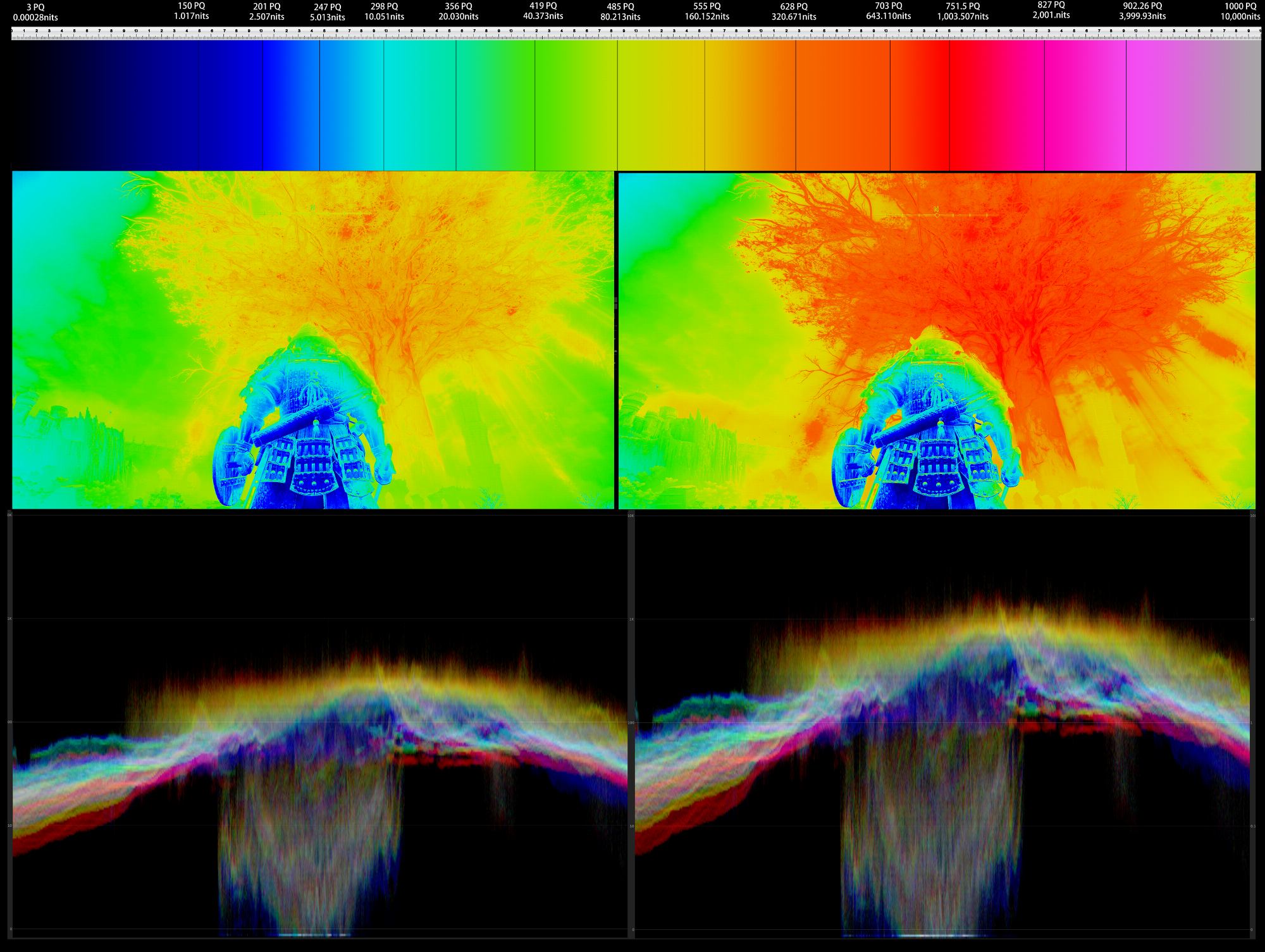

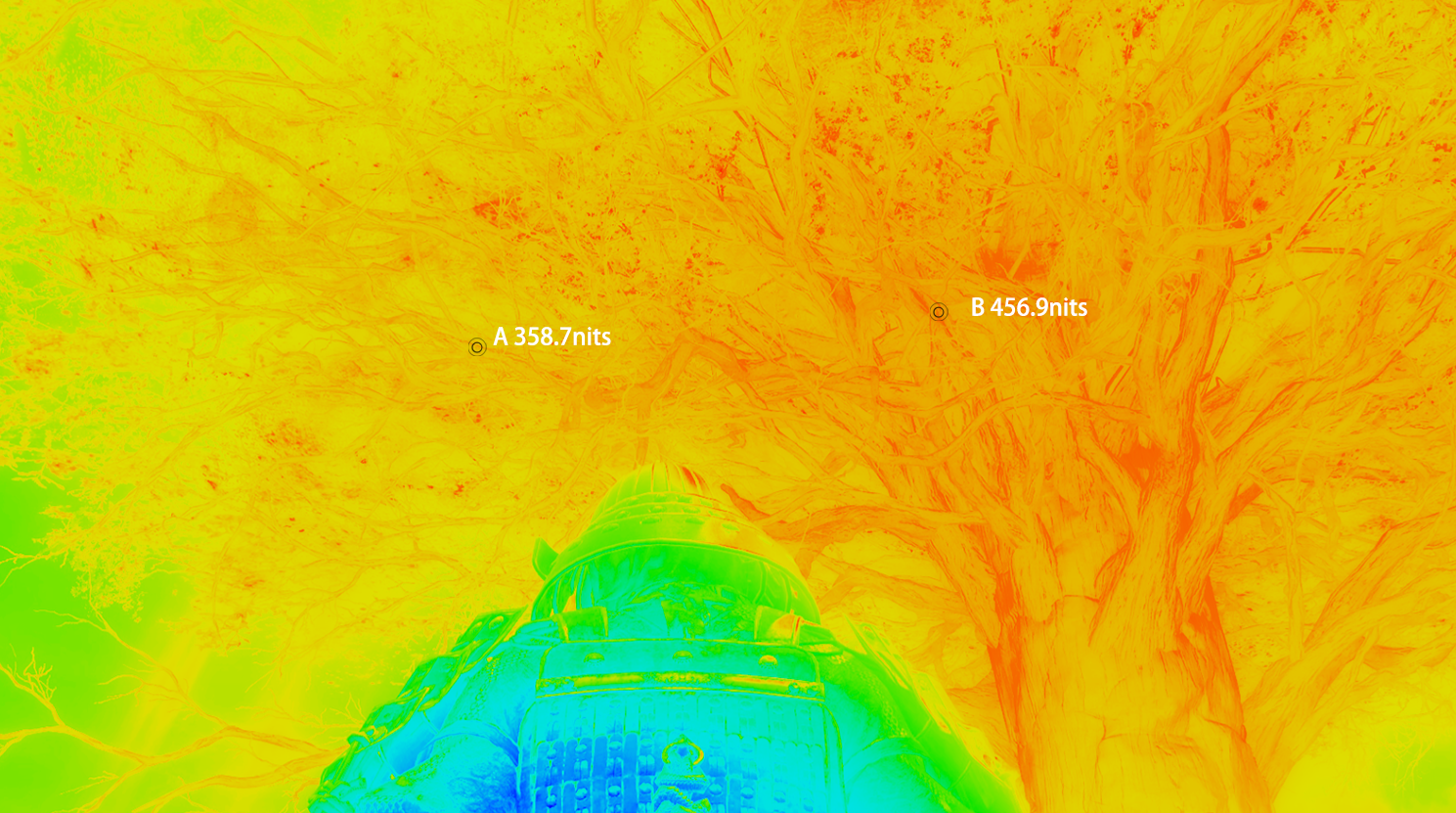

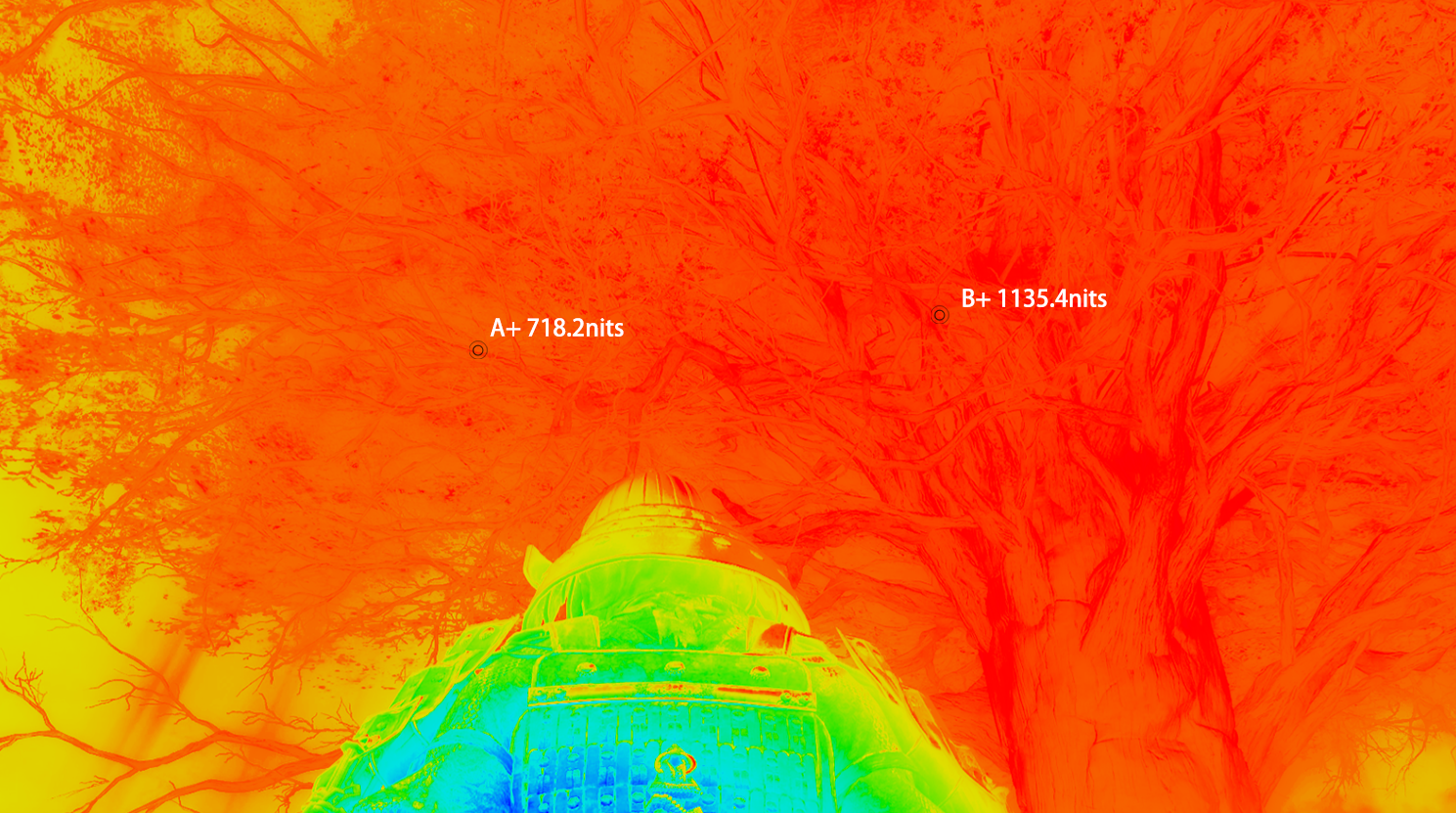

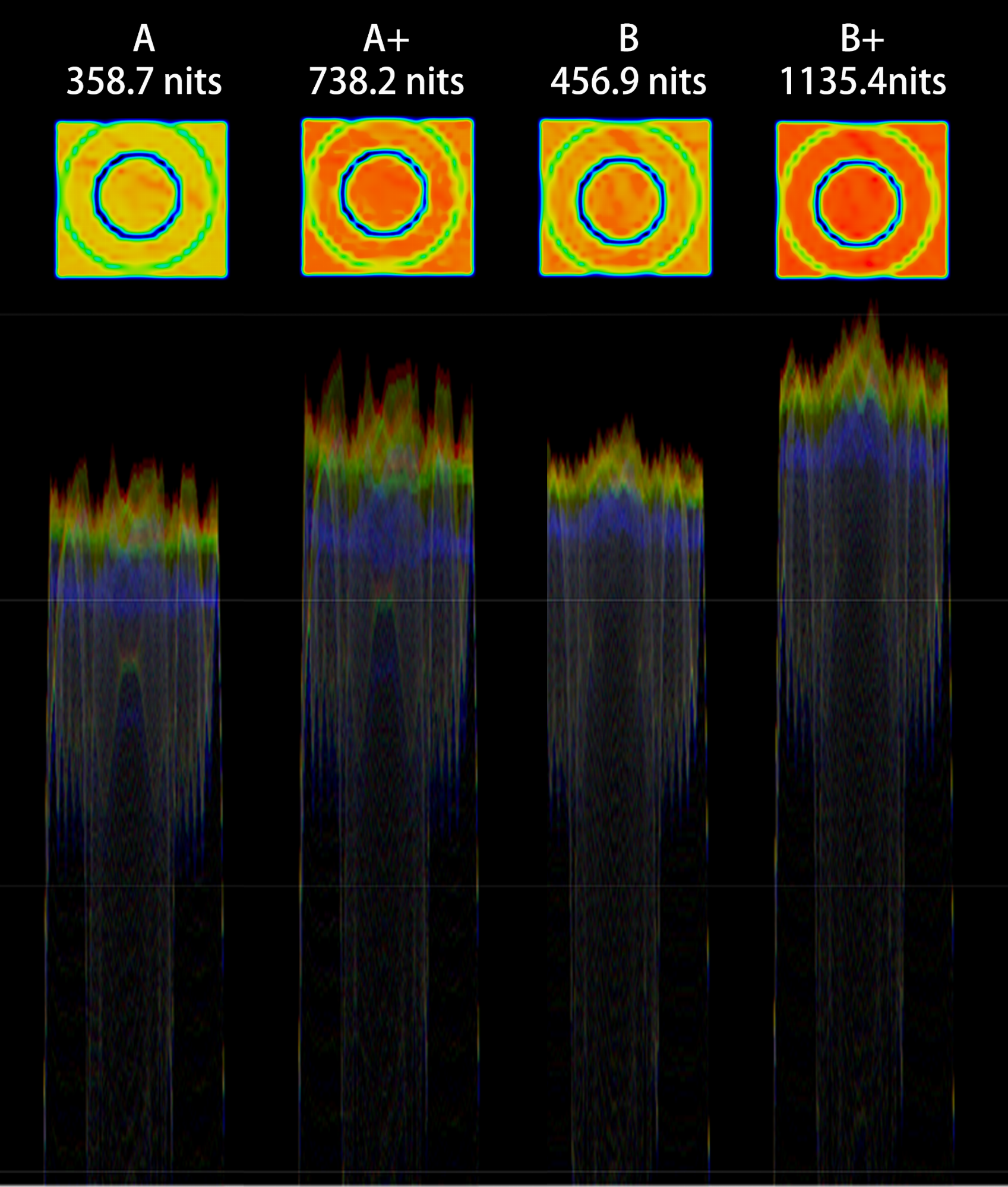

This gets to the heart of things, I think. From what I've gathered, Kram stretches/filters HDR curves into his own curves, lifting all the top lows and the mids like a torch mode. That's his contrast increase: moving the normal color curve goal posts to his own distorted ones. He's said that the default HDR curves are inadequate and made for the HDR1000-and-lower screens most people have rather than his 1400 nit one - but he'd likely throw that same kind of curveball at the top lows and the mids of an HDR 4,000 or HDR 10,000 capable game's curve vs. default screen mapping on those kinds of screens too, if he had them. That's fine if it's what he likes, and it can look pleasing, sort of like an "HDR reshade" bump on a very bright FALD - but it's not accurate, it's just stretched/inflated/bulged. It may be applied to objects with unknown properties, to large and small areas with unknown conditions, and to scenes whose levels wouldn't necessarily look lifted like that IRL or by the creator's intent. It's a shaped filter, so it operates blind to what the content is, what the content's properties are IRL, or what they are virtually representing. It's his own custom look, like someone's favorite reshade settings. Additionally, 7,000 to 15,000 pixels per FALD zone, plus offsetting and toning multiple surrounding FALD zones of 7k - 15k pixels each all of the time, is never going to be accurate to begin with, as has been evidenced many times in the thread already.
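For a rough sense of where a "7k to 15k pixels per zone" figure comes from, here is a minimal sketch that divides a 4k panel's pixel count by a zone count. The zone counts used (576 and 1152) are illustrative assumptions, not numbers stated in this post, and the function name is made up for the example.

```python
def pixels_per_zone(width_px, height_px, zones):
    """Average number of pixels that share one backlight dimming zone."""
    return (width_px * height_px) / zones

# Assumed zone counts for illustration only; real panels vary.
for zones in (576, 1152):
    per_zone = pixels_per_zone(3840, 2160, zones)
    print(f"{zones} zones -> {per_zone:,.0f} pixels per zone")
```

With those assumed zone counts a 3840 x 2160 panel lands at the two ends of the range quoted above, which is why a single small highlight cannot be lit without also affecting thousands of neighboring pixels.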

"Lift is a lift. You turned the whole orange, yellow and red heatmapped erdtree into ~1000nit and lifted the whole green area on the right from a more meaningful depth. Those areas are not as dark in absolute value nor in comparison to whatever peak brightness areas are displayed anymore. Simple as that.

> Of course FALD LCD cannot do as good as dual-layer LCD but it does much better than whatever OLED you have. That's why the professional use it. Yet you call them idiots. You are so exposed.

Ah yes, all those Chinese professional youtubers with professional Nikon Z9s making super serious content for Youtube.

> That's not what you debated originally.

LOL. Now you suddenly care about what was debated originally. Holy double standards, Batman.

That's not what you debated originally. Your original claim was that the low light is lifted, which results in less contrast, even though the higher-contrast waveform was shown as proof. And you don't know how to read it.

Since I make HDR with whatever preference I like, you should consider whether your OLED monitor is capable of displaying the content with my intent. Once ABL kicks in, every pixel on the OLED loses both contrast and color, which results in even worse accuracy.

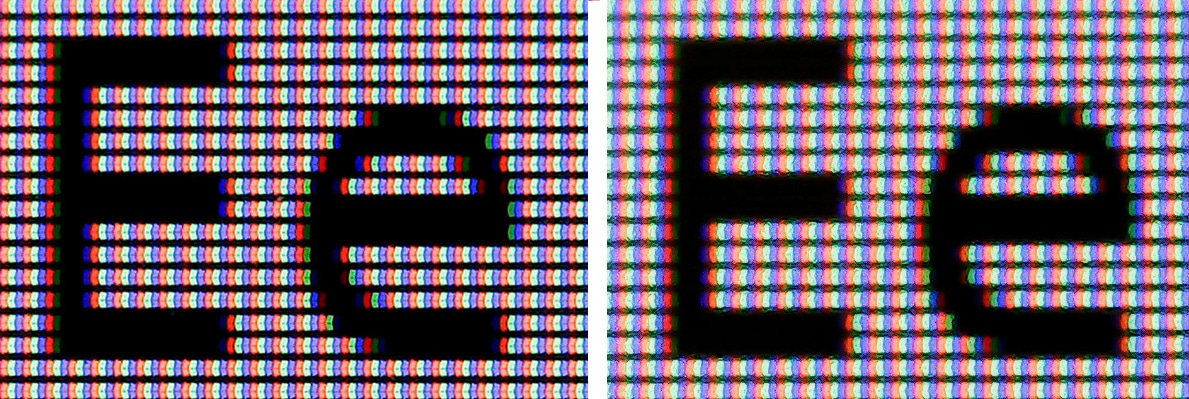

The problems with OLEDs are just Brightness, Burn-in, Text Clarity, and Color Fringing. Everything else is perfect.

It would be nice if there were some 32" models eventually for people who need shorter view distances.

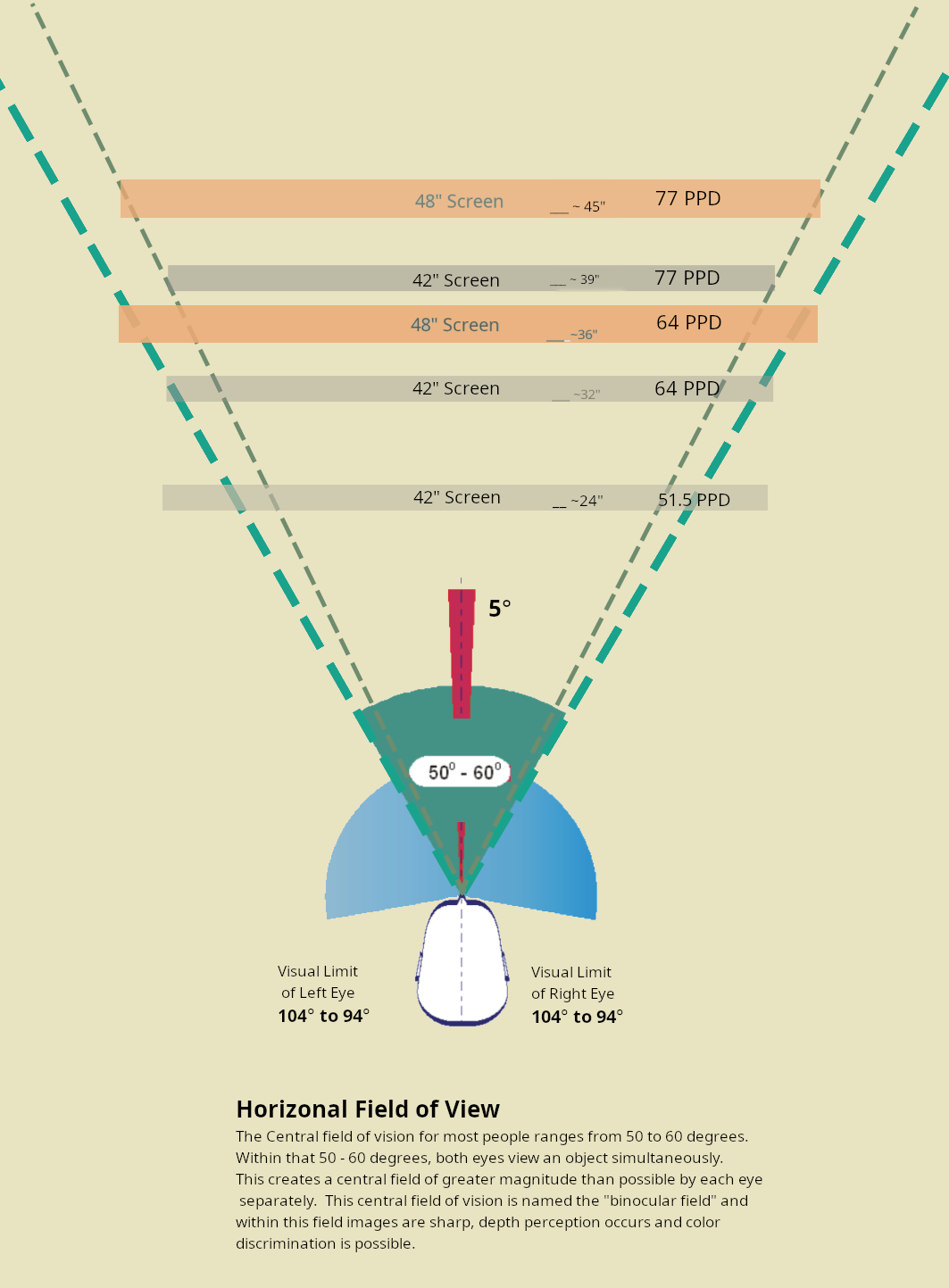

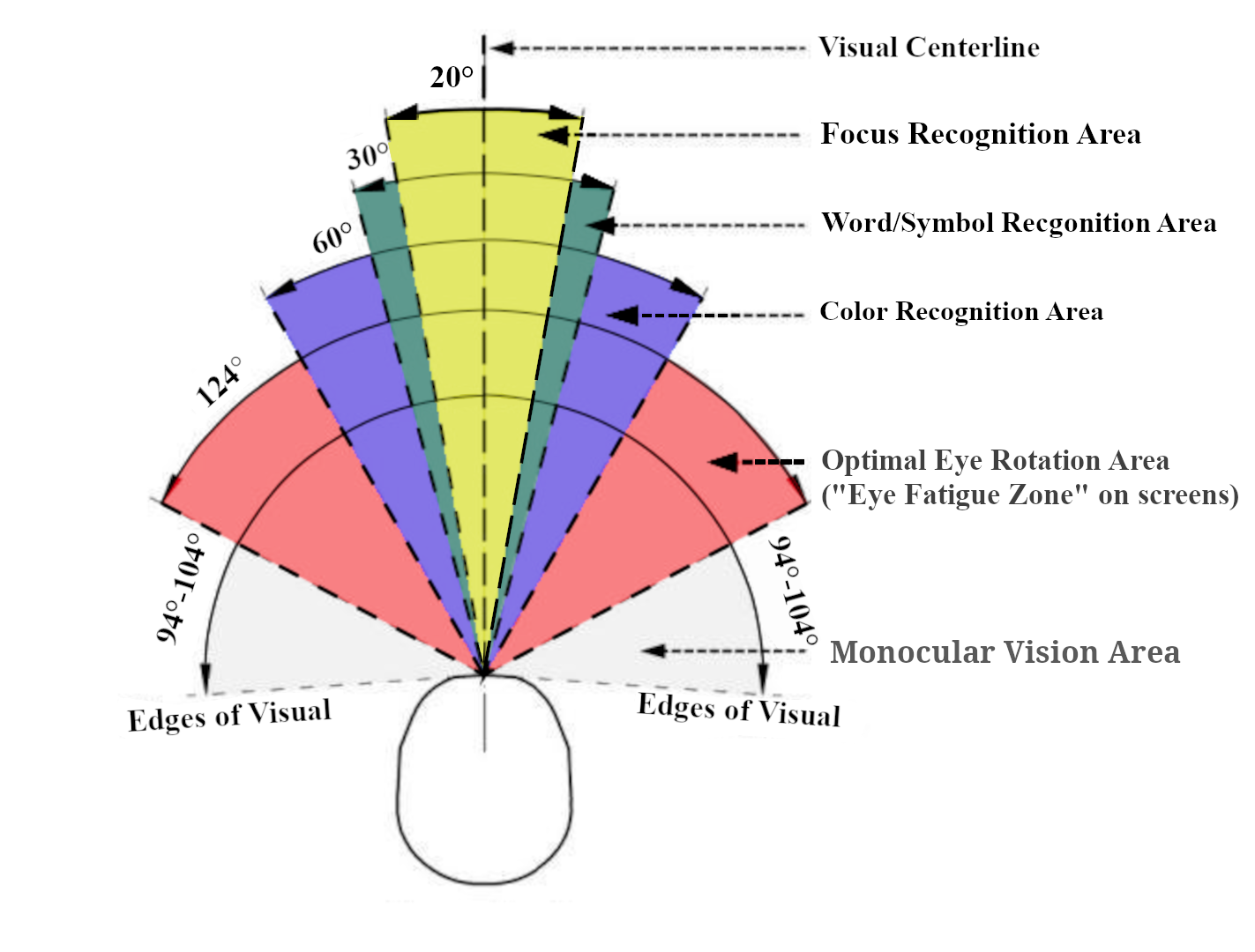

42" 4k screen at 24" view distance is 52 PPD.

27" screen 2688 x 1512 rez at 24" view distance = 52 PPD

The 24" view crowd are in a way using a 42" 4k like a 27" 1500p screen's pixels and exacerbating off axis viewing angle issues instead of getting 4k fine pixel PQ.

View attachment 528910

On the other hand:

- 32" 4k at 24" view would be ~ 64 PPD (and 60 deg), which can be compensated for with aggressive AA and text sub-sampling (though the 2D desktop's graphics and imagery lack AA outside of text sub-sampling).
- 32" 4k at 27" view would be ~ 70 PPD and a 55 deg viewing angle.

So really a much better fit for the near desk view crowd. Right now it's a square peg in a round hole thing going on with larger screens for a lot of people from what I've read in threads and seen in images.

At the human 50 to 60 degree viewing angle, all 4k screens of any size are around 64PPD at 60deg and 77 PPD at 50 deg.
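The PPD figures above can be reproduced with a little trigonometry. This is a minimal sketch, assuming a flat 16:9 panel viewed on-axis and using average horizontal PPD (horizontal pixels divided by horizontal viewing angle); the function names are made up for illustration, and other PPD calculators may use slightly different conventions.

```python
import math

def horizontal_fov_deg(diagonal_in, distance_in, aspect=(16, 9)):
    """Horizontal viewing angle in degrees for a flat screen seen head-on."""
    aw, ah = aspect
    # Screen width from the diagonal and aspect ratio.
    width_in = diagonal_in * aw / math.hypot(aw, ah)
    return 2 * math.degrees(math.atan((width_in / 2) / distance_in))

def average_ppd(horizontal_px, diagonal_in, distance_in, aspect=(16, 9)):
    """Average pixels per degree across the full width of the screen."""
    return horizontal_px / horizontal_fov_deg(diagonal_in, distance_in, aspect)

# (label, horizontal pixels, diagonal in inches, view distance in inches)
cases = [
    ('42" 4k at 24"', 3840, 42, 24),
    ('27" 2688x1512 at 24"', 2688, 27, 24),
    ('32" 4k at 24"', 3840, 32, 24),
    ('32" 4k at 27"', 3840, 32, 27),
]
for label, px, diag, dist in cases:
    fov = horizontal_fov_deg(diag, dist)
    print(f"{label}: {fov:.0f} deg, {average_ppd(px, diag, dist):.0f} PPD")
```

Under these assumptions the four cases come out at roughly 51, 51, 64 and 70 PPD, within about one PPD of the numbers quoted in the post. It also shows why every 4k screen gives 64 PPD at a 60 degree viewing angle: 3840 / 60 = 64, regardless of size.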

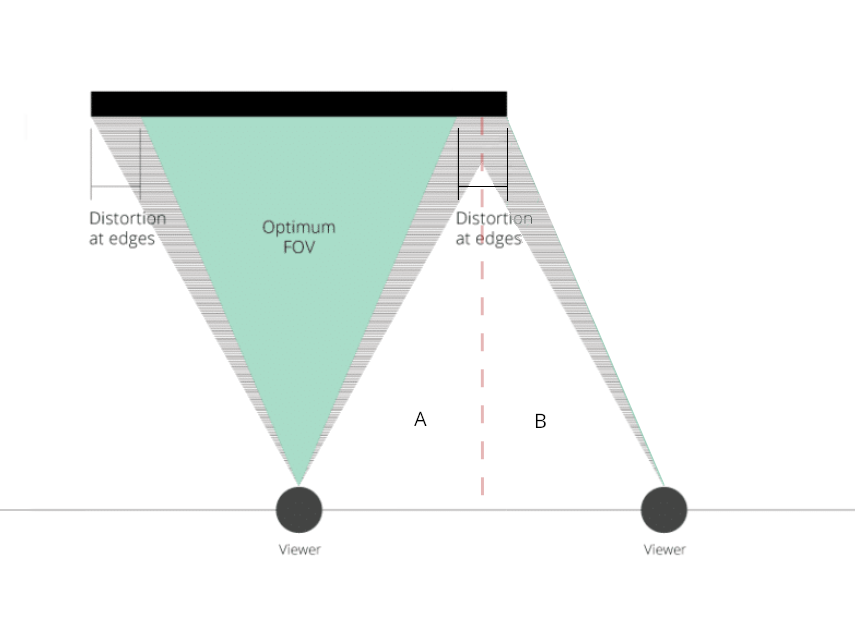

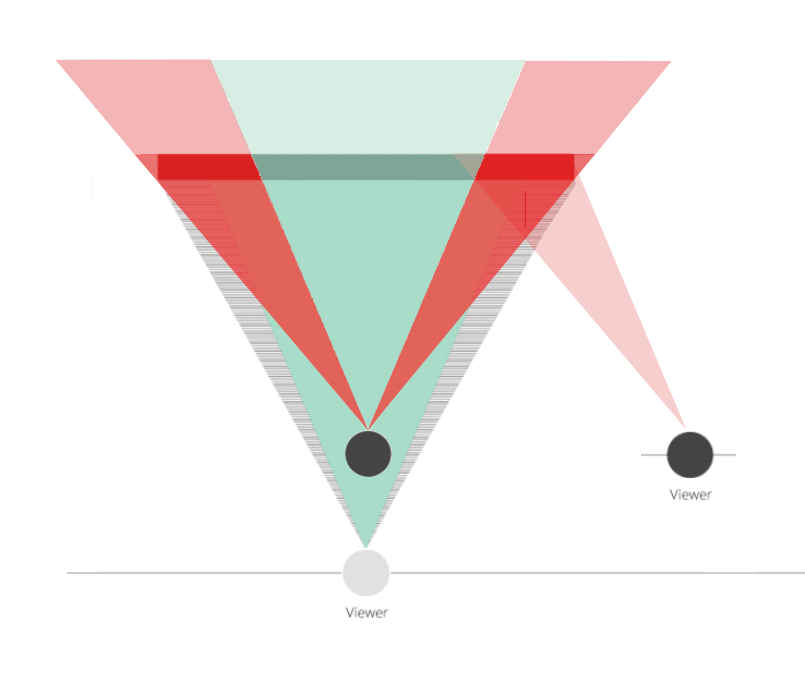

So if you are set up properly especially if getting 70PPD+ I think you'll be fine on most of these screen types. Sitting at optimal viewing angle will also prevent side panels worth of screen space from being pushed outside of your viewpoint and will keep the off-axis color uniformity issue from growing larger.

42" 4k and 48" 4k optimal view distances (the 24" view distance in the example is too close as a comparison, with low PPD and poor viewing angle):

You end up doing this kind of thing when you sit too close (as well as driving your PPD down):

optimal view distance (The outside viewer is the same amount of degrees off axis from the sides of the screen as the central viewer is).

Sitting too close. (The outside viewer is the same amount of degrees off axis from the sides of the screen as the central viewer is).

Human viewing angle:

It's when people try to stuff a 42" - 48" - 55" screen on a desk (instead of mounting it separately at a more optimal distance) that they end up getting 1500p-like PPD or worse, so the pixel grid appears too large. Then they try to squeeze as much masking/compensation out of text-ss and AA as possible to hide the ugliness. The 2D desktop's graphics and imagery typically remain entirely uncompensated too, since they get no sub-sampling or game AA - so that will look even worse.

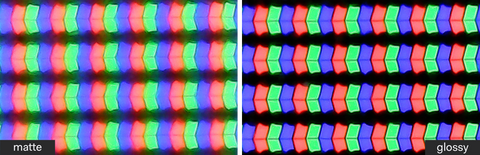

Also worth mentioning that AG/matte screen surface treatments can affect text clarity and how clear pixels/subpixels appear in general. Matte type AG surface treatments also raise blacks and affect how wet and saturated a screen would otherwise look.


> Now who is shifting focus?

FALD can't do accurate except on test slides and no professionals use them for grading. You cannot deny this.

You cannot deny the fact that their HDR videos look good regardless of what kind of cameras they have.

You cannot deny the fact that both of them use FALD LCD for HDR production.

You cannot deny the fact that they don't use a 200-nit OLED for HDR production.

You are just downplaying OLED's accuracy problems by saying FALD cannot do very serious HDR work. And you don't even dare to accept the fact that OLED has much worse accuracy than FALD on actual HDR.

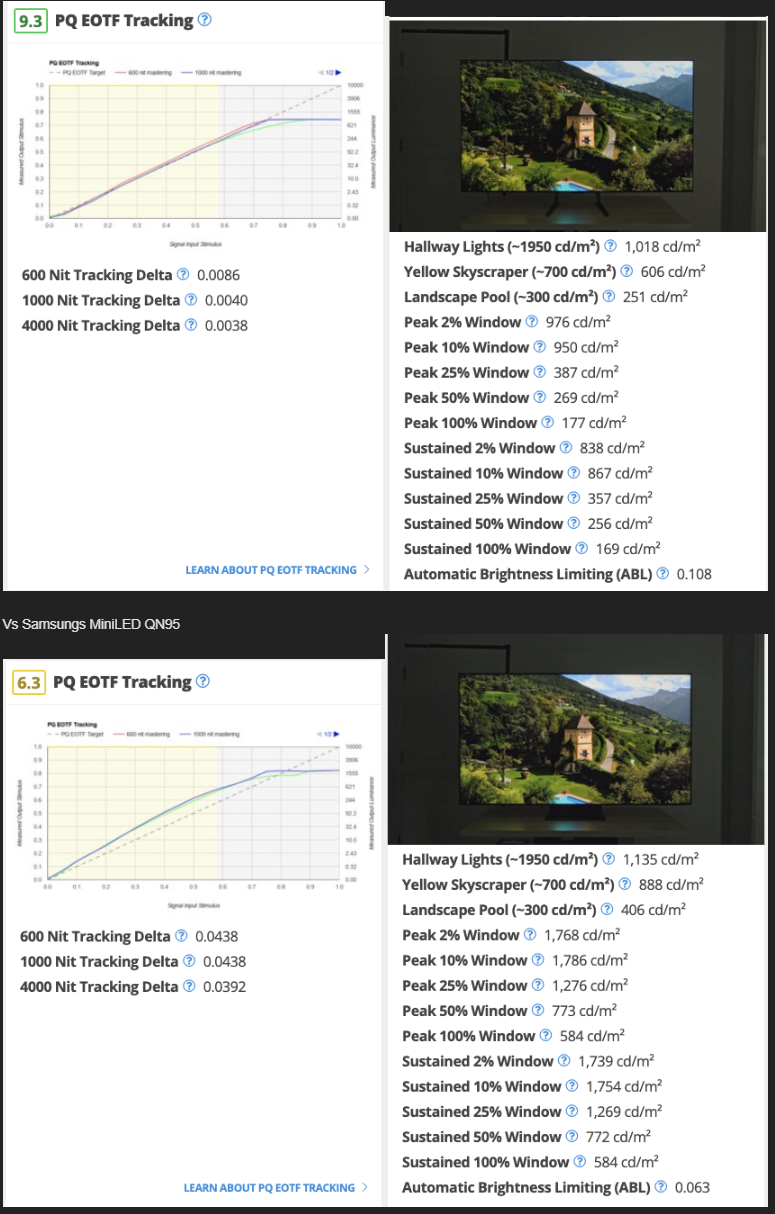

> Got any kind of review, data, or proof to back what you're saying?

The review data of your own post is good enough.

> FALD can't do accurate except on test slides and no professionals use them for grading. You cannot deny this.

I can deny this easily. I just showed you how professionals do grading work with FALD LCD. It is not as accurate as dual-layer LCD but a lot more accurate than your 200-nit OLED. Your own link, shooting your own foot, even encourages you to do grading on the monitor you can buy.

> The review data of your own post is good enough.

That doesn't address the lack of accuracy.

View attachment 549650

QN95 has raised EOTF. It has much higher peak brightness across the board.

When footage needs a 10% window at 950 - 1800 nits or a 25% window at 387 - 1200 nits, the QN95 will be more accurate. OLED is only accurate when the footage stays below these ranges, because its brightness is not enough.

> Yes as contrast measurements are often considered as depth to darkness capability vs peaks, as in a VA screen or a FALD screen vs an edge lit IPS's black depth capability. Taking a 200nit log in a swamp and lifting it to 500 nit and scaling all of the mids even higher (exaggerating a bit there but maybe not) is a farther distance sure but it's not what people usually intuit from a raw/flat contrast of screen X number : 1 measurement in specs. It's also not necessarily accurate to IRL or artistic intent. .. but I won't go into the whole debate again here though. That will suffice. It's just another can of worms and this is all just you resorting to ad hominem attack on someone personally again in order to distract from the points of this thread in the first place.

You still don't understand contrast. You still don't know how to read a waveform where the low light goes down while the high light goes up.

> I can deny this easily. I just showed you how professionals do grading work with FALD LCD. It is not as accurate as dual-layer LCD but a lot more accurate than your 200-nit OLED. Your own link, shooting your own foot, even encourages you to do grading on the monitor you can buy.

LOL. The truth is you have proved nothing. You showed a video of a guy promoting the display (as in he is literally getting paid to say nice things about it, and at no point does he actually say he uses it for grading, while there is proof that he uses very expensive cameras and has previously purchased a $30,000 pro monitor, so money is obviously not an issue for him) and some random Chinese youtube channel because that was all you could find while searching youtube for proof LOL.

Again, the truth is that both of them use FALD LCD to grade HDR. They don't use a 200-nit OLED for HDR production. And you are just downplaying OLED's accuracy problems by saying FALD cannot do very serious HDR work. And you don't even dare to accept the fact that OLED has much worse accuracy than FALD on actual HDR.

> You are still denying the contrast is lower even on the exactly the same location where 2000nits sun vs 0.01nits shadow has 20 times more contrast than 1000nits sun vs 0.1nits shadow

Where exactly is he doing that? Seems to be only in your head...
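For reference, the "20 times more contrast" arithmetic in the quoted claim checks out as a plain ratio of ratios. A minimal sketch, assuming contrast is simply peak luminance divided by black level in the same scene (the helper name is made up for the example):

```python
import math

def contrast_ratio(peak_nits, black_nits):
    """Simple contrast: brightest level divided by darkest level."""
    return peak_nits / black_nits

brighter = contrast_ratio(2000, 0.01)  # 2000-nit sun vs 0.01-nit shadow
dimmer = contrast_ratio(1000, 0.1)     # 1000-nit sun vs 0.1-nit shadow
factor = brighter / dimmer

print(f"{brighter:,.0f}:1 vs {dimmer:,.0f}:1")
print(f"factor {factor:.0f}x, about {math.log2(factor):.1f} stops")
```

This only confirms the arithmetic. It says nothing about whether lifting or stretching a scene to reach those levels matches the creator's intent, which is the actual point of disagreement in the thread.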

> Just follow any major thread dialogue Kram is in and you'll see similar.

Because the facts you provided are only part of the reviews. You use them to downplay that OLED has even less accuracy in HDR1000.

Kramnelis. . he knows better than dolby, and better than TFTCentral .. /s . . " a few words from A review" .. probably one of the top monitor reviewing sites if not the top monitor reviewing site, known for very detailed technical reviews dissecting screens and known for being very honest about it.

When faced with the actual factual import and impact of the tradeoffs of fald, or any technical topic of debate or discussion for that matter really, he'll often fall back to more enraged sounding personal attacks on individuals themselves. He's done so multiple times to multiple people in this thread and others. It's his m.o. You know that someone is hard pressed when they swing wildly like that at the person rather than the facts. It's one of the logical fallacies, ad hominem. He actually employs several of them. While no-one is immune to falling into some of those traps from time to time, I try not to fall prey to that so I usually try not to focus on the person so much for that reason but I will say that facts and opinions on performance of screen types aside (he's brought up some interesting data at times) - I find his character questionable. At least I personally find that his online persona is kind of toxic.


> That doesn't address the lack of accuracy

You can blame Samsung for doing that to make brightness pop even more, so it has a bigger impact even if it is less accurate, while OLED is just underwhelming, with even worse accuracy than Samsung.

> I provide proof they use FALD for HDR production.

"for HDR production" yeah, lmao. You think you're so clever...

> It's you provide nothing that FALD cannot be used for HDR production.

Next thing you want me to prove that God doesn't exist...

> You can keep your imagination.

Thank you, that's very kind of you.

> In the meanwhile, I can use PA32UCG to grade whatever HDR1000 I want.

Yeah, lol. Bet you have tons of material lined up. You are a professional colorist after all.

Now you just trash talk into oblivion. I grade whatever HDR I want with images you can never see on OLED.


> Wooow, I'm so sad I'm missing out on your expertly crafted fake regraded anime HDR.

Don't forget you don't even grade HDR.

You're a gold mine. Please do keep going. But you're going to have to come up with some new material that I can work with...

> Don't forget you don't even grade HDR.

LOL. And you don't even make anime.

> LOL. And you don't even make anime.

I can see better images. That's what matters. I can grade any footage I record while you cannot even see what I see. You can't even grade HDR on your 200-nit OLED. This is a big difference.

> I can see better images. That's what matters. I can grade any footage I record while you cannot even see what I see. You can't even grade HDR on your 200-nit OLED. This is a big difference.

You are not a colorist. You are not a movie director. You are not a display manufacturer. You are not a calibrator. You don't even make anime.

All you do is downplay OLED's accuracy problems by saying FALD cannot do very serious HDR work. And you don't even dare to accept the fact that OLED has much worse accuracy than FALD on actual HDR. Better buy a dual-layer LCD to see better.


Nothing you say or do holds any value. All you do is shill FALD displays. You should buy an actually accurate monitor like a dual cell LCD if you actually care. But do you care? No you do not. You just shill. All day every day. On this forum. On other forums. The same nonsense shilling all the time. Almost like you were getting paid for it, but the ones paying for it, albeit indirectly, are probably your government and not the FALD display manufacturers.

> You are not a colorist. You are not a movie director. You are not a display manufacturer. You are not a calibrator. You don't even make anime.

It doesn't matter who I am. It's never about who I am. Everybody is a nobody on the Internet.


> I mean FALD displays can get pretty up there if this is anything to go by: https://www.newsshooter.com/2021/07/14/flanders-scientific-xm312u-5000nit-hdr-mastering-monitor/

Funny thing is they went from inaccurate FALD, then replaced that with a nice and accurate dual cell LCD, and now they're back to inaccurate FALD. I mean they could have kept the dual cell LCD in the line-up, but I guess they hope that most people just think MORE BRIGHT MORE BETTER!

A 5000 nit mastering monitor is probably something he should buy if he wants to "see better images" lol, because surely 5000 nits beats out a measly 1600 on the Asus amirite?

> It doesn't matter who I am. It's never about who I am. Everybody is a nobody on the Internet.

Of course. It only matters who other people are because that you can use for your incessant FALD shilling.

> All you do is downplay the OLED accuracy by saying FALD cannot do very serious HDR work.

Uhuh. Think you're getting yourself confused now.

> Funny thing is they went from inaccurate FALD, then replaced that with a nice and accurate dual cell LCD, and now they're back to inaccurate FALD. I mean they could have kept the dual cell LCD in the line-up, but I guess they hope that most people just think MORE BRIGHT MORE BETTER!

You know what I mean.


> It doesn't change anything about how AW3423DW low-range "HDR" gets wrecked by a 4-year-old 512-zone FALD high-range SDR. It doesn't change the fact OLED is underwhelming. It doesn't change the fact OLED has much worse accuracy than FALD on the actual HDR.

None of what you say changes the fact that no FALD display can do anything accurately except large uniform test patterns. You can type a million posts with the same shilling, but you can't change the facts.

I never shill on FALD. FALD has problems. I cannot wait to replace FALD. But OLED is even worse. The brightness can hardly get higher. Once it gets higher it flickers even harder.

> None of what you say changes the fact that no FALD display can do anything accurately except large uniform test patterns. You can type a million posts with the same shilling, but you can't change the facts.

Of course a higher range is better.

You think MORE BRIGHT MORE BETTER is the only thing that matters, but to most people it is far from the only thing that matters. Very few people exclusively view youtube videos by people who only make demo material for display manufacturers to showcase bright HDR. Some people like accuracy, but FALD can never do true accuracy and you know this. You know I speak the truth but you can't stop yourself from shilling FALD. You always bring OLED into it because it's the only defense you have - that something is worse, even though it's not even relevant. And maybe get your head checked, because you're reading a lot of things that no one has ever written.

> You've never spoken any truth.

Damn. Literally everything I have ever said about anything is a lie. Holy moly. I think I might need some time to truly process this revelatory information that you have bestowed upon me and retrain my brain.

> Damn. Literally everything I have ever said about anything is a lie. Holy moly. I think I might need some time to truly process this revelatory information that you have bestowed upon me and retrain my brain.

Funny, you are the one spreading misinformation while secretly shilling for OLED. I'm just doing the counterpart job.

I'm really disappointed you didn't use any of your classic lines that I had carefully selected for you, but instead chose some other of your other classic lines. Kinda breaks my heart to be honest.

Btw, I've thought about training a chatbot on your posts. It would be incredibly simple. I would make a new user for it to post from called "kramneIis". No one would notice the difference.

> Rarely before has a troll been fed this much and persisted as long. Nor has a single point been uselessly rehashed so many times.

Funny, I'm just saying the truth. It can be repeated multiple times. FALD has much better accuracy than OLED at high range.

> I use a mini led every day and I have never, literally never seen blooming in any type of mixed content. What I do see is an incredible jaw dropping picture every time no matter what game or movie or show I'm watching from bright to dark.

Which one?

> I use a mini led every day and I have never, literally never seen blooming in any type of mixed content. What I do see is an incredible jaw dropping picture every time no matter what game or movie or show I'm watching from bright to dark.

Most FALD backlights have optimizations to reduce blooming, such as lowering brightness at smaller window sizes or lifting the whole screen a bit. Some manufacturers such as AUO label this as Adaptive mini LED, or AmLED.


> You guys want to know what displays I have to prove that it has blooming in certain rare scenarios. That's fine, me and my wife have never noticed anything bad or distracting when watching even dark content like Stranger Things which has a lot of very dark scenes. I know what blooming is and I have never noticed it, ever. We have QN90A in the living room and a QN90B in my computer room. We love to open up the blinds during the day because we both enjoy natural sunlight and a view of our garden and trees in back yard. The QN90A in the living room is fantastic. I couldn't ask for anything more as we are very satisfied with its picture quality. The QN90B in my PC room is arguably even better. It's so immersive and I love it at 144hz and extremely powerful HDR along with G-sync or whatever auto sync it uses to sync to the 4090. The PC room has a window that I can choose to shut or open for light or dark moods whatever I feel like. Both displays are 50". Also I tried a C2 LG and we honestly disliked it. I couldn't accept the ABL and dim pictures and my wife was believe it or not disappointed in the dim brightness also. I'm not even kidding. We returned it within a couple days. After buying the QN mini led it knocked our socks off. This is our experience.

Not a gotcha at all; I was genuinely curious!

> You guys want to know what displays I have to prove that it has blooming in certain rare scenarios. That's fine, me and my wife have never noticed anything bad or distracting when watching even dark content like Stranger Things which has a lot of very dark scenes. I know what blooming is and I have never noticed it, ever. We have QN90A in the living room and a QN90B in my computer room. We love to open up the blinds during the day because we both enjoy natural sunlight and a view of our garden and trees in back yard. The QN90A in the living room is fantastic. I couldn't ask for anything more as we are very satisfied with its picture quality. The QN90B in my PC room is arguably even better. It's so immersive and I love it at 144hz and extremely powerful HDR along with G-sync or whatever auto sync it uses to sync to the 4090. The PC room has a window that I can choose to shut or open for light or dark moods whatever I feel like. Both displays are 50". Also I tried a C2 LG and we honestly disliked it. I couldn't accept the ABL and dim pictures and my wife was believe it or not disappointed in the dim brightness also. I'm not even kidding. We returned it within a couple days. After buying the QN mini led it knocked our socks off. This is our experience.

I also was curious as I returned the C2.