Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,835

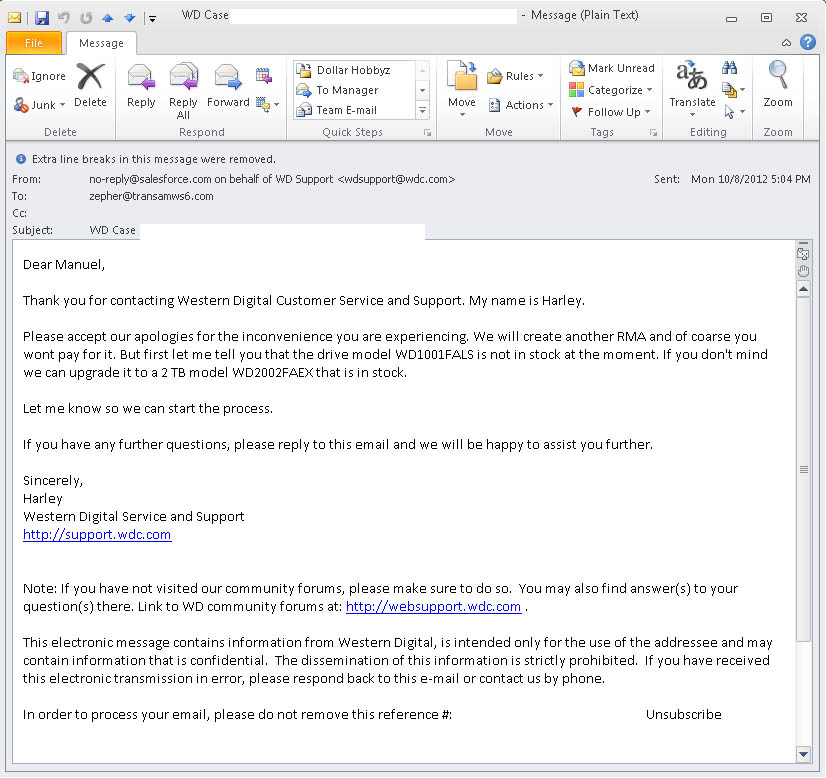

So, everyone seems to have a different take on when it is time to RMA a drive.

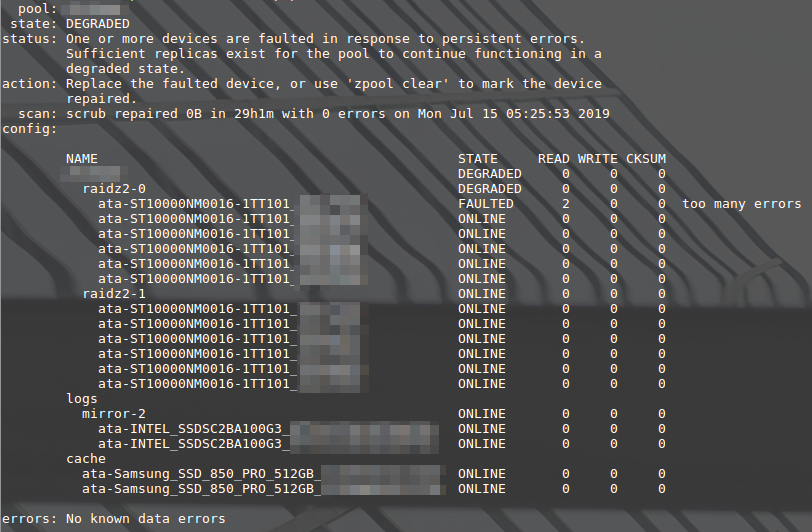

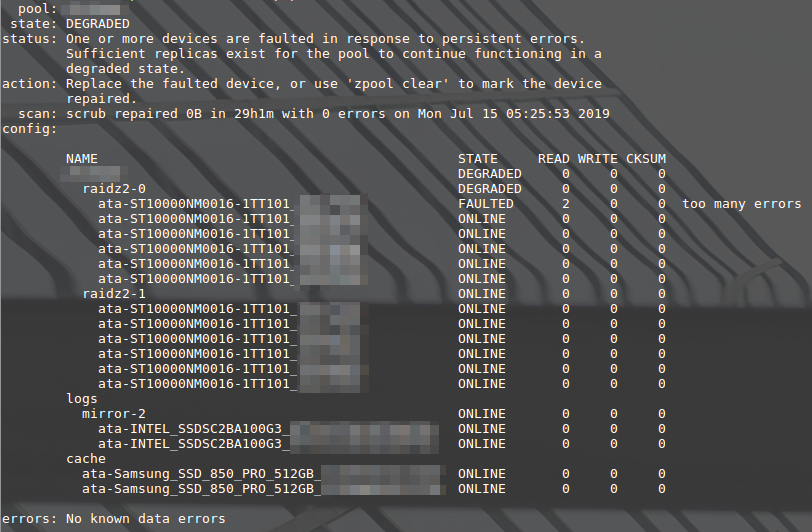

This happened today in my pool.

I wondered whether the drive was really going bad or whether something else was going on, so I pulled it out of the backplane, reseated it, cleared the errors, resilvered the pool, and am now running a scrub to see if I can tease the errors out again.

If the drive fails again, I'm going to RMA it, otherwise I'll consider it one of those random occurrences we keep redundancy for.
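A minimal Python sketch of the "does it fail again?" check, assuming OpenZFS-style `zpool status` output. After `zpool clear` and a scrub, it reads the per-device READ/WRITE/CKSUM counters and flags any physical disk whose errors came back (or that is FAULTED) as an RMA candidate. The function names, the vdev-name pattern, and the sample output below are hypothetical illustrations, not the output from the pool in the screenshots. It also assumes the counters are plain integers (OpenZFS's `zpool status -p` prints exact values); abbreviated counts like `1.2K` are not handled.

```python
import re
from dataclasses import dataclass


@dataclass
class DeviceErrors:
    name: str
    state: str
    read: int
    write: int
    cksum: int

    @property
    def total(self) -> int:
        return self.read + self.write + self.cksum


# One config row: NAME STATE READ WRITE CKSUM (integer counters assumed).
ROW = re.compile(
    r"^\s+(\S+)\s+(ONLINE|DEGRADED|FAULTED|OFFLINE|UNAVAIL|REMOVED)"
    r"\s+(\d+)\s+(\d+)\s+(\d+)"
)
# Hypothetical pattern for top-level vdev rows (mirror-0, raidz2-0, ...).
VDEV = re.compile(r"^(mirror|raidz[1-3]?)-\d+$")


def parse_zpool_status(text: str) -> list[DeviceErrors]:
    """Collect every row of the config table that has error counters."""
    devices = []
    for line in text.splitlines():
        m = ROW.match(line)
        if m:
            name, state, r, w, c = m.groups()
            devices.append(DeviceErrors(name, state, int(r), int(w), int(c)))
    return devices


def rma_candidates(status_after_scrub: str, pool: str) -> list[str]:
    """Rule from the post: after reseat + clear + scrub, any disk that
    shows errors again (or is FAULTED) is an RMA candidate."""
    flagged = []
    for dev in parse_zpool_status(status_after_scrub):
        if dev.name == pool or VDEV.match(dev.name):
            continue  # skip pool and vdev rows; only judge physical disks
        if dev.total > 0 or dev.state == "FAULTED":
            flagged.append(dev.name)
    return flagged


# Made-up example output, for illustration only.
SAMPLE = """\
  pool: tank
 state: DEGRADED
config:

        NAME        STATE     READ WRITE CKSUM
        tank        DEGRADED     0     0     0
          raidz2-0  DEGRADED     0     0     0
            sda     ONLINE       0     0     0
            sdb     FAULTED      3     0    12
            sdc     ONLINE       0     0     0
"""

print(rma_candidates(SAMPLE, "tank"))  # → ['sdb']
```

If the post-scrub list comes back empty, the earlier errors fit the "random occurrence" case (loose seating, backplane, cable); a disk that lands in the list a second time is the one to send back.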

When do you guys typically decide to RMA yours?

