Well according to Jen-Hsun Huang himself, the 2070 is faster than a Titan Xp, so...

Yeah. When I bought this 1080 Ti for $699 back at launch, I felt dirty spending that much on a GPU. Now $999 or $1199 for the Ti? No. I'm going to peace out at that price level. So unless the regular 2080 is waaaaay faster than the 1080 Ti, which I seriously doubt, there's no upgrade path for me until prices come down.

Tick Tock - #BeForeTheGame

- Thread starter FrgMstr

- Start date

Trust me guys, if everyone waits the next 60 days without pre-ordering or ordering these new cards, NVIDIA will bring the price down. Considering their behaviour during the past 12 months (limiting supply to keep the price high - I understand mining, but come on - and GPP and other things), this company badly needs a boycott to fall back in line and behave. As consumers we have to stop taking it up the... [you fill in the blanks].

Facepalm. You really need to read this:

https://en.wikipedia.org/wiki/Supply_and_demand

STEM

Gawd

- Joined: Jun 7, 2007
- Messages: 614

That, and there is a small market segment who doesn't care about the price, they just want it first. So it's smart to launch with a high price, then lower it in 2 to 3 months once these are stocked in decent quantity.

When did prices ever come down? The GeForce GTX 1080 Ti launched at $699, and look how much it costs today. Only the garbage cards, like the cheap, plasticky MSI and Gigabyte cards, are priced under $700. Everything else is between $700 and $800.

The GeForce GTX 1080 8GB launched at $699 and it dropped to $549 when the GTX 1080 Ti was launched. But that's a rare occasion. The GTX 1080 8GB was out for a year already and the market was saturated with it, and NVIDIA needed to make money and sell something new. No one would have bought the GTX 1080 Ti at $999 with the GTX 1080 8GB around.

This time around they are launching all 3 tiers at the same time, so there won't be another product to slot in and push down the price like it happened with the introduction of the GTX 1080 Ti.

Keep hoping though

STEM

Gawd

- Joined: Jun 7, 2007
- Messages: 614

Yeah, that's why they have an oversupply of 10 series cards. And not just Founders Edition cards, but their board partners have been hoarding those pesky GPUs as well.

Read this: https://en.wikipedia.org/wiki/Market_manipulation

I'd like to see just how slow the 1080 and 1080 Ti are at ray tracing. From the stream they claim 10x, so if that's true and the 2080 is getting 60 fps, will the 1080s be getting 6? It sounds like some amazing technology, but there just isn't much info to actually make a decision without some benchmarks. Oh well, it won't be too long until we know more. Maybe once production ramps up and they become more mainstream, along with the 2070, the 2080 Ti will be more like $700. Should be interesting.

I am going to try my hardest to skip Turing; let's see if I can get a full 5 years out of my 1080 Ti.

butttt I'm sure once those 34" high-refresh 4K screens start coming out, I will probably cave.
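A minimal Python sketch of the 10x arithmetic above. `fps_on_slower_card` assumes, as the post does, that the 10x applies to the whole frame. The Amdahl's-law helper and the 50% ray-traced share in the example are hypothetical illustrations, not numbers from the stream; they show how much smaller the whole-frame gap gets if only part of each frame is ray traced.

```python
def fps_on_slower_card(fps_fast: float, speedup: float) -> float:
    """Frame rate of the slower card if the faster one is `speedup` times faster overall."""
    return fps_fast / speedup


def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps


def overall_speedup(rt_fraction: float, rt_speedup: float) -> float:
    """Amdahl's law: only the ray-traced share of the older card's frame time gets faster."""
    return 1.0 / ((1.0 - rt_fraction) + rt_fraction / rt_speedup)


if __name__ == "__main__":
    # The post's scenario: 2080 at 60 fps, with the full 10x applied to the whole frame.
    slow = fps_on_slower_card(60, 10)
    print(f"{slow:.0f} fps ({frame_time_ms(60):.1f} ms vs {frame_time_ms(slow):.1f} ms per frame)")

    # Hypothetical: only half of the older card's frame time is spent on ray tracing.
    print(f"whole-frame speedup with a 50% RT share: {overall_speedup(0.5, 10):.2f}x")
```

The real ray-traced share for any given game wasn't shown in the stream, so benchmarks are still the only way to pin this down.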

STEM

Gawd

- Joined: Jun 7, 2007
- Messages: 614

I think that for current games, the performance will be a bit better than Pascal, but nothing earth-shattering. In contrast to this launch, the GTX 1080 Ti launched on March 10th, 2017. Reviews started coming out on launch day, just check YouTube. This time around no reviews, nothing. Besides clearing out their existing 10 series inventory, maybe they are delaying sending out review samples because they are waiting for more content that uses those RT cores to be released? AMD pulls the same crap when they know that they won't shine in reviews (I'm thinking of the Vega Frontier Edition).

I guess we shall see. Until then, happy speculating!

It's good to know that the RTX 2070 is supposedly faster than the Titan.

In raytracing maybe.

Exactly. I was very excited by the stream, then realized it's not really available and no games can use it. While it's great to push new tech, it's not so fun to be so far ahead of the curve that you're all dressed up with nowhere to go, so to speak. Except I heard that "IT JUST WORKS", so there's that.

nEo717

Limp Gawd

- Joined: Jun 2, 2017
- Messages: 358

Personally, I've never understood paying more for a factory overclock. You can match the OC in 10 seconds with MSI Afterburner or something similar. Do they tend to be higher binned, or is there something else that makes them worth the extra $?

I’m with you... plus it’s sometimes easier to get water blocks or AIOs for Founders Edition cards, at least early on.

I've been hoping that VR would make dual GPUs useful again (one per eye/screen), but the reprojection tech made a lot of sense for rasterized graphics. I'm not sure how well that trick will work with ray tracing, though. The fact that they're selling an NVLink bridge has me hopeful.

STEM

Gawd

- Joined: Jun 7, 2007
- Messages: 614

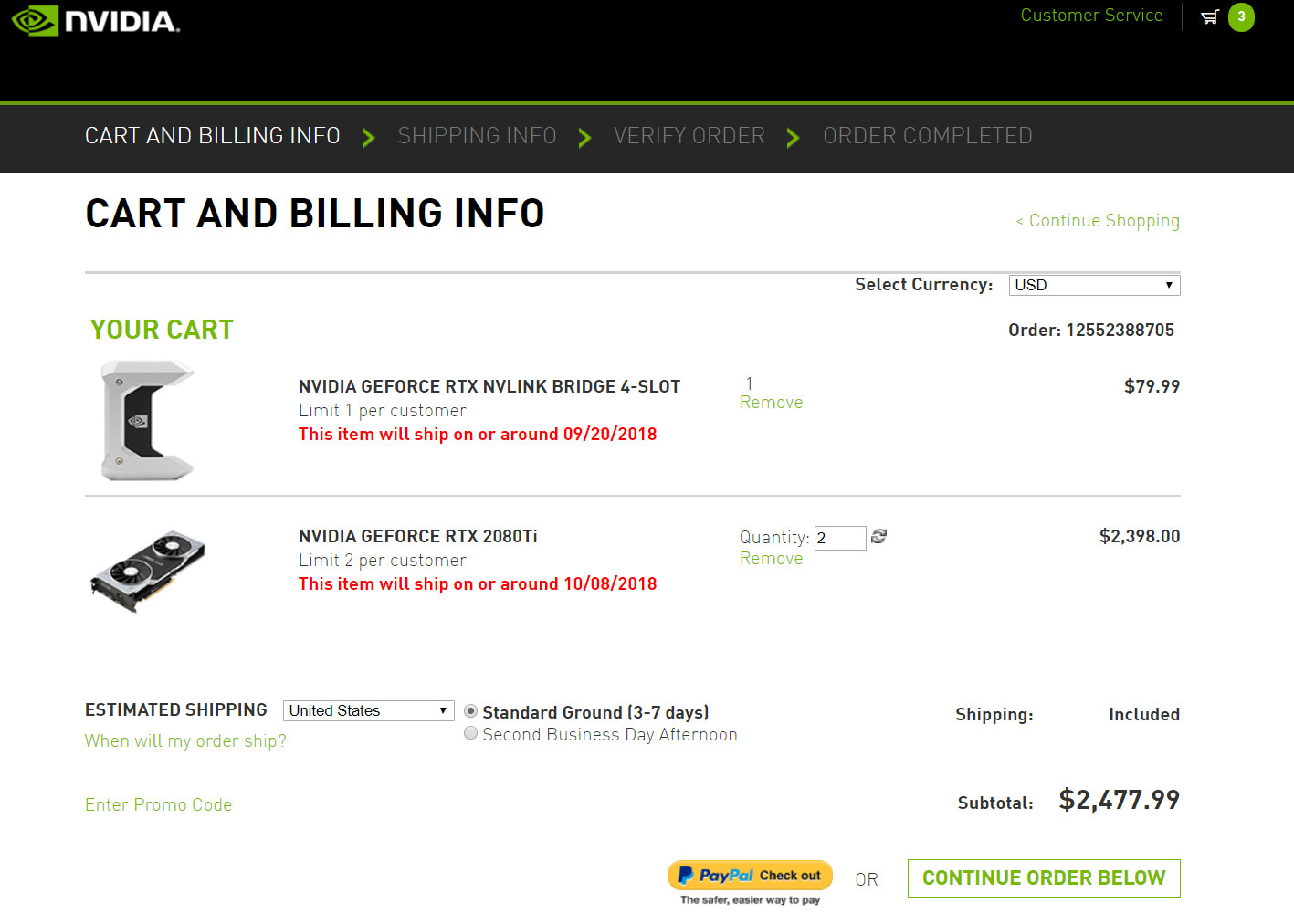

I dog gone did it... #YOLO

Thanks for feeding NVIDIA... I guess... Also, you will see your new GPU in a month to a month and a half from now.

I got dual EVGA GeForce GTX 1080 Ti SC2 cards in SLI. I paid $759.99 per GPU, $9.99 per EVGA Power Link (got it on sale) and $9.99 for the SLI bridge (again, got it on sale). I think it was pretty expensive, but $2500 + tax for a dual RTX 2080 Ti setup is vomit-inducing. And yes, I did not say RTX 2080 Ti SLI setup, because SLI is dead. With NVLink, games will most likely see one virtual GPU. I guess the only optimization that needs to be done is making sure that memory is used correctly on each GPU, because NVLink is much slower than the bandwidth between each GPU and its own GDDR6.

Come to think of it, I wonder if SLI will be supported in future games, or for how long? Now I feel kind of bad for buying two of these GTX 1080 Ti cards.

I actually threw up a bit in my mouth while looking at this:

DuronBurgerMan

[H]ard|Gawd

- Joined: Mar 13, 2017
- Messages: 1,340

Well according to Jen-Hsun Huang himself, the 2070 is faster than a Titan Xp, so...

Never trust the manufacturer claims.

JosiahBradley

[H]ard|Gawd

- Joined: Mar 19, 2006
- Messages: 1,791

You can literally buy two 1080 Tis for the price of one 2080 Ti. The 2080 costs more than a 1080 Ti, and apart from this RT nonsense, I doubt it'll be faster.

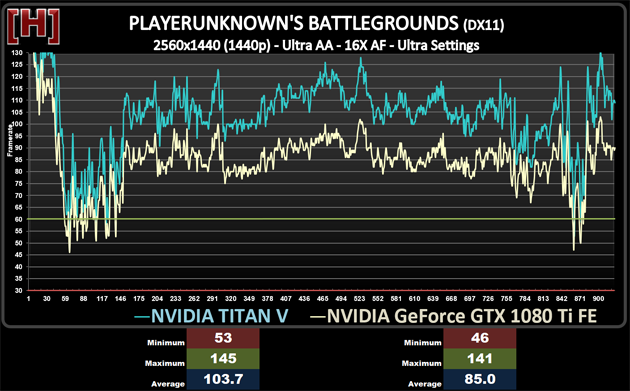

I literally just sold my 1080 Ti a couple of weeks ago, and I'm running on my backup 980 Ti right now. I wish I could say that the 1080 Ti was enough for 1440p gaming, but it just isn't. While playing Ghost Recon Wildlands I could see FPS drops to the mid 60s/low 70s on ultra settings, and PUBG averaged about 70 FPS on ultra at 1440p. If this next gen is 50% better than Pascal, then in my mind it will be worth it. I was kind of underwhelmed when I saw the FPS I was getting in PUBG from the fastest card I could get my hands on at the time, so for me there's no other option but to get the Ti.

I don't quite know what this NVLink gimmick is, but if it combines both GPUs virtually, it might be the next best thing in performance scaling as far as gaming goes, or it might turn out to be a flop, who knows? But then again, that's what [H] and all the other review sites are for. I honestly am pretty pumped about ray tracing and can't wait to see what NVIDIA has next. Don't get me wrong, my first legit GPU was an HD 4850 back in the day and I've always been rooting for the red team. I almost bought a 2700X to upgrade from my 6700K, but AMD just doesn't have the performance. At the end of the day money is nothing but a tool; good thing my kidneys are still good in case I have to take out a loan for that 20k NVLink Quadro setup.

STEM

Gawd

- Joined: Jun 7, 2007
- Messages: 614

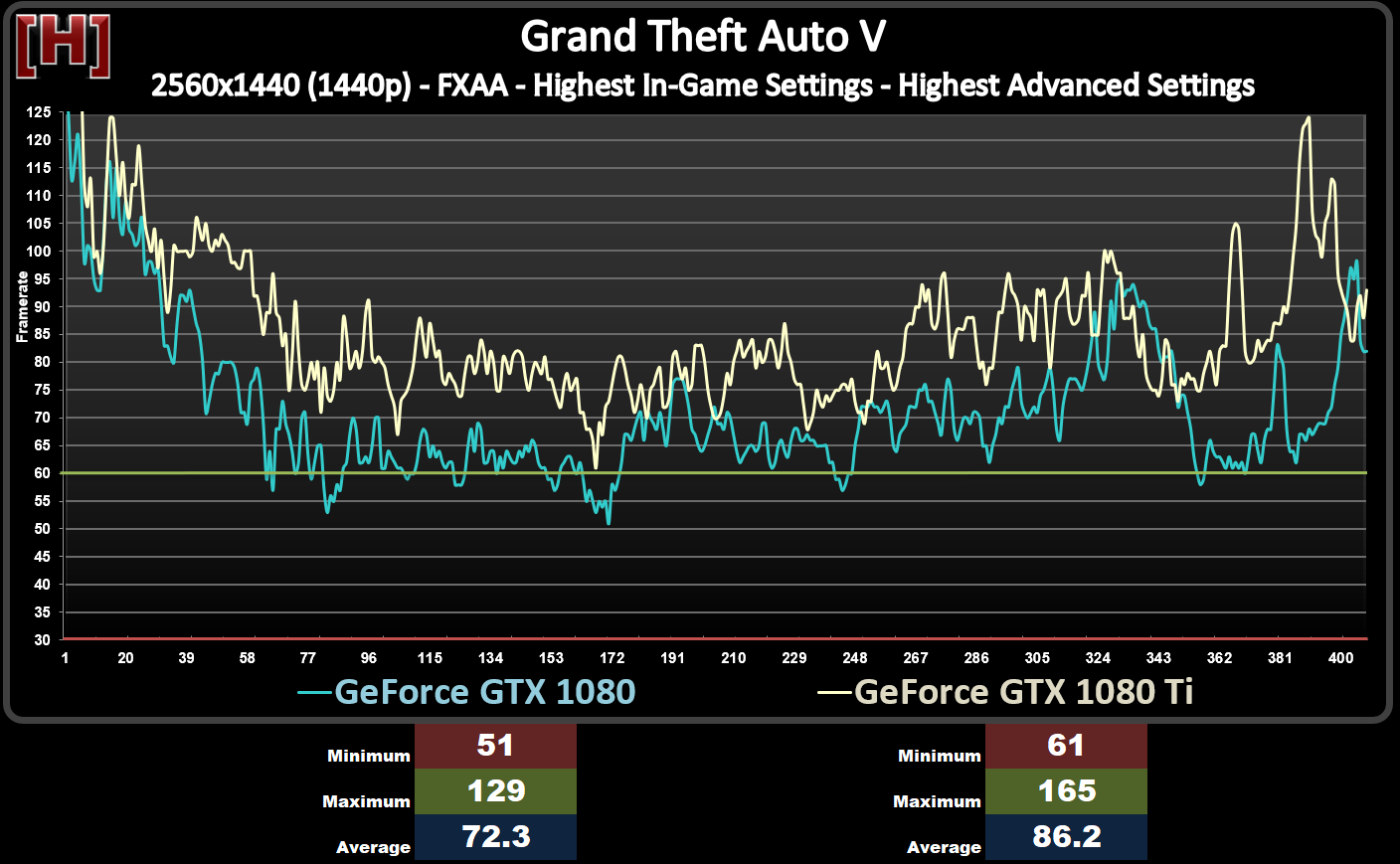

I was underwhelmed when I turned up the eye candy at 1440p on a single GTX 1080 Ti in GTA V. It was stuttering and I was getting 20 to 30 FPS. NVIDIA promised great performance with the GTX 1080 Ti, yet when you turn up the settings that performance is gone. Trust me, it will be true for this new generation as well. When I first saw the TITAN V benchmarks I knew that NVIDIA can't simply throw more CUDA cores (let's use their marketing name) at gaming, so something had to change. The problem is that there won't be an immediate performance gain for consumers, and by the time this new way of rendering graphics becomes more mainstream, NVIDIA will be on 7nm, probably with their next generation or a Turing refresh. Early adopters will pay a heavy financial price this round, but hey, it's their money. I can't afford to throw mine away like that.

I believe that AMD came to the same conclusion, but they won't have a similar solution ready until at least 2020. Sadly we, the consumers, are paying for their incompetence. Unless they want to stay around the low end... which makes me wonder how long they will be able to hang around the mid range. NVIDIA is now in a league of its own, so AMD will most likely compete with Intel for the budget segment. No worries, NVIDIA will be represented there as well.

It's not proprietary. It's in DirectX.

I was referring to the implementation; it's definitely proprietary. It always is with NVIDIA.

Finally got a chance to watch the whole stream instead of just reading a summary.

I'm skeptical because of the way this has rolled out (no reviews yet, pre-orders, no solid gaming numbers, etc.), but optimistic that real-time ray tracing is nearly here. Regardless, it's an amazing accomplishment, but I'm thinking gen 2 on 7nm will be where this really gets rolling. A couple of observations.

So often we see technology implemented at the edge of feasibility, and I get the strong sense that this is true here. I'm just not sure 10 gigarays/s is enough for 4K. Some interesting data points:

1. The comparison to the DGX is really amazing, but 45 ms is still way too slow for real-time use. Out of necessity, the level of ray tracing in games is going to be significantly reduced compared with that specific demo.

2. The GPU is absolutely massive at 754mm^2. That's even larger than the big 600mm^2-plus dies NVIDIA has done in the past. It's smaller than Volta, but the target market there has really deep pockets; selling rejects at $3k is a nice side business, though. I'm guessing that NVIDIA only went that large because they absolutely had to in order to get the performance needed to make the RT and Tensor cores worthwhile. If TSMC's scaling claims for 7nm hold, that 754mm^2 die could be ~450mm^2. Toss in some more cores and NVIDIA's 7nm big die could be significantly faster while still staying in the 500-600mm^2 range.

3. The tensor core use for AI in games seems a bit incomplete or gimmicky. It feels very much to me like these are first and foremost Quadro rejects, where tensor cores are a big plus, and NVIDIA had to find some gaming purpose for that large portion of the GPU. I really have no problem with this conceptually, as die harvesting/binning is the status quo. I mention it mostly because it might be another shift in NVIDIA's GPU strategy. In the beginning of CUDA they built gaming GPUs that could also compute. In the last generation or so, especially with Volta, it seemed like the gaming and compute sides were going to diverge, a lot. Now we have Turing, and it's compute first; gaming GPUs are the rejects. Interesting.

4. Gaming performance on older, non-RT games is a crapshoot. 2080 Ti vs 1080 Ti: ~20% more CUDA cores at slower clocks, but with concurrent FP and INT, which means what? Who knows for now. I'm sure it's faster, but I can't wait for the [H] review to find out just how much faster.

5. NVLink is really cool, but even that amount of bandwidth is far short of GDDR6 (I'm assuming 100GB/sec, as 50x the old 2GB/sec SLI link per the RTX page, but it could be only 50GB/sec vs the original SLI). It's a lot better than PCIe 3.0, but is it going to be enough to really enable one "virtual" GPU? Can't wait to find out.

6. The RT should scare AMD; the tensor cores probably not, at least for gaming. As far as we know, AMD has no response to RT (I'd love to be wrong). RT has been long awaited and, used properly, can make games look far better. Best yet, it's a general technique that could save a lot of work hacking the effects. I expect RT to be adopted relatively quickly.

7. Given #6, I wonder what implications there are for AMD on the next gen consoles. Does RT stay on the PC or does it become a console requirement?
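The numbered observations lean on a few back-of-envelope figures, so here is a rough Python sketch of them. The 10 gigarays/s, 45 ms, 754mm^2 to ~450mm^2 shrink, and 50x-of-2GB/sec NVLink figures are the poster's; the CUDA core counts (3584 for the 1080 Ti, 4352 for the 2080 Ti) and the 2080 Ti's 14 Gb/s GDDR6 on a 352-bit bus are published specs. The rays-per-pixel result is only an upper bound that assumes the full quoted ray rate is spent on every frame, which real scenes won't reach.

```python
"""Back-of-envelope checks for the numbered observations (a sketch, not a benchmark)."""


def rays_per_pixel(rays_per_second: float, width: int, height: int, fps: float) -> float:
    """Upper bound on rays per pixel per frame if the whole ray budget is spent every frame."""
    return rays_per_second / (width * height * fps)


def frame_time_ms(fps: float) -> float:
    """Per-frame time budget in milliseconds at a given frame rate."""
    return 1000.0 / fps


def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Bandwidth in GB/s: per-pin data rate (Gb/s) x bus width (bits) / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


def pcie3_x16_gbs() -> float:
    """PCIe 3.0 x16, one direction: 8 GT/s per lane, 128b/130b encoding, 16 lanes."""
    return 8 * (128 / 130) * 16 / 8


if __name__ == "__main__":
    # Ray budget: 10 gigarays/s is the figure quoted in the post.
    for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
        print(f"{name}60: at most {rays_per_pixel(10e9, w, h, 60):.1f} rays/pixel/frame")

    # Observation 1: the 45 ms DGX-comparison frame vs. a 60 fps budget.
    demo_ms = 45.0
    print(f"45 ms = {1000.0 / demo_ms:.1f} fps, {demo_ms / frame_time_ms(60):.1f}x the 60 fps budget")

    # Observation 2: the poster's assumed 754 -> ~450 mm^2 shrink at 7nm.
    area_factor = 450 / 754
    print(f"area factor {area_factor:.2f}; a 500-600 mm^2 7nm die holds "
          f"{500 / 450:.2f}x-{600 / 450:.2f}x the shrunk die's logic")

    # Observation 4: published CUDA core counts (clocks and concurrent FP/INT not modeled).
    print(f"2080 Ti vs 1080 Ti cores: +{(4352 / 3584 - 1) * 100:.0f}%")

    # Observation 5: NVLink (poster's 50 x 2 GB/s assumption) vs. local GDDR6 and PCIe.
    nvlink = 50 * 2.0
    gddr6 = memory_bandwidth_gbs(14, 352)  # 2080 Ti: 14 Gb/s GDDR6 on a 352-bit bus
    print(f"NVLink ~{nvlink:.0f} GB/s, GDDR6 {gddr6:.0f} GB/s, PCIe 3.0 x16 {pcie3_x16_gbs():.1f} GB/s")
```

Even as an upper bound, roughly 20 rays per pixel per frame at 4K60 has to cover every effect a game traces, which fits the doubt about 4K. The PCIe figure is per direction; the post doesn't say whether its NVLink number is per direction or aggregate, so treat that comparison loosely.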

Take a look at [H]'s data... it sounds like something else is wrong with your PC or configuration, not the 1080 Ti. That's a stock FE card from [H]'s generational part 3 testing.

I don't recall [H] testing GRW, but for PUBG a 1080 Ti should be averaging (without OC) more like 85 fps, with a few drops below 60 fps. There are some serious questions about PUBG's CPU limitations holding back GPUs during those drops, but I've never seen anything 100% confirmed. If you're deviating from that significantly, I'd start looking for other problems or bottlenecks in your system.

Overclocking a 1080 Ti should add a good 20%.

I was running a stock 6700K at the time. I didn’t bother to OC since I was gaming on my mining rig, with a PCIe x16 riser on one of the 1080 Tis I had. That little Noctua L9i had no room for any overclocking at all. Right now I’m running an 8700K, but I sold the cards before I upgraded. ): I’m potentially getting another 980 Ti to SLI tomorrow, though, because I can’t deal with 30 fps jitter with the eye candy turned up. Either that, or if one of you guys is trying to sell your 1080 Ti for cheap, hit me up with a PM.

I'm keeping my 1080 Ti for now. I'd love a pair of 2080 Tis, but I will either wait it out until 7nm or grab 'em when I build a whole new system.

Noctua L9i, eh? There's your problem right there. That cooler is great (I have one on my NAS, an E3-1275Lv3 with a 45W TDP), but it's only rated for 65W TDP, not the 91W of your 6700K. That's going to cause thermal throttling on the CPU, and jitter regardless of what you use for a GPU. I guess you could put a 15k RPM industrial fan on it!

If you're still running that cooler, you likely have a CPU thermal throttling issue, not a GPU issue.

Edit: I think I also have one on my firewall (E3-1230Lv3).

Cool. Nope, I've got a Cryorig R1 now. I had to use a low-profile cooler for the mining enclosure I had.

Out of curiosity...that's an interesting combination. Small enclosure, small CPU heatsink for a mining rig makes sense, but then why did you have a 6700k in it?

Stryker7314

Gawd

- Joined: Apr 22, 2011
- Messages: 867

Not impressed with ray tracing; it doesn’t look realistic at all. Just a big, shiny, blurry mess. Too distracting, and I would turn it off anyway.

STEM

Gawd

- Joined: Jun 7, 2007
- Messages: 614

Turn all the eye candy all the way up and watch the GTX 1080 Ti die. [H] may have run the game on default settings, or without everything dialed up to 11...

Nobu

[H]F Junkie

- Joined: Jun 7, 2007
- Messages: 10,059

It says in the image... highest in-game settings, highest advanced settings.

Here he says the 2070 has higher "performance" than the Titan Xp. Do you guys think he is referring to general performance or to ray tracing only?

I'm guessing he means in ray tracing, as that is what the vast majority of the performance talk was about. I have a feeling the RTX 2070 will be up to 5% slower than the Titan Xp/1080 Ti in current games without ray tracing.

I converted my gaming rig to a mining rig when I saw that my 1080 Ti was making $4-5 a day during the mining peak this past March. Then I proceeded to buy 4 more just for shits and giggles. At best I broke even. It did fund my upgrade to the 8700K, though.

harmattan

Supreme [H]ardness

- Joined: Feb 11, 2008
- Messages: 5,129

Got an MSI 2080 Duke on preorder from OCUK, just over £700 all in (which is not horrible with the pound in freefall). We'll see when they deliver...

When does the review NDA end on these? My baseline: if it's not at least 10% faster (overclocking inclusive) than a 1080 Ti in current, i.e. non-ray-traced, games, I'm noping the heck out and going back to dual 1080 Tis.

You're changing from a 1080 Ti to a 2080 for a measly 10% performance increase? And people wonder why prices are rising.

Blade-Runner

Supreme [H]ardness

- Joined: Feb 25, 2013
- Messages: 4,366

Well this is awkward if true....

https://www.dsogaming.com/news/nvid...-tomb-raider-with-60fps-at-1080p-with-rtx-on/

Ray tracing kills frames even at 1080p. I doubt anyone in the market for this GPU plans on running it at anything less than 1440p, and the likely buyers are probably more interested in 4K anyway.

Oh wow, that would be embarrassing if true. So much hype for a feature that isn't even able to run properly.

Denpepe

2[H]4U

- Joined: Oct 26, 2015
- Messages: 2,270

I was thinking of going with a 2080 Ti this time, but after seeing the prices, that's not going to happen; you can build a decent mid-range PC for the price of one of those. €1,479 for an EVGA GeForce RTX 2080 Ti XC ULTRA GAMING (that's around $1,704).

Even the 2080s are around the €1k mark. I might skip this generation at those prices.

TheRealDarkphoX

n00b

- Joined: Mar 2, 2016
- Messages: 29

I'm coming in with a 5-year-old R9 290. I built a new 8700K system earlier this year and got an Acer Predator monitor (G-Sync) a few months back in anticipation of a GPU upgrade, knowing AMD would not be competitive in the high-end segment.

After much flip-flopping, I ended up pre-ordering a 2080 last night. It will be a massive upgrade (I also got my wife's approval, lol), so the price doesn't bother me as much as it did at first. The bundles offered by Newegg also added to the value equation. There was an EVGA RTX 2080 with an 860 EVO 1TB SSD for $900, "saving" $50. There were other bundles too, so it is possible to find a 'deal' if you're interested in one of these cards.

Armenius

Extremely [H]

- Joined: Jan 28, 2014
- Messages: 42,162

I ran GTA V at 50-60 FPS with the highest in-game settings on a Maxwell Titan X at 1440p. The 1080 Ti numbers in the [H] graph are right in line with what I get on my Pascal Titan X using the same settings.

Stryker7314

Gawd

- Joined: Apr 22, 2011
- Messages: 867

So will the Titan V leverage its tensor cores for ray tracing in games now that the cat is out of the bag? I sure hope those dudes don't get shafted, and that they get a driver update.

Gideon

2[H]4U

- Joined: Apr 13, 2006
- Messages: 3,558

Like I said, this is why you skip the first generation of anything: it lacks the horsepower and refinement. Ray tracing takes a ton of horsepower and will be the first thing people turn off when the fps tanks, which makes a chunk of the card they paid top dollar for useless.
