cyberguyz (Gawd; joined Aug 28, 2014; 710 messages)

We won't know until AMD releases it and the numbers come in, will we?


I think this "leak" is actually not Zen 2. More likely, it is the Zen+ 2800X meant to counter the 9900K.

Zen 2 will most likely be 16C/32T. No way the RTG would have Zen 2 a year before release. There must be some changes in the 2800X that the RTG needs to adjust for.

Color me skeptical on a 15% IPC increase. Maybe a 15% *overall* performance increase is possible. Meaning combination of clockspeed and IPC. AMD shot for a 10% performance uplift with Zen+, which they... more or less delivered on. A combination of minor 3% IPC bump

Then Zen 2 moves to an 8 core "CCX" that's the whole die.

a 2x 256 bit AVX setup (instead of 2x 128).


Or only a 1.5% IPC bump; it depends on what you are measuring.

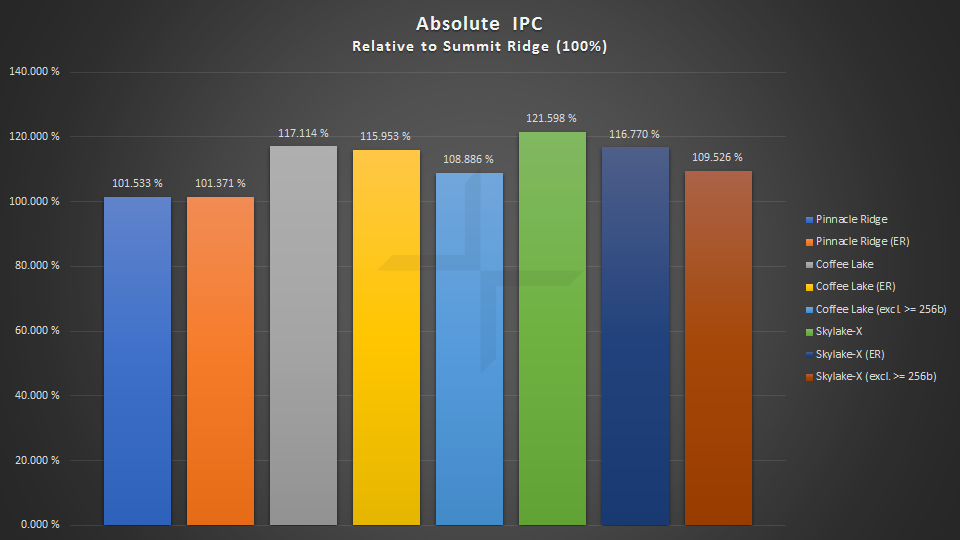


As much as I like the 8-core CCX idea, the latest rumor is that the ZP2 die is once again a pair of 4-core CCXs.

This is difficult to believe. Rome increases the core count to 64 cores. If each core now also had AVX units twice as wide, the memory subsystem would need to deliver roughly 4x as much (2x the cores times 2x the vector width) while maintaining backward compatibility with the SP3 socket specs.
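The 4x figure is just the two doublings multiplied together. A back-of-the-envelope sketch, assuming (as a simplification) that peak SIMD data demand scales with cores × vector units per core × vector width, using Naples' 32 cores and the thread's "2x 128-bit vs. rumored 2x 256-bit" framing; caches and clock changes are ignored, and the function name is made up for illustration:

```python
# Back-of-the-envelope model (an assumption, not AMD data): peak SIMD data
# demand scales with core count x vector units per core x vector width.

def simd_bits_per_cycle(cores: int, simd_bits: int, units_per_core: int = 2) -> int:
    """Total SIMD datapath width, in bits per cycle, across all cores."""
    return cores * units_per_core * simd_bits

naples = simd_bits_per_cycle(cores=32, simd_bits=128)        # 2x 128-bit per core
rome_rumored = simd_bits_per_cycle(cores=64, simd_bits=256)  # rumored 2x 256-bit

scale = rome_rumored / naples
print(f"Rumored Rome vs Naples peak SIMD demand: {scale:.0f}x")  # 4x
```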

I think what a lot of people tend to forget is that adding more cores increases latency as well.

Not really, the mere act of adding more cores does not add latency. Hence the 8700K does not have more latency than the older (but same architecture) 7700K. Changing core counts while maintaining the same interconnect architecture will have identical latency. The additional latency comes from the underlying interconnect architecture: it can be designed to handle large core counts using less die space (which usually increases latency), or more die space can be spent on an interconnect that has lower latency but isn't as conducive to large core counts.

Color me skeptical on a 15% IPC increase. Maybe a 15% *overall* performance increase is possible, meaning a combination of clock speed and IPC. AMD shot for a 10% performance uplift with Zen+, which they... more or less delivered on. A combination of a minor 3% IPC bump, a minor ~5% increase in max boost clock, and the Precision Boost rework (good for a nice practical performance boost) got them over the finish line, if barely. I expect Zen 2 will deliver more than this because of a much better process, so a 15% bump is not out of the question. Even more is possible if there is a core count increase to go along with it.
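A quick way to see how those pieces add up: under the usual simplification that single-thread performance scales with IPC × clock speed, fractional gains multiply rather than add. A minimal sketch using the 3% and 5% figures above (the helper name is hypothetical):

```python
# Sketch assuming single-thread performance ~ IPC x clock speed, so
# fractional gains multiply rather than add.

def combined_uplift(ipc_gain: float, clock_gain: float) -> float:
    """Overall speedup factor from fractional IPC and clock gains."""
    return (1 + ipc_gain) * (1 + clock_gain)

zen_plus = combined_uplift(ipc_gain=0.03, clock_gain=0.05)
print(f"3% IPC + 5% clock -> {zen_plus - 1:.2%} overall")  # 8.15%

# What would still have to come from elsewhere (e.g. the Precision Boost
# rework) to reach the ~10% target.
remaining = 1.10 / zen_plus - 1
print(f"Remaining to reach 10%: {remaining:.1%}")
```

On this model, IPC and clocks alone land a bit above 8%, which fits the "if barely" framing: the last ~1.7% would have to come from something like the boost rework.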

One possibility that crossed my mind is that Zen 2 may dispense with the CCX. I wondered the other day if 2x 4-core CCXs was a stopgap measure from AMD. Perhaps when designing Zen, they realized that while it was impressive, and ran right over Bulldozer, it wasn't quite going to reach parity with Intel in clock speed or IPC. So AMD used Infinity Fabric (IF) and expanded a 4-core design into an 8-core design so that it would beat the 7700K in at least some tasks and create a market for Zen. This might have happened early in the design process. Anyway... the IF would still be useful for gluing together Epyc, so they just used it on die as well as for gluing together multiple dies.

Then Zen 2 moves to an 8 core "CCX" that's the whole die. Full ring bus internally. But, of course, you can still glue together multiple dies for Epyc and TR, for rapid, cheap core count scaling. A 4 core version could continue to be produced for APU design, with the interconnect still used.

If true, Zen 2's IPC improvements may be tied partly to the reduction in latency from eliminating cross-CCX traffic and, perhaps, a 2x 256-bit AVX setup (instead of 2x 128-bit). Combine that with what we assume will be much better clock scaling... and you have something that should compete well against a 9900K, while still preserving the ability to glue multiple dies together for TR and Epyc at relatively low cost.

Of course, this is all supposition. I have no idea. We could still see a 6-core CCX or something, and this ES (presuming the rumor is true) could simply have had some cores disabled because it's a partly broken engineering sample.

The IF frequency is clocked 1:1 to the memory frequency. The illusion of a 1:2 ratio comes from the fact that what is colloquially called "memory frequency" is actually the memory transfer rate.

As we know from The_Stilt's deep dive on the original Zen ES chip, it ran the Infinity Fabric (uncore) at 1:1, and it was said that this would likely be the case for the EPYC chips, and TR by association (whether or not that came to be the case I don't know, and I don't know how we'd even be able to determine what speed it runs at).

I don't recall it ever being explained why they ended up going with a 1:2 speed.


That "momentum" is worth nothing on its own.

Leave it to AMD to completely lose their momentum by forfeiting their core count supremacy...

This is incorrect.

Not really, the mere act of adding more cores does not add latency. Hence the 8700K does not have more latency than the older (but same architecture) 7700K. Changing core counts while maintaining the same interconnect architecture will have identical latency.

I have to disagree here. Sure, it won't scale as well with six or eight cores, but you could use the mesh in a similar way. There are a number of possible arrangements, with varying degrees of complexity, depending mostly on the limits of the technology it's implemented on.

In Zen, the cores of a CCX are connected in a full mesh which scales with quadratic complexity.

This topology is infeasible for six or eight cores.

X—X—X
|\|\|
X—X—X

X—X—X
|\ /|
X—X—X

 X—X
/| |\
X + X
| | |
X + X
\| |/
 X—X

or

CACHE CACHE
↑ ↑ ↑ ↑
X X X X—X—X
|\|\| |\|\|
X X X X—X—X
↓ ↓ ↓ ↓
CACHE CACHE

I specifically meant a full mesh (or fully connected network) as it is now (i.e. all nodes/cores connected directly).

I have to disagree here. Sure, it won't scale as well with six or eight cores, but you could use the mesh in a similar way. There are a number of possible arrangements, with varying degrees of complexity, depending mostly on the limits of the technology it's implemented on.

Yeah, for some reason I confused the core configuration with the die configuration. You could swap the cache and X's in my ASCII diagram to make it more representative of actual possible configurations. As shown here, though, it's not necessary to have one L3 slice per core: you could have one per two cores. You end up with a smaller cache, but I wonder what the effects on latency would be.

I specifically meant a full mesh (or fully connected network) as it is now (i.e. all nodes/cores connected directly).

There are certainly ways to reduce the number of links, but this inevitably leads to forwarding, which adds routing latency and complexity.
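The trade-off between the quadratic link count of a full mesh and the forwarding cost of fewer links can be put into numbers. A minimal sketch, assuming uniform all-to-all traffic and using a bidirectional ring purely as one example of a reduced-link topology (it is not a claim about what AMD actually uses or would choose; function names are illustrative):

```python
# Compare two ways to wire n cores/L3 slices, assuming uniform all-to-all traffic:
#   * full mesh: n*(n-1)/2 links, every destination is 1 hop away
#   * bidirectional ring: only n links, but traffic is forwarded through
#     intermediate stops, so the average hop count grows with n

from itertools import permutations

def full_mesh_links(n: int) -> int:
    return n * (n - 1) // 2

def ring_hops(a: int, b: int, n: int) -> int:
    """Shortest distance between stops a and b on a bidirectional ring."""
    d = abs(a - b)
    return min(d, n - d)

def average_ring_hops(n: int) -> float:
    pairs = list(permutations(range(n), 2))
    return sum(ring_hops(a, b, n) for a, b in pairs) / len(pairs)

for n in (4, 6, 8):
    print(f"{n} cores | mesh: {full_mesh_links(n):2d} links, 1.00 hops"
          f" | ring: {n:2d} links, {average_ring_hops(n):.2f} hops")
```

For 4, 6 and 8 cores the mesh needs 6, 15 and 28 links, while the ring keeps one link per stop but its average distance grows from about 1.33 to 1.8 to 2.29 hops.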

Regarding the cache in the middle, this is how it is done right now at a high level of abstraction: four cores are connected to the L3 cache.

However when looking at the detailed implementation, the cache consists of four slices, each adjacent to one core, which are connected to each other.

The connection between the slices is typically graphically presented as a full mesh (incl. by AMD), but AFAIK the actual topology has never been officially mentioned.

Intel also uses one L3 slice per core, and all are connected via (modified) ring or mesh.

The interesting observation here is that neither AMD nor Intel uses a physically unified large cache block.

As I said before, AMD is evidently very active in the research of interconnects so I am confident they can come up with a good compromise of complexity and performance.

It will be interesting to see whether AMD believes the drawbacks of their NUMA-esque architecture are serious enough to justify a paradigm shift.

If the Infinity Fabric and locality-aware software (scheduler and applications) can be improved enough, the CCX might not be worth giving up.

The latency of the CCX-local L3 cache is actually superior to the Intel ring bus counterpart.

The RTG has just received its first Zen 2 sample (to optimize for) and it's really impressive.

8C/16T

4.0 GHz/4.5 GHz

DDR4-3600 CAS 15

Radeon RX Vega 64 LE

__________________________

The good: It's already nibbling at the Core i7-8700K.

The bad: It crashes a lot.

The ugly: It crashes all the time. Some of the tests have to be run multiple times because they crashed before finishing.

I know it's early, but that's disappointing. Either they improve IPC by at least 5-10%, or they push clock speeds a bit higher.

Hopefully, very hopefully, this will be resolved in six months' time.

Also, to be honest, considering the rumoured potential launch dates, I'm surprised this didn't happen at least 2 or 3 months ago. (I could've sworn there were indications this had happened months ago?)

I'm still trying to track down the exact post in his thread (sadly, that's even assuming it was his Deep Dive thread that I read it in), but given I had originally read the 24 or so pages it had at the time, it'll take me a while to track down the post :\

The IF frequency is clocked 1:1 to the memory frequency. The illusion of a 1:2 ratio comes from the fact that what is colloquially called "memory frequency" is actually the memory transfer rate.

DDR stands for Double Data Rate, which means that DDR memory performs two transfers per clock cycle.
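The arithmetic behind the "1:1 vs 1:2" confusion, as a small sketch. The three DDR4 ratings are just example values, and the fabric line assumes the 1:1 relationship to the real memory clock described above:

```python
# A DDR4 "speed" label is a transfer rate in MT/s. DDR moves data twice per
# clock cycle, so the real memory clock is half that number. With the fabric
# clocked 1:1 to the real memory clock, it only looks like "1:2" when compared
# against the MT/s label.

def memory_clock_mhz(transfer_rate_mts: int) -> float:
    """Two transfers per clock cycle -> clock = transfer rate / 2."""
    return transfer_rate_mts / 2

for label in (2400, 2666, 3200):
    memclk = memory_clock_mhz(label)
    fabric = memclk  # assumed 1:1 with the real memory clock
    print(f"DDR4-{label}: memory clock {memclk:.0f} MHz, fabric {fabric:.0f} MHz")
```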

The Stilt's analysis (in its latest form) explains this.

An AMD slide (not sure from which presentation) mentioned that the fabric is in the memory clock domain, so they didn't have to add a buffer in between, which would add latency to memory accesses.

Regarding your memory troubles:

All I can say is that memory is... complicated when you try to run it near its physical limitations.

A memory controller is incredibly complex because it has to implement intricate procedures that are parameterized over dozens of memory-specific details (timings, etc.).


You are right. When I read your first paragraph, I vaguely remembered that he had written something about 1:1.

After holding off posting for a day so I could research, I finally managed to track down at least one of the posts I was recalling. (I'd still swear he mentioned it again in relation to a chip sample he had, but regardless...)

"UCLK, FCLK & DFICLK default to half of the effective MEMCLK frequency (i.e. DDR-2400 = 1200MHz).

There is a way to configure the memory controller (UCLK) for 1:1 rate, however that is strictly for debug and therefore completely untested. The end-user has neither the knowledge or the hardware to change it.

AFAIK FCLK & DFICLK are both fixed and cannot be tampered with. However certain related fabrics, which run at the same speed have their own frequency control. The "infinity fabric" (GMI) runs at 4x FCLK frequency."

In all fairness, I suppose it's my error for assuming that the UCLK (aka UMC Clock; Unified Memory Controller) was directly related to the IF speed. And it still could be, but from what he says, that doesn't sound like the case. I'll have to do my damn best to disassociate the two and forget I ever mentioned it. BUT, it does still leave me curious what running the UMC at 1:1 would mean performance-wise. The person quoted in his response seems to feel it'd have a sizable impact on things, but since I'm not an engineer, I can't even begin to speculate.
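Putting The Stilt's quoted default ratios into numbers, as a minimal sketch: it models only the quoted defaults (UCLK, FCLK and DFICLK at half the DDR rating, GMI at 4x FCLK) and deliberately ignores the debug-only UCLK setting. The function name is made up for illustration:

```python
# Default first-gen Zen clock relationships as quoted above:
#   UCLK, FCLK and DFICLK = half the effective (DDR) MEMCLK rating
#   GMI ("infinity fabric" links between dies) = 4x FCLK
# The debug-only UCLK mode is not modelled.

def zen1_default_clocks(ddr_rating: int) -> dict:
    fclk = ddr_rating / 2          # e.g. DDR-2400 -> 1200 MHz
    return {
        "UCLK": fclk,
        "FCLK": fclk,
        "DFICLK": fclk,
        "GMI": 4 * fclk,           # "runs at 4x FCLK frequency"
    }

clocks = zen1_default_clocks(2400)
print(clocks)  # {'UCLK': 1200.0, 'FCLK': 1200.0, 'DFICLK': 1200.0, 'GMI': 4800.0}
```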

Yeah, it was just a remark. I'm personally stuck at 3133 with my 2700X, but only due to my own laziness.

As for "my memory troubles", I'm not sure which ones you mean? Aside from a couple of BIOS releases that caused me issues, I've been able to run my RAM at DDR4-3200 on my 1700X since launch, including on my Titanium's v1.10 shipping BIOS.

Or do you mean what I had been talking about with odd/even timings? I do understand how complex memory is, and how one timing can play off another, so that you either gain more performance or lose stability.

Regardless, it's not really that I'm having trouble, in the sense that it's not something I'm doing wrong... it's just a quirky choice AMD has made. When I was permitted to run odd timings, I had perfect stability; there were no troubles to speak of. That's why I find it strange that the ability was taken away again.

Memory blocks typically have quite a bit of redundancy. SRAM takes up a huge portion of most chips, so it would be foolish not to consider yields in each slice already.

In terms of using a 'solid chunk' of cache... To me, the most obvious reason would be yields.

When you use a solid chunk of cache, if that happens to be the sector of a die with a fault, then that die is junk.

However... when you have segmented cache, I suspect it opens up the ability to laser off the faulty slice, then bin the die as a lower-end product like an i5 or i3 (or Pentium and Celeron, when they were options).

Even if that isn't necessary due to high yields, as in AMD's case with Ryzen, a solid block might have made it more difficult to then create the APU, which has half the L3 in its CCX.
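To make the yield argument concrete, here is an illustrative sketch using the textbook Poisson defect model (yield = e^(-D·A)). The defect density, cache area and slice count are invented numbers, and the model ignores the spare rows/columns that real SRAM arrays use (the redundancy point made above), so it only shows the shape of the argument, not real Ryzen or Intel data:

```python
# Poisson defect model with made-up numbers: a monolithic cache must be
# entirely defect-free, while a sliced cache can be salvaged as a cut-down
# part if exactly one slice is bad. Spare rows/columns are ignored.

import math

DEFECT_DENSITY = 0.2   # hypothetical defects per cm^2
CACHE_AREA = 0.5       # hypothetical total L3 area in cm^2
SLICES = 4             # hypothetical slice count

def p_clean(area: float) -> float:
    """Probability that a region of the given area has zero defects."""
    return math.exp(-DEFECT_DENSITY * area)

monolithic_ok = p_clean(CACHE_AREA)

slice_ok = p_clean(CACHE_AREA / SLICES)
all_ok = slice_ok ** SLICES                                      # full part
one_bad = SLICES * (1 - slice_ok) * slice_ok ** (SLICES - 1)     # salvageable

print(f"Monolithic cache usable:   {monolithic_ok:.1%}")
print(f"Segmented, full part:      {all_ok:.1%}")
print(f"Segmented, incl. salvaged: {all_ok + one_bad:.1%}")
```

In this model slicing alone doesn't change the odds of a fully working cache (the per-slice probabilities multiply back to the monolithic figure); the gain comes entirely from salvaging dies with one bad slice, which is the binning point made above.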

AFAIK the Raven Ridge CCX still has four slices, but each is half the size.

Yeah, for some reason I confused the core configuration with the die configuration. You could swap the cache and X's in my ASCII diagram to make it more representative of actual possible configurations. As shown here, though, it's not necessary to have one L3 slice per core: you could have one per two cores. You end up with a smaller cache, but I wonder what the effects on latency would be.

There are some excellent die shots by Fritzchens Fritz on Flickr. One of my favourites is this (be sure to check out the full-resolution originals).

Then again, this is all assuming that the cache is laid out as depicted in die shots of the chips. Keep in mind that the die shots of the 8C Zen and 4C Zen APU are provided by AMD. I'm not trying to create a conspiracy theory, but it's known that some elements are obscured while others are altered slightly to better illustrate what they are. After all, it's just PR material we're shown. I've also never bothered to look around for a third-party die shot of Ryzen, assuming any have been published, so I can't say I've compared them for differences.

Don't forget that with a mobile processor you are mostly limited by the amount of heat generated by the silicon. You could go higher on raw clock speed, but the design would have to be able to cope with it (heat).

The AnandTech article on the new 7nm TSMC Apple A12 processor is quite interesting.

120-200 MHz gains compared to its 10nm TSMC A11 predecessor. It's hard to say how this would translate for AMD CPUs coming from the current GlobalFoundries 12nm process.

It could be that these clock boosts are purely the result of better power efficiency. Interesting nonetheless.

If any of that shit is true, AMD is gonna need Zen 2 sooner rather than later. That 9900k is looking MIGHTY impressive. Finally a balls-to-the-wall product out of Satan Clara.

Nothing I have seen looks impressive, and it's looking to be priced way too high as well. 12% faster in gaming than a 2700X is just not that impressive, especially when you factor in price.

The key difference is that it's also faster in multithreaded productivity tasks. Up until now, there's been a good rationalization for Ryzen over mainstream Intel products due to better multithreaded performance and only a minor gaming hit. Neither the 8700K nor the 2700X could be said to wear the absolute performance crown in the mainstream; it's more use-case based. Both are relatively equal. The 9900K turns that on its head, offering better performance in both types of tasks, though admittedly at a higher price point. The 2700X will still win on performance per dollar, provided it remains competitive with the 9700K (which it probably will), but speaking for myself, if I were building on a mainstream platform today, it would be 9900K all day.

Either way, hopefully Zen 2 competes favorably with the 9900k.

All AMD needs to do is increase clock speeds and IPC and Intel is in a world of hurt.

This is all they've ever needed to do, and they do occasionally pull it off.

Yep. Not that I have any evidence, but we do know they are sampling 7nm to people right now. BUT... yeah, I'll wait for official reviews.

AND... it looks like another terrible new rumor has come out /facepalm https://wccftech.com/amd-zen-2-7nm-cpu-13-percent-ipc-increase-rumor/

7% and they up the clock speeds a bit (still big ifs), but that'd be enough, I think.


I just skimmed through so I might've missed it, but what's so bad about that rumor?


I was hoping for more cores, but it's starting to look like the core count isn't going up on the mainstream. Not confirmed, of course. But seems that way.

AMD needs ~15% more performance per core if they intend to compete at the same number of cores. Whether that comes from clockspeed or IPC, or both... doesn't really matter. But that's the bar. I think it's very possible, but by no means a sure thing.
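Under the same simplification that per-core performance scales with IPC × clock speed, the ~15% bar translates into a required clock gain for any given IPC gain. A small sketch; the 7% and 13% IPC figures are just the numbers floated in this thread, not confirmed values, and the helper name is hypothetical:

```python
# Sketch assuming per-core performance ~ IPC x clock speed.
TARGET = 1.15  # ~15% more per-core performance

def clock_gain_needed(ipc_gain: float, target: float = TARGET) -> float:
    """Fractional clock increase so that (1 + ipc_gain) * (1 + clock) == target."""
    return target / (1 + ipc_gain) - 1

for ipc in (0.0, 0.07, 0.13):
    print(f"IPC +{ipc:.0%}: clocks need +{clock_gain_needed(ipc):.1%}")
```

With no IPC gain, clocks would have to rise about 15%; a 7% IPC gain needs roughly 7.5% more clock, and a 13% IPC gain only about 1.8%.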

...Unless you forget about an active interposer, which AMD is developing and has patented.

In Zen, the cores of a CCX are connected in a full mesh which scales with quadratic complexity.

This topology is infeasible for six or eight cores.


I'm not aware of them using HBM for the active-interposer CPU designs, only in MCMs/GPU stuff; the active interposer is more just a way to provide better mesh/linking without taking up valuable, high-density 7nm die area.

So long as costs (and worse yields, à la HBM!) don't skyrocket in the process, I see this as a win for them, supposing that they can keep performance in line with non-interposer parts. How well HBM works is a good sign, though!

Nope, they just have to come anywhere near it and price appropriately.

The magic fairy told you?

Edit: and this is not good.

Engineering samples are expected to crash a lot. But ONLY coming close to matching an 8700K? Who freaking cares? It needs to BEAT a 9800K. Intel is going to have its own 8C/16T CPU that will be the definitive gaming champion, and AMD needs to beat that.