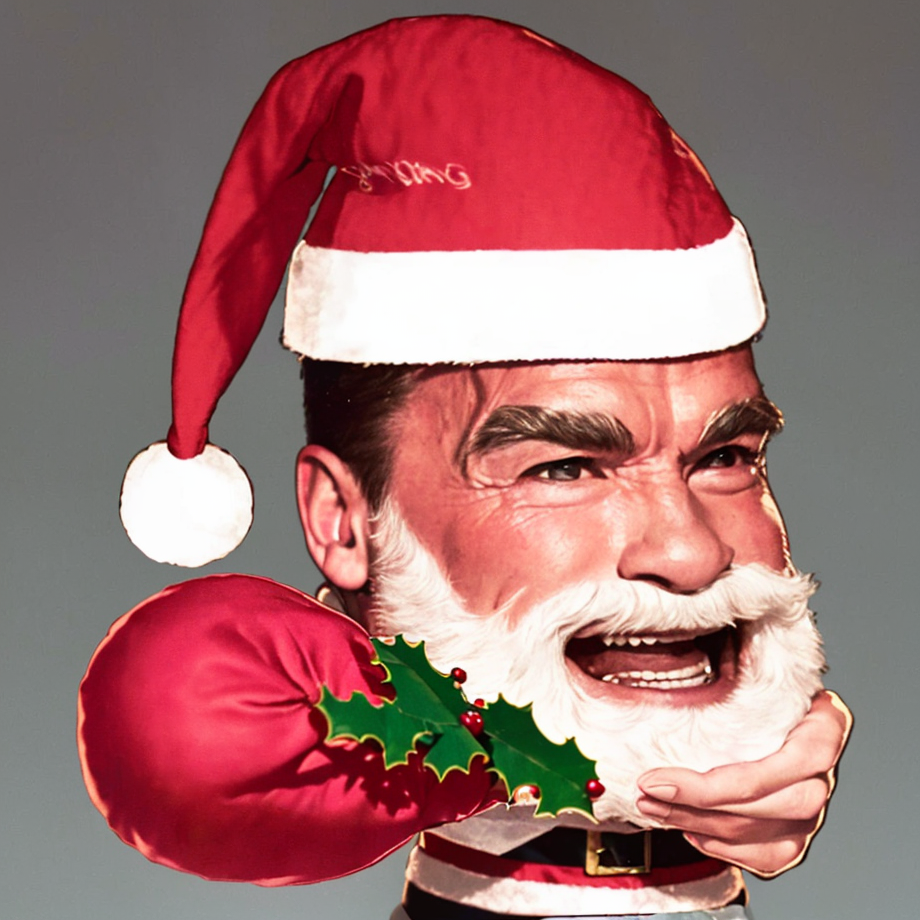

https://videocardz.com/newz/nvidia-introduces-system-memory-fallback-feature-for-stable-diffusion

I mean I would prefer more memory on the GPU, but this is at least something better than a hard crash.
