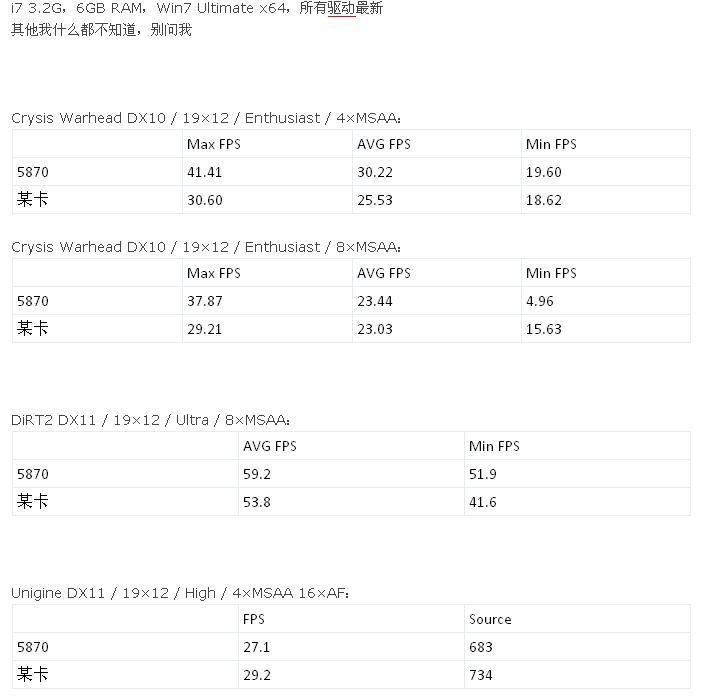

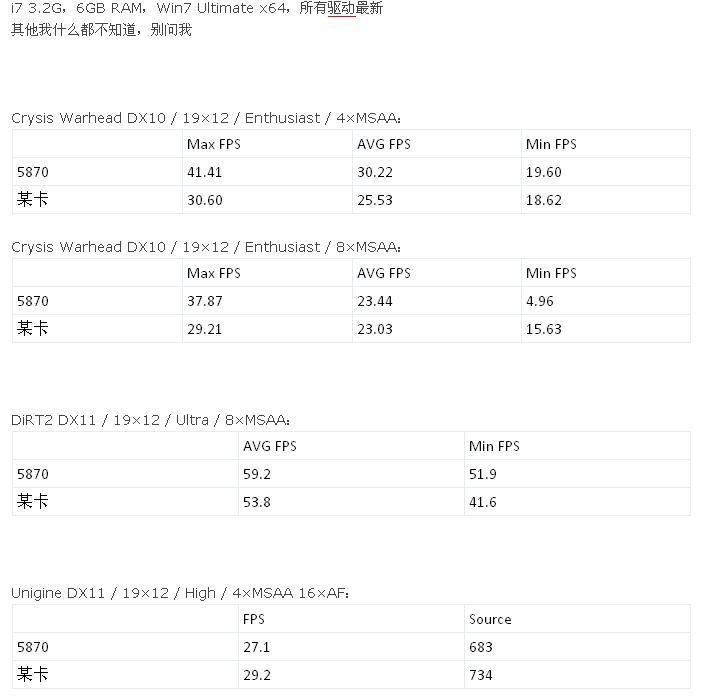

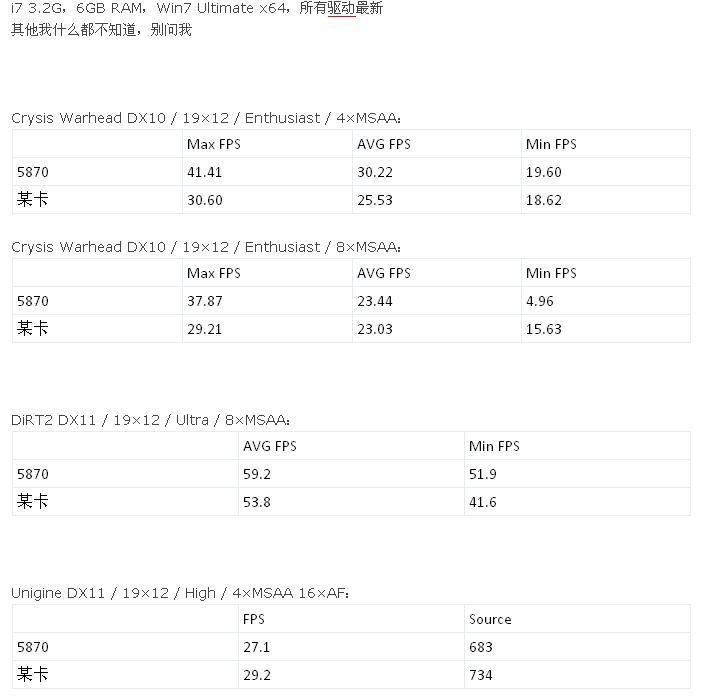

The Chinese-named card is most likely a GTX470, but I'm not sure.

Another rumor from a Chinese forum:

http://we.pcinlife.com/thread-1366899-1-1.html

The gap between the 5870 and the 470 suggests that Charlie's 5% claim might actually be true.


The numbers could make sense if that is a GTX470. Typically, mid- and high-enthusiast-level cards seem to be separated by around 10-20% in performance. A 20% increase over the 470 numbers seen here (if real) would put the 480 at about the 5870's level. Of course, which game is being played makes a large difference as well.
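"20% faster" and "20% slower" are not the same gap, which matters when projecting a 480 from 470 scores. A minimal sketch, using made-up frame rates (the 50 and 60 fps values are placeholders, not the numbers in the screenshots):

```python
def required_uplift(slower_fps: float, faster_fps: float) -> float:
    """Fractional speed-up the slower card needs to match the faster one."""
    return faster_fps / slower_fps - 1


def deficit(slower_fps: float, faster_fps: float) -> float:
    """How far behind the slower card is, as a fraction of the faster card's score."""
    return 1 - slower_fps / faster_fps


# Hypothetical frame rates, NOT taken from the leaked benchmarks.
gtx470_fps = 50.0
hd5870_fps = 60.0

print(f"Uplift needed to match: {required_uplift(gtx470_fps, hd5870_fps):.1%}")
print(f"470 deficit vs. 5870:   {deficit(gtx470_fps, hd5870_fps):.1%}")
```

With these placeholder numbers, a 470 that trails the 5870 by about 16.7% needs a 20% uplift to draw level.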

My money is on Fermi and Cypress trading blows.

From the rumored specs, the 470 has what, 10% fewer shaders than the 480? If so, it would be hard to get a 20% increase out of slightly more shaders and a bit more memory/bandwidth.

The performance delta is also highly dependent on clock speed differences. The 5870 has a 17%+ faster clock speed (over the 5850) alone. I'd bet the same comparison could be made between the 470 and 480, where the clock speed has a greater effect than the number of shaders.

I agree with you there; 20% was only a guess. Based on what you've pointed out, shader-intensive apps like those tested above may scale a bit beyond the 10%, but not much more. My estimate was based loosely on the 5850/5870, where the 5850 has 10% fewer shaders than the 5870 and loses about 15% in performance.
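To see how shader count and clock combine on paper, here is a rough sketch that assumes peak throughput scales linearly with shaders × clock (an idealized assumption; real games also depend on memory, ROPs, drivers and the CPU). It uses only the two figures quoted in this thread for the 5850 versus the 5870:

```python
def relative_throughput(shader_ratio: float, clock_ratio: float) -> float:
    """Peak shader throughput of one card relative to another.

    Idealized assumption: throughput scales linearly with
    (number of shaders) x (core clock), ignoring memory and other limits.
    """
    return shader_ratio * clock_ratio


# Figures quoted in this thread for the 5850 relative to the 5870:
# 10% fewer shaders, and the 5870 clocked about 17% higher.
shader_ratio = 0.90
clock_ratio = 1 / 1.17

ratio = relative_throughput(shader_ratio, clock_ratio)
print(f"5850 peak throughput: {ratio:.0%} of a 5870 ({1 - ratio:.0%} deficit)")
```

On paper that is roughly a 23% gap, larger than the ~15% real-world difference mentioned above, so this kind of estimate likely overstates how much games scale with raw shader throughput.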

Not much, if these numbers are close to accurate. Ouch, just ouch. The 5890 refresh should be able to take on or overcome the 480 if AMD really wants to push it.

Then what is the point of waiting for Fermi, other than folding?


Are you Mindfury @ B3D? Same exact post over there.

No, I am not him.

Heh, you were right: http://www.semiaccurate.com/2010/02/20/semiaccurate-gets-some-gtx480-scores/

Not hardly. Charlie's 5% numbers were for the GTX480, not the 470:

The GTX480 with 512 shaders running at full speed, 600 MHz or 625 MHz depending on which source, ran on average 5 percent faster than a Cypress HD5870, plus or minus a little bit. The sources were not allowed to test the GTX470, which is likely an admission that it will be slower than the Cypress HD5870.

Ah, simple answer to that: old drivers! New drivers boost Crysis Warhead performance by 30%.

That post was put up on the 3rd of March, and the new drivers had been available for a few days before that. If the post itself is factual, it's possible, but not likely, that they were using old drivers.

How can Fermi, with a 384-bit bus versus ATI's 256-bit, be slower?


UP TO 13% with a single GPU, not 30%.

The 384-bit 8800 GTX is slower than the 128-bit 5770. The 256-bit 8800 GTS 512 MB is faster than the 320-bit 8800 GTS 640 MB.

One spec does not a complete picture paint.


But both Fermi and Cypress use GDDR5, while the 8800 GTX uses GDDR3 and the 5770 uses GDDR5.
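Peak memory bandwidth depends on both the bus width and the per-pin data rate, which is where GDDR3 versus GDDR5 comes in. A small sketch of that arithmetic; the data rates below are commonly cited reference memory speeds for these two cards and are treated here as assumptions:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus width (bits) / 8 gives bytes per transfer; multiplying by the
    effective per-pin data rate (Gbit/s) gives GB/s.
    """
    return bus_width_bits / 8 * data_rate_gbps_per_pin


# Assumed effective per-pin data rates:
# 8800 GTX: 384-bit GDDR3 at 1.8 Gbps; HD 5770: 128-bit GDDR5 at 4.8 Gbps.
cards = {
    "8800 GTX (384-bit GDDR3)": peak_bandwidth_gbs(384, 1.8),
    "HD 5770 (128-bit GDDR5)": peak_bandwidth_gbs(128, 4.8),
}
for name, bw in cards.items():
    print(f"{name}: {bw:.1f} GB/s")
```

Under these assumptions the 8800 GTX still has more raw bandwidth than the 5770 (about 86.4 vs. 76.8 GB/s) despite being the slower card, so neither bus width nor total bandwidth alone settles which card is faster.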

So? The number of shader cores will not tell you how efficient they are at a specific task, the number of transistors will not tell you how many of them are useless for gaming purposes, and so on.

Because you can't measure performance by the bitness of the memory bus?

The bitness, eh?

That's gold!


The purpose of memory bandwidth is to keep shader cores and texture units fed, and provide a destination for pixels written from the ROPs.

Think of the memory bus and speed like a tire: wider tires rated for faster speeds mean you can really improve your grip and performance. But the best tires in the world mean nothing when your car's engine has the power of a lawnmower.

If you don't have enough memory bandwidth, it will hold back the performance of the execution units on the card. On the other hand, once you have "enough" memory bandwidth, anything more is just overkill.

The HD 5870 has just enough memory bandwidth to function. The GTX480 has more bandwidth than the 5870 because Nvidia was aiming for a performance level about 25-50% faster than the 5870. Unfortunately, Nvidia was unable to push their execution units to the predicted levels of performance, so the extra bandwidth is largely unnecessary.

The card has the extra bandwidth to drive higher performance, but the execution engine has failed to live up to expectations.
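The "too little bandwidth holds you back, more than enough is overkill" idea can be sketched with a simplified roofline model: attainable throughput is the lower of the execution units' peak and what memory can keep fed. The card and workload numbers below are hypothetical, not real GPU specs:

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float,
                      flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by whichever limit is hit first.

    bandwidth_gbs * flops_per_byte is the compute rate memory can keep fed;
    peak_gflops is the most the execution units can do.
    """
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)


# Hypothetical card and workload, not real GPU specs.
PEAK_GFLOPS = 1000.0   # execution-unit ceiling
FLOPS_PER_BYTE = 5.0   # work done per byte moved from memory

for bw in (100.0, 200.0, 300.0):
    perf = attainable_gflops(PEAK_GFLOPS, bw, FLOPS_PER_BYTE)
    limit = "memory-bound" if bw * FLOPS_PER_BYTE < PEAK_GFLOPS else "compute-bound"
    print(f"{bw:.0f} GB/s -> {perf:.0f} GFLOP/s ({limit})")
```

Going from 100 to 200 GB/s doubles throughput here, but 300 GB/s buys nothing because the execution units are now the limit; that unused headroom is the situation described above for a card whose execution engine falls short of its memory system.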

Wow, if these numbers are accurate, then who, or rather why, would anyone buy a 470/480? I mean, unless they give them away at fire-sale prices, which they can't afford to do, so, um, yeah. They might be fucked. I hope this isn't true, since I wanted two 480s, but not at 5870 speeds or slightly above, and not this late in the game. /sigh

Since you have the actual Fermi on you right now and are doing benchmarks, can you send it to me after you finish?

I guess it's worth noting that the thing did pull ahead in Crysis with 8x AA, but Crysis has usually been an Nvidia-tuned game, so nothing too surprising. We'll see, though, once the drivers are better tweaked.


I believe the rumors, since they seem to be pretty consistent. It's your thing if you choose to not believe. I'm not here to argue anything.

But my point stands regardless of what the true performance of Fermi is: you cannot judge performance of a video card purely by memory bandwidth. Lack of bandwidth can hold a card back, but in the end it's the execution pipeline that gets things done.

You're mistaking me for someone else; I never said that.

Fermi has everything on paper, from what the specs show, from shading to memory bandwidth.

I don't know where you're getting your info from.

Read the thread. My original post was in response to rocketr2, not you.

So, do us all a favor, and stop trying to start a flamewar, troll.

I'm not trying to start anything. By the way, you quoted me when you said that, so don't go there.
