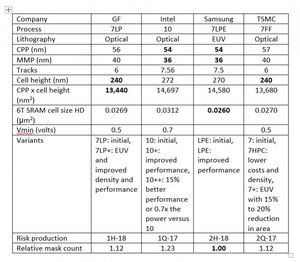

Ryan Shrout is reporting that Intel CEO Brian Krzanich stated that Intel will have its first discrete graphics chips available in 2020. No direct quote is given by Shrout, only paraphrasing, and it is a loose statement at best. Personally, I am betting on something more like 2021 or 2022 for anything "competitive" in the gaming arena. Keep in mind that Intel is looking at GPUs for things far beyond mere gaming, so at this point, "discrete graphics chips" could mean just about anything.

Intel CEO Brian Krzanich disclosed during an analyst event last week that it will have its first discrete graphics chips available in 2020. This will mark the beginning of the chip giant’s journey toward a portfolio of high-performance graphics products for various markets including gaming, data center and artificial intelligence (AI).