- Joined

- May 18, 1997

- Messages

- 55,601

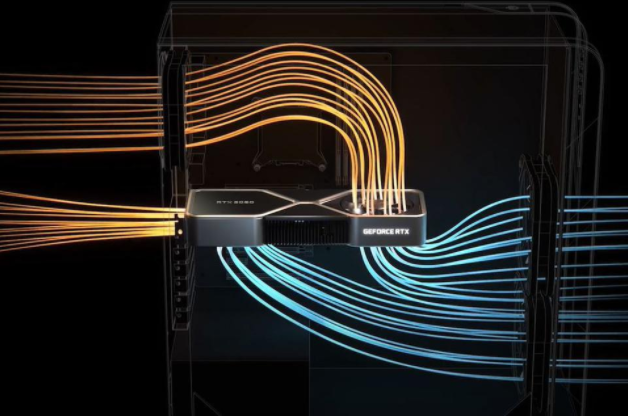

All true, but packing all those components together in such a small space does not bode well for heat transfer. And already pushing 325W or 350W? Oof. I don't think Samsung has left a lot of headroom in these chips. Asus is already touting a 400W model. I would think that is about topped out.

The PCB doesn't dissipate much power, so its size has little relevance.

What affects overclocking more is how automated overclocking and power control have become.

As time goes on, we get less control; it is more automated.

The problem is we don't get potential high clocks for free anymore; we have to pay for them.

Everything is performance binned.

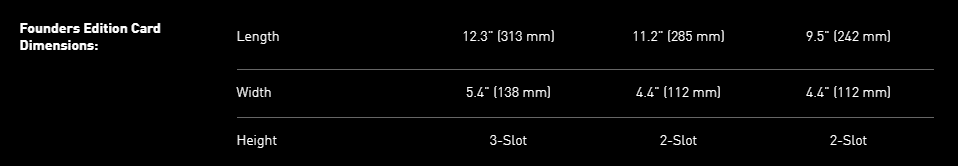

3090/3080/3070

Have they posted measurements yet? I think I only have about an inch of length over my current Aorus 2080 Ti before the card would be hitting my side-mounted CPU cooler. I'll be a sad boi if I can't fit the 3090.

No clocks, no prices.....hmmm.

I will say it makes me wonder a bit, because none of the AIB partners have released clock speeds yet for the factory OCed cards (as far as I have seen). And these cards are ostensibly about 2-4 weeks away.

There will be 3+ slot cards as well, I was told.

Most AIB cards look like they have 2.5- to triple-slot coolers, so it will be interesting to see what the OCs end up being for each brand.
