With a conventional open fan card, the hot GPU air is mostly just recirculated inside the box. It's eventually going to heat up everything, including the CPU.

You need better airflow design if you're just leaving GPU air to recirculate in the case.

And we actually built our own equipment to test transient power response. I do not think any other review site ever covered that, and that is what ASUS is focusing on. You can ask @[spectre]; he knows a lot more about this than I do.

Might be problematic for CPU temperatures, as I'm still using old-fashioned air cooling (Noctua NH-U12S).

Good for the card temp but bad for my CPU setup. Gotta see how other AIBs are designing their cooling systems.

View attachment 275446

Thanks for your insight. Though it probably won't be top of the agenda for many reviewers, who will be racing to get standard reviews up.

I think it's just the angle; it makes it look bigger than it is.

Yeah. We had to go the route of building custom equipment because loads aren't static, and even active load testers cannot mimic those transient spikes of power draw. It was one of those innovations that we brought to the table years ago that continues to be relevant today. For a while, power draws were not increasing as much as in the past. However, with what look to be some very power-hungry new components, those kinds of issues are likely to crop up again.

How much does the game asset decompression support mitigate GPU RAM limits? At first I was uncertain about the 3080's 10 GB buffer and was wondering whether I could rationalize the 3090's far higher price for a correspondingly fractional performance increase. But it seems to me that if assets can be loaded onto the GPU much more quickly than before, that might ameliorate RAM exhaustion.

Are you talking about RTX IO / DirectStorage or something else?

Did you notice if parts were particularly sensitive to voltage changes?

I know in my S4, as well as my parents' Benz, that if the 12 V battery even starts to go due to age or other problems, all sorts of systems in the vehicle start to behave erratically. Wondering if the same would be true for PC components?

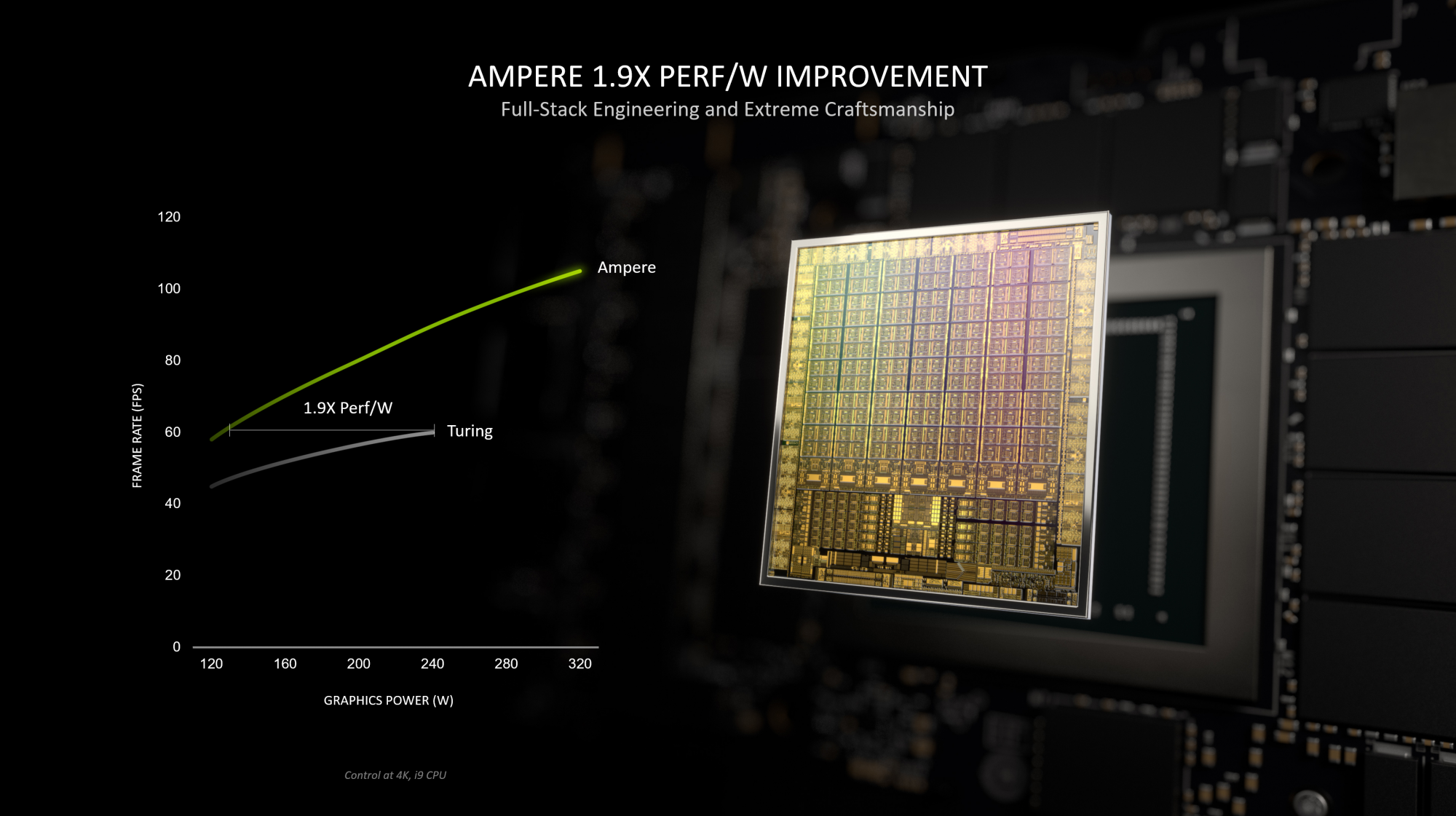

Put up the perf/Watt slide and I will explain to you why you fail physics...

the RTX 3090 because it may be the first card that truly has the power to realize this giant TV/monitor's potential: full 10-bit, 4:4:4, 4K/120 Hz G-SYNC + HDR at close to max settings.

So faster frames? Not really going to do anything extra for your TV, no?

Yes indeed, explain how a 220 W card that performs about the same as a 250 W card is 90% more efficient. Maybe it is at idle, where the 3070 shuts off its fan and the 2080 Ti doesn't.

As for physics, I took college physics in high school, in tenth grade, and got an A. I ran nuclear power plants and taught several courses at Naval Power School. Glad you know so much about me.

Oh yeah, show me a picture of yourself and I can explain to you how you fail at everything . . .just kidding, yeah for real.

First you make up claims like "serialized raytracing"...and now you don't understand a simple picture:

View attachment 275525

Very telling...

And in case you do not understand:

60 FPS on Turing would require ~240 W.

60 FPS on Ampere requires ~130 W.

It is basic physics/math.
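For anyone who wants to check the arithmetic being argued over here, a minimal Python sketch follows. It assumes perf/W is compared at equal performance, so the gain is simply the ratio of the two power figures minus one. The function name `efficiency_gain` is made up for illustration, and the wattages are the approximate numbers quoted in this thread, not measurements.

```python
def efficiency_gain(old_watts: float, new_watts: float) -> float:
    """Relative perf/W gain at equal performance: (old / new) - 1.

    Hypothetical helper for illustration; assumes both cards deliver
    the same frame rate at the given power draws.
    """
    if old_watts <= 0 or new_watts <= 0:
        raise ValueError("power figures must be positive")
    return old_watts / new_watts - 1.0


# Figures read off the slide as quoted in the thread (approximate).
slide_gain = efficiency_gain(240, 130)

# Rated board powers quoted in the thread, assuming roughly equal performance.
rated_gain = efficiency_gain(250, 220)

print(f"Slide, 60 FPS point: {slide_gain:.0%} better perf/W")
print(f"Rated board power:   {rated_gain:.0%} better perf/W")
```

On those figures the slide's 60 FPS point works out to roughly 85% better perf/W, while the rated board powers at about the same performance give roughly 14%. The two numbers seem to describe different operating points, which may be why the two sides are talking past each other.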

LG OLEDs go up to 4K 120 Hz G-SYNC with 10-bit color. So yes, the more horsepower the better.

Anyone else see that RTX on/off Cyberpunk 2077 comparison slider on the GeForce site? Shadowing and reflections both look noticeably better with raytracing. EDIT: Forgot how I got to it, so no link.

Yes, because first gen was just an overpriced tech demo.

Eh, it'll take time (years?) to be common, but major titles do seem to be starting to pick it up. Heck, even Call of Duty and World of Warcraft (very soon) have it. I know I'd turn it off for raiding if I played WoW, but that's not most of your time in game anyway.

Where were ya AMD?

I guess you're saying the 3070 and 2080 Ti do not follow that graph. At the same performance they are within roughly 10% of each other in power, if the rating is correct, or maybe Jensen is lying (it would not be his first time).

More importantly, that graph is totally worthless marketing BS that means zero. What settings, which part of the game, which GPU card, etc.?

Fingers crossed for a 3080 with more VRAM.

Custom RTX 3090, 3080, & 3070 Video Cards

Better than what was expected, I guess, judging by the reaction of the stock market right now:

View attachment 275317

Geez, Nvidia stock is on a tear. It's up another 6% in pre-market trading today. AMD is also up 8% and some sites are attributing it to the 5300 lol.

https://markets.businessinsider.com...rd-gaming-chipmaker-devices-2020-8-1029549474

RTX. IT’S ON. | The Ultimate Ray Tracing and AI

Nvidia's hype team is in full gear.