So I bought a Perc 5/i and have a RAID 5 array of five 750 GB Seagate drives on it. Some may be 7200.10s and some may be 7200.11s; I'm not sure.

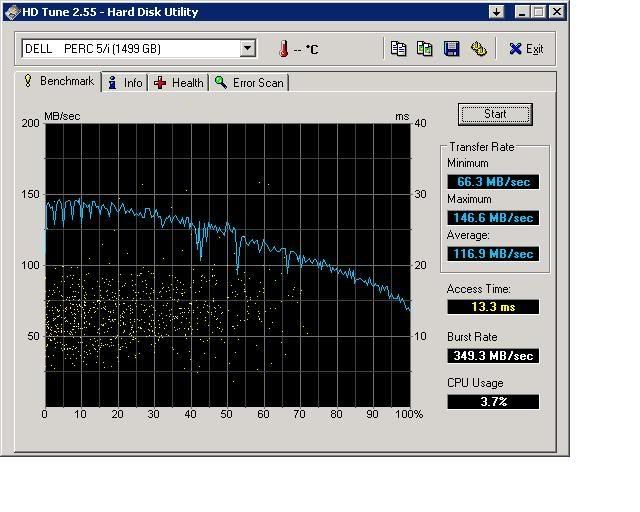

Anyway, according to HD Tach I only get an average read speed of about 113 MB/sec. Isn't this a bit low? I have write-back caching enabled and read-ahead enabled. There's no battery backup unit yet, so I forced write-back on in the RAID card BIOS; I'll get the battery soon.

The system specs are:

E6600 @ 2.40 GHz (will overclock AFTER setting up system)

IP35 Pro

4 GB RAM

Vista 64 Home Premium

4870 512 MB

Dell Perc 5/i w/ 256 MB RAM

Everything else is probably irrelevant. I thought I'd be getting upwards of 200 MB/sec on a setup like this. It doesn't really matter right now, since my network copies only run at about 70 MB/sec or less, but what can I do to get the most out of this array?
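A rough back-of-envelope check of the 200 MB/sec expectation can be sketched in Python. It uses a common rule of thumb: on RAID 5, one disk's worth of each stripe holds parity, so usable space and large sequential reads scale roughly with the number of data disks (n − 1). The per-drive read speed of 70 MB/sec passed in below is an assumption for illustration, not a measurement of these particular Seagates, and the `raid5_estimate` helper is hypothetical. The result is an idealized ceiling; the controller, stripe size, and the benchmark itself will pull real numbers down.

```python
def raid5_estimate(num_disks: int, disk_gb: float, disk_read_mb_s: float) -> tuple[float, float]:
    """Hypothetical helper: rough RAID 5 usable capacity and ideal sequential read.

    One disk's worth of capacity goes to (distributed) parity, and large
    sequential reads are assumed to stripe evenly across the data disks.
    """
    if num_disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    data_disks = num_disks - 1
    usable_gb = data_disks * disk_gb
    # Idealized ceiling only; real controllers and benchmarks land below this.
    ideal_read_mb_s = data_disks * disk_read_mb_s
    return usable_gb, ideal_read_mb_s


# Five 750 GB drives; 70 MB/sec per drive is an ASSUMED figure, not measured.
usable, ideal = raid5_estimate(5, 750, 70)
print(f"usable: {usable:.0f} GB, ideal sequential read: ~{ideal:.0f} MB/s")
# usable: 3000 GB, ideal sequential read: ~280 MB/s
```

Even with a fairly conservative per-drive guess, the idealized ceiling sits well above 113 MB/sec, so expecting more from five drives seems reasonable; how close the array can actually get depends on the real drive speeds and controller settings.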
