After our first RTX 2080 Ti Founders Edition went into Space Invaders mode, we did a bit of testing and then filed an RMA with NVIDIA, since we had purchased the card. Our replacement card arrived on Monday. Our "new" RTX 2080 Ti FE card is equipped with Samsung GDDR6 instead of Micron. Luckily, we bought two 2080 Ti FE cards and still have one here with Micron VRAM to test side by side with the new Samsung VRAM card.

GPU-Z side by side.

This VRAM change has, of course, been reported elsewhere, but we can finally confirm it firsthand. The $64K question, of course, is whether this points to VRAM being behind all the failing 2080 Ti FE cards. Space Invaders suggests that quite possibly it does, as it looks very much like a VRAM failure, but according to NVIDIA this is just a "Test Escape." We are still digging.

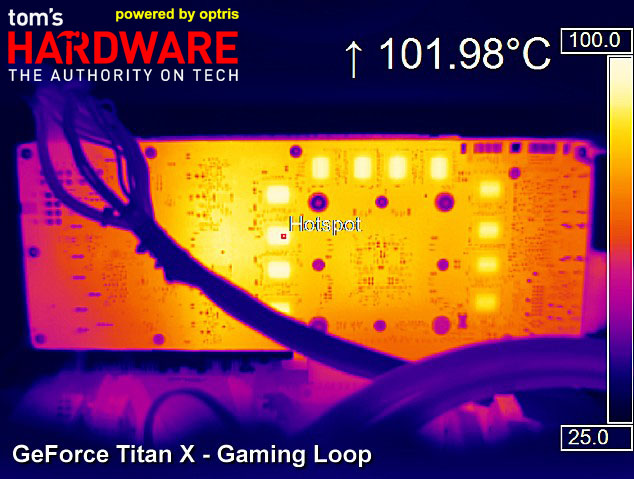

We are, however, sure that the Micron VRAM is running at a very toasty 86°C under a perfectly normal workload on an open test bench this evening.

FLIR Micron Memory Temp.

I am very curious whether there are any clearly distinguishable differences between the two VRAM types.
