Rumors are that Nvidia is going to replace the vanilla 4080 with the 4080 Super... so I could see them being aggressive and lowering the price to $1000 to go against the 7900 XTX... plus the 4080 is not selling at all... lowering it to $1000 would be a good move, especially since 4090 supply will be really tight for the next few months

plus if the 4080 Super is going to have the same 16GB VRAM, same 256-bit memory bus and is only 6-9% faster than the vanilla 4080, then there's no point having both the 4080 and 4080 Super on the market at the same time

This. They're just going to discontinue the 4070 Ti and 4080.

NVIDIA rumored to be preparing GeForce RTX 4080/4070 SUPER cards

- Thread starter Blade-Runner

- Start date

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,667

big disappointment the 4070 super is still 12GB.

Is it?

Using the latest TechPowerUp 1440p averages, with the 7800 XT as the 100% baseline, that could look like this:

4090...: 176% $1600-$2200

4080s..: 155% $1000-$1200

7900xtx: 146% $1000

4080...: 144% *unofficially gone*

4070Ts.: 140% $800-$900

7900xt.: 127% $800

4070ti.: 119% *unofficially gone*

4070s..: 114% $600-$700

7800xt.: 100% $500

4070...: 96% $550
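To compare the rumored stack on value, here is a rough sketch that turns the list above into relative performance per $100. It assumes the low end of each quoted price range and skips the two cards listed as gone. The `cards` table and the `perf_per_100_dollars` helper are made up for illustration and do not come from any benchmark tool.

```python
# Relative 1440p performance (7800 XT = 100) and an assumed price in USD.
# Where the post gives a range, the LOW end is used (an assumption).
cards = {
    "4090": (176, 1600),
    "4080 Super": (155, 1000),
    "7900 XTX": (146, 1000),
    "4070 Ti Super": (140, 800),
    "7900 XT": (127, 800),
    "4070 Super": (114, 600),
    "7800 XT": (100, 500),
    "4070": (96, 550),
}


def perf_per_100_dollars(rel_perf: float, price: float) -> float:
    """Relative performance points bought by each $100 spent."""
    return rel_perf / price * 100


# Sort from best value to worst value.
ranked = sorted(
    cards.items(),
    key=lambda item: perf_per_100_dollars(*item[1]),
    reverse=True,
)

for name, (perf, price) in ranked:
    print(f"{name:14s} {perf_per_100_dollars(perf, price):5.1f} points per $100")
```

On these assumed prices the 7800 XT and 4070 Super come out on top, and a 4070 Ti Super at $800 lands above the 7900 XT at the same price.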

So maybe they stop making new 4080 and 4070 Ti cards and cut the price of the 4070, which continues.

It could sound aggressive, but as they shift to TSMC's 3nm node for the pro line and the waiting list for L40-type cards dries up, AMD may get aggressive before the 2024 Q3 launch of RDNA 4, like they did with RDNA 2 street prices before the 7900 XTX launch.

And as for "sounding aggressive": $1000 for an xx80 card (the announced 4080 model costs no more to make, and maybe much less under a renewed VRAM pricing contract) is still good money for a 256-bit card with a die under 400mm² on a node that is old by now; the A14 Bionic will turn 4 years old in 2024.

$700 for a 2080 in 2018 was not called cheap by anyone, and that is about $850 in 2023 dollars.

If this happens, where does AMD put the 7900 XT with its 530mm² of die? The performance gap with the 7800 XT is vast, so it cannot be priced too close to it, but it cannot be only 15% cheaper than a 4070 Ti Super either... And if they go around $630-$650, the XTX cannot stay at $1000. That SKU-price strategy from Nvidia would make a lot of sense to hurt AMD's lineup, maybe forcing them to "retire" the 7900xt-GRE.

Also, once the AMD 8000 series launches in 2024, my guess is that the rumored reshuffle will not sound aggressive at all anymore; back to overpriced options real quick.

AMD has to live with the 7900 XT & 7900 XTX until the 2025 9xxx series.

Maybe an overclocked revision with higher memory speeds ??

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 954

At this point, this for sure isn't going to happen. Again, AD102 is driving too much money. So much so that 4090 cost is going up. And this is with the shutdown of all sales to China of AD102.

Can't happen due to the bus width of AD103. They're limited to the 8 RAM chips. And there isn't a way for them to double or halve really without bringing up RAM to the amount the 4090 has. I suppose they could bring it up to 24GB, but we haven't known nVidia to not be stingy for at least the last 3 generations (2000 series to present).

Yep, Nvidia has zero reason to ever give us lower priced AD102 based cards...at this point. 4090's sell, and at this point, are also going up in price. So the idea of a lower cost AD102 card doesn't make sense. This is why you aren't hearing news for a 4080 Ti and we instead keep hearing 4080 Super. I would not expect any of these refresh cards to be on AD102.

Correct, AD103 has a memory controller capable of 256-bit at most. They could double and do 32GB, 3090 style with front and back RAM, but I think we all know that won't happen. I forget what density we are up to now for G6X but perhaps there is a path to 24GB on 256-bit as well if each chip was 3GB, but I wouldn't count on that either. I'd say 16GB is the max you are going to see.
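The capacity options mentioned above follow from simple arithmetic, sketched here. It assumes each GDDR6X chip sits on a 32-bit slice of the bus, which is where the 8-chip limit on a 256-bit controller comes from, and that a "3090 style" front-and-back layout doubles the chip count. The helper name `vram_capacity_gb` is hypothetical, and the 3GB chip is the speculative case from the post, not a confirmed part.

```python
BITS_PER_CHIP = 32  # assumption: one memory chip per 32-bit channel


def vram_capacity_gb(bus_width_bits: int, chip_capacity_gb: int,
                     clamshell: bool = False) -> int:
    """Total VRAM for a given bus width and per-chip capacity.

    clamshell=True models the front-and-back layout, where two chips
    share each 32-bit channel and the chip count doubles.
    """
    chips = bus_width_bits // BITS_PER_CHIP
    if clamshell:
        chips *= 2
    return chips * chip_capacity_gb


print(vram_capacity_gb(256, 2))                  # 8 x 2GB chips
print(vram_capacity_gb(256, 2, clamshell=True))  # 16 x 2GB chips, front and back
print(vram_capacity_gb(256, 3))                  # 8 x 3GB chips (speculative)
print(vram_capacity_gb(192, 2))                  # 6 x 2GB chips, a 192-bit card
```

Under these assumptions a 256-bit card can only land on 16GB, 24GB (with 3GB chips) or 32GB (clamshell), which is why nothing in between shows up in the rumors.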

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

The only way I think 24GB would come to a 4080 variant is if that's the new variant nVidia wants to push to China in light of not being able to ship AD102. However, I still think that's fairly unlikely. Unlikely enough for me to just say it won't happen. That and I also think MLID has been very consistent on all of these leaks for the past two years or so.

If they could do a die shrink and also fix any timing issues they had with the chiplet design, theoretically that could open up 20-25% more performance. It was reported multiple times that the 7900XTX is a bugged card that they couldn't pull the full performance out of without graphical glitches related to chiplet timing. Theoretically, if they could fix that, it would have a massive performance uplift.

Whether AMD thinks it's worth trying to fix and pushing out another variant for is another question altogether. Certainly I think they think that trying to make highly competitive <$1000 cards is more important than trying to compete with the 4080/4090.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 954

I don't follow MLID, but yeah makes sense just looking at the market and where the deficiencies are and why you would refresh in the first place...and what you'd refresh. 4090 has never been a problem in the stack, so AD102 at $1600+ is something that buyers at that level have accepted for years now. 4080 at $1200 did not sit well and sits on shelves, so makes sense to refresh it with full die and faster memory for a 5-10% performance increase with a $200 price cut to $999 to compete with the 7900XTX at that price point. 4070 Ti Super on AD103 that is within ~10% of a regular 4080 replacing the 4070 Ti, while also moving from 12 to 16GB for that price point at $799 makes sense and I think will end up the big winner of this refresh. 4070 Super on AD104 within 5-10% of a 4070 Ti, again, fixes that price segment. More or less those specific refreshes fix everything wrong with those current cards. Personally I would like to see even more aggressive pricing, but hey that's just me.

It depends on how much stock they have already managed to get in, but the AD103 is way over the permitted limit and under sanction as well, I think. It is only on the exception list as of now, like the 4090 was for a while; if they boost it too much for AI workflows, they open the door for it to be added overnight.

AD103 is not over the permitted limit and falls well below it. The 103 has gimped FP64 double performance, and falls well short of the cutoff.

The previous cutoff was based on memory throughput and training speeds, which is why Nvidia was able to take the A100, cut back the memory bandwidth and NVLink lanes, call it the A800 series, and fall under the previous limit. But then the Chinese developed a new LLM that found a way around that by subdividing FP64 registers, and they brought their speeds back up. Rumours suggest Nvidia had a hand in helping develop that LLM.

For the new one, they are just taking FP64 double GFLOPS and multiplying by 4. If that goes over 4800 then it’s banned. A 100% complete 103 under that criterion is going to struggle to beat 3250.

The 4090 was never whitelisted; the government simply changed the criteria for the cutoff. The rumours of the 4090 being whitelisted arose because it was left off the list Nvidia presented to investors during an earnings call, which was either wishful thinking or a mistake.

Are you sure? I do not think the executive order specifies FP64 at all; they can use FP8 numbers if they want (a lot of training is done at 16 bits).

https://www.ft.com/content/be680102-5543-4867-9996-6fc071cb9212

The revamped export controls will prohibit the sale to Chinese groups of data centre chips that are capable of operating at speeds of 300 teraflops, meaning they can calculate 300tn operations per second, and above.

Sales of chips with speeds of 150 to 300 teraflops will be barred if they have a performance density of 370 gigaflops (billion calculations) per square millimetre or more.

The 4080 according to Nvidia:

390 TFLOPS of FP8 tensor performance according to Nvidia, the die is under 400mm², https://images.nvidia.com/aem-dam/S...idia-ada-gpu-architecture-whitepaper-v2.1.pdf

390,000 / 400 = 975 gigaflops per mm², way above the 370 limit.

It was not written so that the 4090 just barely makes the cut; that card is almost 6 times over the limit, according to this:

https://www.theregister.com/2023/10/19/china_biden_ai/

OK yeah, I was only getting half of it or I hallucinated it; the entire 4000 series with the exception of the 4050 would fail to meet the criteria specified by the government.

"Integrated circuits having one or more digital processing units have either of the following: a.1. a 'total processing performance' of 4,800 or more, or a.2. a 'total processing performance' of 1,600 or more and a 'performance density' of 5.92 or more."

Calculating the total processing performance (TPP) score for any given GPU or accelerator is a fairly straightforward project. Double the max number of dense tera-operations (floating point or integer) a second and multiply by the bit length of the operation. If there are multiple performance metrics advertised for various precisions (INT4, FP8, FP16, and FP32, for example), the highest TPP score is used.

Using Nvidia's L40S as an example, the equation would look a bit like this:

2 x 733 teraFLOPS x 8 bits = a TPP of 11,728

11,728 TPP / 609 mm² = a performance density of 19.25

https://www.theregister.com/2023/10/19/china_biden_ai/

Even the 4060 fails the full metric set by violating the total processing performance coming in at 1936 TPP and having a performance density of 12.1
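For anyone who wants to run other cards through the same test, here is a minimal sketch of the rule as quoted above: TPP is 2 x dense tera-operations x bit length, performance density is TPP divided by die area, and a chip is caught if TPP is 4,800 or more, or if TPP is 1,600 or more with a density of 5.92 or more. The function names are made up for illustration, and the only inputs used are the L40S and 4060 figures already given in this post.

```python
def total_processing_performance(dense_tera_ops: float, bit_length: int) -> float:
    """TPP = 2 x dense tera-operations per second x bit length of the operation."""
    return 2 * dense_tera_ops * bit_length


def performance_density(tpp: float, die_area_mm2: float) -> float:
    """Performance density = TPP divided by die area in mm^2."""
    return tpp / die_area_mm2


def is_restricted(tpp: float, density: float) -> bool:
    """Apply the two quoted thresholds (a.1 and a.2)."""
    return tpp >= 4800 or (tpp >= 1600 and density >= 5.92)


# L40S example from the article: 733 dense teraFLOPS at 8 bits, 609 mm^2 die.
l40s_tpp = total_processing_performance(733, 8)
l40s_pd = performance_density(l40s_tpp, 609)
print(f"L40S: TPP {l40s_tpp}, density {l40s_pd:.2f}, "
      f"restricted: {is_restricted(l40s_tpp, l40s_pd)}")

# 4060 figures as stated in the post: TPP 1936, density 12.1.
print(f"4060: restricted: {is_restricted(1936, 12.1)}")
```

Both come out as restricted on the raw numbers, so this only checks the metric itself; the consumer-product exception is a separate question.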

Here's that same article you linked, or at least a really close one to it but not paywalled.

https://mexicobusiness.news/aerospace/news/us-impose-ai-chip-export-restrictions-china

The keynote in there is the last line of the ban.

"The new rules follow a "performance density" measure instead of chip speed to prevent workarounds. They strictly prohibit the sale of data center chips with speeds exceeding 300 teraflops to Chinese buyers. Chips with speeds between 150 and 300 teraflops and a performance density of 370 gigaflops or more per square millimeter, require government notification for Chinese buyers. However, chips used in consumer products like smartphones and gaming are exceptions and are not subject to these restrictions."

AD102 is used in the L40 series, and as such the AD102 is the part that is banned in China; the 4090 is a byproduct of that, as the ban is on the chip itself and not the product using it. But as there are no data center parts using the AD103 or below, they don't fall under the bans even though they don't pass the TPP test laid out.

The article I found was reporting the ban based on TOPS, not TPP, so it must have been older and I muddled up the date, or it was just wrong, but it was going based on FP64 sparse * 4.

So now I am just left wondering what data center parts Nvidia can sell to China, or what AMD can sell for that matter, because AMD's existing MI250 series was banned and now the MI300s don't pass either. Just about everybody is running out of parts they can sell to China, and it's not exactly a small market.

This is the whole point.

I mean I don't disagree, but I suspect China has something they can retaliate with. I suspect they ban graphite exports, which would explain the sudden uptick in geologists in Canada getting survey requests for deposits with a specific crystalline structure, as they are needed for the anode in lithium-ion batteries.

And there is a bit of a dance, i.e. they use the EO to add SKUs to the banned list; all those still exportable are just not banned yet, like the 4090, which was not put on the list at first. The minute it became public that it was a popular buy for machine learning despite being a gamer card, it was added.

Were they to modify AD103 to make it attractive for ML workloads (a large VRAM quantity, for example), and if it works and word comes out that sales in China are suspicious for $1000+ gaming cards, it will get added to the list, or at least Nvidia could very well fear that will be the case. The talk of trying to kick the 7900 GRE out makes it sound like the Chinese market will be targeted by those Super cards. And I am not sure, gimping the Tensor cores could be tempting; leaving the VRAM alone so as not to attract extra attention (and to save money and not create a strange case of a 32GB 4080 vs a 24GB 4090, or some talk of 75% bus use because of an 8GB + 16GB setup) would be my guess.

An RTX 4080 in 8-bit inference is already a bit of a monster relative to the previous A100.

And in 16-bit training it is not that far from an L40 (when the task fits) and more than half an A100, at least with January drivers-firmware:

https://timdettmers.com/2023/01/30/which-gpu-for-deep-learning/

We see that there is a gigantic gap in 8-bit performance of H100 GPUs and old cards that are optimized for 16-bit performance.

For this data, I did not model 8-bit compute for older GPUs. I did so, because 8-bit Inference and training are much more effective on Ada/Hopper GPUs because of the 8-bit Float data type and Tensor Memory Accelerator (TMA) which saves the overhead of computing read/write indices which is particularly helpful for 8-bit matrix multiplication.

at least 24 GB for work with transformers

And they could just go and update something because, I mean, they will need to revamp the banned list every 6 months or so.

This is just an all-around feels bad.

The intent of these bans is to prevent the Chinese military from getting their hands on the hardware, to stop them from doing various simulations and other BS reasons. Like that is going to change anything? Not terribly hard for them to buy the hardware in another country and have it smuggled in. They post all the time about finding and blocking smugglers from bringing in stuff for the black market, but I am sure some brown paper boxes will show up one day, and when they go to inspect them some supervisor will just say to the guy, "Bùshì zhège, nǐ jīntiān jiù qù nàlǐ!" (roughly, "Not this one, you go over there today!") while pointing to the back of the warehouse, while those boxes get loaded onto an unmarked truck and are never seen again.

Yep. And to be sure, China will still get all these banned products, it's just going to be painful at 2x-3x+ the previous price, so the middleman market is also rejoicing. But overall it is a change in posture, and it was definitely overdue.

It started to become apparent to me about 6 years ago that GPUs were not just "the new CPUs", but were becoming a global resource like food, water, gold, rare earth materials that we'd eventually be fighting over.

Blade-Runner

Supreme [H]ardness

- Joined

- Feb 25, 2013

- Messages

- 4,386

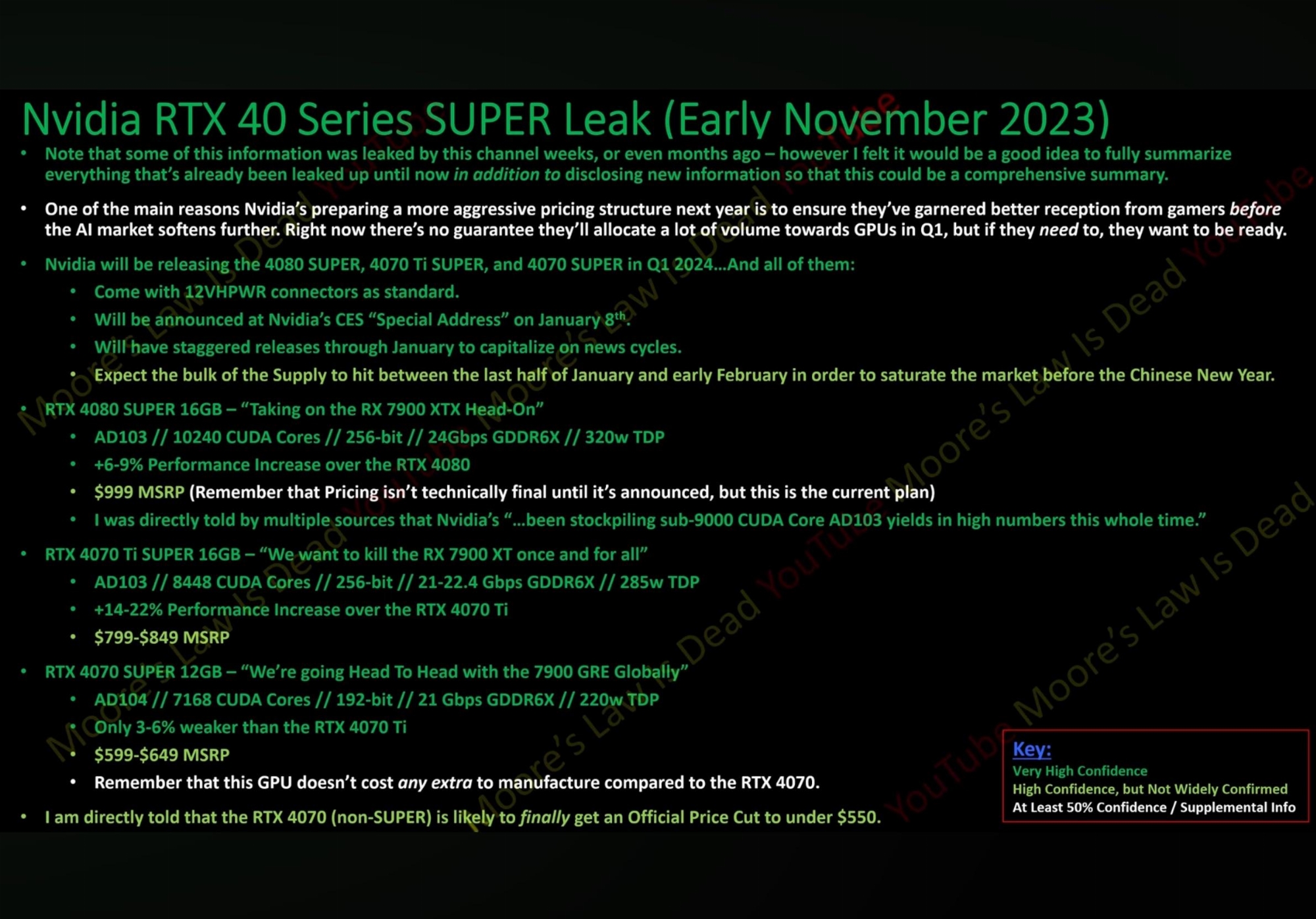

new video from that Moore's Law is Dead YouTuber...I have no idea if he's reliable but his latest video has a lot of details...seems like the 4080 Super will be around 40% of the 4090's performance (and cost 20% less than the vanilla 4080's MSRP) and the 4070 Ti Super will be closer to the original 4080:

4080 Super 16GB 256-bit

6-9% performance improvement over 4080

$999

4070 Ti Super 16GB 256-bit

14-22% performance improvement over 4070 Ti

$799-$849

4070 Super 12GB 192-bit

$599-$649

Still too expensive in my view, especially without uplifts in ram capacity.

Flogger23m

[H]F Junkie

- Joined

- Jun 19, 2009

- Messages

- 14,421

I agree. 4070 Super should have 16GB, 4080 more than 16GB. However I half expected that.

Blade-Runner

Supreme [H]ardness

- Joined

- Feb 25, 2013

- Messages

- 4,386

If AMD had any brains and wanted to take a shit in Nvidia's morning coffee they should drop the price of the 7900XTX now to under $849.

Hmm a 4070 Super that is 3-6% slower than a $800 4070ti a year later. Turing all over again, just more expensive. All with the new power connectors too. Hopefully they have fixed their cable quality/connector problems.

Yeah the connector pins got a slight redesign and they added a clip in there somewhere in the design revision.

https://www.tweaktown.com/news/9219...r-geforce-rtx-40-series-new-design/index.html

Leak from a Korean retailer confirms 2 of the Super cards:

https://twitter.com/harukaze5719/status/1722959682422353938?s=20

One Korean seller confirmed RTX 40 Super. But he only confirmed 4070 Super and 4080 Super.

Just for naming, didn't give any details

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,563

That would probably only benefit us, not AMD.

AMD has been very clear to their investors that they intend to have equal or better margins than Nvidia. Lowering prices would be counter to those promises, and breaking promises to investors, verbal or otherwise, usually gets you sued and fired.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,563

If all they said was they "intend" to have equal or better margins than nvidia then there will be no legal accountability for that.

so I would need to buy a new PSU to pair with the new GPU?

No, it’s backwards compatible.

But with modular PSUs you can just buy the new cable.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

Investors understand you cannot divide by zero as well. If nVidia product is better than their product at every price point and AMD sells zero cards to “protect their margins” then that’s also how you get fired.

One way or another in the silicon market, pricing will shift. Whether it’s before nVidia makes a move or after. And it’s up to AMD whether they want the good will before the fact or not.

At this stage I’m pretty sure that AMD has found their market: they pretty solidly capitalize on the Anything but Nvidia crowd. Their only trouble there is if Intel manages to come out swinging with Battlemage at CES.

Intel has been making some solid progress on the driver side of things. AMD is going to need their 7x50 refresh to stick the landing or risk Intel nipping at their heels a little harder than they hoped.

Tangent:

Anybody heard anything on the MI300’s?

AMD was talking that up hard as their fastest SKU to reach $1B in sales but then the new China sanctions landed and I’ve heard nothing but crickets on it since.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

I don't think that there are many of that type of customer.

I do think that there is a significant GPU customer base that is price sensitive and they are okay with alternative brands if it gets them the price and performance they want. It is quite literally just about voting with your wallet, and like actual voting there is a huge amount of fence-sitters that just buy what they think makes sense for them at the time.

If AMD's entire user base was "anyone but nVidia", then they could simply price their cards any-which-way and their customers would buy it - because what other alternative is there? Intel parts for sure are not mature enough to best a 7800XT let alone a 7900XTX. Anyway, the point is AMD's target demo for the time being I'd say is more about the bang-for-the-buck/performance-per-dollar buyer. Which is obviously why AMD cards are priced where they are relative to their nVidia counterparts.

If the performance-per-dollar gets too close, then those same fence sitters may buy the nVidia counterpart because of software optimizations. So AMD has to convincingly price their product stack below nVidia's to show their cards in a favorable light. It's one of the reasons why the 7800 XT is a sales darling.

I'm merely saying (and as are others): that AMD should then continue doing that, but also strategically beat nVidia to the punch as well as deflate nVidia's next launch by undercutting them ahead of time. They could even do so with "unofficial" price drops for the Holiday season, and when nVidia refreshed parts inevitably come at CES, simply make the prices "official"; if AMD are for some reason uncertain of this very certain future.

I don't really think so. I do think Intel can and will be a contender in the space, but I think I like the way MLID put it: when Intel released cards it showed nit-picky reviewers what truly poor drivers looked like. And although AMD is securely #2, their drivers are significantly more mature than anything Intel can offer. So much so that there are tons of games that literally were not playable at all on Intel cards.

I would sooner suggest buying a Mac for gaming than I would an Intel card for gaming. Not that Intel can be ignored forever, but for the time being just offering that performance per dollar is enough to keep Intel from making any real advancements in the market.

Beyond my level of understanding. I'd probably ask you that question lol.

The drivers Intel launches alongside Battlemage might surprise a fair number of people; D3D9on12 and the D3D12 translation layers, to which Intel is a heavy contributor, have seen some big updates of late.

Intel does not have any hardware support for DX9 and 10, nor does it do any of the OpenGL variants natively. It is all done via translation layers, and that is the primary source of Intel's swingy driver results. Those libraries are coming along, and with each passing year the need for them decreases little by little.

DX11 support this late in the game is UGLY. The things some developers did there to make things work are both astounding and frightening, and I can 100% understand why Intel would struggle to get that working for everybody coming in this late.

Intel has also put a fair few more resources into their driver collaboration team, so they have some boots on the ground working with the big developers on driver optimization ahead of time and can get their day 1 patches to a place that isn't utter crap.

Most of the new games launching with UE5 as their engine will certainly help them there, as Intel is working very closely with Epic on getting their stuff cooked up right for them.

And yeah, the "Anything but Nvidia" thing is a gross oversimplification. There can't be nearly that many of them, and the "I'm not buying another Nvidia GPU" crowd has to be at least as big as the "I'm not buying another AMD GPU" crowd, so that balances out in the wash.

But if Intel can deliver something that goes toe to toe with or edges out the RX 7600 while coming in under its price tag, then Intel can gain more ground. They need to work on volume sales to pay off the stupid amounts of money they are spending on driver development; playing catchup isn't cheap.

Whiffle Boy

Limp Gawd

- Joined

- Feb 23, 2001

- Messages

- 411

Let's face it, the fella you are replying to is quoting a YouTuber who has gone on the record numerous times over his career spouting outright lies... (while using attempts at charismatic charm to deflect and point these same offences out on other channels...)

The drivers Intel launches alongside Battlemage might surprise a fair number; D3D9on12 and the D3D12 translation layers, to which Intel is a heavy contributor, have had some big updates of late.

Intel is bankrupt in MLiD's eyes; he still has at minimum (at last count from my sources) 5 videos where he firmly states the doom of Intel. The doom. I really don't think he gets out much. Go watch a video of a tour of Intel at least... see the scope. They could literally sell nothing for 15 years and not go under (this is easily AS silly a claim as the ones he makes, which is why I made it).

What am I going on about? Everyone is so quick to vilify Intel, praise AMD with the hero crown, and use NVIDIA for convenience. All three of these behaviors do nothing to bring tech forward.

Battlemage isn't cancelled, as much as MLiD will huff and puff on the topic (much like the 580, which was 100% confirmed "cancelled" by his best sources). It may be subpar at release, hell it may be another joke of a release, months late and dollars short. Let it happen before judgement.

This is the distinction that needs to be made these days in the YouTube rumormongering... these guys are inventing controversy to keep themselves employed in between launches. Ampere, Ada, RDNA 1, 2, 3: all of them were completely and successfully leaked 6+ months ahead, and if you know where to look, you have the specs, pricing, and performance estimates. It's not rocket science.

The main reason the 4090 is such a "killer" this gen is because of how weak past gens have been, and just how much WEAKER the lower stack is. Everyone is still so quick to forgive for the:

4080 16GB (4060TI)

4080 12GB (4060)

4070TI (4050TI)

Real card names in brackets for ease of reference to previous gens. (Sorry to 4080 owners, would you like me to drive to the local shops and show you the dust lines where the 4080s have been sitting since release? None have sold there. OEMs and system pack-ins are the only ones moving these pieces of trash.) These would have been the metrics for continued performance had collusion and corporate price manipulation not become commonplace now.

I like video cards, they are fun, but golly is it ever a non-technical space of tech now. I giggle when I see the odd question asked (GREAT questions... from keen minds...) about how it is that these companies are SO close in performance and price... gen after gen, when the tech is so "different" between the two.

Do we really need to paint that picture? I drank the NVIDIA koolaid like a good simp. I have a 3090, but I also worked my booty off for it. Value is very much in the eye of the beholder, especially in inflation-laden times.

This fun rant has been brought to you by the letter H.

I never said any of that... Are you responding to the wrong post??

Whiffle Boy

Limp Gawd

- Joined

- Feb 23, 2001

- Messages

- 411

Nah, I just wasn't clear. I wasn't directing anything at you; it was a general reply to the generic green-vs-red defense that was making me nauseous reading the last page of the thread.

have a nice day

This sounds strange to me in many ways.

Ampere was not on the weak side: at 4K the 3080 was almost 70% stronger than the 2080.

Lovelace was not on the weak side either, with the 4080 about 50% stronger than the 3080 (how can we talk about 4060 Ti performance when we never saw a 60 Ti crush the previous xx80 like that?). At the least, it is only below the 4070 Ti that the stack started to get weak; price was the only issue with the 4080. It was so strong that the competing 7900 XTX had trouble cleanly beating it.

Whiffle Boy

Limp Gawd

- Joined

- Feb 23, 2001

- Messages

- 411

Oh, the $3200 ASUS Matrix or whatever it's called doesn't do it for ya?

I wish there was a GeForce RTX 4090 Super.
