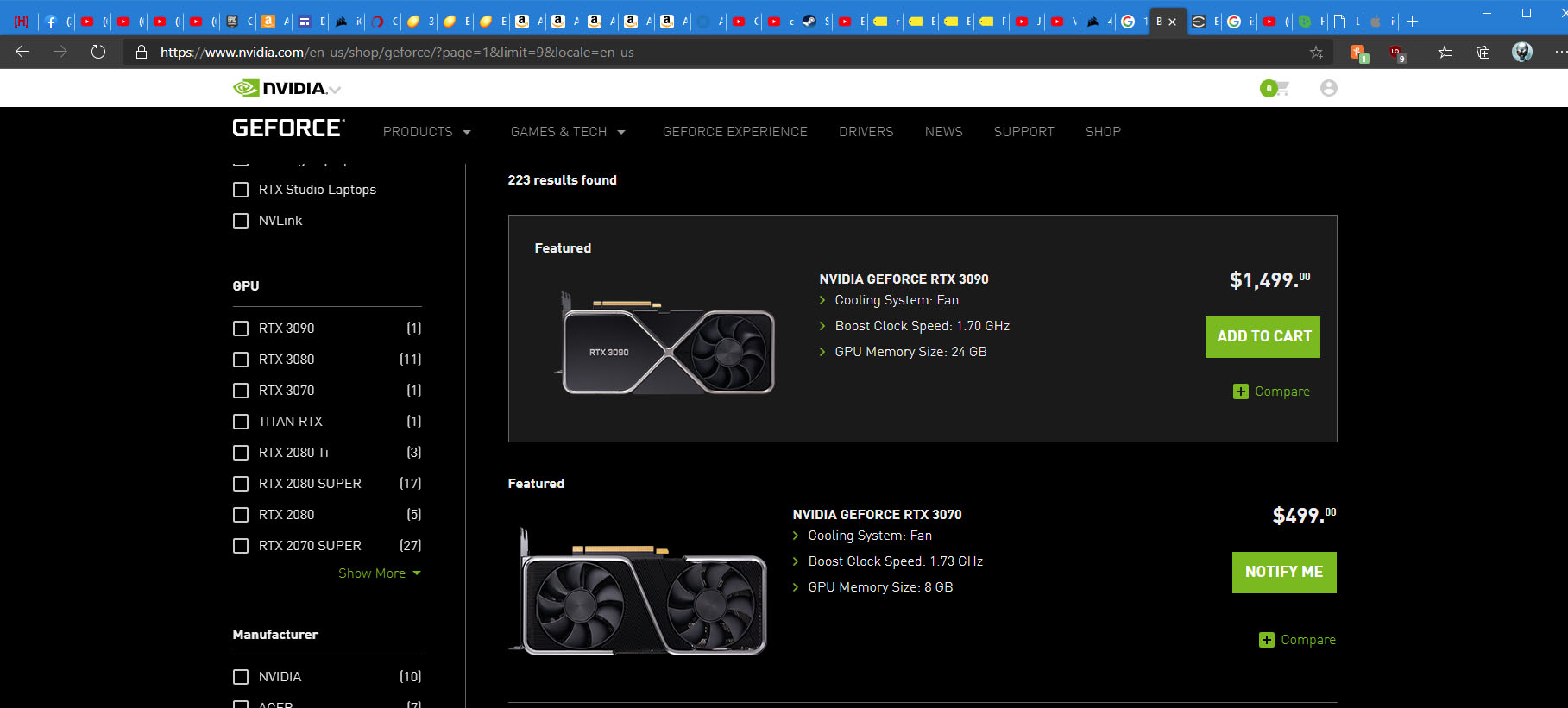

Let's talk about performance, not availability. I've been thinking of this card as a Titan replacement. However, the Titans have always been produced by Nvidia itself, not by AIB partners, and it looks like all the regular AIBs are on board for the 3090. So do we think it's really a Titan? No other generation has given the flagship double the VRAM of the second-best card, except the Titans, correct?

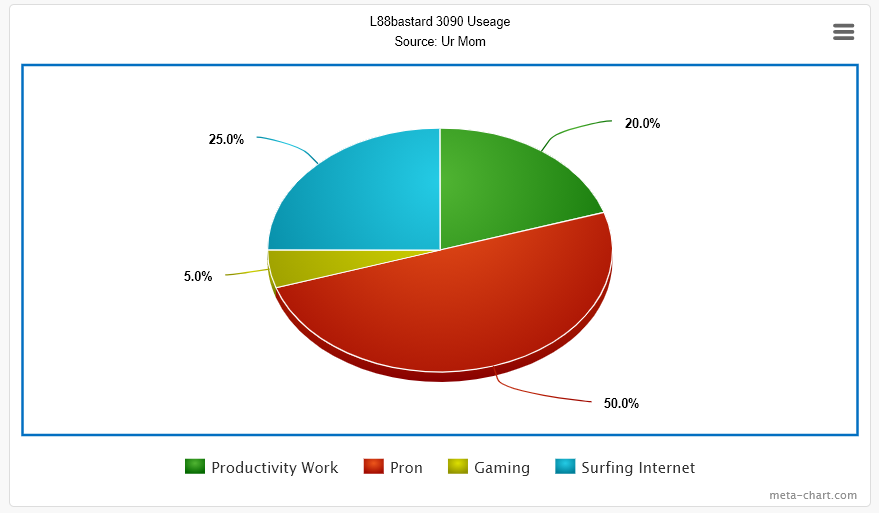

For those considering one, what's your use case?