Brent_Justice

Moderator

- Joined

- Apr 17, 2000

- Messages

- 17,755

Note: I am not saying they are, but this video brings up the question.

You knew this question would come up; it always does. This video is interesting, if a bit incomplete.

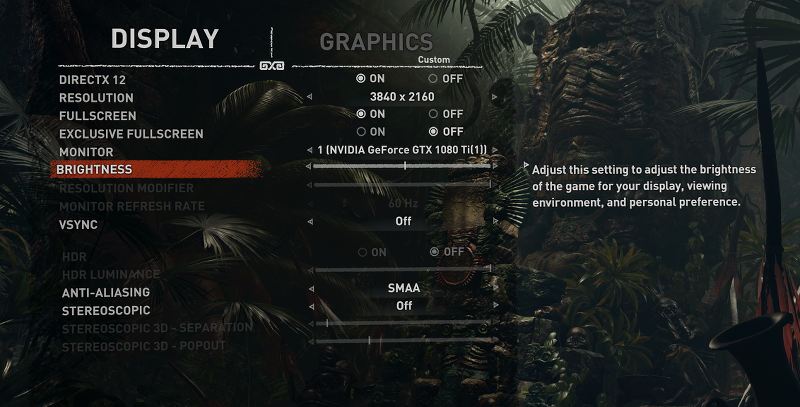

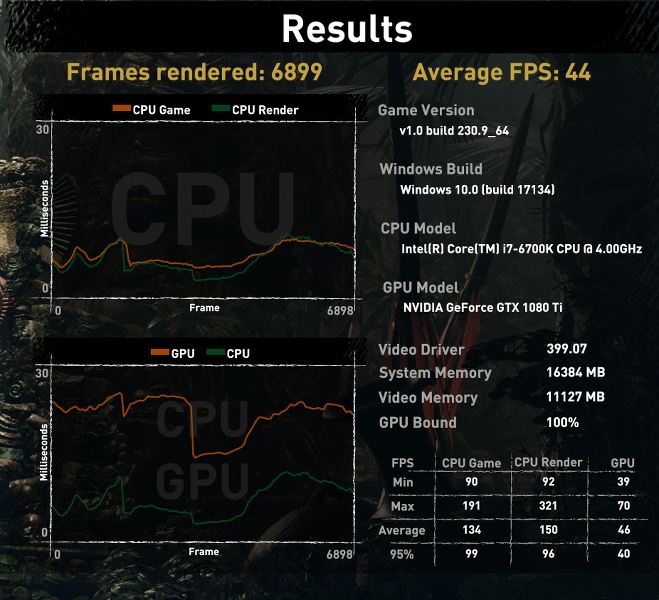

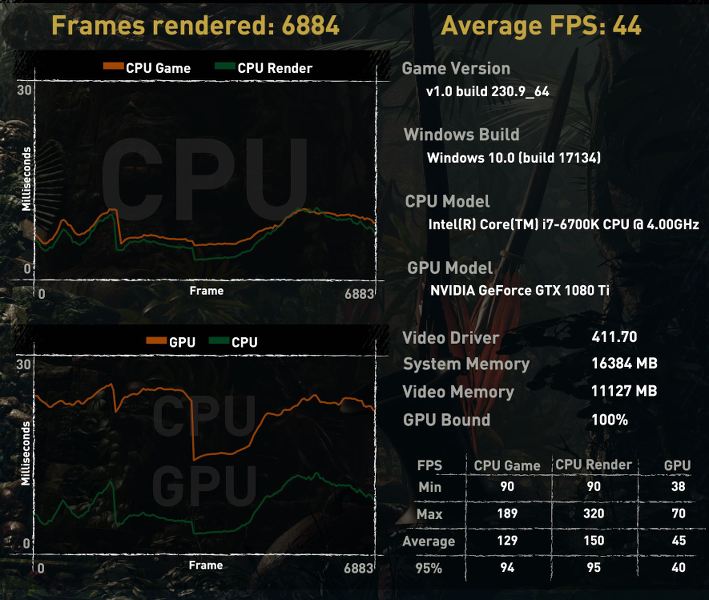

I will have to do my own testing. We are starting our full 2080 Ti review now. I was planning to use the newest driver for 10 series testing as well, to keep the driver version the same, so when I get to it I'll do a little comparison for my own sake. I'm really interested in the Shadow of the Tomb Raider outlier.

