cjcox

2[H]4U

- Joined

- Jun 7, 2004

- Messages

- 2,946

AMD is about to deliver. It's an 800W board with 12 fans, requiring a triple-length supporting case called omgATX (new standard).

(can't wait!)

Unlikely that's what it means. It's all hybrid raytracing right now, both in the sense that in-game RT effects are used alongside conventional rendering and in the sense that, in the hardware, DXR is partially accelerated in dedicated HW and partially computed on the shader cores.

A "non-hybrid" DXR approach, where all rendering is done via pathtracing and all parts of the pathtracing are accelerated by dedicated hardware, isn't something we're likely to see soon, if ever.

I may be alone here, but I care extremely little about Raytracing. And I don't care so much about who has "the fastest" card - I care about who has the fastest card that fits my budget.

I care about HDMI 2.1 and DP 2.0 much more than I do Raytracing.

Sorry I just don't play any games that support RT right now.

Quake II is the most interesting of the bunch that are currently out. Cyberpunk 2077 could be neat, but I'm not buying a video card today for a game that hasn't been released yet.

Quake II RTX is a ball, easily the most fun in a game I had this year.

I think Quake 2 RTX uses pathtracing already.

The original DIY "Quake 2 Vulkan Pathtrace (Q2VKPT)" engine that Nvidia's release is based on was indeed 100% pathtraced (which is why some things like particle effects were broken), but Nvidia's version on Steam etc. does use a hybrid approach, because it turns out that it's actually easier and looks better to do some effects with raster shaders. The basic global illumination/ambient occlusion/indirect lighting is all indeed pathtraced, because PT excels at that and offers much more realism than traditional raster GI and SSAO/HBAO, but I think it's going to be a long time until "soft" effects like particles, fog, bloom and whatnot are fully RT. Raster shader effects may be only so-so for basic lighting, but they're fantastic for "photoshopping" the rendered image to make it prettier.

https://www.tomshardware.com/news/nvidia-ampere-gpu-graphics-card-samsung,39787.html

No leading-edge company in this era is so stupid as to put all of their chips in one fab, especially after what happened to Intel.

Then they are designing more than one version of Ampere... TSMC and Samsung are not using the same 7nm tech. There are subtle differences in their manufacturing processes, such as pitch and cell sizes. It is basically impossible to take the exact same design to both fabs. Of course they can have Ampere-A and Ampere-B, but I would think having them both tape out and scale up at the same time would be harder. Yes, it's nice to have options... but going with two fabs increases costs, as both have unique setups. Unless one is fabbing the high-end part and one is fabbing the mainstream part. Guess we'll see in a year or so.

Didn't Apple do precisely this with recent phone SOCs?

And it's not the ray tracing that makes it fun, it's that it is a good old game you can get right on board with.

If you honestly think it is the RT that makes it cool, then maybe I have misunderstood something about that update and maybe they added a little more than fancy rays.

Remember, AMD does not need to beat the 2080ti, they need to beat the 3080ti.

Whatever it is, AMD's "Nvidia killer" will still be 7nm. Navi at 7nm can barely touch Nvidia's 12nm. So do the math on the likely potential of Nvidia's 7nm.

Current Navi parts are not maxed out builds like the 2080Ti. So far Navi has looked promising and whatever Nvidia gets with 7nm is a bit of a wild card.

Well, the 5700 XT is rated at 225W and the 2080 Ti at 250W. They will run into TDP limits if the 5700 XT is anything to go by.

https://www.tomshardware.com/reviews/nvidia-geforce-rtx-2080-ti-founders-edition,5805-10.html

It's nowhere near 250W. It goes over Nvidia's official number.

The RX 590 was 225W, and the Vega 64 was 50%+ faster at 300W. So it just doesn't work like that. They can easily come out with a 300W card that is faster than the 2080 Ti. A bigger chip doesn't mean it's going to be double the power; that's just not how it works.
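As a rough sanity check of that RX 590 vs. Vega 64 comparison, here is a minimal sketch of the perf-per-watt arithmetic. It uses only the figures quoted in the post (treated as forum numbers, not measurements), takes "50%+" as exactly 1.5x, and the function name is made up for illustration.

```python
# Figures as quoted in the post above; forum numbers, not measurements.
rx590_power_w = 225.0
vega64_power_w = 300.0
vega64_speedup = 1.5  # assumption: "50%+ faster" taken as exactly 1.5x the RX 590


def relative_perf_per_watt(speedup, power_w, baseline_power_w):
    """Perf/W of a card relative to a baseline card whose performance is 1.0."""
    return speedup / (power_w / baseline_power_w)


power_ratio = vega64_power_w / rx590_power_w
efficiency_ratio = relative_perf_per_watt(
    vega64_speedup, vega64_power_w, rx590_power_w
)

print(f"power ratio:  {power_ratio:.3f}x")
print(f"perf/W ratio: {efficiency_ratio:.3f}x")
```

On those numbers, about a third more power buys half again the performance, so the bigger card comes out ahead per watt, which is the point being made: board power does not scale one-to-one with chip size.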

Didn't AMD call the Fury X the 4K killer, only for the hyped-up marketing to kill it when 4 GB of HBM was not enough?

I wouldn't hold my breath until real testing proves otherwise. Plus, this card will not be affordable to the masses anyway.

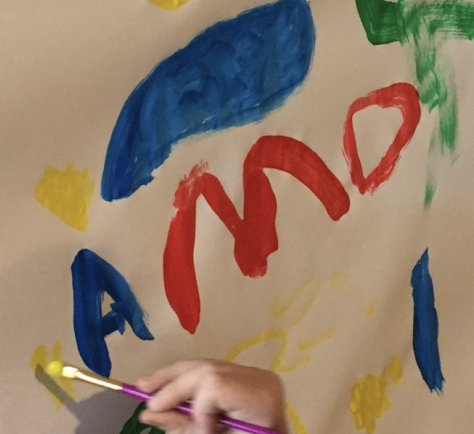

Okay, in a strange Koinkidink, my little one was painting and I walked by to spot this:

View attachment 179804

Tiniest fan ever? I have no idea where this came from, and he doesn't know either. Thought it looked neat, he said.

Raytracing is visible here.

Well, it will be the one in the most GPUs and supported by the greater amount of software development by a wide margin. Nvidia's RT was a tactical move that is only useful on its top tiers of product, greatly limiting the user base. One wild card would be the ray-tracing performance. If AMD's method is vastly faster than Nvidia's, that could be a big deal.

In the past I wouldn't have taken this seriously, but with the huge success of their recent CPU line I'm hoping it bleeds over to their GPU division.

And let's not forget that Big Navi's real competitor will be Ampere.

I don't know if AMD can do better than 1st gen RTX on their first try, and then there's Intel. So who knows.

Hopefully Nvidia learned a lot from Turing and Ampere should be a lot better.

2020 sounds like a great year for gaming, no matter who comes out on top.

"Nvidia killer" is the RDNA2 chip that takes the top gaming crown from Nvidia in 2020, while "Big Navi" is the chip that is bigger than Navi10 and is being released within the next few months at above 2080 Super performance.

So:

Radeon 5800 Series = big navi ($499)

Radeon 5900 Series = nvidia killer ($799)

So many Nvidia trolls here are being jebaited right now... because most of you will be buying AMD GPUs within the next year. Ampere won't be out for 13 months, and that is just about the time that AMD will release its 4th 7nm GPU (before Nvidia releases its first).

In all honesty, Nvidia really doesn't factor in to anything for gamers... they have nothing to compete with RDNA. RDNA was a surprise to the gaming industry, as Dr. Su kept the new GPU architecture well hidden. Now Nvidia is following (as in behind) AMD architecturally and has no answer for 13 months.

At the same time, AMD will just keep pumping out cheap 7nm GPUs while Jensen tries to figure out how to make a 225mm^2 chip that can compete with mainstream Navi10, because a shrunken-down Turing is still much, much bigger than RDNA.

In fact, Nvidia killed themselves by thinking the 2080 Super was the best gaming GPU a company can provide to gamers... at $800.

The 2070 has 5% more transistors and is 2% slower than the 5700 XT, IIRC. The difference in rasterized performance per transistor isn't great. AMD needs RDNA2 (and a decent improvement) when Nvidia launches 7nm to keep parity.
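To put a number on that gap, here is a small sketch of the performance-per-transistor arithmetic. It uses only the "IIRC" figures from the post (5% more transistors, 2% slower), normalizes the 5700 XT to 1.0, and the function name is hypothetical.

```python
# Relative figures as recalled in the post above, with the 5700 XT normalized to 1.0.
# They are the poster's "IIRC" numbers, not verified specs or benchmarks.
rtx2070_transistors = 1.05  # "5% more transistors"
rtx2070_performance = 0.98  # "2% slower"


def perf_per_transistor(performance, transistors):
    """Rasterized performance delivered per unit of transistor budget."""
    return performance / transistors


ratio_2070 = perf_per_transistor(rtx2070_performance, rtx2070_transistors)
advantage_5700xt = 1.0 / ratio_2070  # 5700 XT relative to the 2070

print(f"2070 perf/transistor vs 5700 XT: {ratio_2070:.3f}")
print(f"5700 XT advantage: {(advantage_5700xt - 1.0) * 100:.1f}%")
```

On these figures the 5700 XT gets roughly 7% more rasterized performance per transistor, a modest lead rather than a large one.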

I am really hoping for some kind of AMD chiplet design after Ryzen's success, and some monster GPUs. It has to be on their minds. I realize it's harder with GPUs, though.
