erek ([H]F Junkie · Joined Dec 19, 2005 · Messages: 10,875)

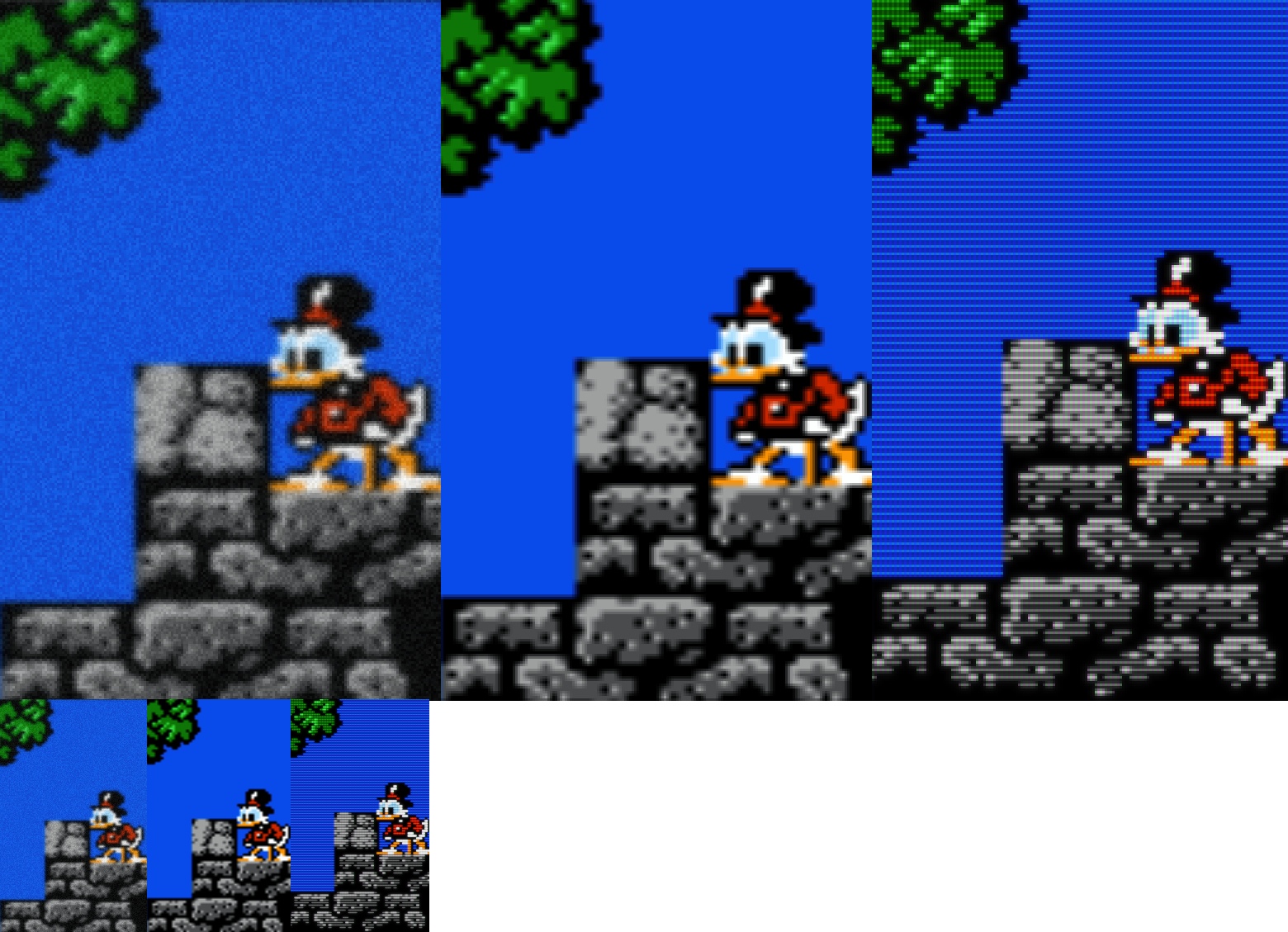

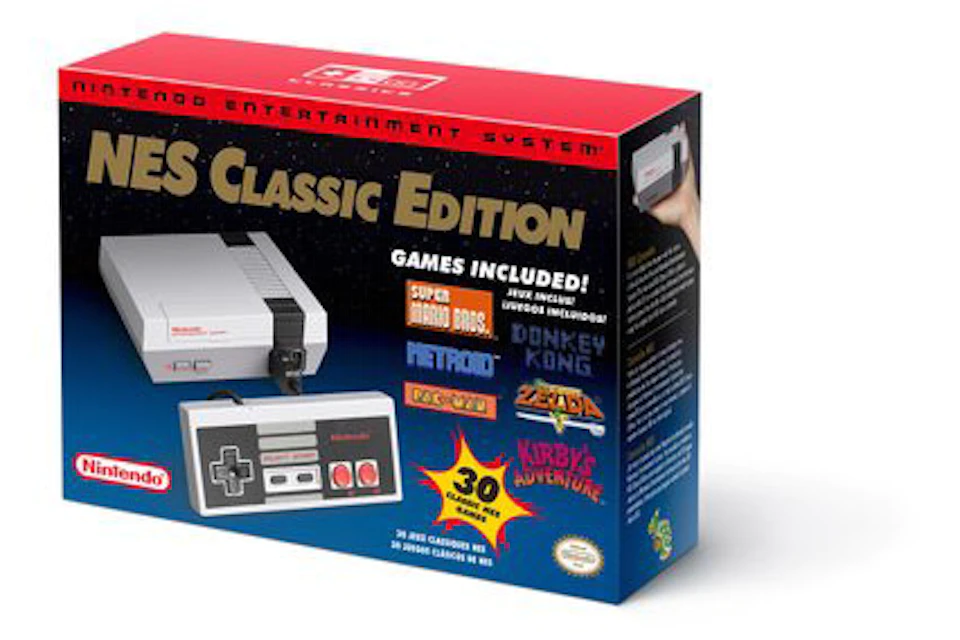

The NES Classic is just an emulation machine. All the classic NES, SNES, and N64 games on the Switch run on emulators.

I'm sure anyone can, but is it because it looks worse on original hardware, or because of some magical experience that you can only feel?

Again, you say this, but what evidence do you have besides feels?

If it's emulating the transistors, then that would explain why it's really slow. Also, the end result won't change, just the frames per second. This argument breaks down quickly when you discuss 3D rendering, as newer hardware is just fantastically better at it.

subjective feeling is important, and it's just my opinion that emulation blows

on the last point about the transistor level implementation, this is referred to as Simulation

it's nearer to the hardware simulation that nvidia / amd and others run on big machines, using tools from Cadence and Synopsys, etc

if it was merely "emulation", then that'd be an unreliable means of design verification for their ASICs, but no, it's Simulation at the transistor level
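To put some numbers on the emulation vs Simulation point, here's a toy sketch in Python. All names are hypothetical and nothing here comes from a real emulator or from Cadence/Synopsys tools. It does the same 8-bit add two ways: emulator-style, with one host operation per guest add, and gate-level, where every NAND in a ripple-carry adder is evaluated. This is gate level rather than transistor level, and real console CPUs and EDA simulators are far more involved, but it shows the trade-off being argued about: same result, far more work per operation.

```python
# Toy comparison (hypothetical names): behavioral emulation vs gate-level simulation
# of an 8-bit add. Gate level is a simplification; real transistor-level tools differ.

def behavioral_add(a: int, b: int) -> int:
    """Emulator-style: one host operation per guest ADD, wrapped to 8 bits."""
    return (a + b) & 0xFF


class GateSim:
    """Evaluates an 8-bit ripple-carry adder built only from NAND gates."""

    def __init__(self) -> None:
        self.evals = 0  # number of NAND gate evaluations performed so far

    def nand(self, x: int, y: int) -> int:
        self.evals += 1
        return 0 if (x and y) else 1

    def xor(self, x: int, y: int) -> int:
        # Standard 4-NAND XOR construction
        n = self.nand(x, y)
        return self.nand(self.nand(x, n), self.nand(y, n))

    def full_adder(self, a: int, b: int, cin: int) -> tuple[int, int]:
        s1 = self.xor(a, b)
        total = self.xor(s1, cin)
        # carry = (a AND b) OR (s1 AND cin), expressed with NANDs only
        cout = self.nand(self.nand(a, b), self.nand(s1, cin))
        return total, cout

    def add8(self, a: int, b: int) -> int:
        result, carry = 0, 0
        for i in range(8):  # ripple the carry from bit 0 up to bit 7
            bit, carry = self.full_adder((a >> i) & 1, (b >> i) & 1, carry)
            result |= bit << i
        return result  # final carry-out is dropped, matching the & 0xFF wrap


if __name__ == "__main__":
    sim = GateSim()
    print(behavioral_add(200, 100), sim.add8(200, 100), sim.evals)  # 44 44 88
```

Both paths give 44 for 200 + 100, but the gate-level one takes 88 NAND evaluations (11 per bit) for what the emulator does in a single host add. Scale that up to a whole CPU and PPU, every clock, and you get the "same output, fewer frames per second" result described above.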
