Legendary Gamer

[H]ard|Gawd

- Joined: Jan 14, 2012
- Messages: 1,590

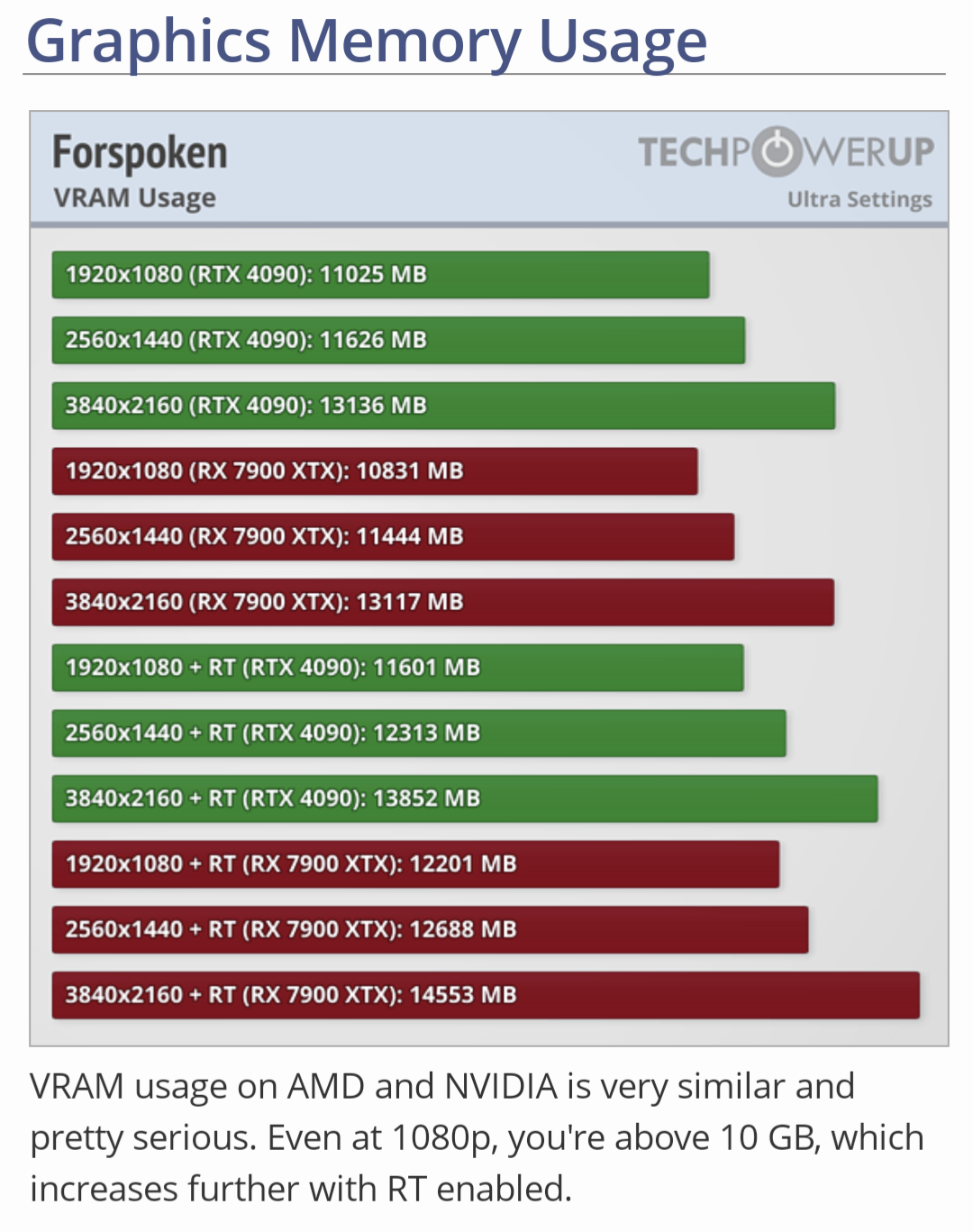

In a perfect world I agree with you. However, today we rarely see optimized titles on PC, if at all. Games have been shipping broken for almost a decade now (if not longer; see below). For as long as broadband internet has been viable and widely available, everything has launched broken and been patched over time. Mass Effect Andromeda came out in 2017, six years ago, and it was a broken mess. It got patched up to a point and then dropped because of the backlash it faced. Numerous titles have been dropped due to backlash, including Anthem, which could have been good had it received continued support and updates.

I'd agree with high VRAM usage if it's "optimized" VRAM usage, in other words no bugs, memory leaks, etc. Unfortunately, we're not at the point where devs are going to start churning out games that use 12GB+ of VRAM. As you said, that won't happen until 16GB is mainstream, which will be a while, since consoles pretty much dictate where gaming is and where it's going.

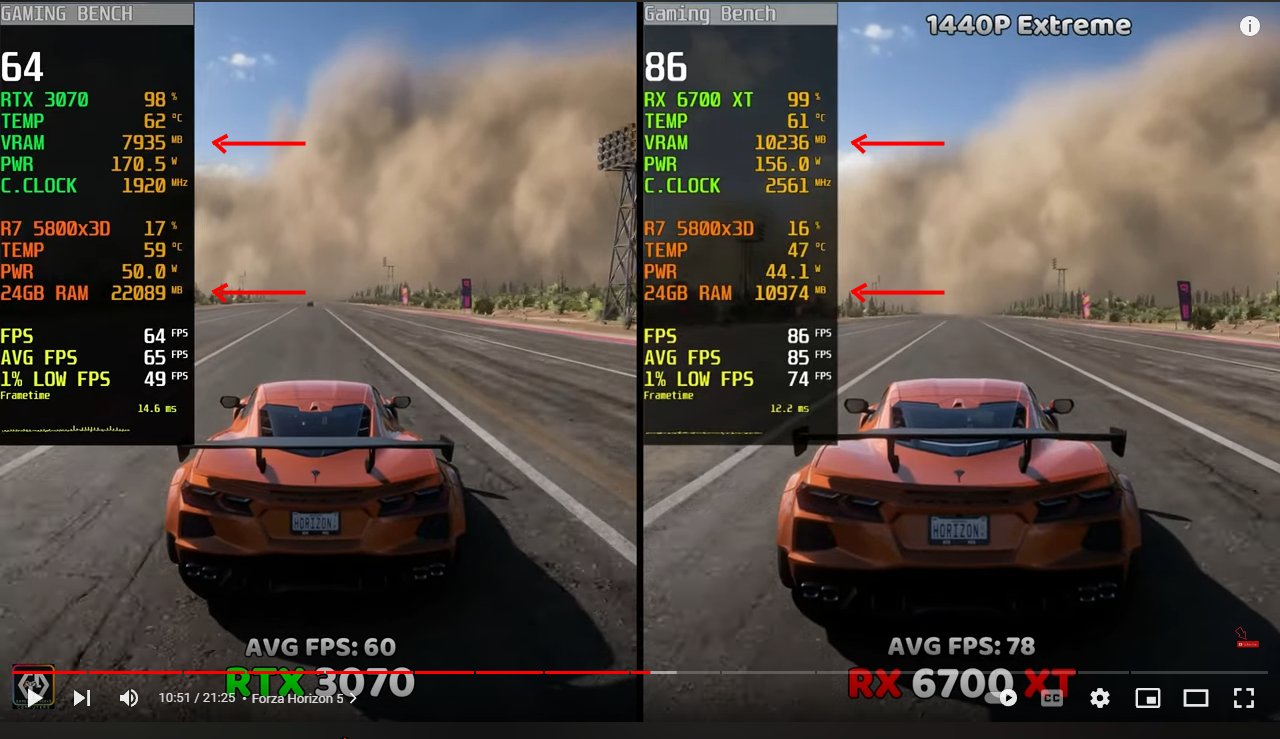

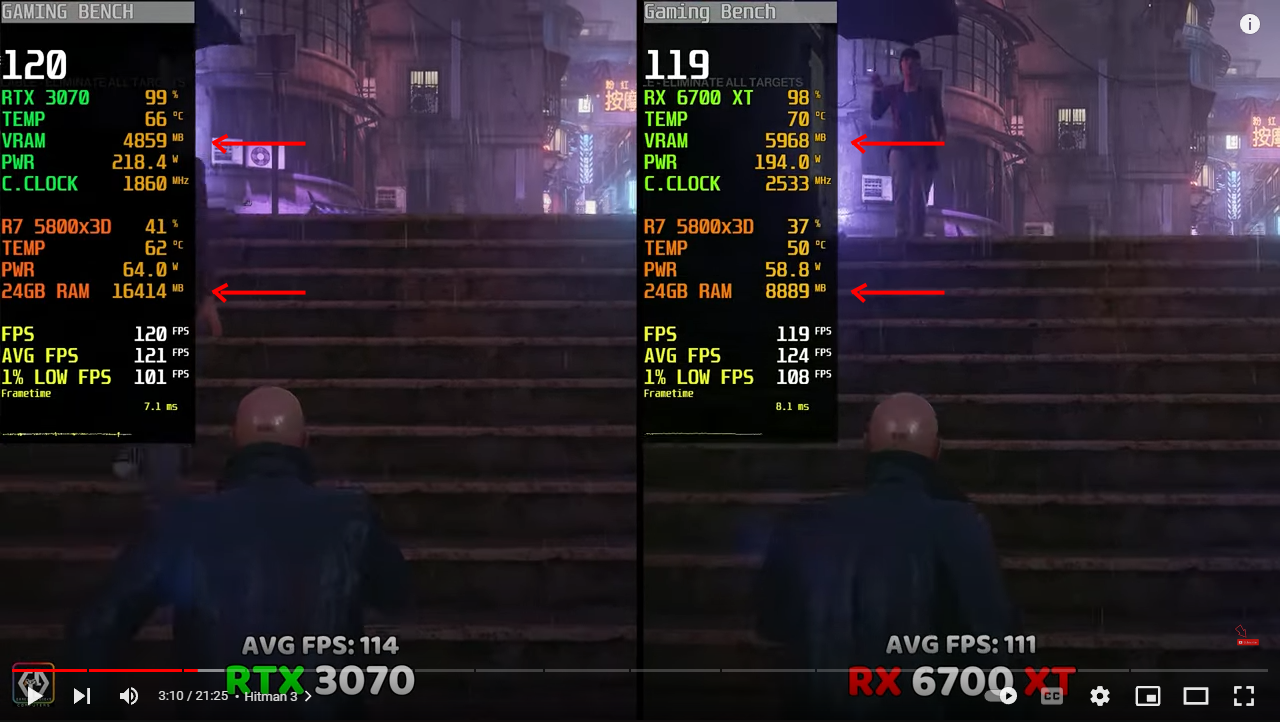

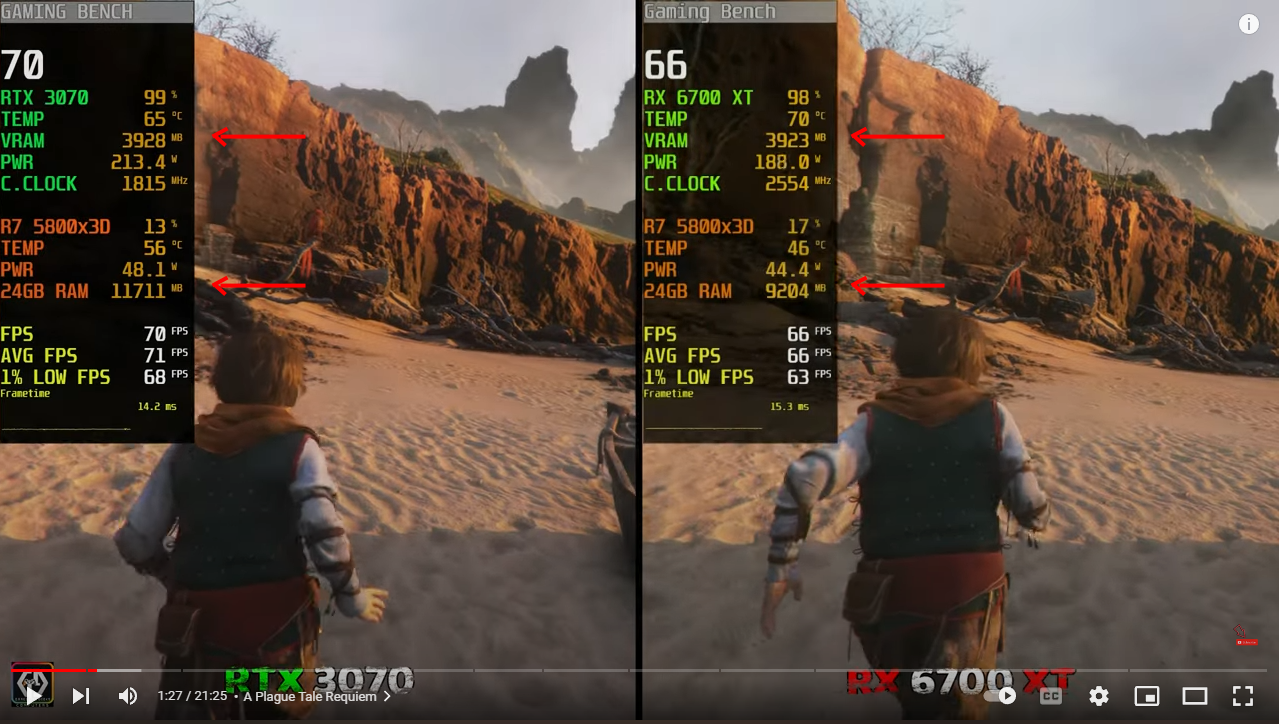

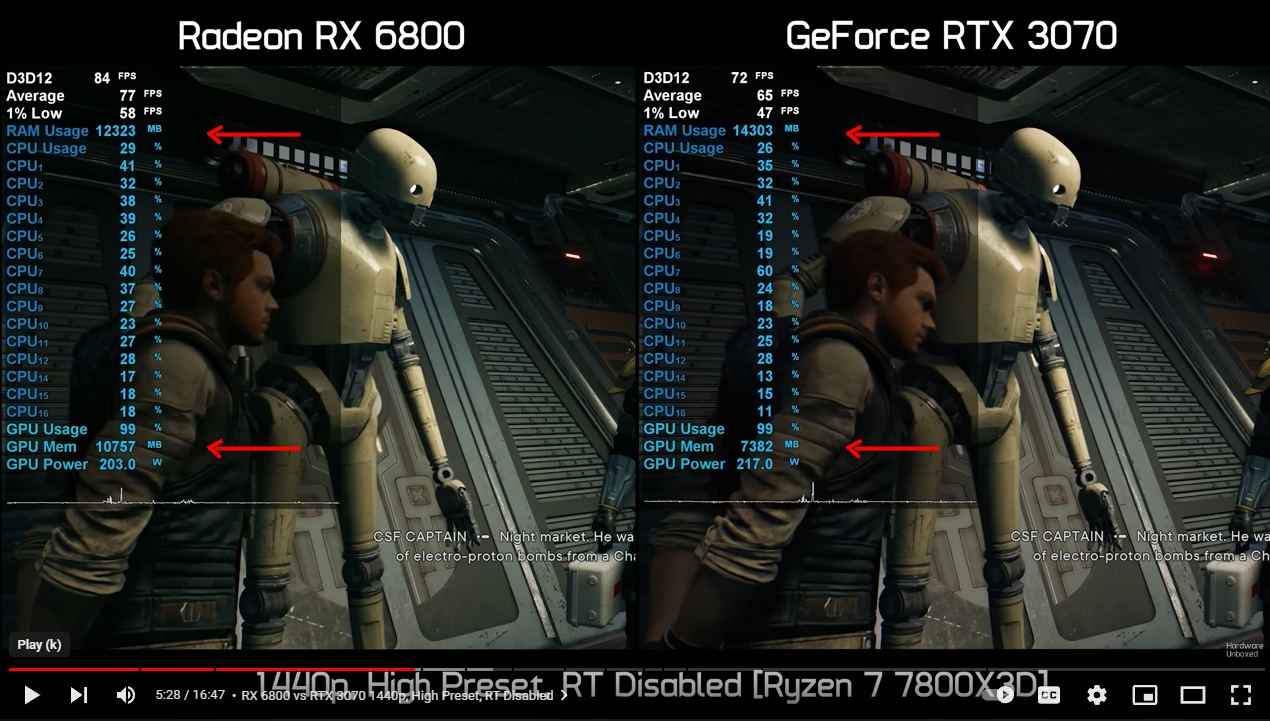

I think the backlash against this title won't set us back; if anything, it'll hopefully push devs to properly optimize their games prior to release. The issue with AAA games on PC this year hasn't been graphics or gameplay so much as optimization. Just look at games like Cyberpunk 2077, Control, and Witcher 3 as examples of games that look good, really good, but don't require 12GB+ to run. Then look at Jedi Survivor and ask how in the hell this game can use 20GB+ of VRAM. Hogwarts Legacy is another title that looks great, but definitely not 12GB+ VRAM great; it doesn't look better than CP2077 or Witcher 3. Games are just being released broken, but the problem is that people are putting more emphasis on VRAM than what's really, truly needed. 16GB of VRAM is quite a bit; it's an amount we probably won't see used for at least another six or seven years, unless of course you're into modding, adding high-res textures and all that jazz. The kicker is that the majority of gamers are still running 1080p, not 1440p or 2160p, where more VRAM becomes more important, though less for the capacity itself than for the wider memory bus that comes with it.

At the end of the day, I'm in the group that firmly believes 12GB is sufficient for 1440p and below, and 16GB is sufficient (for memory bus reasons) for 4K. We won't see many games naturally using more than 12GB unless they're not properly optimized. I'm not saying this as a 4070 Ti owner; I'm saying it as a realist. Games aren't improving visually enough to justify the drastic uptick in VRAM usage, and if CP2077 is anything to go by, true usage of anything near or above 12GB would bring even a 4090 to its knees. If games come out looking worse than CP2077 or Witcher 3 while requiring more resources, then it's on game devs to fix, not Nvidia or AMD; otherwise game devs would just release broken game after broken game with little to no optimization or patching. Expecting Nvidia and AMD to keep band-aiding the issue with more VRAM or faster GPUs every time a wave of broken games is released would get very expensive very quickly, still wouldn't fix the issue at its root, and that right there would be the downfall of PC gaming.

It's nice to think games should release in a polished state, but it's just not reality. Old PC games had bugs in 'em and you didn't even see most of them, though some were game-breaking at times. As games got more complex, they started launching in a broken state. Hell, I recall MechWarrior 3 releasing largely buggy, and that was 1999...

This has been going on for a long time. Not gonna change anytime soon.
