drescherjm

[H]F Junkie

- Joined

- Nov 19, 2008

- Messages

- 14,941

I am not writing games. I agree that for UI responsiveness in a game you don't want 100% CPU usage on all cores.


By how much? And I'm not talking about now (even though performance has improved, and is still improving, since the day-one reviews); I'm talking about next year, when the new consoles drop and every Tom, Dick and Harry has an 8c/16t Zen 2 in their living room?!


You do realize every single CPU today is governed by form factor and heat, right? Feel free to put any CPU in that form factor and see what happens.

The shared resource is the RAM, which is split between system and graphics. That means the 8GB is not dedicated to just the system or just graphics but split between the two, and that handicaps the performance of the system. Consoles are actually not as future-proof as you think. They have a shelf life, and then they can't be used anymore: the current generation gets maybe 6 years max, and the previous generation got 7. My last PC lasted me 8 years and was playing new games every year. The biggest difference is that you can swap parts out and update your PC without having to buy a whole new system and all new games. That's where the PC has the advantage. Consoles only have a price advantage, but that gap is closing with each new generation.

I'm not talking about anything to do with heat issues in consoles, because let's face it, that's exactly why consoles are inferior by design... they are purposely limited.

Jaguar cores are desktop cores; the only differences are the memory controller and the number of cores offered (4 on desktop vs. 8).

If you have enough cores, clockspeed matters. If you don't have enough cores, both matter.

Put another way, once you have enough cores, adding more cores does nothing, but adding clockspeed (and/or IPC) does.

Currently, that number of cores for gaming is six. That's why AMD's 1600 / 2600 / 3600 have been heralded as such good buys for gamers.
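The "enough cores" argument can be sketched as a toy model. It's a deliberately crude assumption, not how any real engine schedules work: the game keeps a fixed number of threads busy (six here, matching the post), each thread gets its own core, and a core's speed is just clock × IPC. SMT, uneven thread loads and memory stalls are all ignored.

```python
# Hypothetical toy model: the game keeps GAME_THREADS threads busy, each thread
# needs its own core, and per-core speed is simply clock * IPC.
GAME_THREADS = 6  # the "six cores for gaming" figure from the post above


def relative_throughput(cores: int, clock_ghz: float, ipc: float,
                        game_threads: int = GAME_THREADS) -> float:
    """Game work done per unit time, in arbitrary units.

    Cores beyond game_threads have nothing to run, so only
    min(cores, game_threads) of them contribute clock * IPC each.
    """
    usable_cores = min(cores, game_threads)
    return usable_cores * clock_ghz * ipc


configs = [
    ("4 cores @ 4.0 GHz", 4, 4.0),
    ("4 cores @ 4.4 GHz", 4, 4.4),
    ("6 cores @ 4.0 GHz", 6, 4.0),
    ("12 cores @ 4.0 GHz", 12, 4.0),
    ("6 cores @ 4.4 GHz", 6, 4.4),
]
for label, cores, clock in configs:
    print(f"{label}: {relative_throughput(cores, clock, ipc=1.0):.1f}")
```

In this model, going from 6 to 12 cores changes nothing, while a 10% clock bump gives 10% more; below six cores, both the core count and the clock move the number.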

Also, your "consoles have eight cores" argument has already been shown to be bunk above. Jaguar cores are not comparable to desktop x86 cores.

You are making my point... Thank you.

Also, shared GDDR5 isn't the same as shared DDR4.
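A back-of-the-envelope way to see one difference is theoretical peak bandwidth: transfer rate times bus width in bytes. The two configurations below are assumptions (commonly cited spec-sheet figures for the base PS4 and a typical dual-channel DDR4-3200 desktop), not numbers from the thread, and a peak figure says nothing about latency or how much of a shared pool the GPU takes.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second * bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Assumed configurations (spec-sheet figures, not from the thread):
# base PS4: GDDR5 at 5500 MT/s on a 256-bit bus, shared by CPU and GPU
ps4_gddr5 = peak_bandwidth_gbs(5500, 256)
# desktop: dual-channel DDR4-3200, i.e. 2 x 64-bit channels = 128 bits
desktop_ddr4 = peak_bandwidth_gbs(3200, 128)

print(f"PS4 GDDR5 (shared):     {ps4_gddr5:.1f} GB/s")
print(f"Dual-channel DDR4-3200: {desktop_ddr4:.1f} GB/s")
```

Under those assumptions the console pool has roughly 176 GB/s versus about 51 GB/s for the desktop DDR4, but on the console that bandwidth is split between the CPU and the GPU.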

Intel is finding new and "exciting" ways to re-release the same chips and get more reviews to attract clicks. It's all just mildly refined 2017 dies, be it this or the HEDT refresh.

But reviewers need the clicks too; it's not like they're gonna say "meh, it's a clock-bumped 9900K, not worth a review".


My point isn't that one is better than the other. My point is that all of it matters.

Only if you completely ignore the fact that all PCs are governed by TDP.

Nope.

One is better than the other, so much so that there is a tremendous gap in performance potential on the desktop side.

They're not.

Jaguar cores are x86 desktop cores.

They're trimmed-down low-power cores for appliances.

Tell that to my Athlon 5350-based system, which is a desktop computer.

So, a beefed-up GPU on a notebook/appliance APU core.

I can run Windows on an ARM tablet and set it on a desk, making it a 'desktop computer' too. Don't be too harsh on your Athlon 5350.

Yeah, low-power CPU and high (for a console) power GPU, combined into an APU.

Dude, the Athlon is a desktop x86 CPU, and them's the facts, jack. (Truth hurts.)


The Jaguar cores were designed for APUs, not for full desktops.

It is a compact 3.1 mm² core that targets 2-25W devices, in particular tablets, microservers, and consoles.

https://www.realworldtech.com/jaguar/

They target consoles and lower-powered devices, not desktop computers.

At least logical. Don't get your religion bunched up here; r/AMD makes The Faithful here on [H] look sane.

Has that been confirmed? Five-plus years of development and a new process, and you honestly think they'll run at 1.8GHz like Jaguar? They're about double the IPC, and the 3700X does 3.6GHz base and 4.4GHz max boost in 65W... The PS4 Pro can use up to 310W, leaving plenty for the system and GPU with a 65W CPU TDP...

Running at 1.8GHz...


Expect them to lean more toward efficiency, especially as CPU requirements haven't risen nearly as much as GPU requirements have, and both parts together have to fit within the TDP limits set by AMD's customers.
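The shared-envelope point can be shown with simple arithmetic: every watt the CPU takes is a watt the GPU can't have. The 310 W total is the PS4 Pro figure quoted in the thread and is treated here as an upper bound; the CPU budgets are illustrative assumptions, not announced specs for any console.

```python
# Hypothetical sketch of a shared CPU + GPU power envelope.
# TOTAL_W is the 310 W PS4 Pro figure quoted in the thread; the CPU budgets
# below are illustrative assumptions, not announced console specs.
TOTAL_W = 310


def left_for_gpu_and_system(total_w: float, cpu_w: float) -> float:
    """Watts remaining once the CPU takes its share of a fixed envelope."""
    if not 0 <= cpu_w <= total_w:
        raise ValueError("CPU budget must fit inside the total envelope")
    return total_w - cpu_w


for cpu_w in (65, 45, 30):
    remaining = left_for_gpu_and_system(TOTAL_W, cpu_w)
    print(f"CPU at {cpu_w} W -> {remaining} W for the GPU and everything else")
```

Clocking the CPU down from a desktop-style 65 W to, say, 30 W frees 35 W for the GPU in this sketch, which is the trade-off the post describes.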


Exactly. The ~2.5GHz 8c/16t Zen 2 cores, programmed for dedicated hardware, will be extremely power efficient and an incredible leap in processing power over last gen, leaving the majority of the juice for the GPU.
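Putting the thread's own estimates together gives a rough size for that leap: per-core throughput scales with the IPC ratio times the clock ratio. The inputs are assumptions lifted from the posts (Jaguar at 1.8 GHz, "about double IPC", ~2.5 GHz for a console Zen 2, 3.6 GHz for a 3700X base clock), not measurements, and the sketch ignores SMT and memory effects.

```python
# Rough per-core throughput ratio: (IPC ratio) * (clock ratio).
# All inputs are forum estimates from this thread, used as assumptions.
JAGUAR_GHZ = 1.8
ZEN2_IPC_RATIO = 2.0  # "about double IPC": a rough estimate, not a benchmark result


def per_core_speedup(new_ghz: float, old_ghz: float = JAGUAR_GHZ,
                     ipc_ratio: float = ZEN2_IPC_RATIO) -> float:
    """Estimated per-core speedup of the new core over the old one."""
    return ipc_ratio * new_ghz / old_ghz


for zen2_ghz in (1.8, 2.5, 3.6):
    print(f"Zen 2 @ {zen2_ghz} GHz vs Jaguar @ {JAGUAR_GHZ} GHz: "
          f"~{per_core_speedup(zen2_ghz):.1f}x per core")
```

Even at the same 1.8 GHz this gives about 2x per core, about 2.8x at ~2.5 GHz and 4x at 3.6 GHz; with eight cores on both sides, the same ratio would roughly carry over to the whole chip under full load.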

And look how far last gen pushed 8 lowly cores. Current consoles are no slouch, which is obvious to anyone who plays the games rather than pigeonholing them into the "appliance" space.

"The AMD Athlon 5350 Kabini is a budget-friendly, low-power System on Chip (SoC) processor that incorporates CPU, GPU and I/O controller in a single package." - Directly from the Newegg description.

And yet, it is in my desktop computer, go figure. Hmmmm...

No longer available and yet, listed under the desktop processors: https://www.newegg.com/amd-athlon-5350/p/N82E16819113364

Low-budget CPU and GPU... you're trying to make an argument for something, I just don't know what it is. I get that it's in your desktop, but I know for a fact that your desktop is not blazing up the benchmarks... which is the whole point of this topic. It's not made to run a desktop at high performance.

People are arguing that the old Jaguar cores are going to blow away specific desktop CPUs; it's just not going to happen.

OK, well, I never claimed it wasn't... All I said is that it is a desktop x86 processor, despite the claims of others.


This was a quote directly from the website that was posted right below that line.

3.1mm²?!


It's not based on current hardware. The video specs are RDNA2, and there's not a single card out with that architecture upgrade.

The 8K claim is completely false; they don't even have video cards that can properly do that now... and the PS5 is based on current hardware. We just started getting cards that can properly do 4K.
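For scale, 8K is four times the pixels of 4K, which is itself four times 1080p. The sketch below just does that arithmetic; treating rendering cost as growing roughly with pixel count is a simplifying assumption (upscaling and other tricks change the picture).

```python
# Pixel counts for common 16:9 resolutions. Shading work scales roughly with
# pixel count when everything else is held equal (a simplifying assumption).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def pixel_count(width: int, height: int) -> int:
    return width * height


four_k = pixel_count(*RESOLUTIONS["4K UHD"])
for name, (w, h) in RESOLUTIONS.items():
    pixels = pixel_count(w, h)
    print(f"{name}: {pixels:,} pixels ({pixels / four_k:.2f}x 4K)")
```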

Had one too running a storage server. Don't know what they are on.

When it launched, it was faster than what Intel had in the same TDP range.

The only reason we are even talking about Jaguar in the first place is because people have somehow gone back to the "clockspeed is king" mantra. It's not.

I run a storage server on an old Raspberry Pi. You don't need to help him justify his feelings, lol.

Current consoles are impressive for what they are, but I can still see dips to what looks like around 30Hz. This generation of Ryzen should fix that for the most part, though, even at ~2.5GHz.

I know VRR TVs are coming out, so if that all comes together it would be an even bigger improvement.
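Why dips show up as ~30Hz on a fixed-refresh TV, and why VRR helps, can be sketched with a simplified frame-pacing model. The assumptions are: a steady render time per frame, double-buffered v-sync on a 60 Hz display, and a VRR range wide enough to cover the render times tested (real panels have a lower bound this ignores).

```python
import math


def on_screen_ms(render_ms: float, max_refresh_hz: float, vrr: bool) -> float:
    """Time between displayed frames for a steady per-frame render time.

    Simplified model (an assumption): fixed refresh uses double-buffered
    v-sync, so a late frame waits for the next refresh tick; VRR refreshes
    as soon as the frame is ready, but never faster than the panel maximum.
    """
    period_ms = 1000.0 / max_refresh_hz
    if vrr:
        return max(render_ms, period_ms)
    return math.ceil(render_ms / period_ms) * period_ms


for render_ms in (12.0, 18.0, 25.0):
    fixed = on_screen_ms(render_ms, 60, vrr=False)
    variable = on_screen_ms(render_ms, 60, vrr=True)
    print(f"{render_ms:.0f} ms/frame: fixed 60 Hz -> {1000 / fixed:.0f} fps, "
          f"VRR -> {1000 / variable:.0f} fps")
```

In this model an 18 ms frame, just over the 16.7 ms budget, drops straight to 30 fps on a fixed 60 Hz display but lands around 56 fps with VRR, so a faster CPU and a VRR TV attack the same symptom from two sides.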

For desktop, I'd personally go with the KS over the 3900X. I can't even saturate the 8 cores of my 9900KF; the extra four cores are useless to me. Even Adobe Premiere Elements only uses 8 threads (so I'd imagine it's mostly saturating the CPU). It's kind of strange logic, but for me the KS is faster at everything for the same price. I'd really have to compare it to the 3800X, which has nothing on an OC'd KS except a minimal price difference (in the scheme of total system cost).

The 3900X/3950X is basically the same as Tensor cores on the RTX cards: it's AMD trying to leverage their (massive) chiplet advantage in the HEDT/enterprise space into the consumer realm, even though it makes little sense and adds little value for the consumer.

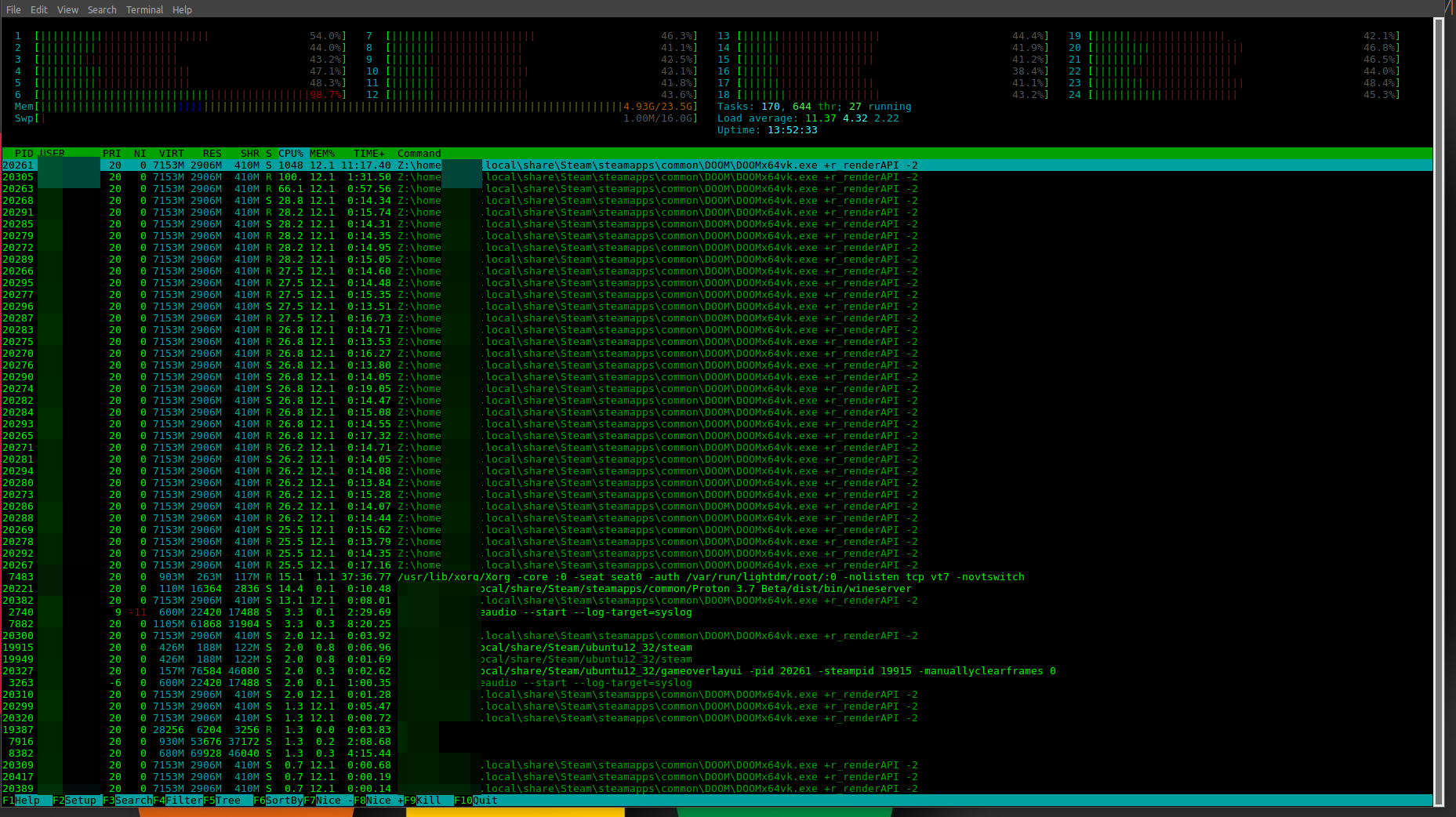

Newer APIs are getting better at making full use of all cores. This is a screenshot of Doom 2016 running the Vulkan renderer; that's not jumping cores, that's using all available cores. Yes, they're not getting used 100%, but the fact that they're being used reduces the ever-increasing need for higher clock speeds, knowing only too well that we're hitting the limits of silicon technology.
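Amdahl's law is the standard way to put numbers on this: if a fraction p of each frame's CPU work can be spread across n cores, the speedup is 1 / ((1 - p) + p / n). The p values below are illustrative assumptions, not measurements of Doom or of Vulkan; the point is that the more parallel the engine, the more extra cores can stand in for extra clock speed.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0 or cores < 1:
        raise ValueError("need 0 <= p <= 1 and cores >= 1")
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


# Illustrative parallel fractions (assumptions): a mostly serial engine vs.
# one that spreads most of its frame work across threads.
for p in (0.5, 0.9):
    row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (1, 4, 8, 16))
    print(f"p = {p}: {row}")
```

With half the work serial, 16 cores barely beat 8; with 90% parallel work, going from 8 to 16 cores is still worth a sizeable gain in this model.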


Feelings? You are projecting, aren't you? I stated objective fact, you stated subjective opinion and feelings........
