Thunderdolt

Gawd

- Joined: Oct 23, 2018
- Messages: 1,015

First of all, my apologies if this is in the wrong forum.

Secondly, my further apologies if this ends up as a crosspost because I don't get the answer I was hoping for from [H]ard.

Thirdly, let's get to the shit.

I'm putting together a quad 2080 Ti build. I'm running into two issues. One of them is important and the other one isn't. Here's the important one:

I'm trying to run this as two completely independent loops, with each loop running a pair of GPUs (the CPU is on its own third loop but it mostly sits idle anyway). THIS IS NOT IN ANY WAY INTENDED TO BE OPTIMIZED FOR GAMING SO PLEASE STFU ALREADY THANKS. Also, I'm not mining with this setup either - so STFU about that as well. Kindest regards, please do the needful.

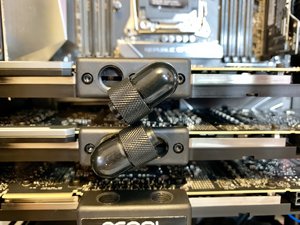

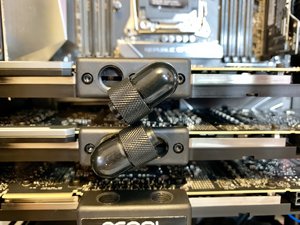

Quad blocks don't work because of the two-loop thingy I mentioned just now. Twin double blocks don't really work because they are too wide to fit two of them side by side. This leaves me manually routing between cards. I initially hoped/dreamed that I could fit 90deg joints in between card pairs. It turns out that this is impossible:

But one of the nice things about Alphacool is that they also give you a 90deg/vertical adapter with each GPU block. OMG I'M SAVED!!@!#!!..... right?

LMAO wrong. Expletive. Heavy breathing. One more Expletive. Ok, we're good now.

I think the easiest solution is to just plumb the odd cards together on one loop and the even cards on a second loop. It will be ugly, but it will be effective (your mom joke goes here). For the primary usage, I really don't see an issue here since all cards will be worked evenly when the CUDAs are crunching. When I'm slacking off and playing games, however, cards 1 & 3 are likely going to be working harder since they'll be NVLink-connected, which puts the whole gaming load on one loop. That loop goes through a 480x60mm radiator, so I guess WGAF it'll be fine, but I'll still know on the inside that this isn't ideal. Sometimes, it's what's on the inside that counts (joke #2 about your mom goes here).

So, with that out of the way, any suggestions on how to clean this up and make it work as I had dreamed?

As a distraction, Issue #91 out of 100 is this:

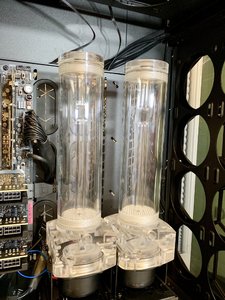

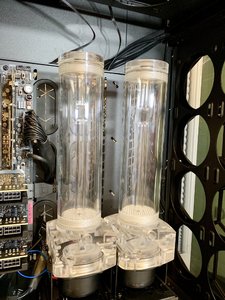

What's a good way to get these two reservoirs parallel with each other? The button heads down below are interfering with each other, and that ends up showing big time with the reservoirs.

The tiny little Corsair 1000D doesn't have mounting on the back wall for two reservoirs. It has mounting for one and the rest of the space is taken up by grommets and cutouts for wires which don't exist.

Any suggestions?

