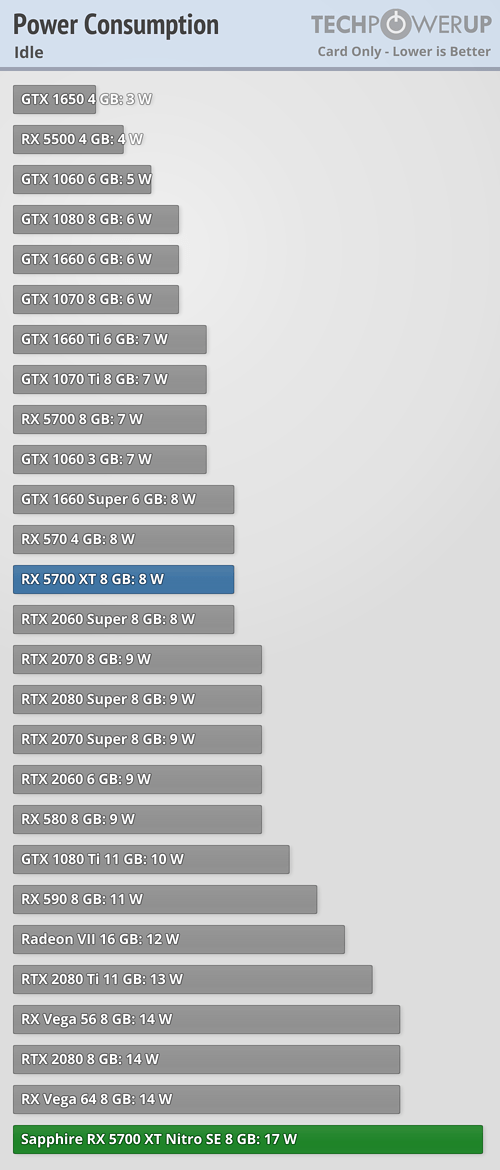

Do high-power GPUs generally draw more power in non-gaming situations than, say, an APU or a GTX 1650 (no external power connector)? Realistically I'll only game about 5% of the time the computer is on, so I want to know what kind of wattage these cards pull when they aren't being stressed. For the top cards (with two power cables and all), is there a kind of power tax just for being installed and running? Think of leaving the lights on in a room you never go into.

If it's all the same, I could just pick up a monster card and be done with it. But if they differ, by how much?
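One way to put a number on that "power tax" is a time-weighted average: with about 5% of the on-time spent gaming, the idle draw dominates the total. The sketch below assumes you look up real idle and gaming wattages for each card from reviews; the figures in it (20 W / 300 W for a big card, 7 W / 75 W for a small one, 8 hours on per day) are hypothetical placeholders, not measurements.

```python
def average_power_watts(idle_w: float, gaming_w: float, gaming_fraction: float) -> float:
    """Time-weighted average draw: gaming_fraction of the time at gaming_w, the rest at idle_w."""
    if not 0.0 <= gaming_fraction <= 1.0:
        raise ValueError("gaming_fraction must be between 0 and 1")
    return gaming_fraction * gaming_w + (1.0 - gaming_fraction) * idle_w


def yearly_kwh(avg_w: float, hours_on_per_day: float) -> float:
    """Energy over a year: watts * hours per day * 365 days, converted to kWh."""
    return avg_w * hours_on_per_day * 365 / 1000.0


if __name__ == "__main__":
    # HYPOTHETICAL wattages, not measurements: substitute real idle/load figures from reviews.
    big_card = average_power_watts(idle_w=20.0, gaming_w=300.0, gaming_fraction=0.05)
    small_card = average_power_watts(idle_w=7.0, gaming_w=75.0, gaming_fraction=0.05)
    hours = 8.0  # hypothetical hours the PC is on per day
    extra = yearly_kwh(big_card, hours) - yearly_kwh(small_card, hours)
    print(f"big card avg: {big_card:.2f} W, small card avg: {small_card:.2f} W")
    print(f"extra energy per year: {extra:.1f} kWh")
```

Multiplying the extra kWh by your local electricity rate gives the yearly cost of the difference; with gaming this rare, the idle figures matter far more than the peak ones.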