So last night, while browsing through the BIOS on my Crosshair 6 Hero with a 1700X, I noticed that in my SLI setup the first GPU slot is connected at 4x and the second is connected at 8x. When I remove the second GPU, the first slot goes to 8x.

I have run benchmarks that show my 960 Evo is underperforming as well, but I don't know how many lanes it is actually using. If it isn't connected at 4x, that is probably the cause of that too.
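One rough way to guess the lane count from a benchmark number is to compare it against the theoretical bandwidth of each link width. The sketch below is only an illustration: the function names are made up for this post, the per-lane figures come from the published PCIe signalling rates and encodings (Gen 1 and 2 use 8b/10b, Gen 3 uses 128b/130b), and real drives always land somewhat below these ceilings because of protocol overhead. The result is a lower bound on the lane count, not a measurement.

```python
# Raw signalling rate (transfers per second) and encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5e9, 8 / 10),      # 2.5 GT/s, 8b/10b encoding
    2: (5.0e9, 8 / 10),      # 5 GT/s, 8b/10b encoding
    3: (8.0e9, 128 / 130),   # 8 GT/s, 128b/130b encoding
}


def lane_bandwidth_mb_s(gen):
    """Theoretical one-direction bandwidth of a single lane, in MB/s."""
    rate, efficiency = PCIE_GENERATIONS[gen]
    return rate * efficiency / 8 / 1e6  # bits to bytes, then bytes to MB


def link_bandwidth_mb_s(gen, lanes):
    """Theoretical one-direction bandwidth of a whole link, in MB/s."""
    return lane_bandwidth_mb_s(gen) * lanes


def min_lanes_for(measured_mb_s, gen):
    """Smallest standard link width whose ceiling is at or above the measured speed."""
    for lanes in (1, 2, 4, 8, 16):
        if link_bandwidth_mb_s(gen, lanes) >= measured_mb_s:
            return lanes
    raise ValueError("measured speed exceeds a x16 link at this generation")


if __name__ == "__main__":
    for lanes in (1, 2, 4):
        print(f"Gen 3 x{lanes}: {link_bandwidth_mb_s(3, lanes):.0f} MB/s ceiling")
```

For example, a sequential read result well above roughly 2000 MB/s could not come from a Gen 3 link with only two lanes, so a drive that scores in that range must be running on at least four.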

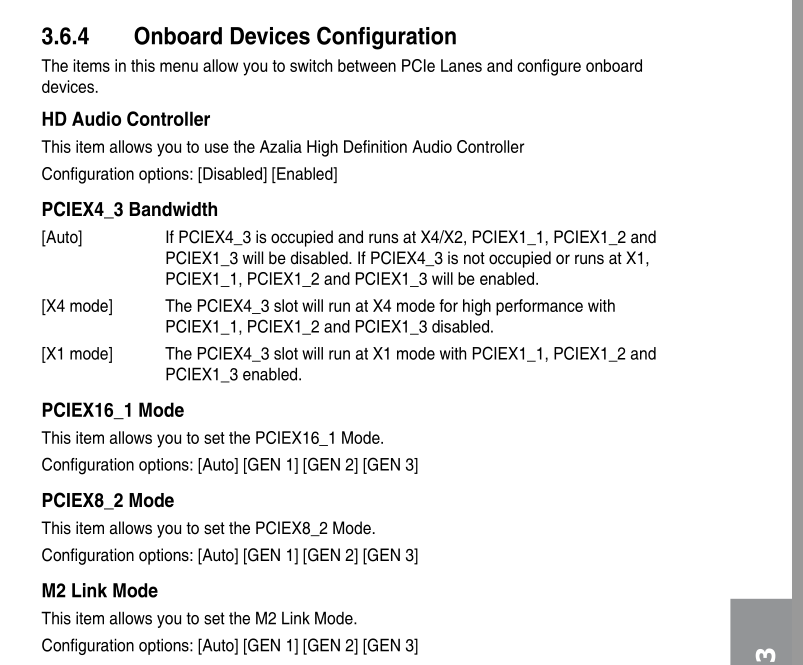

The only setting I found in the BIOS about PCIe lanes is a switch with Auto and Enabled options. In Auto, if it detects two cards it sets the link to 8x/8x; if it is set to Enabled, the link goes to 4x/4x. I have it on Auto.

The only other setting I see on the motherboard is the gen speed of the PCIe slots. The only ones I have changed from Auto are the first and second PCIe slots and the M.2, all of which I set to Gen 3. The motherboard is on the latest BIOS as well.

I never thought to look at the GPU post to see how many lanes the GPUs are actually connected with. Is this just a limit of first-gen Ryzen? Possibly a motherboard issue? I was thinking of just getting rid of the CPU and getting a 3600 since all I do is game anyway, but now I think I need a new motherboard as well...

