UnknownSouljer

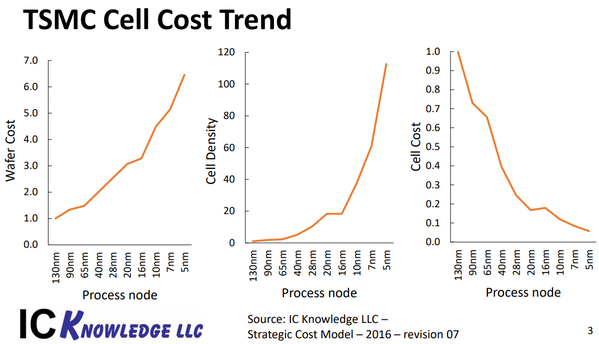

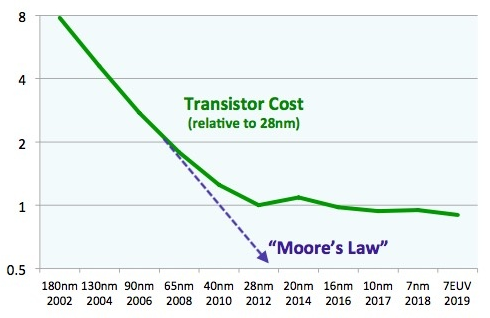

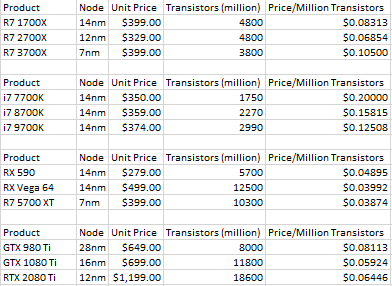

> Given how massively parallel GPUs are, I don't get why they can't just make them bigger. It's not like CPUs, where additional cores don't necessarily get you anything; GPUs are parallel by nature. Who gives a shit if you can't keep packing in transistors at the same form factor? Just make them bigger and slap water cooling blocks on them.

The cost is in the wafer. A bigger die means fewer chips per wafer and a higher chance that any given chip contains a defect. If you're building a supercomputer, you don't really care. But if the only way to make a faster GPU is to put two of them on a PCB, you've effectively just doubled the cost as well as doubled the difficulty with binning.
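The "cost is in the wafer" point can be put into rough numbers. Below is a minimal Python sketch, assuming the common dies-per-wafer approximation and a simple Poisson yield model (textbook models swapped in for illustration, not anything from this thread). The wafer cost and defect density are made-up values, not real foundry figures, and the function names are hypothetical.

```python
import math

# Hypothetical inputs for illustration only; NOT real foundry figures.
WAFER_DIAMETER_MM = 300.0       # standard 300 mm wafer
WAFER_COST_USD = 10_000.0       # assumed cost of one processed wafer
DEFECT_DENSITY_PER_MM2 = 0.001  # assumed defect density (0.1 defects per cm^2)


def dies_per_wafer(die_area_mm2: float, diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Approximate candidate dies: wafer area / die area, minus partial dies lost at the edge."""
    radius = diameter_mm / 2
    gross = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)


def poisson_yield(die_area_mm2: float, d0: float = DEFECT_DENSITY_PER_MM2) -> float:
    """Simple Poisson yield model: probability that a die has zero defects."""
    return math.exp(-die_area_mm2 * d0)


def cost_per_good_die(die_area_mm2: float) -> float:
    """Spread the whole wafer cost over only the dies that come out defect-free."""
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2)
    return WAFER_COST_USD / good_dies


if __name__ == "__main__":
    for area in (300, 600, 1200):
        print(
            f"{area:5d} mm^2: {dies_per_wafer(area):4d} dies/wafer, "
            f"yield {poisson_yield(area):.0%}, "
            f"${cost_per_good_die(area):,.0f} per good die"
        )
```

With these made-up inputs, doubling the die area from 300 mm² to 600 mm² roughly triples the cost per good die, because the wafer yields fewer candidate dies and a smaller fraction of them are defect-free. That is the basic reason "just make them bigger" gets expensive fast.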

Take the Vega II as an example: it's a specialized part that costs $5000 for one.

Although the 3080 is expected to be faster than the previous generation, if the only way to go faster after that is multi-GPU, we can more or less expect top-end GPU prices to shoot through the roof. As appealing as SLI was (past tense, since it's no longer supported), less than 0.01% of people really had the means to afford multiple top-end GPUs. Putting multiple GPUs on the same PCB just brings the same cost and complexity problems as SLI.

![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)