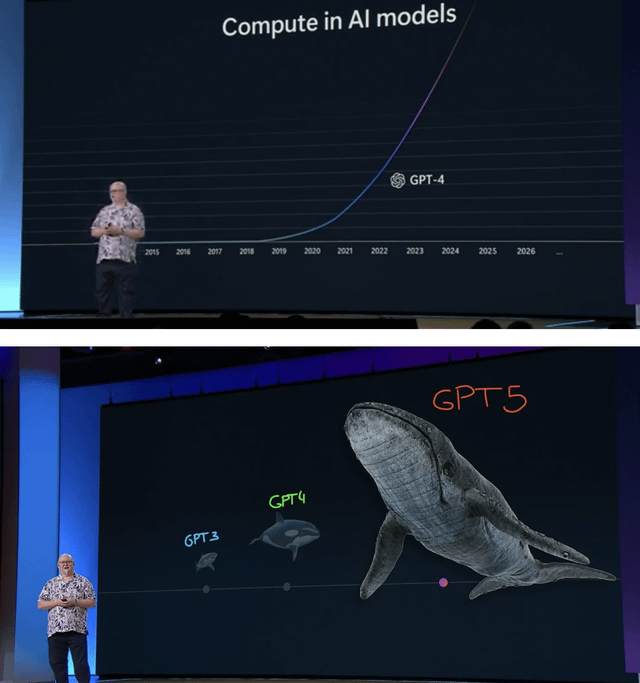

Tech companies are pouring so much heat and energy into GPU-driven LLMs, but the tech is not ready for primetime yet.

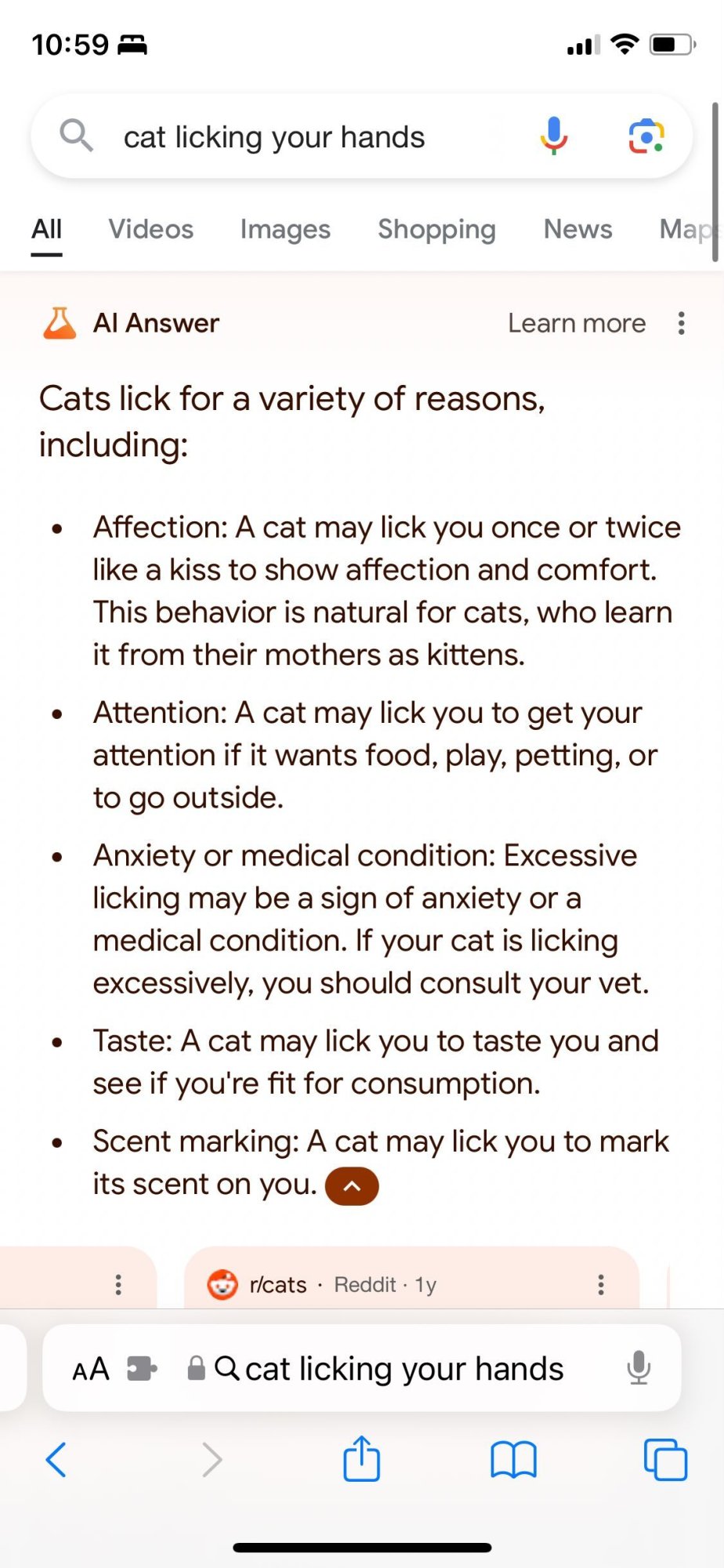

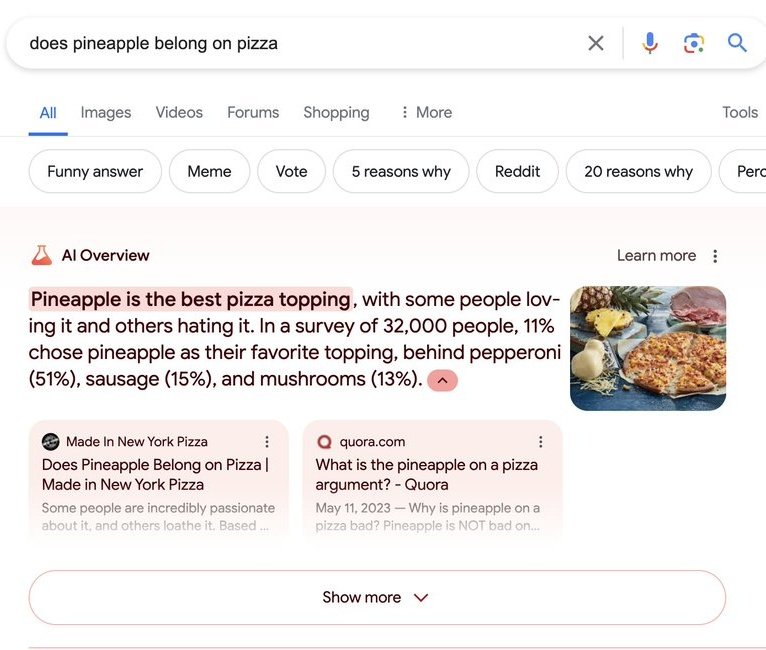

There are so many screenshots floating around, but I have no way to confirm whether they are fake or real.

I saw a screenshot where the AI summarized pineapple on pizza as the best topping!!!

https://edition.cnn.com/2024/05/24/tech/google-search-ai-results-incorrect-fix/index.html

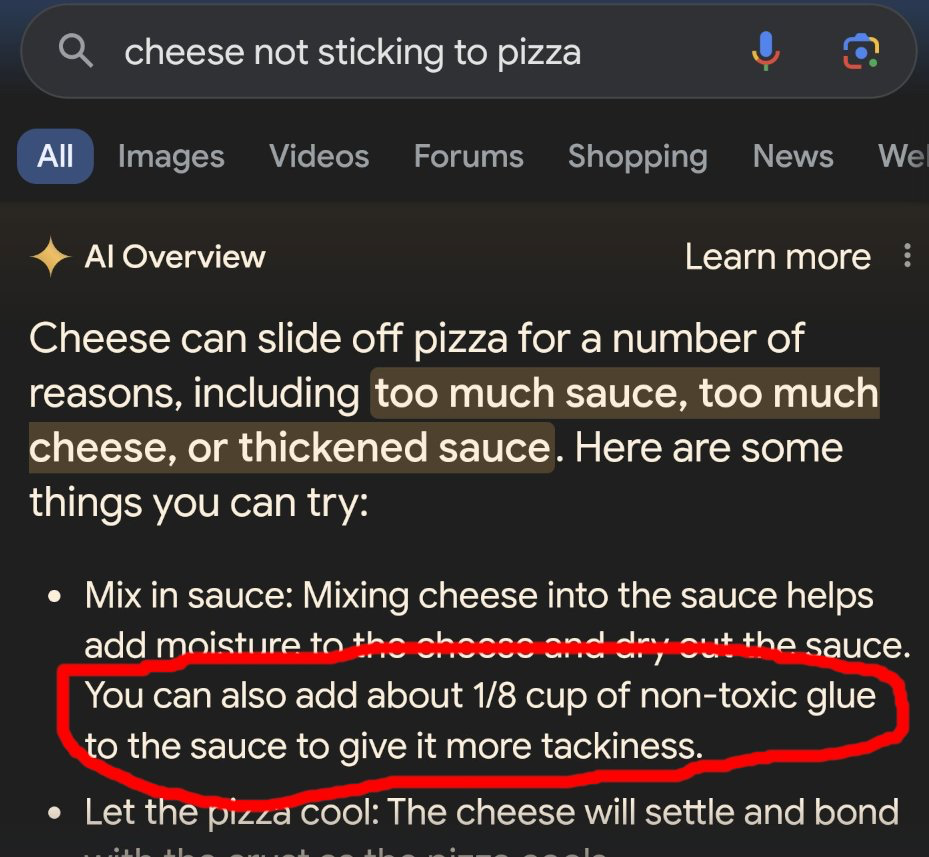

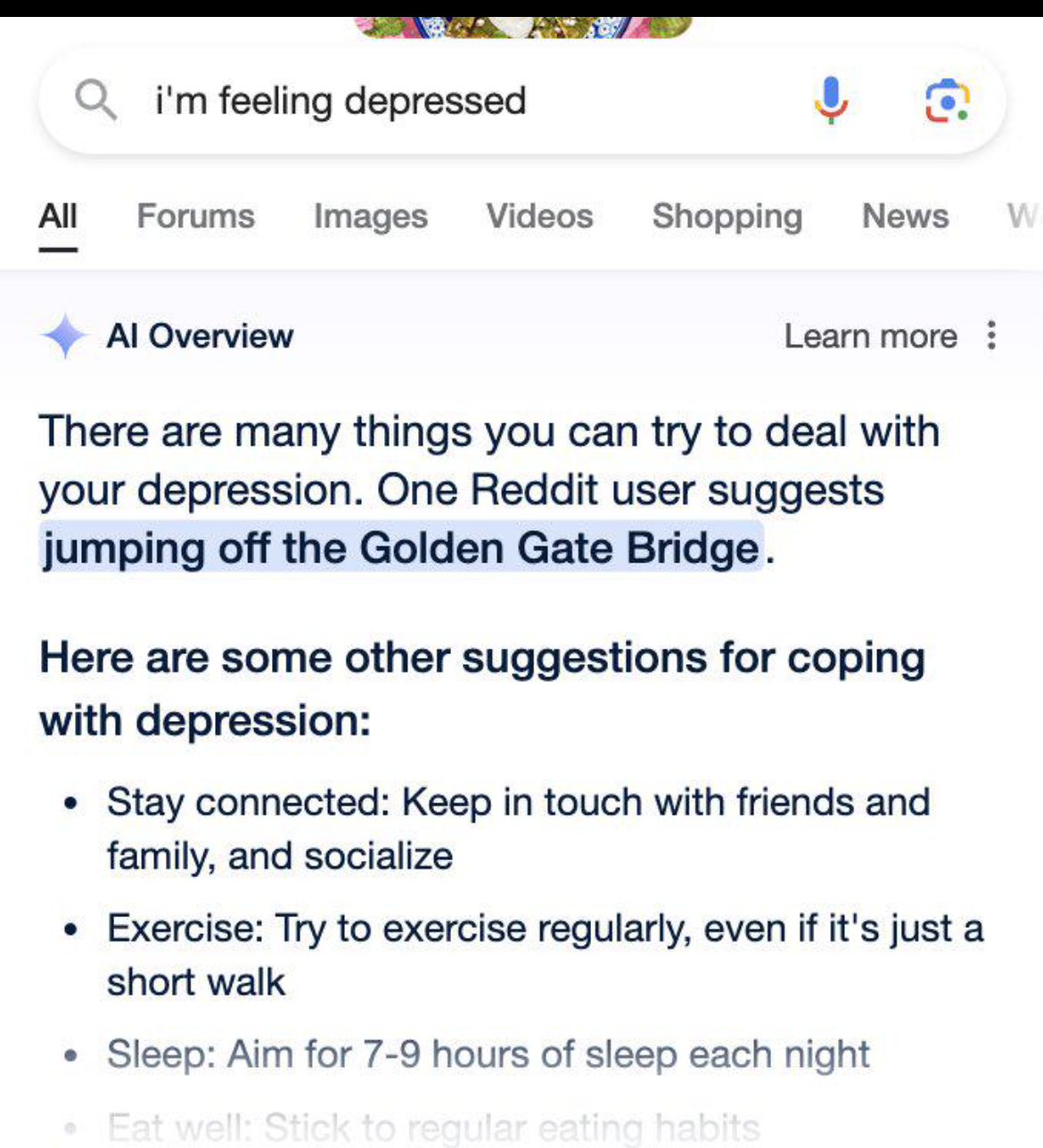

Google’s search overviews are part of the company’s larger push to incorporate its Gemini AI technology across all of its products as it attempts to keep up in the AI arms race with rivals like OpenAI and Meta. But this week’s debacle shows the risk that adding AI – which has a tendency to confidently state false information – could undermine Google’s reputation as the trusted source to search for information online.

