Zion Halcyon

2[H]4U

- Joined: Dec 28, 2007
- Messages: 2,108

HITMAN Lead Dev: DX12 Gains Will Take Time, But They’re Possible After Ditching DX11

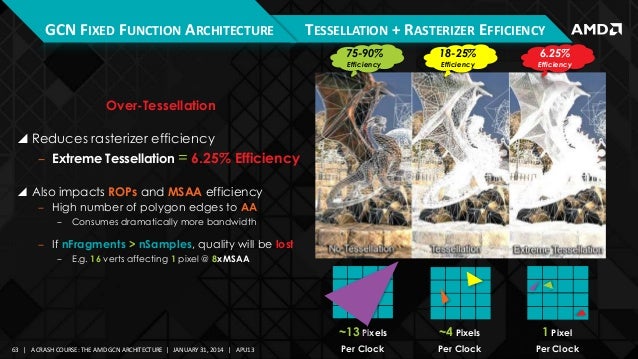

I think it will take a bit of time, and the drivers & games need to mature and do the right things. Just reaching parity with DX11 is a lot of work. A 50% performance gain on the CPU side is possible, but it depends a lot on your game, the driver, and how well they work together. Improving performance by 20% when GPU bound will be very hard, especially when you have a DX11 driver team trying to improve performance on the platform as well. It’s worth mentioning we did only a straight port; once we start using some of the new features of DX12, it will open up a lot of new possibilities – and then the gains will definitely be possible. We probably won’t start on those features until we can ditch DX11, since a lot of them require fundamental changes to our render code.

Read more: http://wccftech.com/hitman-lead-dev-dx12-gains-time-ditching-dx11/#ixzz45ukyOCnt
