- Joined

- May 18, 1997

- Messages

- 55,634


Never gonna happen. You have to pay the "Nvidia Tax" in order to have that green logo on your monitor.

![1501481674403m5pkzqq_2_42_l1.jpg](https://cdn.hardforum.com/data/attachment-files/2017/07/85849_1501481674403m5pkzqq_2_42_l1.jpg)

I understand where you are coming from, but that train of thought maybe should be expanded into something more meaningful for a buyer. For example, when I ask you how those headphones perform, it is more than how loud they go. How that car performs is not limited to 0-60 mph times but includes handling, stopping, feel, etc. Just repeat over and over again in your head 'frame times mean shit . . . mean shit . . . mean shit' and look beyond one limited set of numbers. The holy grail of only looking at frame times should fall down.

It seems like you are being derisive of my post because it's not possible to run many new games at high framerates. I agree with that.

I have been saying all along that VRR is required for new games since they can be so demanding to run - whether that is due to steep hardware requirements, or poor optimization.

It's just not possible to keep all games locked above 60 FPS any more.
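To make the fixed-refresh problem concrete, here is a minimal Python sketch, not a measurement. It assumes an idealized double-buffered V-Sync on a 60 Hz panel, where each frame stays on screen for a whole number of refresh intervals, versus an idealized VRR panel that refreshes as soon as a frame is ready (up to an assumed 144 Hz maximum, with no lower range limit or frame doubling modeled). The render times and function names are hypothetical.

```python
import math

REFRESH_HZ = 60.0
REFRESH_MS = 1000.0 / REFRESH_HZ  # ~16.67 ms per refresh on a fixed 60 Hz panel


def vsync_display_times(render_ms):
    """Idealized double-buffered V-Sync (assumption): each frame is shown for
    a whole number of refresh intervals, rounded up from its render time."""
    return [math.ceil(t / REFRESH_MS) * REFRESH_MS for t in render_ms]


def vrr_display_times(render_ms, max_hz=144.0):
    """Idealized VRR (assumption): the panel refreshes when the frame is ready,
    limited only by its maximum refresh rate."""
    min_ms = 1000.0 / max_hz
    return [max(t, min_ms) for t in render_ms]


# Hypothetical render times for a game hovering just under 60 FPS
render = [15.0, 18.0, 20.0, 17.0]
print([round(t, 1) for t in vsync_display_times(render)])  # [16.7, 33.3, 33.3, 33.3]
print([round(t, 1) for t in vrr_display_times(render)])    # [15.0, 18.0, 20.0, 17.0]
```

Under these assumptions, a game that can't hold 60 FPS gets bounced between 16.7 ms and 33.3 ms on screen with V-Sync on (or tears with it off), while the VRR panel just shows each frame for as long as it took to render.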

The post that you quoted was demonstrating how high framerates do not eliminate screen tearing if you disable V-Sync though.

The standard usage of "game performance" is referring to how well it runs.

Rendering quality or errors would not typically be grouped under "performance".

Outside of some rare exceptions, that has not been an issue in the past 15 years or so. Before then, it used to be a big deal though.

High framerates and smoothness are what matters for performance - and frame-time measurements are the best method we have of evaluating that.

Performance consistency - which is what a frame-time graph or percentile measurements show - is far more important than high average framerates.
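Here is a minimal sketch of why a percentile says more than an average. It uses two made-up 100-frame traces with the same average framerate, one of which has a few long hitches. The percentile uses the simple nearest-rank method, which is only one of several conventions review sites use.

```python
import math


def average_fps(frame_ms):
    """Average framerate: frames rendered divided by total time in seconds."""
    return 1000.0 * len(frame_ms) / sum(frame_ms)


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical 100-frame traces, both totalling 1600 ms (62.5 FPS average)
steady = [16.0] * 100
spiky = [14.0] * 96 + [64.0] * 4  # four 64 ms hitches

for name, trace in (("steady", steady), ("spiky", spiky)):
    print(name, average_fps(trace), percentile_nearest_rank(trace, 99))
# steady 62.5 16.0
# spiky 62.5 64.0
```

Both traces report the same average FPS, but the 99th-percentile frame time exposes the hitches in the second one.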

It's why anyone that cares about smoothness rather than framerate would avoid SLI at all costs.

Look at how terrible The Witcher 3 appears to be running here, despite the framerate staying above 60 FPS.

That's why framerate is largely a useless metric without frame-time graphs.

Frame-pacing is also important, but that requires an FCAT setup to measure.

But Vega FE has launched and is available, so isn't it a concern that these functions are meant to be in it but are not working and require further driver/software work?

Gamers Nexus indicated that AMD did in fact release Vega FE with tile-based rendering disabled, but with RX Vega it will be turned on, and Vega FE will also use it once RX Vega launches. This should increase performance substantially depending on the application, as this AMD slide indicated (the slide after this one showed memory savings in various games, not performance):

Also, some of the power management features in Vega FE are still not used but will be once RX Vega is released. So we may see some pleasant surprises in performance and maybe some better power figures to go along with it.

Reference video from GamersNexus. I have to say there was more info in the HardOCP video, plus it was much more streamlined and to the point (not knocking GamersNexus, just my observation).

It doesn't sound like you know how to read frame-time graphs.

Like that list, that blind test would be more telling than a single game, and as far as I know it would be the first on the internet. It would probably make other types of reviews obsolete overnight. I would really like to know if Ultra or max settings make a real difference in experience to players in general, and also whether mega FPS is even noticeable to 80% of gaming folks. It could throw the whole old-fashioned review system out the window (not talking about HardOCP reviews but other review sites' methods). Kyle definitely nailed the process on this one, keeping it simple and to the point and focusing on each player's experience. I wonder if Kyle would accept volunteers: you buy your plane ticket, meet up, play some games, give feedback (drink a few beers or more) and go home with some fond memories.

I like the idea of the blind tests, seemed to work out pretty well.

I don't know the functional differences between G-sync and freesync, if there are any.

The main thing is: how much of the monitors' price difference is due to the sync tech? Maybe the panels were different tech too, I don't know.

But the main thing I take from the review is that the price premium on G-Sync isn't necessarily warranted, assuming it is the sole factor in the price difference. I'm sure nVidia wanted to earn as much as possible while the tech was proprietary. At this point they might as well open it up. It makes no sense to me that an LCD couldn't perform both types of sync... unless there really are some underlying tech differences. It would be interesting to learn more about that.

Some posters mention that one type works better when framerates get lower; that could be worth another look, because isn't that when frame sync really comes into play anyway? Frames not tearing at 120 FPS is not really any major technical accomplishment. But if frame times are controlled through cooperation between the GPU and LCD at lower FPS, and the result is smooth frame times, then this seems worthwhile. I seem to remember reading something along these lines years ago, probably in reference to G-Sync. I haven't read anything about FreeSync. Hopefully it does the same thing, and if so, nVidia should just open up the G-Sync tech, or do whatever is needed to allow an LCD panel to support either. I don't like the idea that a panel would only support one or the other, as this locks you into buying a particular brand of GPU.

In my experience - and there is a $125 difference between the FreeSync and G-Sync versions of my monitor (roughly) - G-Sync is better at the fringes (30-60, 120+ FPS on my 144 Hz display) and when properly set up also adjusts better to frame-time variances. There's also ULMB. Is it worth the premium? That's the big question. I could pay $250 for a wireless, wireless-charging mouse (G903 + pad) for essentially the same experience as a $50 DeathAdder Chroma, and 99% of people would say a $200 difference there is insane. So it is subjective but there is still at least some difference in experience. The fact that AMD is making this a "GPU + monitor" contest tells me everything I need to know about their angle; so, if you want the best, even if almost insignificantly for many people, you'll go NVIDIA + G-Sync, but it's painted as a rich man's game versus the value-oriented AMD + FreeSync option.

Nothing wrong with that, but at the end of the day, if I get a Pepsi after having asked for a Coke, my experience is lessened.

Thanks, that helps. Also good to know that the price difference isn't always $300. The sync module in G-Sync monitors is a part NVIDIA sells to the monitor manufacturers. FreeSync builds on VESA Adaptive-Sync, a standard that is part of the DisplayPort spec, so the LCD vendor uses its own boards to do this. The plus side is that it's cheaper for the LCD vendor. A possible downside is that it isn't as robust as the NVIDIA-supplied part, which is just a guesstimate based on the frame-time and input-lag performance:

I did a bit more research and read a good input lag review on Blur Busters. Linus also has a comparison of G-Sync vs FreeSync on input lag. G-Sync was overall smoother. He found that around 45 FPS, G-Sync with V-Sync off was best, but at higher framerates (say >100 FPS), G-Sync with V-Sync on was best, in both frame times and input lag. The FreeSync results looked sloppier. I'd wager that for some ranges of performance you can't tell the difference, but at others (like the lower-FPS results) some people will be able to tell.

Good stuff.

Can't say about FreeSync experiences since I don't have any of that tech. I can say I've seen some similar results with G-Sync and read similar posts about the optimal thresholds of each. I've been really impressed when something manages to pull my FPS to the 40-50 range: even though I can tell the framerate dropped, I don't see any tearing. On the other hand, when the framerate gets closer to 110-120+ and then fluctuates quickly, I can absolutely see G-Sync begin to have issues. My eyes quickly go to the various Afterburner readouts to see what's happening. I'll have to do some testing with both G-Sync and V-Sync on to see if it helps in those 110+ areas, since I mainly run G-Sync on and V-Sync off. I still recommend G-Sync or FreeSync. Either solution really seems like it ought to be a standard instead of any kind of premium (card, display, or both).

You should hang out on audio forums to get a window into the whole "subjective" vs "objective" viewpoints.

"The tube amp displayed silky midrange!"

"Yeah, because it was running at 10% harmonic distortion".

That said, tube amps are still popular.

Only because basic blind tests are also less than ideal when used as they are on various forums to give a conclusion/narrative (speaking as someone with an audiophile and engineering/scientific background).

Yep, but worth noting that the audiophiles on the subjectivist side are usually allergic to blind tests.

This is why the options are A, B, or UNSURE. Bias only comes in when someone is pressured to make a decision (as in an eye exam), and that pressure is what would taint a blind test. That doesn't mean all blind tests are tainted or biased. I do find that most forums don't like blind tests simply because AMD tends to win them.

There are also biases that affect decisions when one is not sure, and without a reference point (which most audio blind tests do not allow, or do not give a listener enough time to go back to) these can be triggered; one study showed a bias toward selecting A over B, or toward one side (I cannot remember if it was right or left), when the person is unsure.

A good blind test (in the sense of having actual scientific merit for any conclusions) is a freaking nightmare to set up, because there are many bias mechanisms that need to be controlled, and one also needs a protocol that identifies whether a bias has been triggered and skewed the results.

I liken audiophile blind testing to trying to identify aliasing in a fast-moving rendered movie where one can only see one screen at a time and must flick a switch to change to the other format: good luck without training.

Cheers

A good test actually uses a forced or weighted choice.

Don't be obtuse. In this case, unsure is VERY acceptable: it means equal performance, which negates the price difference and the performance graphs as they pertain to real-world performance.

Unsure is not good enough for good blind tests looking for conclusive data metrics.

Cheers
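For a sense of what "conclusive" demands, here is a minimal sketch assuming a simple two-alternative forced-choice design, in which a participant who genuinely cannot tell A from B guesses right 50% of the time. It finds the smallest number of correct answers out of n trials that pure guessing would reach with probability at most 5% (one-sided). This is a textbook binomial criterion chosen for illustration, not a protocol anyone in this thread described, and it ignores the bias effects discussed above.

```python
from math import comb


def min_correct_for_significance(trials, alpha=0.05):
    """Smallest number of correct answers whose probability under pure
    guessing (p = 0.5 per trial) is at most alpha, one-sided."""
    for k in range(trials + 1):
        # P(at least k correct) when every answer is a coin flip
        p_at_least_k = sum(comb(trials, i) for i in range(k, trials + 1)) / 2 ** trials
        if p_at_least_k <= alpha:
            return k
    return None  # too few trials: even a perfect score is not significant


for n in (4, 10, 16):
    print(n, min_correct_for_significance(n))
# 4 None
# 10 9
# 16 12
```

With only four forced-choice trials, even a perfect score is not significant at the 5% level, which is one reason careful blind tests need many trials per participant.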

Explain how I am being obtuse?

I don't need to research, I KNOW. And I don't feel the need to claim I have done it all and know it all, as most of you armchair engineers claim. In this particular test, the biases you reference just do not exist. And your claims about biases in other TESTS do not diminish the findings of this test or others like it. Just because the typical graphs used to parrot superiority have no bearing here doesn't diminish it either.

I have given you some basics and fundamentals that you would realise if you had bothered researching before being argumentative.

But then, like I said, I have followed hundreds of research papers on this subject, been involved with an industry standard in the past, and worked with those who designed some of these tests...

I guess it is simpler to ignore the fact that it costs a lot of money to do a valuable blind test, including TRAINING TO ACTUALLY BE ABLE TO DIFFERENTIATE DIFFERENCES ACCURATELY AND CORRECTLY RATHER THAN UNRELIABLE GENERALISED PERCEPTION...

It is a complex situation made even worse by JND (just-noticeable difference) and multiple biases that also influence blind tests; this applies not just to audio but to moving visuals, and to HOW one sets up the test's switching. At a very basic level there is also a short time limit on perceptual memory, made worse if one does not have the techniques and training.

Cheers


But is it because you have framerate counters on screen? I find that I tend to stare at those way too much if I have them on, whereas otherwise I don't care about the framerate with a G-Sync display unless it drops way low or there's a noticeable dip (like 60+ down to 30). At really high framerates I doubt I would notice a difference.

Adaptive sync should definitely be a standard feature of every high-end display, and so should backlight strobing (ULMB). One benefit of the NVIDIA solution is that you mostly get the same experience and feature set regardless of vendor, rather than each vendor's own implementation, but I am not fond of the premium it costs. So far, DisplayPort/HDMI limitations have caused ULMB to be dropped from 60-100 Hz models, so I hope that becomes a non-issue in displays coming out next year with DP 1.4 and hopefully HDMI 2.1 support.

Obviously I'm a bit team green/blue, but I would advise at least waiting until these new Vegas drop in price. Your Ti will still give great performance, and even if you still feel the need to switch, wait so you don't pay the early-adopter fee. If you had anything less than a 1070 I would recommend the jump, but a Ti is such a good ride it'd only be worth it if you had the money to burn.

I'm really looking forward to seeing the final reviews and benchmarks on Vega.

I started out my gaming PC build with an RX 480 and a 1440p FreeSync monitor. The initial plan was to run the RX 480 until Vega was released and then upgrade. However, a couple of months ago I became impatient with the Vega delays and capitalized on the mining craze to sell my RX 480 at a significant profit and purchase a GTX 1080 Ti. It's a great card and I have no complaints. Runs very cool and quiet! However, I'm seriously debating selling the 1080 Ti to get a liquid-cooled Vega. I know that Vega will perform slightly worse than the 1080 Ti, but since I will be able to take advantage of the FreeSync in my monitor, I wonder if I won't have a better, smoother overall gaming experience even with lower framerates. What do you guys think?

Your point? Then explain why 6 did not see a difference, 3 thought the AMD setup was better, and only one thought the NV G-Sync gig was better? With frame times as you so well describe, it totally failed to predict the outcome or even the significance of the results. In other words, there is more than just frame times to a good gaming experience for real humans; the answer is more complex than timing variations between frames. As it was only one test, it does open up good questions. Now repeat "Frame Times mean shit" over and over again.

It doesn't sound like you know how to read frame-time graphs.

Frame-time graphs show performance (framerate), frame delivery, and smoothness.

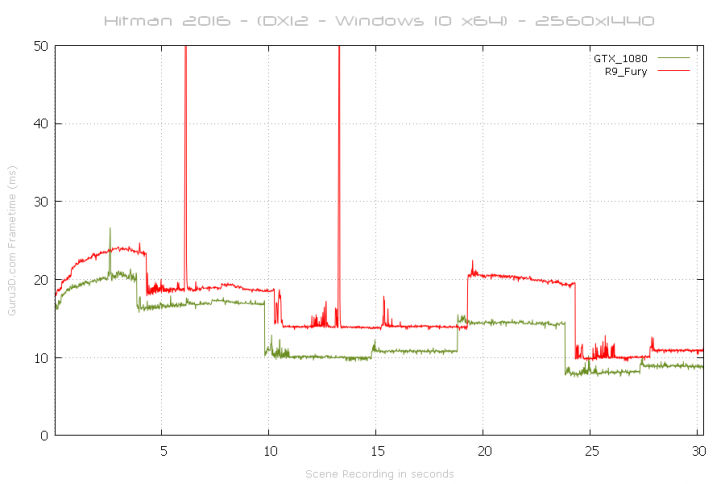

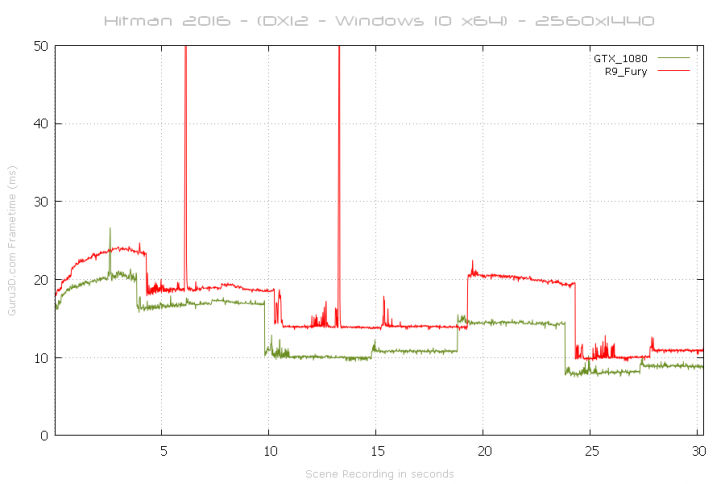

In HITMAN we can see that the GTX 1080 has better performance (lower on the graph), less stuttering (fewer spikes in frame time), and that any stuttering is less severe (smaller spikes on the graph).

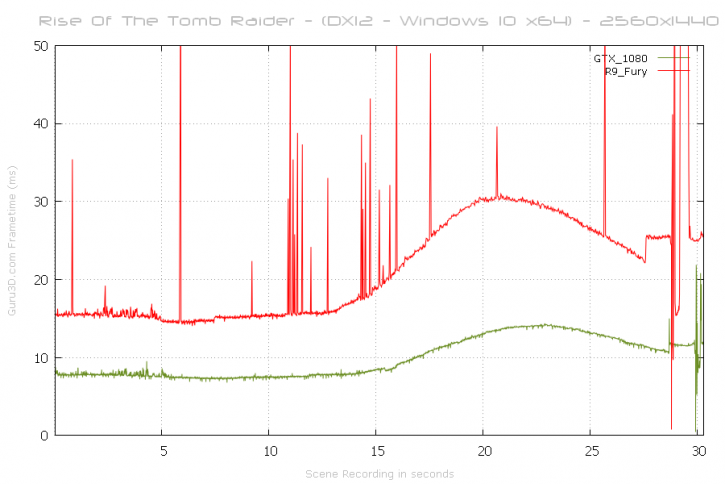

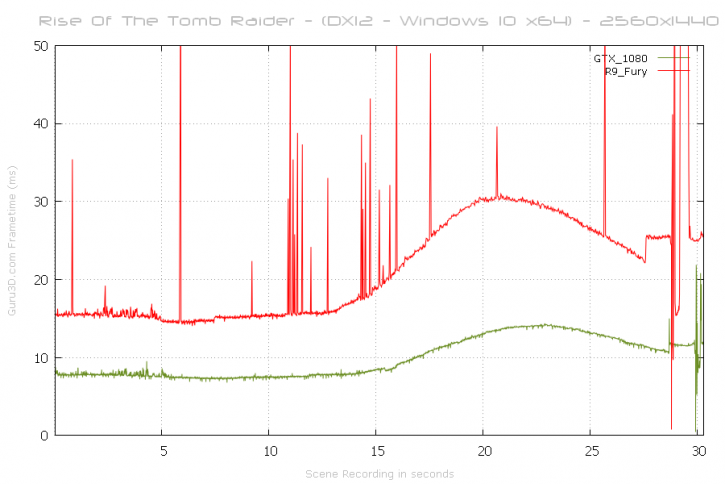

We can see that Rise of the Tomb Raider is going to be a significantly better experience on the GTX 1080 than the R9 Fury.

It's very smooth on the 1080, without any stuttering.

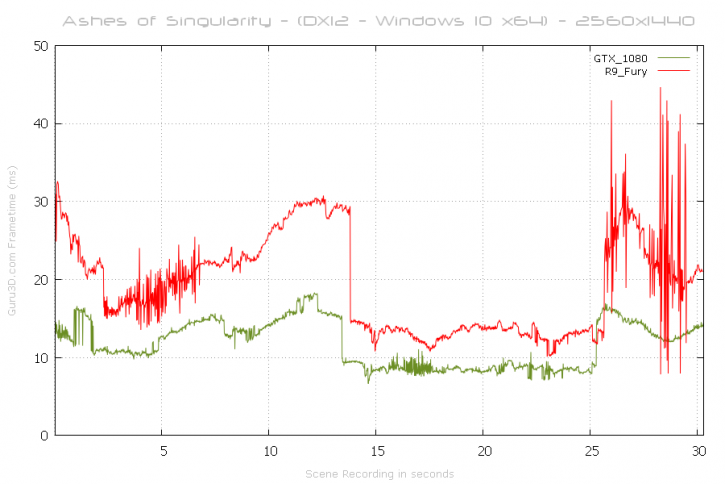

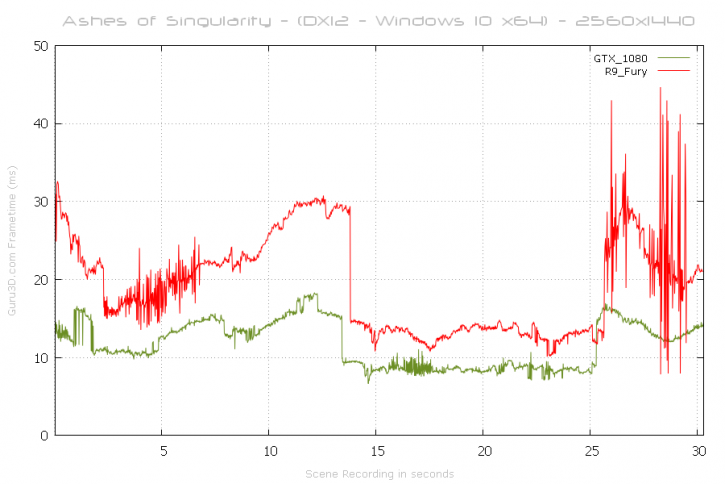

In Ashes of the Singularity we can see that the GTX 1080 has better frame delivery than the R9 Fury, as there is less variance in the graph (lines are smoother) and performance is much better overall - the difference between the best and worst frame-times is much smaller.
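The "less variance" and "smaller difference between the best and worst frame times" readings from a graph can also be put into numbers. A minimal sketch, using made-up traces rather than the data behind these graphs: both traces average 20 ms (50 FPS), but one alternates between short and long frames. The function name is hypothetical.

```python
import statistics


def frame_time_summary(frame_ms):
    """Return (mean, best-to-worst spread, population standard deviation), all in ms."""
    spread = max(frame_ms) - min(frame_ms)
    return statistics.mean(frame_ms), spread, statistics.pstdev(frame_ms)


# Hypothetical traces with the same 20 ms mean
smooth = [19.0, 20.0, 21.0, 20.0, 19.0, 21.0]
jittery = [12.0, 28.0, 15.0, 25.0, 14.0, 26.0]

for name, trace in (("smooth", smooth), ("jittery", jittery)):
    mean, spread, sd = frame_time_summary(trace)
    print(f"{name}: mean {mean:.1f} ms, spread {spread:.1f} ms, std dev {sd:.2f} ms")
# smooth: mean 20.0 ms, spread 2.0 ms, std dev 0.82 ms
# jittery: mean 20.0 ms, spread 16.0 ms, std dev 6.45 ms
```

Same average framerate, but the second trace would draw as a much more jagged line on a frame-time graph.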

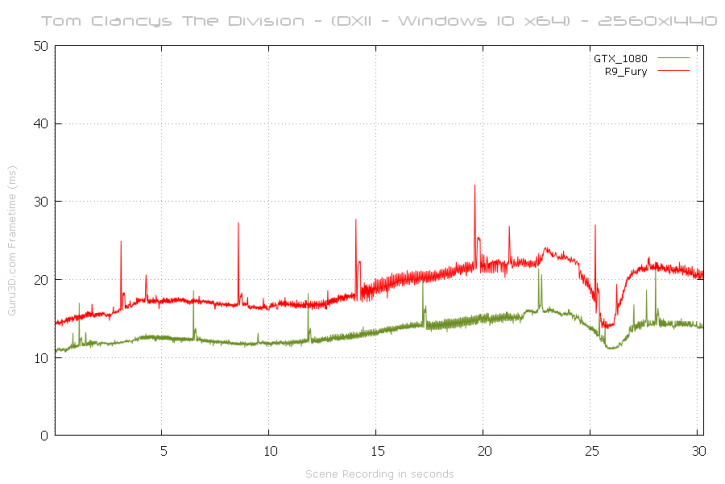

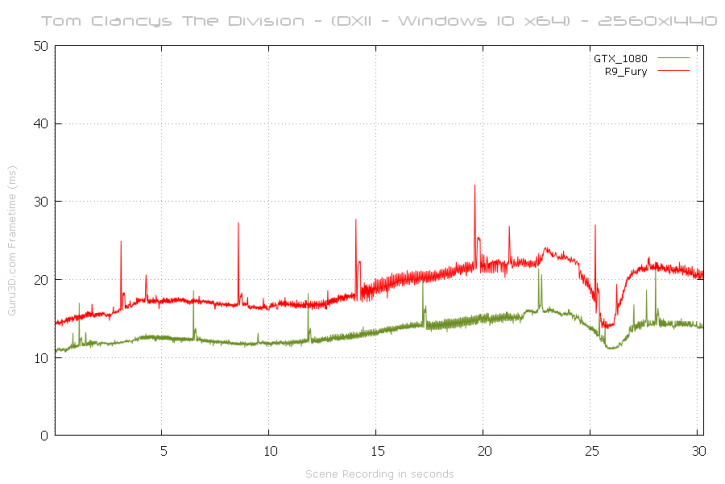

And finally, in Tom Clancy's The Division, we can see that the GTX 1080 has much better performance, better frame delivery, and less variance, but it still stutters occasionally, though not at the same points in the test and not as severely.

Source Article


AMD did not choose the software, I did. And I chose the 1080 Ti.

I can explain it. The event was in no way scientific. There were different monitors and video cards, and the monitors were even different technologies (not talking about G-Sync or FreeSync). And the hardware was chosen by AMD. The software was also chosen by AMD.

Still interesting. AMD still won. Don't pretend like it's conclusive in any way though.
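To put "not conclusive" in numbers, here is a minimal sketch of an exact two-sided sign test on the reported answers (6 no difference, 3 preferred AMD, 1 preferred NVIDIA). It drops the "no difference" answers and assumes independent participants, with "no real preference" as the null hypothesis. This is a textbook test picked for illustration, not an analysis anyone at the event performed.

```python
from math import comb


def sign_test_two_sided(wins_a, wins_b):
    """Exact two-sided sign test: ties are dropped beforehand, and under the
    null hypothesis each remaining preference is a fair coin flip."""
    n = wins_a + wins_b
    k = max(wins_a, wins_b)
    # Probability of a split at least this lopsided toward one side...
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # ...doubled to cover either side, capped at 1.
    return min(1.0, 2 * tail)


# 3 preferred AMD, 1 preferred NVIDIA; the 6 "no difference" answers are dropped
print(sign_test_two_sided(3, 1))  # 0.625
```

A 3-to-1 split among four participants with a preference gives p = 0.625, which is entirely consistent with no real preference either way; it takes many more participants before a split like this means much.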


So taking human perception into consideration, instead of some canned method, leads to a more insightful understanding. The whole purpose of the setup is to hopefully give the best gaming experience, period, not to do well on one particular test, which is very limited in scope. I think maybe I was not clear: by itself only, frame times mean shit. They will not tell anyone how the rendered frames will be perceived with different monitors, system configurations, software configurations, etc. They can tell what a certain card will do in a certain system for frame variations and a given FPS estimate between frames. I don't think this one test will decide much of anything, except that folks may see things differently than what is thought to be important. If more tests like these can be done, it may well blow open how cards are tested and especially what really is important.

Perception varies with the individual.

On the flip side, if subjective experiences are what we primarily rely on for technological innovation with a blatant disregard for the scientific method, we would be living in a very different world from what we have now.

Besides, if it weren't for Tech Report's frame time testing, AMD/ATi wouldn't have been made aware of their frame pacing issues, which they were eventually able to address. I doubt Nvidia would have been willing to point that out to them, for competitive reasons. I still remember when HardOCP used to conclude that SLI was smoother than CFX.

So the monitor outweighed the video card advantage of the 1080 Ti in Doom, which indicates that maybe frame times mean shit.



In this interesting test, the only difference was the video card and monitor; that was it. The combination of RX Vega and that monitor showed a slight advantage. Why? Other games may be different; I would not know, and maybe in 60% of games the Nvidia combination would be a clear winner. The bottom line is: what is really important, from a hardware standpoint, for folks playing games?

No need, nothing there of importance. You could have great frame times but black and white or, let's say, a blurred mess on the screen when you move. In other words, it would not predict gaming experience or gaming worth. It may predict smoothness, but even that is not true: FreeSync/G-Sync may give obviously better smoothness at a much lower frame rate (higher frame time) than a non-sync display at a lower frame time (higher frame rate). Frame Times Mean Shit by itself.

I can guarantee that if the frame times measure poorly, it will be a stutterfest, and that will be perceptible no matter what. Frame time testing can stand on its own.

I strongly suggest reading the article in the link provided by IdiotInCharge.

Hmmm, I wonder why AMD hired the guy who wrote it? Maybe all their internal frame-time testing has already paid off, hence the results of the blind test.


I'm sorry, where did you see FCAT measurements posted for this setup?

Can you point to any meaningful examples of this in the last decade?

No need, nothing there of importance. You could have great frame times but black and white or let say a blurred mess on the screen when you move. It would not predict gaming experience in other words or gaming worth.

That is why I would consider it to be a bad test, especially with the responses from people like noko.

This was not a bad test, far from it. But its results are confined literally to the setup used, would be difficult to extrapolate to broader conclusions, and are entirely divorced from objective analysis.

Frame Times Mean Shit - you just can't get over it. HardOCP put frame times in perspective, as in VR testing, where they show relevance for avoiding reprojection; still, the testing is always based on user experience and not just a single item looked at using a canned benchmark with zero actual involvement. Kyle got rather sweaty and into the action to give us the experience. That is fine if all you want to consider is frame times, and think whatever you may. So in essence I couldn't care less what you think about frame times; it is a single measurement which, if put in proper perspective, has some use.

We get it, you prefer to remain ignorant. I can only point you to the information that was then used by all reputable sites - including this one - to perform performance analysis of video cards.

See, you really like to quote yourself when you're wrong - we get it! Frame times explain performance, not rendering issues, which are game-specific. Literally every time you complain about your lack of understanding, you use a 'but what about!' argument that was the staple of the failed Soviet Union. Great friends you keep!