
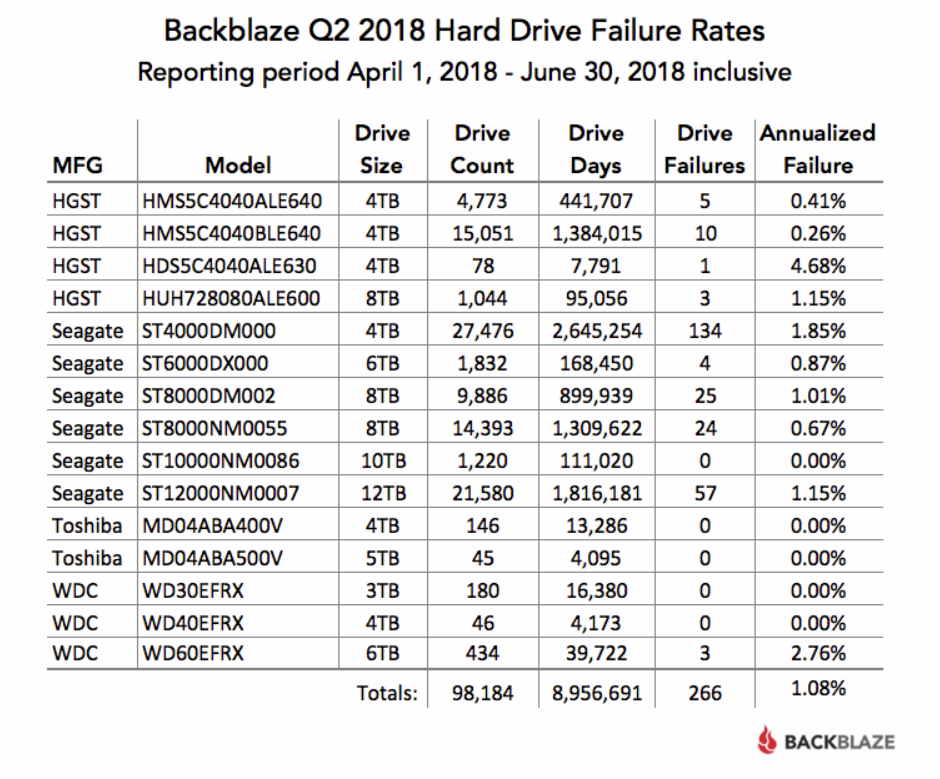

Backblaze has returned with new quarterly and lifetime statistics for its 100,254 data center drives. Enterprise and consumer drives are again compared to judge whether the former are worth the price premium (the enterprise drives do show a lower annualized failure rate), and 14 TB Toshiba drives have been added for future analysis.

The combined AFR for all of the larger drives (8-, 10- and 12 TB) is only 1.02%. Many of these drives were deployed in the last year, so there is some volatility in the data, but we would expect this overall rate to decrease slightly over the next couple of years. The overall failure rate for all hard drives in service is 1.80%. This is the lowest we have ever achieved, besting the previous low of 1.84% from Q1 2018.
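For anyone wondering how these percentages are derived: Backblaze's reports define the annualized failure rate in terms of drive-days rather than a simple count of drives, so drives that were only deployed partway through the period contribute proportionally less. The sketch below assumes that definition, AFR = failures / (drive-days / 365) × 100. The function name and the fleet numbers are hypothetical and are not Backblaze's data.

```python
def annualized_failure_rate(failures: int, drive_days: int) -> float:
    """Hypothetical helper: AFR in percent, i.e. failures per drive-year times 100."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    # failures / (drive_days / 365) * 100, written as a single division
    return 100 * failures * 365 / drive_days


# Hypothetical fleet: 2,000 drives each running all 365 days (730,000 drive-days)
# with 36 failures during that time.
afr = annualized_failure_rate(failures=36, drive_days=2_000 * 365)
print(f"{afr:.2f}%")  # prints "1.80%"
```

Because the denominator is drive-days, a batch of new drives that has logged only a few weeks of service can swing the rate noticeably with just one or two failures. This is the volatility the post mentions for the recently deployed 8, 10 and 12 TB drives.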

